In this article, you will see a C# desktop application that invokes two TensorFlow AI models that were initially written in Python.

Introduction

This article showcases a C# desktop application that invokes two TensorFlow AI models that were initially written in Python. To this end, it uses the PythonRunner class, which I presented in more detail in a previous article. Basically, it is a component that lets you call Python scripts (both synchronously and asynchronously) from C# code, with a variable list of arguments, and returns their output (which can also be a chart or figure; in this case, it is returned to the C# client application as a Bitmap). By making Python scripts easily callable from C# client code, it gives these clients full access to the world of (Data) Science, Machine Learning, and Artificial Intelligence and, moreover, to Python's impressively feature-rich libraries for charting, plotting, and data visualization (e.g., matplotlib and seaborn).

While the application makes use of the Python/TensorFlow AI stack, this article is not intended to be an introduction to these topics. There are dozens of other sources for that, most of them far better and more instructive than anything I could write (just fire up your search engine of choice). Instead, this article focuses on bridging the gap between Python and C#: it shows how Python scripts can be called in a generic manner, how the called scripts must be shaped, and how the scripts' output can then be processed on the C# side. In short: it's all about integrating Python and C#.

Now to the sample app: It is designed to classify handwritten digits (0-9). To accomplish this, it invokes two neural networks that were created and trained with Keras, a Python package that provides a high-level neural networks API and is capable of running on top of Google's TensorFlow platform. Keras makes it very easy to create powerful neural nets (the script for creating, training, and evaluating the simpler of the two models contains no more than 15 LOC!) and is a de facto standard for AI in the Python world.

The models are trained and validated with the MNIST dataset. This dataset consists of 60,000/10,000 (train/test) standardized images of the digits 0-9, each 28x28 pixels in size and stored in 8bpp reversed greyscale format (0 for the background, higher values up to 255 for the ink). The MNIST dataset is so common in AI (especially for examples, tutorials, and the like) that it is sometimes called the 'Hello World' of AI programming (Keras even provides a built-in loader for it). Below are some example MNIST images:

Motivation and Background

As a freelance C# developer with almost 15 years of experience, I started my journey into ML and AI a few months ago. I did this partly because I wanted to widen my skills as a developer, and partly because I was curious what all the hype around ML and AI is about. In particular, I was puzzled by the many YouTube videos titled 'Python vs. C#' or similar. As I gained some insight of my own, I found that this question is largely a non-issue, simply because the two programming languages stand for two totally different jobs. So the question should rather be along the lines of 'Data Scientist/Business Analyst/AI expert vs. Software Developer'. These are completely different jobs, with different theoretical backgrounds and different business domains. An average (or mildly above-average) person just won't be able to excel in both domains; moreover, I don't think that this would be desirable from a business/organizational perspective. It's like trying to be a plumber and a mechanic at the same time: I doubt that such a person will be among the best of their breed in either profession.

That's why I think that components that try to bring ML and AI to the .NET/C# world (e.g., Microsoft's ML.NET or the SciSharp STACK) might be impressive in themselves, but they slightly miss the point. Instead, IMHO it makes much more sense to connect the two domains, thus enabling collaboration between a company's Data Scientists/Business Analysts/AI experts on the one side and its application developers on the other. The sample app that I present here demonstrates how this can be done.

Prerequisites

Before I describe the app in more detail and highlight some noteworthy lines of code, I should clarify a few preparatory points.

Python Environment

First, we need a working Python environment. (Caution: The Python version must be 3.6, 64-bit! This is required by TensorFlow.) Additionally, the following packages should be installed:

Don't worry: What looks like a pretty long list to the novice is actually quite standard for a seasoned scientific/AI/ML programmer. And if you're not sure how to install the environment or the packages, you can find dozens of tutorials and how-tos on these topics around the web (both written and as videos).

Finally, we need to tell the sample application where it can find the Python environment. This is done in the application's App.config (which also contains the name of the subfolder with the scripts and a timeout value):

```xml
...
<appSettings>
  <add key="pythonPath"
       value="C:\\Program Files (x86)\\Microsoft Visual Studio\\Shared\\Python36_64\\python.exe" />
  <add key="scripts" value="scripts" />
  <add key="scriptTimeout" value="30000" />
</appSettings>
...
```
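To make these three settings more tangible, here is a rough Python analogue of what they control. It assumes that PythonRunner works roughly like this: it starts the interpreter found at pythonPath as a child process, passes the path of a script from the scripts folder plus all arguments on the command line, reads what the script prints to standard output, and gives up after scriptTimeout. This sketch also assumes that the timeout is given in milliseconds. The function name run_script is hypothetical and not part of PythonRunner.

```python
import subprocess
from pathlib import Path


def run_script(python_path, scripts_dir, script_name, *args, timeout_ms=30000):
    """Hypothetical analogue of a PythonRunner call: run a script in a child
    process and return whatever it printed to standard output."""
    command = [python_path, str(Path(scripts_dir) / script_name)]
    command += [str(arg) for arg in args]  # every argument travels as a string
    completed = subprocess.run(
        command,
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,    # decode the output as text (also works on 3.6)
        timeout=timeout_ms / 1000,  # assumption: scriptTimeout is in milliseconds
        check=True,                 # a non-zero exit code raises CalledProcessError
    )
    return completed.stdout
```

If the timeout expires, subprocess.run kills the child process and raises subprocess.TimeoutExpired, so a hanging script cannot block the caller forever.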

Building and Training the AI Models

As mentioned above, the sample app invokes two different neural networks and combines their results. The first one is a very simple neural network with two hidden layers (128 nodes each); the second one is a slightly more complex Convolutional Neural Network (CNN for short). Both nets were trained and evaluated with the MNIST dataset described above, reaching an accuracy of 94.97% (simple) and 98.05% (CNN). The trained models were saved, and the resulting files/folders were then copied to the location where the sample app expects them.

For those who are interested in the details of creating and training the two models (it's depressingly simple): The respective scripts can be found in the solution under scripts/create_train_{simple|cnn}.py.

The Sample App

Before we finally dive into the source code, let me briefly explain the various areas and functions of the sample app.

Drawing Digits

The top-left area of the app's window contains a canvas on which you can draw a digit with the mouse:

The left button underneath the drawing canvas (the one with the asterisk) clears the current drawing; the right one performs the actual prediction (by calling the predict.py Python script). The small preview image shows the pixels that are used for the prediction (i.e., they are passed as a parameter to predict.py). It is a resized, greyscale 8bpp version of the current drawing. The more complex and elaborate image preprocessing steps take place in the called Python script. While this is beyond the scope of this article, you can dive into the details by inspecting the predict.py script (the image optimization part of the code was largely inspired by this blog post).
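MNIST uses reversed greyscale: 0 stands for the background and higher values (up to 255) for the ink. A black-on-white drawing in ordinary 8bpp greyscale is the other way round (white background = 255, black ink = 0). Assuming the preview pixels are stored in that ordinary convention, bringing them into the MNIST convention is a simple inversion. The article does not show whether this step happens in C# (e.g., in GetMnistBytes()) or in predict.py, so the following helper, whose name is hypothetical, is only an illustration:

```python
def to_mnist_convention(pixels):
    """Invert ordinary 8bpp greyscale (0 = black ink, 255 = white background)
    into MNIST's reversed greyscale (0 = background, 255 = ink)."""
    if any(not 0 <= p <= 255 for p in pixels):
        raise ValueError("pixel values must be bytes (0-255)")
    return [255 - p for p in pixels]
```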

Raw Model Output

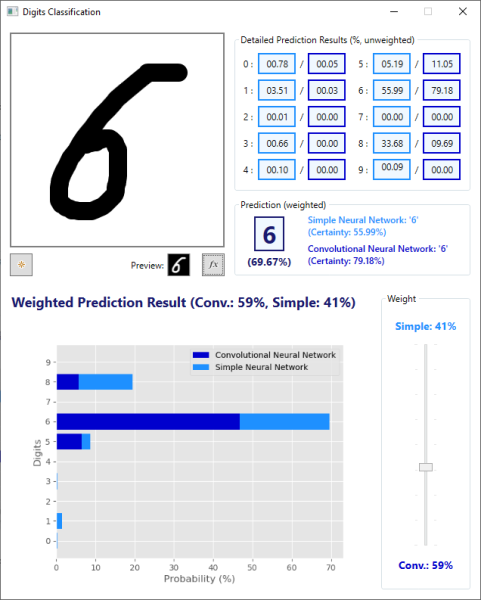

The top-right group box ("Detailed Prediction Results (%, unweighted)") contains the raw output from the two AI models as a series of percentages, one value for each digit. These values are actually the activations of the networks' output layers, but they can be interpreted as probabilities in the context of digit classification. The light blue boxes contain the output from the simple network, the somewhat darker blue boxes the output from the CNN.
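Why may these activations be read as probabilities? Assuming both models end in a softmax layer (the usual choice for Keras MNIST classifiers, and consistent with each line of the script output shown further below summing to roughly 100%), the output layer maps arbitrary scores to non-negative values that add up to 1. A minimal pure-Python softmax, for illustration only:

```python
import math


def softmax(scores):
    """Turn raw output-layer scores into non-negative values that sum to 1."""
    largest = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def as_percentages(probabilities):
    """Scale probabilities to percentages with two decimals, as displayed in the app."""
    return [round(p * 100, 2) for p in probabilities]
```

Because softmax preserves the order of the scores, the digit with the highest score is also the digit with the highest probability.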

Weighting the Two Models

The slider on the bottom-right side of the window lets you vary the relative weights that the two models have in the final prediction result. This final result is calculated as the weighted average of the two models' raw output.

This final result is then displayed in the big box in the middle of the window, together with its calculated probability of being correct.
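Written as a formula, with w denoting the slider value (which the viewmodel uses as the simple net's factor, and 1 - w as the CNN's factor), the weighted percentage for digit i and the final prediction are:

```latex
p_i = w \, p_i^{\mathrm{simple}} + (1 - w) \, p_i^{\mathrm{CNN}}, \qquad
\hat{d} = \arg\max_{i \in \{0, \dots, 9\}} p_i, \qquad
w \in [0.1, 0.9]
```

For example, with the sample script output shown further below and w = 0.5, digit 8 gets 0.5 · 29.66 + 0.5 · 45.94 = 37.80 and digit 2 gets 0.5 · 16.88 + 0.5 · 52.72 = 34.80, so the weighted prediction is 8 with 37.80%, although the CNN on its own favors 2.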

Showing the Calculation as Stacked Bar Chart

Finally, most of the bottom area of the application window is used to display a stacked bar chart with the weighted results of the prediction (the chart is produced by another Python script, chart.py). Again, the lighter/darker blue color coding applies.
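The source of chart.py is not discussed in this article. As a rough idea of the data behind such a chart: each bar can be built from two segments, the simple net's weighted percentage and the CNN's weighted percentage, stacked so that the bar's total height is the combined result. The helper below is a hypothetical sketch of that preparation step, not the script's actual code:

```python
def stacked_segments(simple, cnn, simple_factor, cnn_factor):
    """Return the per-digit bar segments (simple part, CNN part) and the bar totals."""
    if len(simple) != len(cnn):
        raise ValueError("both models must report the same number of digits")
    simple_part = [simple_factor * p for p in simple]
    cnn_part = [cnn_factor * p for p in cnn]
    totals = [s + c for s, c in zip(simple_part, cnn_part)]
    return simple_part, cnn_part, totals
```

With matplotlib, such segments could be drawn with two calls to pyplot.bar, passing the first segment list as the bottom argument of the second call: plt.bar(range(10), simple_part) followed by plt.bar(range(10), cnn_part, bottom=simple_part).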

Points of Interest

Now that we have looked at the various aspects of the application from a user's point of view, let's finally look at some interesting pieces of code.

Principal Structure of the Code

Generally, the project consists of

- the C# files,
- the Python scripts in the scripts subfolder (predict.py, chart.py), and
- the two TensorFlow AI models in the scripts/model subfolder (the simple model is stored as a folder structure, the CNN as a single file).

The C# part of the application uses WPF and follows the MVVM architectural pattern: the view code (which consists solely of XAML) lives in the MainWindow class, whereas the MainViewModel holds the application's data and does the (not so complicated) calculations.

SnapshotCanvas

The first thing to note is the drawing control. I used a class derived from the regular InkCanvas control for that. It is enhanced by the Snapshot dependency property, which the control updates with a bitmap rendering of itself each time the user adds a new stroke to the canvas:

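```csharp
using System.Drawing;               // Bitmap
using System.IO;                    // MemoryStream
using System.Windows;               // DependencyProperty, Rect
using System.Windows.Media;         // DrawingVisual, DrawingContext, VisualBrush, PixelFormats
using System.Windows.Media.Imaging; // RenderTargetBitmap, BmpBitmapEncoder, BitmapFrame

public class SnapshotInkCanvas : System.Windows.Controls.InkCanvas
{
    #region Properties

    public Bitmap Snapshot
    {
        get => (Bitmap)GetValue(SnapshotProperty);
        set => SetValue(SnapshotProperty, value);
    }

    #endregion // Properties

    #region Dependency Properties

    public static readonly DependencyProperty SnapshotProperty = DependencyProperty.Register(
        "Snapshot",
        typeof(Bitmap),
        typeof(SnapshotInkCanvas));

    #endregion // Dependency Properties

    #region Construction

    public SnapshotInkCanvas()
    {
        // Re-render the snapshot whenever a stroke is added; discard it when a stroke is erased.
        StrokeCollected += (sender, args) => UpdateSnapshot();
        StrokeErased += (sender, args) => Snapshot = null;
    }

    #endregion // Construction

    #region Implementation

    private void UpdateSnapshot()
    {
        // Width and Height must be set explicitly in XAML (their default is double.NaN).
        // StandardBitmap is defined elsewhere in the sample app (not shown here) and
        // supplies the DPI values for the rendering.
        var renderTargetBitmap = new RenderTargetBitmap(
            (int)Width,
            (int)Height,
            StandardBitmap.HorizontalResolution,
            StandardBitmap.VerticalResolution,
            PixelFormats.Default);

        // Paint the canvas (including its strokes) onto a DrawingVisual and render that.
        var drawingVisual = new DrawingVisual();
        using (DrawingContext ctx = drawingVisual.RenderOpen())
        {
            ctx.DrawRectangle(
                new VisualBrush(this),
                null,
                new Rect(0, 0, Width, Height));
        }
        renderTargetBitmap.Render(drawingVisual);

        // Convert the WPF bitmap into a System.Drawing.Bitmap via an in-memory BMP.
        var encoder = new BmpBitmapEncoder();
        encoder.Frames.Add(BitmapFrame.Create(renderTargetBitmap));
        using (var stream = new MemoryStream())
        {
            encoder.Save(stream);
            // A Bitmap created from a stream needs that stream to stay open for its whole
            // lifetime, so copy it into an independent Bitmap before the stream is disposed.
            using (var temp = new Bitmap(stream))
            {
                Snapshot = new Bitmap(temp);
            }
        }
    }

    #endregion // Implementation
}
```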

The control's Snapshot property is then bound via XAML ...

```xml
<local:SnapshotInkCanvas Snapshot="{Binding CanvasAsBitmap, Mode=OneWayToSource}" ... />
```

... to the MainViewModel's CanvasAsBitmap property:

```csharp
public Bitmap CanvasAsBitmap
{
    set
    {
        _canvasAsBitmap = value;
        UpdatePreview();
    }
}
```

(Note that this is one of the rare cases where data flows exclusively from the view to the viewmodel, since the binding mode is OneWayToSource. This is also why the property needs only a setter.)

PythonRunner

The PythonRunner class is at the very heart of the application. It is a component that lets you run Python scripts from a C# client, with a variable list of arguments, both synchronously and asynchronously. The scripts can produce textual output as well as images, which are converted to C# Bitmap objects. I have described this class (together with the conditions that the called Python scripts must meet) in more detail in a previous article ("Using Python Scripts from a C# Client (Including Plots and Images)").

Starting the Prediction Process

Remember this little 'Execute' button from the screenshot above? This is where the action is triggered and all the AI magic begins. The following command method is bound to the button (via WPF's command binding):

```csharp
private async void ExecEvaluateAsync(object o = null)
{
    // Check the flag before entering the try block: otherwise the finally clause of a
    // rejected second call would reset IsProcessing while the first call is still running.
    if (IsProcessing)
    {
        _messageService.Exception(new InvalidOperationException("Calculation is already running."));
        return;
    }

    IsProcessing = true;

    try
    {
        if (_previewBitmap == null)
        {
            throw new InvalidOperationException("No drawing data available.");
        }

        byte[] mnistBytes = GetMnistBytes();
        SetPredictionResults(await RunPrediction(mnistBytes));
        await DrawChart();
    }
    catch (PythonRunnerException runnerException)
    {
        _messageService.Exception(runnerException.InnerException);
    }
    catch (Exception exception)
    {
        _messageService.Exception(exception);
    }
    finally
    {
        IsProcessing = false;
        CommandManager.InvalidateRequerySuggested();
    }
}
```

As we can see, besides some boilerplate code and error checking, the command method asynchronously calls the methods for performing the digit classification (RunPrediction(...)) and for drawing the bar chart (DrawChart()). In the following, we will go through both processes.

Raw Results from the Two Models

Getting (and Parsing) the Raw Results from the Two Neural Networks

To run the two TensorFlow AI models against the user input, the C# client application first invokes the Python script predict.py (its path is stored in the viewmodel's _predictScript field). The script expects a single argument: a comma-separated list of the greyscale bytes that represent the digit:

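```csharp
private async Task<string> RunPrediction(byte[] mnistBytes)
{
    // Pass all pixel bytes as one comma-separated argument, e.g. "0,0,255,...".
    // string.Join avoids the repeated string concatenation of Aggregate(...).
    return await _pythonRunner.ExecuteAsync(
        _predictScript,
        string.Join(",", mnistBytes));
}
```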
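On the receiving side, predict.py has to turn this single argument back into an image. Its actual preprocessing is more elaborate (see above); the following hypothetical helper only shows the basic step of splitting the argument, validating that it holds 28 x 28 = 784 byte values, and reshaping it into rows. Scaling the values to the range 0-1 is an assumption (a common input convention for Keras models), not something this article confirms about the two models.

```python
SIDE = 28  # MNIST images are 28 x 28 pixels


def parse_pixels(argument):
    """Convert "b0,b1,...,b783" into 28 rows of 28 floats in the range 0-1."""
    values = [int(v) for v in argument.split(",")]
    if len(values) != SIDE * SIDE:
        raise ValueError(f"expected {SIDE * SIDE} values, got {len(values)}")
    if any(not 0 <= v <= 255 for v in values):
        raise ValueError("pixel values must be bytes (0-255)")
    scaled = [v / 255 for v in values]  # assumption: the models expect inputs in 0-1
    return [scaled[row * SIDE:(row + 1) * SIDE] for row in range(SIDE)]
```

Inside the script, the argument to pass would be sys.argv[1].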

The output of the script (i.e., the string that is returned to the viewmodel) looks like this:

```text
00.18 02.66 16.88 04.23 22.22 07.27 05.78 01.40 29.66 09.74
00.00 00.11 52.72 00.09 00.30 00.04 00.02 00.77 45.94 00.00
```

Each line represents a series of probabilities for the digits 0-9 (as percentage values): the first line is the output of the simple neural net, the second one the output of the CNN.
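The layout of these values (two decimals, zero-padded to a width of five characters) can be produced with the format specification 05.2f. The following is a hypothetical sketch of how a script could print its results in this shape; it is not taken from predict.py:

```python
def format_row(probabilities):
    """Format probabilities (0-1) as zero-padded percentages, e.g. 0.0018 -> "00.18"."""
    return " ".join(f"{p * 100:05.2f}" for p in probabilities)


def format_output(simple, cnn):
    """Line 1: simple network, line 2: CNN, matching the layout shown above."""
    return format_row(simple) + "\n" + format_row(cnn)
```

Note that 05.2f only sets a minimum width: a probability of 1.0 is printed as 100.00, so consumers should compare the values numerically rather than as strings. Also, when a script print()s such lines on Windows, Python's text-mode standard output translates each \n into \r\n.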

This output string is then parsed into two string arrays, which are stored in the viewmodel's PredictionResultSimple and PredictionResultCnn properties. In addition, the SetPredictionText() method formats the two results into human-readable strings that can be presented to the user:

```csharp
private void SetPredictionResults(string prediction)
{
    _predictionResult = prediction;

    if (string.IsNullOrEmpty(_predictionResult))
    {
        PredictionResultSimple = null;
        PredictionResultCnn = null;
    }
    else
    {
        // Split on both '\r' and '\n' so that Windows line endings ("\r\n")
        // don't leave a trailing '\r' on the last value of each line.
        string[] lines = _predictionResult.Split(new[] { '\r', '\n' },
            StringSplitOptions.RemoveEmptyEntries);
        PredictionResultSimple = lines[0].Split(new[] { ' ' },
            StringSplitOptions.RemoveEmptyEntries);
        PredictionResultCnn = lines[1].Split(new[] { ' ' },
            StringSplitOptions.RemoveEmptyEntries);
    }

    SetPredictionText();
    OnPropertyChanged(nameof(PredictionResultSimple));
    OnPropertyChanged(nameof(PredictionResultCnn));
}

...

public string[] PredictionResultSimple { get; private set; }

public string[] PredictionResultCnn { get; private set; }

...

public void SetPredictionText()
{
    if (PredictionResultSimple == null || PredictionResultCnn == null)
    {
        PredictionTextSimple = null;
        PredictionTextCnn = null;
    }
    else
    {
        string fmt = "{0}: '{1}'\n(Certainty: {2}%)";

        // Compare the values numerically: as strings, "100.00" would rank below "99.99".
        // (CultureInfo requires "using System.Globalization;".)
        string percentageSimple = PredictionResultSimple
            .OrderByDescending(p => double.Parse(p, CultureInfo.InvariantCulture))
            .First();
        int digitSimple = Array.IndexOf(PredictionResultSimple, percentageSimple);
        PredictionTextSimple = string.Format(fmt, "Simple Neural Network",
            digitSimple, percentageSimple);

        string percentageCnn = PredictionResultCnn
            .OrderByDescending(p => double.Parse(p, CultureInfo.InvariantCulture))
            .First();
        int digitCnn = Array.IndexOf(PredictionResultCnn, percentageCnn);
        PredictionTextCnn = string.Format(fmt, "Convolutional Neural Network",
            digitCnn, percentageCnn);
    }

    OnPropertyChanged(nameof(PredictionTextSimple));
    OnPropertyChanged(nameof(PredictionTextCnn));
    SetWeightedPrediction();
}
```

(Determining the predicted digit is really straightforward in our case: since the numbers represent probabilities for the digits 0-9, the predicted digit is simply the index of the highest value in the array.)
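For comparison, here is the same parsing and index-of-maximum logic as a short Python sketch (the helper names are hypothetical), checked against the sample output from above. It compares the values as numbers, and splitlines() copes with both \n and \r\n line endings:

```python
def parse_prediction(output):
    """Split the two-line script output into two lists of floats (simple, CNN)."""
    rows = [line.split() for line in output.splitlines() if line.strip()]
    if len(rows) < 2:
        raise ValueError("expected two lines of percentages")
    simple, cnn = ([float(v) for v in row] for row in rows[:2])
    return simple, cnn


def predicted_digit(percentages):
    """The predicted digit is the index of the highest percentage (first one on ties)."""
    return max(range(len(percentages)), key=lambda i: percentages[i])
```

With the sample output, the simple net predicts 8 (29.66%) while the CNN predicts 2 (52.72%), a disagreement that the weighted result described next has to settle.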

Displaying the Raw Results

Now that we have the predictions from the two neural nets, all that's left to do is to bind our viewmodel's PredictionResultSimple and PredictionResultCnn properties to the corresponding controls in the UI, i.e., the series of read-only text boxes shown above. This is easily done with indexer syntax in the binding path:

```xml
<TextBox Grid.Row="0" Grid.Column="1"
         Text="{Binding PredictionResultSimple[0]}" />
<TextBox Grid.Row="1" Grid.Column="1"
         Text="{Binding PredictionResultSimple[1]}" />
...
<TextBox Grid.Row="0" Grid.Column="3"
         Text="{Binding PredictionResultCnn[0]}" />
<TextBox Grid.Row="1" Grid.Column="3"
         Text="{Binding PredictionResultCnn[1]}" />
```

Weighted Result

Calculating and Displaying the Weighted Result

With the unweighted output from the two neural networks now available, we can go on and calculate the weighted overall result.

Remember that the relative weight of the two nets can be varied by the user via the slider control that was shown above. In MainWindow.xaml, we therefore bind the Value property of the Slider control to the viewmodel's Weight property:

```xml
<Slider Grid.Row="1"
        Orientation="Vertical"
        HorizontalAlignment="Center"
        Minimum="0.1" Maximum="0.9"
        TickFrequency="0.1"
        SmallChange="0.1"
        LargeChange="0.3"
        TickPlacement="Both"
        Value="{Binding Weight}" />
```

In the viewmodel, the Weight property then determines the two factors that are used to calculate the weighted average of the AI models' output:

```csharp
public double Weight
{
    get => _weight;
    set
    {
        // Only react to changes of (roughly) one slider tick (0.1) or more.
        if (Math.Abs(_weight - value) > 0.09999999999)
        {
            _weight = Math.Round(value, 2);
            _simpleFactor = _weight;
            _cnnFactor = 1 - _weight;
            OnPropertyChanged();
            OnPropertyChanged(nameof(WeightSimple));
            OnPropertyChanged(nameof(WeightCnn));
            ClearResults();
        }
    }
}

...

private void SetWeightedPrediction()
{
    if (PredictionResultSimple == null || PredictionResultCnn == null)
    {
        WeightedPrediction = '\0';
        WeightedPredictionCertainty = null;
    }
    else
    {
        double[] combinedPercentages = new double[10];
        for (int i = 0; i < 10; i++)
        {
            // Weighted average (the two factors add up to 1). Parse with the invariant
            // culture, since the script always uses '.' as the decimal separator.
            combinedPercentages[i] =
                double.Parse(PredictionResultSimple[i], CultureInfo.InvariantCulture) * _simpleFactor +
                double.Parse(PredictionResultCnn[i], CultureInfo.InvariantCulture) * _cnnFactor;
        }

        double max = combinedPercentages.Max();
        WeightedPrediction = Array.IndexOf(combinedPercentages, max).ToString()[0];
        WeightedPredictionCertainty = $"({max:00.00}%)";
    }

    OnPropertyChanged(nameof(WeightedPredictionCertainty));
    OnPropertyChanged(nameof(WeightedPrediction));
}
```

Invoking the Script to Get the Stacked Bar Chart

The bar chart that visually presents the weighted results is produced by another Python script, chart.py. It takes as input the output of predict.py (i.e., the two lines with the percentage values), together with the weights for the simple and the convolutional neural network. This is the corresponding method of the MainViewModel class, which sets the BarChart and ChartTitle properties:

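```csharp
// Requires "using System.Runtime.InteropServices;"
[DllImport("gdi32.dll")]
private static extern bool DeleteObject(IntPtr hObject);

internal async Task DrawChart()
{
    BarChart = null;
    ChartTitle = null;

    var bitmap = await _pythonRunner.GetImageAsync(
        _chartScript,
        _predictionResult,
        _simpleFactor,
        _cnnFactor);

    if (bitmap != null)
    {
        // GetHbitmap() creates a GDI bitmap handle that the garbage collector does not
        // release, so it must be freed explicitly after the conversion.
        IntPtr hBitmap = bitmap.GetHbitmap();
        try
        {
            BarChart = Imaging.CreateBitmapSourceFromHBitmap(
                hBitmap,
                IntPtr.Zero,
                Int32Rect.Empty,
                BitmapSizeOptions.FromEmptyOptions());
        }
        finally
        {
            DeleteObject(hBitmap);
        }

        // ":0" avoids titles like "30.000000000000004%" caused by floating-point rounding.
        ChartTitle = $"Weighted Prediction Result (Conv.: {_cnnFactor * 100:0}%, " +
                     $"Simple: {_simpleFactor * 100:0}%)";
    }

    OnPropertyChanged(nameof(ChartTitle));
}

...

public BitmapSource BarChart
{
    get => _barChart;
    set
    {
        if (!Equals(_barChart, value))
        {
            _barChart = value;
            OnPropertyChanged();
        }
    }
}

public string ChartTitle { get; private set; }
```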

(Note that the raw Bitmap is converted into a BitmapSource, the WPF base class for bitmap images, because WPF's Image control cannot display a System.Drawing.Bitmap directly.)

The last step is then to bind the BarChart property to a WPF Image control:

```xml
<Image Source="{Binding BarChart}" />
```

History

- 10th October, 2019: Initial version