In this article, we'll select a pretrained model, load it into a detector variable, walk through code that picks a random image and detects people in it, and look at ImageAI's method for filtering detected objects, detectCustomObjectsFromImage.

In this series, we’ll learn how to use Python, OpenCV (an open source computer vision library), and ImageAI (a deep learning library for vision) to train AI to detect whether workers are wearing hardhats. In the process, we’ll create an end-to-end solution you can use in real life—this isn’t just an academic exercise!

This is an important use case because many companies must ensure workers have the proper safety equipment. But what we’ll learn is useful beyond just detecting hardhats. By the end of the series, you’ll be able to use AI to detect nearly any kind of object in an image or video stream.

You’re currently on article 3 of 6:

- Installing OpenCV and ImageAI for Object Detection

- Finding Training Data for OpenCV and ImageAI Object Detection

- Using Pre-trained Models to Detect Objects With OpenCV and ImageAI

- Preparing Images for Object Detection With OpenCV and ImageAI

- Training a Custom Model With OpenCV and ImageAI

- Detecting Custom Model Objects with OpenCV and ImageAI

Now that we’ve loaded and tested the OpenCV library, let’s have a look at some of the pretrained models we can use in ImageAI to start detecting people in images.

ImageAI provides a number of very convenient methods for performing object detection on images and videos, using a combination of Keras, TensorFlow, OpenCV, and trained models.

Selecting a Pretrained Model

The ImageAI GitHub repository stores a number of pretrained models for image recognition and object detection, including:

- RetinaNet – a convolutional neural network (the weights file here uses a ResNet-50 backbone) that’s built for high performance and accuracy, but with a longer detection time

- YOLOv3 – an implementation of the You Only Look Once (YOLO) algorithm that’s built for moderate performance, accuracy, and detection time

- TinyYOLOv3 – another YOLO implementation, but built for quick detection and performance, with moderate accuracy

Since we’re hoping to build a reasonably accurate detection program that may need to work with video, let’s choose YOLOv3. Start a new code block and enter the following:

import os

import requests as req  # assumed alias, matching the req.get call below

modelRetinaNet = 'https://github.com/OlafenwaMoses/ImageAI/releases/download/1.0/resnet50_coco_best_v2.0.1.h5'

modelYOLOv3 = 'https://github.com/OlafenwaMoses/ImageAI/releases/download/1.0/yolo.h5'

modelTinyYOLOv3 = 'https://github.com/OlafenwaMoses/ImageAI/releases/download/1.0/yolo-tiny.h5'

# Download the YOLOv3 weights only if they aren't already in the project directory

if not os.path.exists('yolo.h5'):

    r = req.get(modelYOLOv3, timeout=0.5)

    with open('yolo.h5', 'wb') as outfile:

        outfile.write(r.content)

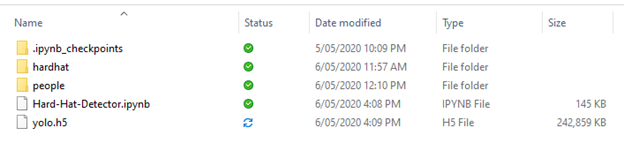

I've included all three links in the setup of this code block so they can be changed if necessary. Using a method similar to the earlier one, this code block will download the relevant model and save it to the project's base directory, ready to be used.
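For a file as large as a model, streaming the download to disk can be gentler on memory than holding the whole response in r.content. The following is a minimal sketch of that idea using only Python's standard library rather than the requests call above. The function name download_if_missing, the ".part" temporary suffix, and the 60-second timeout are assumptions made for illustration and are not part of the original code.

```python
import os
import shutil
import urllib.request


def download_if_missing(url, path, timeout=60):
    """Download url to path unless path already exists.

    Returns True if a download happened, False if the file was already there.
    """
    if os.path.exists(path):
        return False
    # Write to a temporary name first so an interrupted download
    # never leaves a partial file under the final name.
    tmp_path = path + ".part"
    with urllib.request.urlopen(url, timeout=timeout) as response, \
            open(tmp_path, "wb") as outfile:
        shutil.copyfileobj(response, outfile)  # copies in chunks
    os.replace(tmp_path, path)
    return True
```

With this helper, the download step would read download_if_missing(modelYOLOv3, 'yolo.h5'), and rerunning the code block would skip the download once the file is in place.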

Detecting People

Once the model has been downloaded, we need to load it into our detector. We really only want to do this once as loading the model can take a bit of time, so let's create a code block just for loading the model:

from imageai.Detection import ObjectDetection

detector = ObjectDetection()

detector.setModelTypeAsYOLOv3()

detector.setModelPath('yolo.h5')

detector.loadModel()

This code block loads our model into a detector variable using:

- setModelTypeAsYOLOv3() – sets the model we’re using to detect objects as a YOLOv3; other options include setModelTypeAsRetinaNet or setModelTypeAsTinyYOLOv3

- setModelPath() – provides the location of the model

- loadModel() – loads the model into memory
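If you expect to switch between the three models, the setter calls can be wrapped in a small helper so the choice lives in one place. This is only a sketch: load_detector and MODEL_SETTERS are hypothetical names, and the setter method names are the ones mentioned in this article. The StubDetector class exists purely so the sketch runs without ImageAI installed; in the notebook you would pass ObjectDetection() instead.

```python
# Maps a short model name to the ImageAI setter method named in the article.
MODEL_SETTERS = {
    "retinanet": "setModelTypeAsRetinaNet",
    "yolov3": "setModelTypeAsYOLOv3",
    "tiny-yolov3": "setModelTypeAsTinyYOLOv3",
}


def load_detector(detector, model_name, model_path):
    """Configure and load a detector for the chosen model (hypothetical helper)."""
    if model_name not in MODEL_SETTERS:
        raise ValueError("Unknown model: {0}".format(model_name))
    getattr(detector, MODEL_SETTERS[model_name])()  # choose the architecture
    detector.setModelPath(model_path)               # point at the weights file
    detector.loadModel()                            # load into memory (slow)
    return detector


class StubDetector:
    """Stand-in that records calls; not part of ImageAI."""

    def __init__(self):
        self.calls = []

    def setModelTypeAsRetinaNet(self):
        self.calls.append("type:retinanet")

    def setModelTypeAsYOLOv3(self):
        self.calls.append("type:yolov3")

    def setModelTypeAsTinyYOLOv3(self):
        self.calls.append("type:tiny-yolov3")

    def setModelPath(self, path):
        self.calls.append("path:" + path)

    def loadModel(self):
        self.calls.append("load")


stub = load_detector(StubDetector(), "yolov3", "yolo.h5")
print(stub.calls)
```

The model type has to match the weights file, so changing the name also means pointing model_path at the corresponding download.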

You’ll see a few warnings the first time you use the detector because the version of TensorFlow in use is getting old, but these are safe to ignore for now. Once the model is loaded and ready to go, let’s randomly pull up an image and start detecting people. Create a new code block and add the following:

import random

peopleImages = os.listdir("people")

randomFile = peopleImages[random.randint(0, len(peopleImages) - 1)]

detectedImage, detections = detector.detectObjectsFromImage(output_type="array", input_image="people/{0}".format(randomFile), minimum_percentage_probability=30)

convertedImage = cv.cvtColor(detectedImage, cv.COLOR_RGB2BGR)

showImage(convertedImage)

for eachObject in detections:

    print(eachObject["name"] , " : ", eachObject["percentage_probability"], " : ", eachObject["box_points"] )

    print("--------------------------------")

This code block is split into three sections. The first section just picks a random file from our "people" directory to perform detection on. The second part uses the model to perform the detection by:

- Using the detectObjectsFromImage method, which can return a NumPy array (into the first variable, detectedImage) or output to an image file:

  - The input_image parameter specifies the file to perform the detections on

  - The minimum_percentage_probability parameter sets the minimum confidence, as a percentage, that a detection must reach to be reported

  - The method also outputs the list of detections to the variable detections

- Taking the detected image NumPy array and loading it into an OpenCV image. OpenCV uses a BGR array to store data, so we need to convert the RGB array to BGR.

- Using the showImage helper, which relies on OpenCV, to display the image.
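To make the effect of minimum_percentage_probability concrete, here is a small sketch that applies the same kind of threshold to a list of detections after the fact. The sample detections are made up for illustration, and filter_by_confidence is a hypothetical helper, not an ImageAI function. The dictionary keys match the ones printed by the code above.

```python
def filter_by_confidence(detections, minimum_percentage):
    """Keep detections whose confidence is at least minimum_percentage."""
    return [
        d for d in detections
        if d["percentage_probability"] >= minimum_percentage
    ]


# Made-up sample data in the same shape as the detections list.
sample = [
    {"name": "person", "percentage_probability": 98.2, "box_points": [12, 30, 140, 310]},
    {"name": "handbag", "percentage_probability": 44.0, "box_points": [90, 200, 130, 260]},
    {"name": "tie", "percentage_probability": 21.5, "box_points": [60, 80, 75, 150]},
]

kept = filter_by_confidence(sample, 30)
print([d["name"] for d in kept])  # ['person', 'handbag']
```

Raising the threshold trades missed objects for fewer false positives, so a value such as 30 is a starting point to tune against your own images.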

After we display the image, the last section prints each detection along with the bounding box that covers it. The first time you run the detections, you’ll notice it takes a long time for the image to display. This is because the model is "warming up." If you rerun this code block, however, the images should display pretty quickly. Also, depending on your image, you might see that it’s not just people being detected when you run the model. This is because the YOLOv3 model provided was trained on about 80 different types of objects.

The objects are all tagged as well. If you hit any key to close the image and look at the text that’s outputted, you’ll see three values:

- The name of the object. In this case, most of the objects should be a person, but you might find a cup, tie, handbag, and so forth.

- The confidence level of the model that the detection is correct, as a percentage, such as 98% or 44%. The minimum_percentage_probability parameter sets the threshold here.

- The bounding box that covers the object, in pixel coordinates from the top-left corner to the bottom-right corner: [x1, y1, x2, y2].
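Because the bounding box is given as two corners, the width and height of each detection follow from simple subtraction. The sketch below assumes box_points is ordered [x1, y1, x2, y2] (top-left corner, then bottom-right corner). The helpers box_size and describe_detection and the sample values are illustrative only.

```python
def box_size(box_points):
    """Return (width, height) in pixels for an [x1, y1, x2, y2] box."""
    x1, y1, x2, y2 = box_points
    return x2 - x1, y2 - y1


def describe_detection(detection):
    """Format one detection dictionary as a single readable line."""
    width, height = box_size(detection["box_points"])
    x1, y1 = detection["box_points"][:2]
    return "{0} ({1:.1f}%): {2}x{3} px at ({4}, {5})".format(
        detection["name"],
        detection["percentage_probability"],
        width,
        height,
        x1,
        y1,
    )


# Made-up sample detection for illustration.
example = {
    "name": "person",
    "percentage_probability": 98.234,
    "box_points": [12, 30, 140, 310],
}
print(describe_detection(example))  # person (98.2%): 128x280 px at (12, 30)
```

Box sizes like these are handy later for cropping detected people out of an image or for ignoring detections that are too small to be useful.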

Detecting Only People

All this looks pretty good, but what if we want to detect only people in our source? Fortunately, ImageAI provides a method for filtering objects: detectCustomObjectsFromImage. Let's modify the second section of our previous code block to see how this works:

peopleOnly = detector.CustomObjects(person=True)

detectedImage, detections = detector.detectCustomObjectsFromImage(custom_objects=peopleOnly, output_type="array", input_image="people/{0}".format(randomFile), minimum_percentage_probability=30)

convertedImage = cv.cvtColor(detectedImage, cv.COLOR_RGB2BGR)

showImage(convertedImage)

If you run this code block a couple of times, you’ll see that now only people are being detected in all the images. And now that we have a reasonable detector set up, we’re going to make our own detector model to look for people wearing hardhats.
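Conceptually, the filter keeps only detections whose name is in an allowed set. The sketch below mimics that on a plain list of detection dictionaries so you can see the effect without running the model. It is not how ImageAI implements detectCustomObjectsFromImage internally, and keep_only and the sample data are hypothetical.

```python
from collections import Counter


def keep_only(detections, allowed_names):
    """Return the detections whose name is in allowed_names."""
    allowed = set(allowed_names)
    return [d for d in detections if d["name"] in allowed]


# Made-up detections in the same shape ImageAI returns.
mixed = [
    {"name": "person", "percentage_probability": 97.1, "box_points": [10, 20, 110, 300]},
    {"name": "cup", "percentage_probability": 52.3, "box_points": [200, 150, 230, 190]},
    {"name": "person", "percentage_probability": 88.6, "box_points": [150, 25, 260, 310]},
    {"name": "tie", "percentage_probability": 35.0, "box_points": [50, 90, 65, 160]},
]

people = keep_only(mixed, ["person"])
print(len(people))                              # 2
print(Counter(d["name"] for d in mixed))        # per-class counts before filtering
```

Filtering inside the detector call, as the article does, has the added benefit that the returned image only has boxes drawn for the selected classes.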

Up Next

We’ve seen how we can use a pretrained model to detect people in our image dataset.

Next, we’ll learn how to train a custom AI model.