Here we look at: gathering test images; importing TensorFlow.js and a collection of sample images; adding an image element (set to 224px) and a status text element, with functions to set the image and the status text; adding code to randomly pick and load one of the images; running object recognition; and adding code to show the prediction result in text.

TensorFlow + JavaScript. The most popular, cutting-edge AI framework now supports the most widely used programming language on the planet, so let’s make magic happen through deep learning right in our web browser, GPU-accelerated via WebGL using TensorFlow.js!

In this article, we’ll dive into computer vision running right within a web browser. I will show you how easily and quickly you can start detecting and recognizing objects in images using the pre-trained MobileNet model in TensorFlow.js.

Starting Point

To analyze images with TensorFlow.js, we first need to:

- Gather test images of dogs, food, and more (the images used in this project are from pexels.com)

- Import TensorFlow.js

- Add code to randomly pick and load one of the images

- Add code to show the prediction result in text

Here’s our starting point:

<html>

<head>

<title>Dogs and Pizza: Computer Vision in the Browser with TensorFlow.js</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

<style>

img {

object-fit: cover;

}

</style>

</head>

<body>

<img id="image" src="" width="224" height="224" />

<h1 id="status">Loading...</h1>

<script>

const images = [

"web/dalmation.jpg",

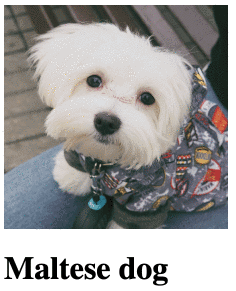

"web/maltese.jpg",

"web/pug.jpg",

"web/pomeranians.jpg",

"web/pizzaslice.jpg",

"web/pizzaboard.jpg",

"web/squarepizza.jpg",

"web/pizza.jpg",

"web/salad.jpg",

"web/salad2.jpg",

"web/kitty.jpg",

"web/upsidedowncat.jpg",

];

function pickImage() {

document.getElementById( "image" ).src = images[ Math.floor( Math.random() * images.length ) ];

}

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

(async () => {

setInterval( pickImage, 5000 );

})();

</script>

</body>

</html>

This web code imports TensorFlow.js and a collection of sample images, adds an image element (set to 224px) and a status text element, and defines two helper functions: pickImage() to load a random image and setText() to update the status text. You can update the array of images to match the filenames of other test images you download.

Open the page with the above code in your browser. After the first five seconds, you should see a randomly selected image, replaced by a new random pick every five seconds.
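The selection inside pickImage() works because Math.random() returns a float in the range [0, 1): multiplying it by the array length and rounding down always yields a valid index from 0 to length - 1. Here is a small sketch of the same idea as a standalone helper; the name pickRandom and its injectable random parameter are hypothetical additions, used only so the behavior can be checked without real randomness.

```javascript
// Hypothetical helper mirroring the index math in pickImage().
// Math.random() is in [0, 1), so random() * items.length is in [0, items.length),
// and Math.floor() turns that into an integer index from 0 to items.length - 1.
function pickRandom(items, random = Math.random) {
  if (items.length === 0) {
    throw new Error("cannot pick from an empty list");
  }
  return items[Math.floor(random() * items.length)];
}
```

Passing a fixed function such as () => 0 always returns the first item, which makes the rounding behavior easy to verify.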

A Note on Hosting

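Before we go further, note that for this project to run properly, the webpage and images must be served from a web server rather than opened directly from your file system. Browsers typically treat images loaded from file:// URLs as cross-origin, and WebGL refuses to read pixels from an image or canvas tainted by cross-origin data.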

Otherwise, you may run into this error when TensorFlow.js tries to read the image pixels:

DOMException: Failed to execute 'texImage2D' on 'WebGL2RenderingContext': Tainted canvases may not be loaded.

If you don’t have a server like Apache or IIS running, you can easily run one in Node.js with WebWebWeb, an NPM module I’ve built for this very purpose.
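If you would rather not install anything, here is a minimal sketch of a static file server that uses only Node.js built-in modules (http, fs, and path). It is not WebWebWeb and not meant for production; the function names createStaticServer, resolveSafePath, and contentTypeFor are hypothetical, and it assumes your page is saved as index.html in the folder you serve.

```javascript
// Minimal static file server sketch using only Node.js built-ins.
// Hypothetical stand-in for local testing; this is NOT the WebWebWeb module.
const http = require("http");
const fs = require("fs");
const path = require("path");

// Content types for the kinds of files this project serves.
const CONTENT_TYPES = {
  ".html": "text/html; charset=utf-8",
  ".js": "text/javascript; charset=utf-8",
  ".json": "application/json",
  ".jpg": "image/jpeg",
  ".jpeg": "image/jpeg",
  ".png": "image/png",
};

function contentTypeFor(filePath) {
  return CONTENT_TYPES[path.extname(filePath).toLowerCase()] || "application/octet-stream";
}

// Map a request URL to a file inside rootDir; return null if it would escape rootDir.
function resolveSafePath(rootDir, requestUrl) {
  const root = path.resolve(rootDir);
  const prefix = root.endsWith(path.sep) ? root : root + path.sep;
  let urlPath;
  try {
    urlPath = decodeURIComponent(new URL(requestUrl, "http://localhost").pathname);
  } catch {
    return null; // malformed percent-encoding
  }
  if (urlPath.includes("\0")) {
    return null; // null bytes are never valid in a file path
  }
  if (urlPath.endsWith("/")) {
    urlPath += "index.html"; // assumption: directories serve index.html
  }
  const filePath = path.resolve(root, "." + urlPath);
  return filePath.startsWith(prefix) ? filePath : null;
}

function createStaticServer(rootDir) {
  return http.createServer((req, res) => {
    const filePath = resolveSafePath(rootDir, req.url);
    if (filePath === null) {
      res.writeHead(403);
      res.end("Forbidden");
      return;
    }
    fs.readFile(filePath, (err, data) => {
      if (err) {
        res.writeHead(404);
        res.end("Not found");
        return;
      }
      res.writeHead(200, { "Content-Type": contentTypeFor(filePath) });
      res.end(data);
    });
  });
}
```

For example, add createStaticServer(".").listen(8080); at the bottom, run the file with node from your project folder, and open http://localhost:8080/ in your browser. The path check rejects requests such as /..%2f..%2fsecret.txt that would otherwise resolve outside the served folder.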

MobileNet v1

From GitHub: "MobileNets are small, low-latency, low-power models parameterized to meet the resource constraints of a variety of use cases."

For this project, we will use MobileNet v1, which has been trained on over a million images from the ImageNet dataset to recognize 1000 different categories of objects, ranging from dog breeds to types of food. The model comes in multiple variations so that developers can trade off size and speed against prediction accuracy.
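The MobileNet v1 variations differ mainly in two settings: the input resolution (128, 160, 192, or 224 pixels) and a width multiplier α (0.25, 0.5, 0.75, or 1.0) that thins the number of channels in every layer. The 0.25 and 224 in the model name mobilenet_v1_0.25_224 are these two settings. The MobileNets paper gives the computational cost of one depthwise separable layer with width multiplier α as follows, where D_K is the kernel size, M and N are the input and output channel counts, and D_F is the width and height of the feature map:

$$
\underbrace{D_K \cdot D_K \cdot \alpha M \cdot D_F \cdot D_F}_{\text{depthwise}} \;+\; \underbrace{\alpha M \cdot \alpha N \cdot D_F \cdot D_F}_{\text{pointwise}}
$$

Because the pointwise term dominates, cost and parameter count shrink roughly by a factor of α²; with α = 0.25 that is about 1/16 of the full-width model, which is why this variation is small and fast at some cost in accuracy.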

Thankfully, Google hosts the model on its servers, so we can use it directly in our project. We’ll use the smallest variation, with a width multiplier of 0.25, for 224x224 pixel image input.

Let’s add it to our script and load the model with TensorFlow.js.

```javascript
const mobilenet = "https://storage.googleapis.com/tfjs-models/tfjs/mobilenet_v1_0.25_224/model.json";

let model = null;

(async () => {
  model = await tf.loadLayersModel( mobilenet );
  setInterval( pickImage, 5000 );
})();
```

Running Object Recognition

Before passing our image to the TensorFlow model, we need to convert its pixels into a tensor. We also have to scale each RGB channel value from the 0 to 255 range to a floating-point value between -1.0 and 1.0, because that is the input range the MobileNet model was trained with.
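To make the conversion concrete, here is a plain-JavaScript sketch (no TensorFlow.js required) of what the tensor pipeline does to raw pixels. The function name toMobileNetInput is hypothetical. It assumes RGBA bytes laid out like a canvas ImageData buffer and drops the alpha channel, as tf.browser.fromPixels does with its default of three channels. It also shows why the divisor is 127.5: 0 maps to -1 and 255 maps to exactly 1, whereas dividing by 127 would push 255 slightly above 1.0 (about 1.008).

```javascript
// Hypothetical sketch of: fromPixels -> toFloat -> div(127.5).sub(1) -> reshape([1, h, w, 3]).
// Input: RGBA bytes (0..255), row by row, as in a canvas ImageData buffer.
function toMobileNetInput(rgba, width, height) {
  const expected = width * height * 4;
  if (rgba.length !== expected) {
    throw new Error(`expected ${expected} RGBA values, got ${rgba.length}`);
  }
  const data = new Float32Array(width * height * 3);
  for (let pixel = 0; pixel < width * height; pixel++) {
    for (let channel = 0; channel < 3; channel++) {
      // Scale 0..255 to -1.0..1.0; the alpha byte (channel 3) is skipped.
      data[pixel * 3 + channel] = rgba[pixel * 4 + channel] / 127.5 - 1;
    }
  }
  // Row-major [batch, height, width, channels] layout, with a batch of one image.
  return { shape: [1, height, width, 3], data };
}
```

In the page itself, TensorFlow.js performs these steps for us; the sketch only makes the arithmetic and the [1, 224, 224, 3] layout visible.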

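TensorFlow.js has built-in functions to help us perform these operations easily. We also need to manage memory: with the WebGL backend, a tensor's memory is not released just because the tensor goes out of scope. So we wrap the tensor operations in tf.tidy(), which disposes of every intermediate tensor created inside its callback except the one it returns, and then dispose of that returned result ourselves after reading its data. With that in mind, we can write a function to run the model and get the predicted object identifier like this: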

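```javascript
async function predictImage() {
  // tf.tidy() disposes of the intermediate tensors created in this callback
  let result = tf.tidy( () => {
    // Read the 224x224 <img> pixels into a [224, 224, 3] float tensor
    const img = tf.browser.fromPixels( document.getElementById( "image" ) ).toFloat();
    // Scale 0..255 to -1.0..1.0 (dividing by 127.5 maps 255 to exactly 1.0)
    const normalized = img.div( 127.5 ).sub( 1 );
    // Add a batch dimension: [1, 224, 224, 3]
    const input = normalized.reshape( [ 1, 224, 224, 3 ] );
    return model.predict( input );
  });
  // Copy the scores out of the tensor, then free it
  let prediction = await result.data();
  result.dispose();
  // The index of the highest score is the predicted category
  let id = prediction.indexOf( Math.max( ...prediction ) );
  setText( labels[ id ] );
}
```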

You’ll notice that the above function passes labels[ id ] to setText() to show the predicted text. The labels array is a predefined list of the 1000 ImageNet category labels, in the same order as the output indices of the MobileNet model.

If you download the labels file, remember to include it as a script in the `<head>` of your page like this:

```html
<script src="web/labels.js"></script>
```
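predictImage() keeps only the single best category via indexOf( Math.max( ...prediction ) ). If you would like to show a few runner-up guesses as well, here is a plain-JavaScript sketch of a hypothetical topK() helper. It assumes scores is the array returned by result.data() and labels is the array from labels.js, with matching indices.

```javascript
// Hypothetical helper: return the k highest-scoring categories, best first.
// Works with plain arrays and typed arrays such as the Float32Array from result.data().
function topK(scores, labels, k = 3) {
  const indices = Array.from(scores.keys());
  // Sort category indices by descending score; 1000 entries sort quickly.
  indices.sort((a, b) => scores[b] - scores[a]);
  return indices.slice(0, k).map((i) => ({ label: labels[i], score: scores[i] }));
}
```

Inside predictImage(), a call such as setText( topK( prediction, labels ).map( p => p.label ).join( ", " ) ) would display the three best guesses instead of one.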

Finally, let’s have the image element run this prediction function whenever a new image loads, by setting its onload handler at the bottom of our code:

```javascript
(async () => {
  model = await tf.loadLayersModel( mobilenet );
  setInterval( pickImage, 5000 );
  document.getElementById( "image" ).onload = predictImage;
})();
```

Once you are done, your model should start predicting what is in the image.

Finish Line

Now you’ve put all the pieces together. Just like that, you’ve got a webpage that uses computer vision and can recognize objects. Not bad, eh?

```html
<html>
  <head>
    <title>Dogs and Pizza: Computer Vision in the Browser with TensorFlow.js</title>
    <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>
    <style>
      img {
        object-fit: cover;
      }
    </style>
    <script src="web/labels.js"></script>
  </head>
  <body>
    <img id="image" src="" width="224" height="224" />
    <h1 id="status">Loading...</h1>
    <script>
      const images = [
        "web/dalmation.jpg",
        "web/maltese.jpg",
        "web/pug.jpg",
        "web/pomeranians.jpg",
        "web/pizzaslice.jpg",
        "web/pizzaboard.jpg",
        "web/squarepizza.jpg",
        "web/pizza.jpg",
        "web/salad.jpg",
        "web/salad2.jpg",
        "web/kitty.jpg",
        "web/upsidedowncat.jpg",
      ];

      function pickImage() {
        document.getElementById( "image" ).src = images[ Math.floor( Math.random() * images.length ) ];
      }

      function setText( text ) {
        document.getElementById( "status" ).innerText = text;
      }

      async function predictImage() {
        // tf.tidy() disposes of the intermediate tensors created in this callback
        let result = tf.tidy( () => {
          const img = tf.browser.fromPixels( document.getElementById( "image" ) ).toFloat();
          // Scale 0..255 to -1.0..1.0
          const normalized = img.div( 127.5 ).sub( 1 );
          // Add a batch dimension: [1, 224, 224, 3]
          const input = normalized.reshape( [ 1, 224, 224, 3 ] );
          return model.predict( input );
        });
        let prediction = await result.data();
        result.dispose();
        // The index of the highest score is the predicted category
        let id = prediction.indexOf( Math.max( ...prediction ) );
        setText( labels[ id ] );
      }

      const mobilenet = "https://storage.googleapis.com/tfjs-models/tfjs/mobilenet_v1_0.25_224/model.json";

      let model = null;

      (async () => {
        model = await tf.loadLayersModel( mobilenet );
        setInterval( pickImage, 5000 );
        document.getElementById( "image" ).onload = predictImage;
      })();
    </script>
  </body>
</html>
```

What’s Next? Fluffy Animals?

With just a bit of code, we loaded a pre-trained model using TensorFlow.js to recognize objects from a fixed list of categories, inside a web browser. Imagine what you could do with this. Maybe create a web app that automatically classifies and sorts through thousands of photos.

Now, what if we want to recognize other objects that aren’t in the list of 1000 categories?

Follow along with the next article in this series to learn how you can expand this project to quickly train a Convolutional Neural Network to recognize... just about anything you wish.