Here we will use face-api.js, with its built-in facial landmark detection, to detect human facial expressions and skip the model-training step that grumpiness detection would otherwise require.

Facial expression recognition, one of the key areas of interest in image recognition, has always been in demand, but the training step is a big hindrance to making it production-ready. To sidestep this obstacle, let me introduce you to face-api.js, a JavaScript face recognition library built on top of TensorFlow.js.

Face-api.js is powerful and easy to use, exposing only what’s necessary for configuration. It implements several convolutional neural networks (CNNs) for face detection, recognition, and landmark detection, but hides the underlying layers so you never have to write the neural nets yourself. You can get the library and models from the face-api.js GitHub repository (justadudewhohacks/face-api.js).

Setting Up a Server

Before we dig into any code, we need to set up a server.

If you’ve been following along, you’ve seen that we kept things simple by opening our pages directly in the browser without setting up a server. That approach has worked up until now, but face-api.js fetches its model files over the network. Browsers block those requests when the page is opened from the local file system (a file:// URL), so the page and its model files have to be served over HTTP by a web server.

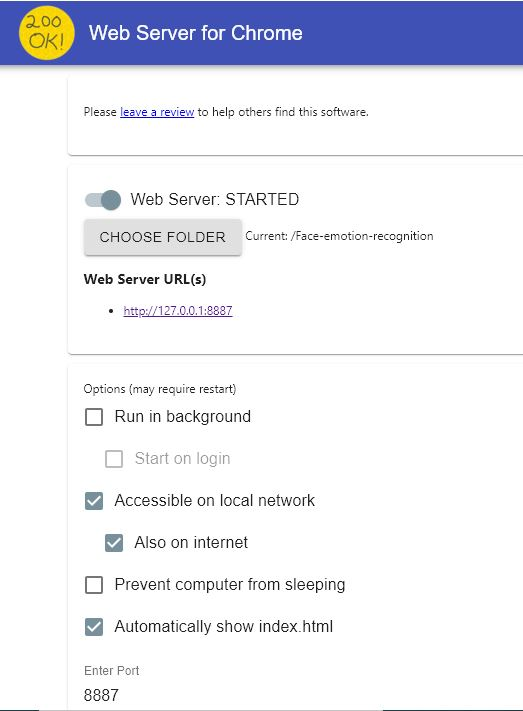

A simple way to set up a server is to install Web Server for Chrome. Launch it and choose the folder that contains your project files as the folder to serve.

Now you can access your application by going to http://127.0.0.1:8887.
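If you’d rather not rely on a browser extension, any static file server will do. The sketch below is one hedged alternative that uses only Node.js’s built-in `http`, `fs`, and `path` modules. The function names (`contentTypeFor`, `resolveInsideRoot`, `createStaticServer`) are our own and are not part of any library. The content-type table is an assumption that covers only the file types this app is likely to need.

```javascript
// Minimal static file server sketch (Node.js built-ins only).
const http = require("http");
const fs = require("fs");
const path = require("path");

// Content types for the files this app is assumed to serve; extend as needed.
const CONTENT_TYPES = {
  ".html": "text/html; charset=utf-8",
  ".js": "text/javascript; charset=utf-8",
  ".json": "application/json",
  ".png": "image/png",
};

function contentTypeFor(filePath) {
  // Unknown extensions (e.g. model weight shards) fall back to binary.
  return CONTENT_TYPES[path.extname(filePath).toLowerCase()] || "application/octet-stream";
}

// Map a request URL onto a file under rootDir.
// Returns null if the URL is malformed or would escape rootDir.
function resolveInsideRoot(rootDir, urlPath) {
  const root = path.resolve(rootDir);
  let decoded;
  try {
    decoded = decodeURIComponent(urlPath.split("?")[0]);
  } catch (err) {
    return null; // malformed percent-encoding
  }
  const relative = decoded === "/" ? "index.html" : decoded.replace(/^\/+/, "");
  const filePath = path.resolve(root, relative);
  return filePath === root || filePath.startsWith(root + path.sep) ? filePath : null;
}

// Build (but do not start) a server that serves files from rootDir.
function createStaticServer(rootDir) {
  return http.createServer((req, res) => {
    const filePath = resolveInsideRoot(rootDir, req.url);
    if (!filePath) {
      res.writeHead(403);
      res.end("Forbidden");
      return;
    }
    fs.readFile(filePath, (err, data) => {
      if (err) {
        res.writeHead(404);
        res.end("Not found");
        return;
      }
      res.writeHead(200, { "Content-Type": contentTypeFor(filePath) });
      res.end(data);
    });
  });
}
```

To use it, you could add `createStaticServer(__dirname).listen(8887, "127.0.0.1");` at the bottom of the file and open http://127.0.0.1:8887 as before. The path check rejects requests such as `/../secret.txt` that would otherwise escape the project folder.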

Setting Up the HTML

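Once you’ve set up the server, create an HTML document and load two scripts. The first is adapter.js, a WebRTC shim that smooths over browser differences in the camera APIs. The second is the face-api.js library itself:

```html
<!-- WebRTC shim that normalizes camera APIs across browsers -->
<script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<!-- face-api.js, served from the same folder as the page -->
<script src="face-api.js"></script>
```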

We also need a video element for the webcam feed, an element to display the prediction, and the JavaScript file that holds our app’s logic. Here’s the complete document:

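```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <!-- WebRTC shim that normalizes camera APIs across browsers -->
    <script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
    <!-- face-api.js, served from the same folder as this page -->
    <script src="face-api.js"></script>
  </head>
  <body>
    <h1>Real-Time Grumpiness Detection using face-api.js</h1>
    <!-- Webcam feed; autoplay + muted lets it start without a click -->
    <video autoplay muted id="video" width="224" height="224" style="margin: auto;"></video>
    <!-- Updated with the current prediction -->
    <div id="prediction">Loading</div>
    <!-- App logic; defer runs it after the document has been parsed -->
    <script defer src="index.js"></script>
  </body>
</html>
```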

Getting a Live Video Feed

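Let’s move to our JavaScript file (index.js) and grab references to the two elements we’ll be updating:

```javascript
// The <video> element that shows the webcam feed
const video = document.getElementById("video");
// The element where the current prediction is displayed
const text = document.getElementById("prediction");
```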

Next, we write a function that asks for access to the webcam and streams it into the video element:

```javascript
// Ask for the webcam and pipe its stream into the <video> element.
function startVideo() {
  // navigator.mediaDevices.getUserMedia is the standard, promise-based API;
  // the older callback-style navigator.getUserMedia is deprecated.
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    document.body.innerText = "getUserMedia not supported";
    console.log("getUserMedia not supported");
    return;
  }

  navigator.mediaDevices
    .getUserMedia({ video: true })
    .then((stream) => {
      video.srcObject = stream;
      // Start playback once the stream's dimensions are known
      video.onloadedmetadata = () => video.play();
    })
    .catch((err) => console.log(err.name)); // e.g. NotAllowedError if access is denied
}
```
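If you need to support browsers that expose only the older, callback-based (and possibly vendor-prefixed) `getUserMedia`, one option is to wrap it in a promise. The rest of your code can then use the same `.then`/`.catch` flow as the standard API. The sketch below assumes you pass in the `navigator` object; `getCameraStream` is a hypothetical helper name, not a browser API. Passing `navigator` in also lets you exercise the logic with a stub outside the browser. Since adapter.js already polyfills much of this, treat the sketch as an illustration of the fallback logic.

```javascript
// Hypothetical helper: get a camera stream from a navigator-like object,
// preferring the standard promise-based API and falling back to the
// legacy callback-style (possibly vendor-prefixed) getUserMedia.
function getCameraStream(nav, constraints = { video: true }) {
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return nav.mediaDevices.getUserMedia(constraints);
  }

  const legacy =
    nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia || nav.msGetUserMedia;
  if (typeof legacy !== "function") {
    return Promise.reject(new Error("getUserMedia not supported"));
  }

  // The legacy API takes (constraints, onSuccess, onError); adapt it to a promise.
  // .call(nav, ...) keeps `this` bound to navigator, which browsers require.
  return new Promise((resolve, reject) => legacy.call(nav, constraints, resolve, reject));
}
```

In the page, `getCameraStream(navigator).then((stream) => { video.srcObject = stream; })` would take the place of the direct `getUserMedia` call.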

Using face-api.js for Predictions

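Instead of downloading the models and serving them yourself, you can load them straight from the face-api.js GitHub repository. Each `loadFromUri` call returns a promise, so we wrap the calls in `Promise.all` and start the video only once every model has loaded: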

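```javascript
// Pretrained weights hosted in the face-api.js repository
const url = "https://raw.githubusercontent.com/justadudewhohacks/face-api.js/master/weights/";

// Load all models in parallel, then start the webcam
Promise.all([
  faceapi.nets.tinyFaceDetector.loadFromUri(url + "tiny_face_detector_model-weights_manifest.json"),
  faceapi.nets.faceLandmark68Net.loadFromUri(url + "face_landmark_68_model-weights_manifest.json"),
  // Only needed for face descriptors; the expression pipeline below doesn't use it
  faceapi.nets.faceRecognitionNet.loadFromUri(url + "face_recognition_model-weights_manifest.json"),
  faceapi.nets.faceExpressionNet.loadFromUri(url + "face_expression_model-weights_manifest.json"),
]).then(startVideo);
```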
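The page shows "Loading" while the models download, which can take a moment. If you’d like to show progress, one option is to wrap each load promise so that it reports when it finishes. The sketch below uses a hypothetical helper named `loadWithProgress`, which is not part of face-api.js. It behaves like `Promise.all` but also calls a callback as each promise resolves.

```javascript
// Hypothetical helper: wait for all promises like Promise.all, but call
// onProgress(done, total) each time one of them resolves.
function loadWithProgress(promises, onProgress) {
  let done = 0;
  const total = promises.length;
  return Promise.all(
    promises.map((p) =>
      Promise.resolve(p).then((value) => {
        done += 1;
        onProgress(done, total);
        return value; // keep the original value so results stay in input order
      })
    )
  );
}

// Example usage in the page (assumes `text` and the loadFromUri calls above):
// loadWithProgress([ /* the four loadFromUri(...) calls */ ], (done, total) => {
//   text.innerText = `Loading models (${done}/${total})`;
// }).then(startVideo);
```

As with `Promise.all`, a single failed download rejects the whole result, so a `.catch` handler is a natural place to display an error message.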

Face-api.js classifies expressions into seven categories: happy, sad, angry, disgusted, fearful, neutral, and surprised. It returns a probability for each category. Since we’re interested in two main categories, neutral and grumpy, we start with `top_prediction` set to neutral, which is also what we show when no face is detected. A person is often considered to be grumpy when they’re sad, angry, or disgusted. So we find the expression with the highest score, and if it is one of those three, we display it as grumpy. Let’s get our top prediction in string format:

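```javascript
// Map a face-api.js detection result to the label we display
function prediction_string(obj) {
  let top_prediction = "neutral"; // shown when no face is detected
  if (!obj) return top_prediction;

  const expressions = obj.expressions;
  let maxVal = 0;

  // Find the expression with the highest probability
  for (const p in expressions) {
    if (Object.prototype.hasOwnProperty.call(expressions, p) && expressions[p] > maxVal) {
      maxVal = expressions[p];
      top_prediction = p;
    }
  }

  // Sad, angry, and disgusted all count as grumpy.
  // (The original check, p===obj.sad || obj.disgusted || obj.angry, compared a
  // key name with a score and was true for almost any face.)
  if (["sad", "angry", "disgusted"].includes(top_prediction)) {
    top_prediction = "grumpy";
  }
  return top_prediction;
}
```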
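If you’d rather collapse everything into exactly two labels, neutral and grumpy, there is a more literal reading of the rule: call the face grumpy when any of sad, angry, or disgusted outscores neutral. The sketch below implements that variant. The name `grumpyOrNeutral` is ours, and the sketch assumes the detection object carries an `expressions` map of probabilities, as face-api.js results do.

```javascript
// Hypothetical two-label variant of prediction_string.
const GRUMPY_EXPRESSIONS = ["sad", "angry", "disgusted"];

function grumpyOrNeutral(detection) {
  // No face detected: fall back to neutral, as prediction_string does.
  if (!detection || !detection.expressions) return "neutral";

  const scores = detection.expressions;
  const neutral = scores.neutral || 0;

  // Grumpy if any grumpy-looking expression scores higher than neutral.
  return GRUMPY_EXPRESSIONS.some((name) => (scores[name] || 0) > neutral) ? "grumpy" : "neutral";
}
```

The two rules differ. With this one, a mostly happy face can still come out grumpy if its neutral score is low. `prediction_string` says grumpy only when a grumpy expression is the single top score.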

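Lastly, we need to actually run the models on the video. `detectAllFaces` finds every face using the tiny face detector, `withFaceLandmarks` adds the 68 facial landmarks, and `withFaceExpressions` adds the expression scores. The result is an array with one entry per detected face. The call is asynchronous, so it has to be awaited inside an async function (in the final code, it runs inside a timer callback):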

```javascript
// Must run inside an async function; resolves to one result per detected face
const predictions = await faceapi
  .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
  .withFaceLandmarks()
  .withFaceExpressions();
```
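The final code simply takes `predictions[0]`, the first face in the array. If several people may be in frame, one option is to pick the largest face, on the assumption that it belongs to whoever is closest to the camera. Alternatively, face-api.js offers `detectSingleFace`, which returns only the face with the highest confidence score. The sketch below takes the largest-face approach. It assumes each result exposes `detection.box` with `width` and `height`, as face-api.js results do. `pickLargestFace` is a hypothetical name.

```javascript
// Hypothetical helper: return the result whose bounding box has the largest
// area, or undefined when no faces were detected (just like predictions[0]).
function pickLargestFace(predictions) {
  let best;
  let bestArea = -1;
  for (const p of predictions) {
    const { width, height } = p.detection.box;
    const area = width * height;
    if (area > bestArea) {
      bestArea = area;
      best = p;
    }
  }
  return best;
}
```

Because it returns `undefined` for an empty array, it can be dropped in as `prediction_string(pickLargestFace(predictions))` without changing how the no-face case is handled.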

Putting the Code Together

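The final code looks like this. Note that it loads the models from a local /models folder at the root of your web server rather than from GitHub. If you didn’t download the weights, use the GitHub URL from the previous section instead: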

```javascript
const video = document.getElementById("video");
const text = document.getElementById("prediction");

// Load the models from a local /models folder, then start the webcam.
// (faceRecognitionNet is only needed for face descriptors and could be dropped.)
Promise.all([
  faceapi.nets.tinyFaceDetector.loadFromUri("/models"),
  faceapi.nets.faceLandmark68Net.loadFromUri("/models"),
  faceapi.nets.faceRecognitionNet.loadFromUri("/models"),
  faceapi.nets.faceExpressionNet.loadFromUri("/models"),
]).then(startVideo);

// Map a face-api.js detection result to the label we display
function prediction_string(obj) {
  let top_prediction = "neutral"; // shown when no face is detected
  if (!obj) return top_prediction;

  const expressions = obj.expressions;
  let maxVal = 0;

  // Find the expression with the highest probability
  for (const p in expressions) {
    if (Object.prototype.hasOwnProperty.call(expressions, p) && expressions[p] > maxVal) {
      maxVal = expressions[p];
      top_prediction = p;
    }
  }

  // Sad, angry, and disgusted all count as grumpy
  if (["sad", "angry", "disgusted"].includes(top_prediction)) {
    top_prediction = "grumpy";
  }
  return top_prediction;
}

// Stream the webcam into the <video> element
function startVideo() {
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    document.body.innerText = "getUserMedia not supported";
    console.log("getUserMedia not supported");
    return;
  }

  navigator.mediaDevices
    .getUserMedia({ video: true })
    .then((stream) => {
      video.srcObject = stream;
      video.onloadedmetadata = () => video.play();
    })
    .catch((err) => console.log(err.name));
}

// Once the video is playing, run detection every 100 ms
video.addEventListener("play", () => {
  setInterval(async () => {
    const predictions = await faceapi
      .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
      .withFaceLandmarks()
      .withFaceExpressions();

    // predictions[0] is undefined when no face is found; prediction_string handles that
    text.innerText = prediction_string(predictions[0]);
  }, 100);
});
```
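`setInterval` fires every 100 ms whether or not the previous detection has finished, so on a slow machine detection calls can pile up. One alternative is to schedule the next detection only after the current one resolves. The sketch below uses a hypothetical `startDetectionLoop(detect, onResult, delayMs)` helper, not part of face-api.js, that returns a stop function. In the page, `detect` would be the face-api.js detection chain and `onResult` would update `text`.

```javascript
// Hypothetical helper: run an async detect() repeatedly, waiting delayMs after
// each call finishes before starting the next, so calls never overlap.
// Returns a function that stops the loop.
function startDetectionLoop(detect, onResult, delayMs = 100) {
  let stopped = false;
  let timer = null;

  async function tick() {
    if (stopped) return;
    try {
      const result = await detect();
      if (!stopped) onResult(result);
    } catch (err) {
      console.log(err); // log the failure and keep looping
    }
    if (!stopped) timer = setTimeout(tick, delayMs);
  }

  tick();
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}

// Example usage in the page (assumes video, text, faceapi, prediction_string):
// video.addEventListener("play", () => {
//   startDetectionLoop(
//     () => faceapi
//       .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
//       .withFaceLandmarks()
//       .withFaceExpressions(),
//     (predictions) => { text.innerText = prediction_string(predictions[0]); },
//     100
//   );
// });
```

The trade-off is that the effective update rate becomes "detection time plus delay" rather than a fixed 100 ms, which keeps the page responsive when detection is slow.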

Testing it Out

Now run your app with Web Server for Chrome (or any other web server of your choice). For Web Server for Chrome, go to http://127.0.0.1:8887 to see your app in action.

What’s Next?

Now that we’ve seen how easy it is to predict human facial expressions using face-api.js, can we do more with it? In the next article, we’ll predict someone’s gender and age in the browser.