Here we look at setting up the UI, selecting the correct camera, starting an image capture session, and getting speed updates.

This is the sixth article in a series on how to build a real-time hazard detection system using Android and TensorFlow Lite. In the previous entries, we prepared a trained network model for use in Android, created a project that uses TensorFlow Lite, and worked on other components of the solution. Until now, however, development has used static images.

In this installment, we switch from static images to the live feed from the camera. Most of the code we have written works without modification. If you’ve followed along with the previous articles, you should already have added the permissions that allow the application to access the camera:

```xml
<uses-permission android:name="android.permission.CAMERA" />
<uses-feature android:name="android.hardware.camera" />
```

Setting Up the UI

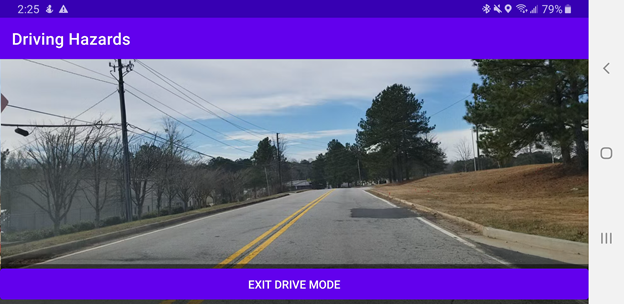

The UI for this part of the project is built within the fullscreen activity that Android Studio generated. The layout contains two views. A TextureView displays the video from the camera, and InfoOverlayView, a view created in an earlier article of this series, renders highlights on top of the video feed.

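```xml
<androidx.constraintlayout.widget.ConstraintLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    android:id="@+id/fullscreen_content"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:keepScreenOn="true">

    <!-- Displays the live camera preview -->
    <TextureView
        android:id="@+id/camera_preview"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

    <!-- Custom view from an earlier article; draws hazard highlights over the video -->
    <net.j2i.drivinghazards.InfoOverlayView
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```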

The TextureView does not display a video feed on its own; we must write code that feeds it images from the camera. Each time the TextureView is updated, we can retrieve a Bitmap of the displayed frame and pass it to the Detector. When the TextureView is ready to display content, it notifies its SurfaceTextureListener, so we implement a SurfaceTextureListener that selects and opens the camera. The interface looks like the following.

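```kotlin
// Kotlin view of the Java interface android.view.TextureView.SurfaceTextureListener
interface SurfaceTextureListener {
    // The TextureView's SurfaceTexture is ready for use
    fun onSurfaceTextureAvailable(surface: SurfaceTexture, width: Int, height: Int)

    // The SurfaceTexture's buffer size changed
    fun onSurfaceTextureSizeChanged(surface: SurfaceTexture, width: Int, height: Int)

    // The SurfaceTexture is about to be destroyed
    fun onSurfaceTextureDestroyed(surface: SurfaceTexture): Boolean

    // A new frame has been drawn to the SurfaceTexture
    fun onSurfaceTextureUpdated(surface: SurfaceTexture)
}
```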

Of these, onSurfaceTextureAvailable and onSurfaceTextureUpdated matter most: we open the camera in onSurfaceTextureAvailable and receive new frames in onSurfaceTextureUpdated.

Selecting the Correct Camera

In this simplified implementation of onSurfaceTextureAvailable, the application gets the list of camera IDs and checks them one at a time until it finds a camera that is not front facing. Many devices expose only two cameras, one front facing and one back facing. Some devices also support an external camera connected over USB. To prefer an external camera over the built-in ones on such a device, the selection logic could instead look for a camera whose LENS_FACING value is LENS_FACING_EXTERNAL. openCamera is a function defined in our own code; we describe it in a moment.

```kotlin
override fun onSurfaceTextureAvailable(
    surface: SurfaceTexture,
    width: Int,
    height: Int
) {
    val cm = getSystemService(CAMERA_SERVICE) as CameraManager
    for (cameraID in cm.cameraIdList) {
        val characteristics = cm.getCameraCharacteristics(cameraID)
        if (characteristics.get(CameraCharacteristics.LENS_FACING) ==
            CameraCharacteristics.LENS_FACING_FRONT
        ) {
            continue // Skip the front-facing camera
        }
        // Use the first camera that is not front facing
        mCameraID = cameraID
        openCamera()
        return
    }
}
```
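The selection rule, including the external-camera variant mentioned above, can be pulled out into plain Kotlin so it can be tested off-device. This is a minimal sketch, not the article's code: LensFacing, CameraInfo, and selectCameraId are hypothetical names standing in for the CameraCharacteristics.LENS_FACING values (LENS_FACING_FRONT, LENS_FACING_BACK, LENS_FACING_EXTERNAL) read from each entry of cameraIdList.

```kotlin
// Hypothetical stand-in for the CameraCharacteristics.LENS_FACING values
enum class LensFacing { FRONT, BACK, EXTERNAL }

// Hypothetical pairing of a camera ID with its lens-facing value (null if unreported)
data class CameraInfo(val id: String, val facing: LensFacing?)

/**
 * Returns the ID of the camera to open, or null if only front cameras exist.
 * With preferExternal = true, an external (e.g. USB) camera wins if one is present;
 * otherwise the first camera that is not front facing is chosen, mirroring the
 * loop in onSurfaceTextureAvailable.
 */
fun selectCameraId(cameras: List<CameraInfo>, preferExternal: Boolean = false): String? {
    if (preferExternal) {
        cameras.firstOrNull { it.facing == LensFacing.EXTERNAL }?.let { return it.id }
    }
    return cameras.firstOrNull { it.facing != LensFacing.FRONT }?.id
}
```

On a device, the list would be built by mapping each ID in `cm.cameraIdList` to its `LENS_FACING` characteristic; keeping the rule separate from the Android calls makes the preference easy to change and verify.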

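Within openCamera, we use the CameraManager to open the selected hardware camera. Opening a camera is asynchronous: the CameraDevice is delivered to the CameraDevice.StateCallback passed to openCamera (mCameraStateCallback), so the rest of the preview setup must run after its onOpened method has stored the device in mCameraDevice. There, the SurfaceTexture of the TextureView from the UI layout is given a buffer size, and we build the capture request for the preview, setting its target surface and orientation.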

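```kotlin
val manager = getSystemService(CAMERA_SERVICE) as CameraManager
// openCamera() is asynchronous. The CameraDevice arrives in
// mCameraStateCallback.onOpened(), so the code below must run there
// (after assigning mCameraDevice), not immediately after this call.
manager.openCamera(mCameraID!!, mCameraStateCallback!!, mBackgroundHandler)

// --- inside mCameraStateCallback.onOpened(camera), after mCameraDevice = camera ---
val texture = camera_preview!!.surfaceTexture
// Size the texture's buffers to the chosen preview resolution
texture!!.setDefaultBufferSize(previewSize!!.width, previewSize!!.height)
val previewSurface = Surface(texture)

mPreviewCaptureRequestBuilder =
    mCameraDevice!!.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW)
// Note: JPEG_ORIENTATION affects JPEG still captures, not the on-screen preview
mPreviewCaptureRequestBuilder!!.set(CaptureRequest.JPEG_ORIENTATION, mCameraOrientation)
mPreviewCaptureRequestBuilder!!.addTarget(previewSurface)
```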
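The code above relies on a previewSize property whose selection this article does not show. One common approach, sketched here as an assumption rather than the author's method, is to read the camera's supported sizes for a SurfaceTexture (via the `SCALER_STREAM_CONFIGURATION_MAP` characteristic and `getOutputSizes(SurfaceTexture::class.java)`) and pick the largest one that fits the view with the desired aspect ratio. PreviewSize and choosePreviewSize are hypothetical; on a device you would map `android.util.Size` values into them or adapt the function to take `Size` directly.

```kotlin
// Hypothetical stand-in for android.util.Size
data class PreviewSize(val width: Int, val height: Int) {
    val area: Long get() = width.toLong() * height
}

/**
 * Picks the largest supported size that fits within maxWidth x maxHeight and has
 * the same aspect ratio as target. Falls back to the largest size that fits, then
 * to the smallest supported size overall.
 */
fun choosePreviewSize(
    supported: List<PreviewSize>,
    maxWidth: Int,
    maxHeight: Int,
    target: PreviewSize
): PreviewSize {
    val fits = supported.filter { it.width <= maxWidth && it.height <= maxHeight }
    // Compare aspect ratios by cross-multiplying to avoid floating-point error
    val sameRatio = fits.filter {
        it.width.toLong() * target.height == it.height.toLong() * target.width
    }
    return sameRatio.maxByOrNull { it.area }
        ?: fits.maxByOrNull { it.area }
        ?: supported.minByOrNull { it.area }
        ?: throw IllegalArgumentException("No supported preview sizes")
}
```

Camera output sizes are usually listed in the sensor's landscape orientation, so when the display is in portrait, the view's width and height may need to be swapped before calling a function like this.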

The Image Capture Session

With the capture request configured, we can start the capture session. Once the session is configured, a repeating request tells the camera to keep producing preview frames.

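```kotlin
mCameraDevice!!.createCaptureSession(
    listOf(previewSurface),
    object : CameraCaptureSession.StateCallback() {
        override fun onConfigured(session: CameraCaptureSession) {
            // The camera may have been closed while the session was configuring
            if (mCameraDevice == null) return
            mPreviewCaptureRequest = mPreviewCaptureRequestBuilder!!.build()
            mCameraCaptureSession = session
            // Keep delivering preview frames until the request is stopped
            mCameraCaptureSession!!.setRepeatingRequest(
                mPreviewCaptureRequest!!,
                mSessionCaptureCallback,
                mBackgroundHandler
            )
        }

        override fun onConfigureFailed(session: CameraCaptureSession) {
            // Required override of the abstract StateCallback; log or notify the user here
        }
    },
    null
)
```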

Each time a new frame reaches the TextureView, the SurfaceTextureListener declared earlier is notified through its onSurfaceTextureUpdated method. With just a few lines of code, we can obtain a Bitmap of that frame and pass it to the Detector so it can look for hazards.

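```kotlin
override fun onSurfaceTextureUpdated(surface: SurfaceTexture) {
    // Allocate a bitmap the size of the view and copy the current frame into it
    val bitmap = Bitmap.createBitmap(
        camera_preview!!.width,
        camera_preview!!.height,
        Bitmap.Config.ARGB_8888
    )
    camera_preview!!.getBitmap(bitmap)
    // Pass the bitmap to the Detector here, using the detection method
    // written in the earlier articles of this series
}
```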
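onSurfaceTextureUpdated runs for every preview frame, often around 30 times a second, while running the TensorFlow Lite model on a frame may take longer than that. Each ARGB_8888 bitmap also costs 4 bytes per pixel, roughly 7.9 MiB for a 1080x1920 view. One way to keep up, sketched here as an assumption rather than part of the original design, is to drop frames while a detection is still in progress. FrameGate is a hypothetical helper built on `AtomicBoolean.compareAndSet`.

```kotlin
import java.util.concurrent.atomic.AtomicBoolean

/**
 * Hypothetical helper that admits only one frame to the detector at a time.
 * Frames arriving while a detection is still running are skipped, so the
 * preview keeps its frame rate even when inference is slower.
 */
class FrameGate {
    private val busy = AtomicBoolean(false)

    /** Returns true if the caller may process this frame; false means skip it. */
    fun tryEnter(): Boolean = busy.compareAndSet(false, true)

    /** Call once processing of an admitted frame has finished. */
    fun exit() = busy.set(false)
}
```

In onSurfaceTextureUpdated, you would return early when `tryEnter()` returns false, before allocating the bitmap, and call `exit()` when the detector has finished with the frame, typically on the background thread that ran detection.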

Getting Speed Updates

In an earlier part of this series, we mentioned that we do not want the detector to send alerts for hazards detected while the vehicle is not moving. For that, the Detector instance needs regular updates on the current speed. In the following code, we declare a LocationListener whose only job is to update the detector's speed, then ask the best available location provider to send location updates to that listener. Requesting location updates also requires the ACCESS_FINE_LOCATION permission, declared in the manifest and granted at runtime.

```kotlin
fun requestLocation() {
    // Only the speed is used; Location.speed is reported in meters per second
    locationListener = LocationListener { location ->
        if (location.hasSpeed()) {
            detector.currentSpeedMPS = location.speed
        }
    }

    val locationManager = getSystemService(LOCATION_SERVICE) as LocationManager
    // Ask for the best enabled provider offering fine accuracy (normally GPS)
    val criteria = Criteria()
    criteria.accuracy = Criteria.ACCURACY_FINE
    val providerName = locationManager.getBestProvider(criteria, true) ?: return

    if (locationManager.isProviderEnabled(providerName)) {
        locationManager.requestLocationUpdates(
            providerName,
            1000L, // minimum time between updates, in milliseconds
            0f,    // minimum distance in meters; a non-zero value would stop updates
                   // while the vehicle is stationary, leaving a stale speed behind
            locationListener!!
        )
    }
}
```
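The speed check itself lives in the Detector from an earlier article and is not shown here. As a hedged illustration of the idea, the sketch below suppresses alerts unless the vehicle is moving faster than a small threshold. SpeedGate, its 1.0 m/s default threshold, shouldAlert, and mpsToKmh are hypothetical names and values; a threshold slightly above zero is used because GPS speed readings can be slightly non-zero even when the vehicle is stopped.

```kotlin
/**
 * Hypothetical sketch of speed-gated alerts. The speed is stored in meters per
 * second, matching Location.speed and the detector's currentSpeedMPS property.
 */
class SpeedGate(private val minMovingSpeedMps: Float = 1.0f) {
    // Written by the location callback, read by the detection thread
    @Volatile
    var currentSpeedMPS: Float = 0f

    fun isMoving(): Boolean = currentSpeedMPS >= minMovingSpeedMps

    /** Report a hazard only when one was detected and the vehicle is moving. */
    fun shouldAlert(hazardDetected: Boolean): Boolean = hazardDetected && isMoving()
}

/** Converts meters per second to kilometers per hour (1 m/s = 3.6 km/h). */
fun mpsToKmh(mps: Float): Float = mps * 3.6f
```

For example, 13.9 m/s is about 50 km/h, well above the threshold, while a parked car reporting 0.4 m/s of GPS jitter would not trigger alerts.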

Conclusion

With this in place, we now have a working hazard detector. Developing the code for the hazard detector took relatively little effort. The detector could be improved by expanding the set of hazards it recognizes. Doing so would require a large, labeled dataset of photographs of those hazards (see Training Your Own TensorFlow Neural Network For Android).

Creating this dataset takes more effort, because you must label the areas of each photograph that contain objects of interest. You have probably contributed to this kind of effort without knowing it, for example by completing a task that asks you to identify all of the crosswalks, traffic signals, or other objects in a picture of a road. Completing those tests helps build large labeled datasets for developing self-driving cars and car safety systems.

With this project complete, you’ve built an application that contains some of the functionality you would find in a self-driving vehicle!