Here we will learn how to use AI models to detect the shape of faces.

Introduction

Apps like Snapchat offer an amazing variety of face filters and lenses that let you overlay interesting things on your photos and videos. If you’ve ever given yourself virtual dog ears or a party hat, you know how much fun it can be!

Have you wondered how you’d create these kinds of filters from scratch? Well, now’s your chance to learn, all within your web browser! In this series, we’re going to see how to create Snapchat-style filters in the browser, train an AI model to understand facial expressions, and do even more using TensorFlow.js and face tracking.

You are welcome to download the demo of this project. You may need to enable WebGL in your web browser for performance. You can also download the code and files for this series.

We are assuming that you are familiar with JavaScript and HTML and have at least a basic understanding of neural networks. If you are new to TensorFlow.js, we recommend that you check out this guide: Getting Started with Deep Learning in Your Browser Using TensorFlow.js.

If you would like to see more of what is possible in the web browser with TensorFlow.js, check out these AI series: Computer Vision with TensorFlow.js and Chatbots using TensorFlow.js.

The first step to creating a face filter from scratch is to detect and locate faces in images, so that is where we will start.

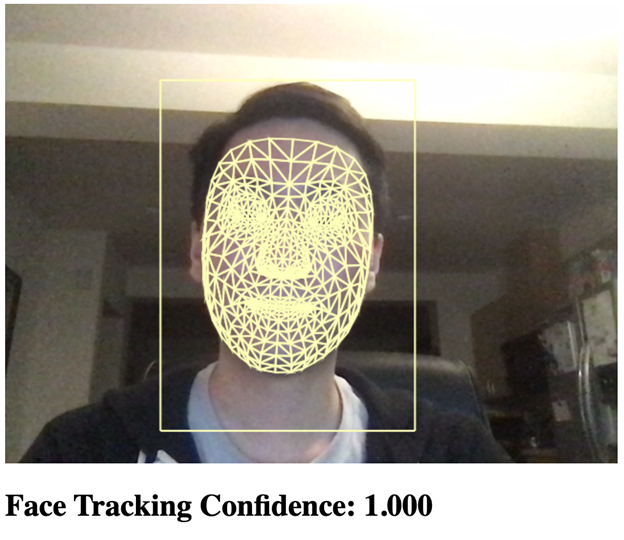

Face tracking can be done with TensorFlow.js and the Face Landmarks Detection model, which gives us 468 different key points, in 3D, for each face in an image or video frame, within a couple of milliseconds. What makes this especially great is that the model runs inside a web page, so you can track faces on mobile devices too, using the same code.
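To make the shape of that output concrete, here is a minimal sketch. It assumes each face arrives as an array of `[ x, y, z ]` triples, which is how this article later reads `face.scaledMesh`. The `meshCentroid` helper and the sample points are hypothetical and only stand in for real model output.

```javascript
// Hypothetical helper: average all key points of one face.
// Assumes `mesh` is a non-empty array of [ x, y, z ] triples.
function meshCentroid( mesh ) {
    const sum = [ 0, 0, 0 ];
    for( const [ x, y, z ] of mesh ) {
        sum[ 0 ] += x;
        sum[ 1 ] += y;
        sum[ 2 ] += z;
    }
    return sum.map( total => total / mesh.length );
}

// Made-up sample data standing in for real model output
const sampleMesh = [
    [ 100, 200, -10 ],
    [ 120, 220, 0 ],
    [ 140, 240, 10 ],
];
console.log( meshCentroid( sampleMesh ) ); // [ 120, 220, 0 ]
```

The x and y values are in pixels of the input frame, so a centroid like this could serve as a rough anchor point for placing an overlay.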

Let’s set up a project to load the model and run face tracking on a webcam video feed.

Starting Point

Here is a starter template of the web page we will use for face tracking.

This template includes:

- The TensorFlow.js libraries required for this project

- A reference face mesh index set in triangles.js (included in the project code)

- A canvas element for the rendered output

- A hidden video element for the webcam

- A status text element and the setText utility function

- Canvas drawLine and drawTriangle utility functions

<html>
    <head>
        <title>Real-Time Face Tracking in the Browser with TensorFlow.js</title>
        <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.4.0/dist/tf.min.js"></script>
        <script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/face-landmarks-detection@0.0.1/dist/face-landmarks-detection.js"></script>
        <script src="web/triangles.js"></script>
    </head>
    <body>
        <canvas id="output"></canvas>
        <video id="webcam" playsinline style="
            visibility: hidden;
            width: auto;
            height: auto;
            ">
        </video>
        <h1 id="status">Loading...</h1>
        <script>
        function setText( text ) {
            document.getElementById( "status" ).innerText = text;
        }

        function drawLine( ctx, x1, y1, x2, y2 ) {
            ctx.beginPath();
            ctx.moveTo( x1, y1 );
            ctx.lineTo( x2, y2 );
            ctx.stroke();
        }

        function drawTriangle( ctx, x1, y1, x2, y2, x3, y3 ) {
            ctx.beginPath();
            ctx.moveTo( x1, y1 );
            ctx.lineTo( x2, y2 );
            ctx.lineTo( x3, y3 );
            ctx.lineTo( x1, y1 );
            ctx.stroke();
        }

        (async () => {
        })();
        </script>
    </body>
</html>

Using the HTML5 Webcam API with TensorFlow.js

Starting the webcam takes only a few lines of JavaScript. Here is a utility function that requests access from the user and starts the webcam:

async function setupWebcam() {
    return new Promise( ( resolve, reject ) => {
        const webcamElement = document.getElementById( "webcam" );
        const navigatorAny = navigator;
        // Fall back to vendor-prefixed versions of getUserMedia
        navigator.getUserMedia = navigator.getUserMedia ||
            navigatorAny.webkitGetUserMedia || navigatorAny.mozGetUserMedia ||
            navigatorAny.msGetUserMedia;
        if( navigator.getUserMedia ) {
            navigator.getUserMedia( { video: true },
                stream => {
                    webcamElement.srcObject = stream;
                    // Resolve once the first video frame is available
                    webcamElement.addEventListener( "loadeddata", resolve, false );
                },
                // Pass the error along so the caller can see why access failed
                error => reject( error ) );
        }
        else {
            reject( new Error( "getUserMedia is not supported in this browser" ) );
        }
    });
}
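The callback-style `navigator.getUserMedia` used above is a legacy API that some current browsers no longer expose. As a hedged alternative, the sketch below uses the promise-based `navigator.mediaDevices.getUserMedia`, which browsers only make available on secure pages (HTTPS or localhost). The names `setupWebcamModern` and `buildVideoConstraints` are hypothetical and not part of the original project code.

```javascript
// Builds the constraints object passed to getUserMedia.
// "user" asks for the front-facing camera on phones; audio is not needed here.
function buildVideoConstraints( facingMode = "user" ) {
    return { audio: false, video: { facingMode } };
}

// Promise-based variant of setupWebcam (hypothetical name).
// The video element and the mediaDevices object are parameters with
// browser defaults, so in a page it can be called with no arguments.
async function setupWebcamModern(
    video = document.getElementById( "webcam" ),
    mediaDevices = navigator.mediaDevices
) {
    if( !mediaDevices || !mediaDevices.getUserMedia ) {
        throw new Error( "getUserMedia is not available in this browser" );
    }
    const stream = await mediaDevices.getUserMedia( buildVideoConstraints() );
    video.srcObject = stream;
    // Wait for the first frame, like the callback version does
    await new Promise( resolve => {
        video.addEventListener( "loadeddata", resolve, { once: true } );
    });
    return video;
}
```

If you swap it in, `await setupWebcamModern();` takes the place of `await setupWebcam();` and the rest of the page stays the same.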

We can call this setupWebcam function in an async block at the bottom of our code and make it play the webcam video after it loads.

(async () => {
    await setupWebcam();
    const video = document.getElementById( "webcam" );
    video.play();
})();

Next, let’s set up the output canvas and prepare to draw lines and triangles for the bounding box and face wireframe.

The canvas context will be used to output the face tracking results, so we store it in a global variable outside of the async block. Note that we mirror the canvas horizontally so that the output behaves more naturally, like a real mirror.

let output = null;

(async () => {
    await setupWebcam();
    const video = document.getElementById( "webcam" );
    video.play();
    let videoWidth = video.videoWidth;
    let videoHeight = video.videoHeight;
    video.width = videoWidth;
    video.height = videoHeight;

    let canvas = document.getElementById( "output" );
    canvas.width = video.width;
    canvas.height = video.height;

    output = canvas.getContext( "2d" );
    output.translate( canvas.width, 0 );
    output.scale( -1, 1 );
    output.fillStyle = "#fdffb6";
    output.strokeStyle = "#fdffb6";
    output.lineWidth = 2;
})();
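To see why those two transform calls produce a mirror image, note that `translate( canvas.width, 0 )` followed by `scale( -1, 1 )` sends every x coordinate to `canvas.width - x` and leaves y unchanged. The sketch below reproduces that mapping with a hypothetical `mirrorX` helper; the 640-pixel width is an assumed example value.

```javascript
// Where a point drawn at `x` ends up after translate( width, 0 ) and
// scale( -1, 1 ): the x value is negated, then shifted right by the width.
function mirrorX( x, canvasWidth ) {
    return canvasWidth - x;
}

console.log( mirrorX( 0, 640 ) );   // 640
console.log( mirrorX( 100, 640 ) ); // 540
console.log( mirrorX( 640, 640 ) ); // 0
```

Because the transform lives on the canvas context, both the webcam frame and the tracking overlay drawn later pass through the same flip and stay aligned.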

Let’s Track Some Faces

Now we’re ready! All we need is to load the TensorFlow Face Landmarks Detection model and run it on our webcam frames to show the results.

First, we need a global model variable to store the loaded model:

let model = null;

Then we can load the model at the end of the async block and set the status text to indicate that our face tracking app is ready:

model = await faceLandmarksDetection.load(
    faceLandmarksDetection.SupportedPackages.mediapipeFacemesh
);
setText( "Loaded!" );
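If you only expect one person in front of the camera, it may help to limit the number of faces the model looks for. The sketch below is an assumption, not part of the original project: it presumes that `faceLandmarksDetection.load` accepts an optional second configuration argument with a `maxFaces` option, so check the package documentation for the version you load. `DEMO_MODEL_CONFIG` and `makeModelConfig` are hypothetical names.

```javascript
// Hypothetical defaults for this demo. The option name `maxFaces` is assumed
// to match the configuration object accepted by faceLandmarksDetection.load.
const DEMO_MODEL_CONFIG = { maxFaces: 1 };

// Merge caller overrides on top of the demo defaults
function makeModelConfig( overrides = {} ) {
    return { ...DEMO_MODEL_CONFIG, ...overrides };
}

console.log( makeModelConfig() );                  // { maxFaces: 1 }
console.log( makeModelConfig( { maxFaces: 3 } ) ); // { maxFaces: 3 }

// In the page, inside the async block (shown as a comment, not run here):
// model = await faceLandmarksDetection.load(
//     faceLandmarksDetection.SupportedPackages.mediapipeFacemesh,
//     makeModelConfig()
// );
```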

Now let’s create a function called trackFace that takes the webcam video frames, runs the face tracking model, copies the webcam image to the output canvas, and then draws a bounding box around the face and wireframe mesh triangles on top of the face.

async function trackFace() {
    const video = document.getElementById( "webcam" );
    // Run the model on the current webcam frame
    const faces = await model.estimateFaces( {
        input: video,
        returnTensors: false,
        flipHorizontal: false,
    });
    // Copy the webcam frame to the output canvas
    output.drawImage(
        video,
        0, 0, video.width, video.height,
        0, 0, video.width, video.height
    );

    faces.forEach( face => {
        setText( `Face Tracking Confidence: ${face.faceInViewConfidence.toFixed( 3 )}` );

        // Draw the bounding box
        const x1 = face.boundingBox.topLeft[ 0 ];
        const y1 = face.boundingBox.topLeft[ 1 ];
        const x2 = face.boundingBox.bottomRight[ 0 ];
        const y2 = face.boundingBox.bottomRight[ 1 ];
        const bWidth = x2 - x1;
        const bHeight = y2 - y1;
        drawLine( output, x1, y1, x2, y1 );
        drawLine( output, x2, y1, x2, y2 );
        drawLine( output, x1, y2, x2, y2 );
        drawLine( output, x1, y1, x1, y2 );

        // Draw the wireframe mesh; every three indices form one triangle
        const keypoints = face.scaledMesh;
        for( let i = 0; i < FaceTriangles.length / 3; i++ ) {
            let pointA = keypoints[ FaceTriangles[ i * 3 ] ];
            let pointB = keypoints[ FaceTriangles[ i * 3 + 1 ] ];
            let pointC = keypoints[ FaceTriangles[ i * 3 + 2 ] ];
            drawTriangle( output, pointA[ 0 ], pointA[ 1 ], pointB[ 0 ], pointB[ 1 ], pointC[ 0 ], pointC[ 1 ] );
        }
    });

    // Schedule tracking for the next frame
    requestAnimationFrame( trackFace );
}
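The wireframe loop above relies on a flat index list in which every three consecutive entries name the corners of one triangle. As a minimal sketch of that lookup, separated from the drawing code, the hypothetical `meshTriangles` helper below groups such a list into triangles of `[ x, y ]` points. The sample points and indices are made up and much smaller than the real `FaceTriangles` data.

```javascript
// Groups a flat index list ( a0, b0, c0, a1, b1, c1, ... ) into triangles of
// [ x, y ] points, dropping the z coordinate of each key point.
function meshTriangles( keypoints, indices ) {
    const triangles = [];
    for( let i = 0; i + 2 < indices.length; i += 3 ) {
        triangles.push( [
            keypoints[ indices[ i ] ].slice( 0, 2 ),
            keypoints[ indices[ i + 1 ] ].slice( 0, 2 ),
            keypoints[ indices[ i + 2 ] ].slice( 0, 2 ),
        ] );
    }
    return triangles;
}

// Made-up data: four corners of a square split into two triangles
const samplePoints = [ [ 0, 0, 0 ], [ 10, 0, 1 ], [ 10, 10, 2 ], [ 0, 10, 3 ] ];
const sampleIndices = [ 0, 1, 2, 0, 2, 3 ];
console.log( meshTriangles( samplePoints, sampleIndices ).length ); // 2
```

Sharing points through indices is what keeps the mesh compact: a key point that belongs to several triangles is stored once and referenced many times.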

Finally, we can kick off the first frame for tracking by calling this function at the end of our async block:

(async () => {
    ...
    trackFace();
})();

Finish Line

The full code should look like this:

<html>
    <head>
        <title>Real-Time Face Tracking in the Browser with TensorFlow.js</title>
        <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.4.0/dist/tf.min.js"></script>
        <script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/face-landmarks-detection@0.0.1/dist/face-landmarks-detection.js"></script>
        <script src="web/triangles.js"></script>
    </head>
    <body>
        <canvas id="output"></canvas>
        <video id="webcam" playsinline style="
            visibility: hidden;
            width: auto;
            height: auto;
            ">
        </video>
        <h1 id="status">Loading...</h1>
        <script>
        function setText( text ) {
            document.getElementById( "status" ).innerText = text;
        }

        function drawLine( ctx, x1, y1, x2, y2 ) {
            ctx.beginPath();
            ctx.moveTo( x1, y1 );
            ctx.lineTo( x2, y2 );
            ctx.stroke();
        }

        function drawTriangle( ctx, x1, y1, x2, y2, x3, y3 ) {
            ctx.beginPath();
            ctx.moveTo( x1, y1 );
            ctx.lineTo( x2, y2 );
            ctx.lineTo( x3, y3 );
            ctx.lineTo( x1, y1 );
            ctx.stroke();
        }

        let output = null;
        let model = null;

        async function setupWebcam() {
            return new Promise( ( resolve, reject ) => {
                const webcamElement = document.getElementById( "webcam" );
                const navigatorAny = navigator;
                // Fall back to vendor-prefixed versions of getUserMedia
                navigator.getUserMedia = navigator.getUserMedia ||
                    navigatorAny.webkitGetUserMedia || navigatorAny.mozGetUserMedia ||
                    navigatorAny.msGetUserMedia;
                if( navigator.getUserMedia ) {
                    navigator.getUserMedia( { video: true },
                        stream => {
                            webcamElement.srcObject = stream;
                            // Resolve once the first video frame is available
                            webcamElement.addEventListener( "loadeddata", resolve, false );
                        },
                        // Pass the error along so the caller can see why access failed
                        error => reject( error ) );
                }
                else {
                    reject( new Error( "getUserMedia is not supported in this browser" ) );
                }
            });
        }

        async function trackFace() {
            const video = document.getElementById( "webcam" );
            // Run the model on the current webcam frame
            const faces = await model.estimateFaces( {
                input: video,
                returnTensors: false,
                flipHorizontal: false,
            });
            // Copy the webcam frame to the output canvas
            output.drawImage(
                video,
                0, 0, video.width, video.height,
                0, 0, video.width, video.height
            );

            faces.forEach( face => {
                setText( `Face Tracking Confidence: ${face.faceInViewConfidence.toFixed( 3 )}` );

                // Draw the bounding box
                const x1 = face.boundingBox.topLeft[ 0 ];
                const y1 = face.boundingBox.topLeft[ 1 ];
                const x2 = face.boundingBox.bottomRight[ 0 ];
                const y2 = face.boundingBox.bottomRight[ 1 ];
                const bWidth = x2 - x1;
                const bHeight = y2 - y1;
                drawLine( output, x1, y1, x2, y1 );
                drawLine( output, x2, y1, x2, y2 );
                drawLine( output, x1, y2, x2, y2 );
                drawLine( output, x1, y1, x1, y2 );

                // Draw the wireframe mesh; every three indices form one triangle
                const keypoints = face.scaledMesh;
                for( let i = 0; i < FaceTriangles.length / 3; i++ ) {
                    let pointA = keypoints[ FaceTriangles[ i * 3 ] ];
                    let pointB = keypoints[ FaceTriangles[ i * 3 + 1 ] ];
                    let pointC = keypoints[ FaceTriangles[ i * 3 + 2 ] ];
                    drawTriangle( output, pointA[ 0 ], pointA[ 1 ], pointB[ 0 ], pointB[ 1 ], pointC[ 0 ], pointC[ 1 ] );
                }
            });

            // Schedule tracking for the next frame
            requestAnimationFrame( trackFace );
        }

        (async () => {
            await setupWebcam();
            const video = document.getElementById( "webcam" );
            video.play();
            let videoWidth = video.videoWidth;
            let videoHeight = video.videoHeight;
            video.width = videoWidth;
            video.height = videoHeight;

            let canvas = document.getElementById( "output" );
            canvas.width = video.width;
            canvas.height = video.height;

            output = canvas.getContext( "2d" );
            // Mirror the canvas horizontally
            output.translate( canvas.width, 0 );
            output.scale( -1, 1 );
            output.fillStyle = "#fdffb6";
            output.strokeStyle = "#fdffb6";
            output.lineWidth = 2;

            // Load Face Landmarks Detection
            model = await faceLandmarksDetection.load(
                faceLandmarksDetection.SupportedPackages.mediapipeFacemesh
            );
            setText( "Loaded!" );

            trackFace();
        })();
        </script>
    </body>
</html>

What’s Next? Can Face Tracking Do More?

By combining the TensorFlow Face Landmarks Detection model with the webcam video, we were able to track faces in real time right inside the browser. Our face tracking code also works on images, and the key points could tell us more than we might first expect. Maybe we should try it on a dataset of faces, like FER+ Facial Expression Recognition?
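Before key points can be compared across faces or images, it usually helps to remove the effect of where the face sits in the frame and how large it is. The sketch below shows one possible way to do that, by rescaling the x and y values into the 0 to 1 range of the face's own extent. It is an illustrative assumption, not the preprocessing used later in this series, and `normalizeKeypoints` is a hypothetical name.

```javascript
// Rescales key points so the face's own extent spans 0..1 on both axes.
// Assumes `keypoints` is a non-empty array of [ x, y, z ] triples; z is dropped.
function normalizeKeypoints( keypoints ) {
    const xs = keypoints.map( point => point[ 0 ] );
    const ys = keypoints.map( point => point[ 1 ] );
    const minX = Math.min( ...xs );
    const minY = Math.min( ...ys );
    // Fall back to 1 for a zero-sized extent to avoid dividing by zero
    const width = ( Math.max( ...xs ) - minX ) || 1;
    const height = ( Math.max( ...ys ) - minY ) || 1;
    return keypoints.map( ( [ x, y ] ) => [ ( x - minX ) / width, ( y - minY ) / height ] );
}

// Made-up sample points
console.log( normalizeKeypoints( [ [ 10, 20, 0 ], [ 30, 60, 0 ], [ 20, 40, 0 ] ] ) );
// [ [ 0, 0 ], [ 1, 1 ], [ 0.5, 0.5 ] ]
```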

In the next article of this series, we’ll use deep learning on the tracked faces of the FER+ dataset and attempt to accurately predict a person’s emotion from facial points in the browser with TensorFlow.js.