Here we evaluate the trained VGG16 model on test images and on images taken with a camera.

Introduction

The availability of datasets like DeepFashion opens up new possibilities for the fashion industry. In this series of articles, we’ll showcase an AI-powered deep learning system that can revolutionize the fashion design industry by helping us better understand customers’ needs.

In this project, we’ll use:

- Jupyter Notebook as the IDE

- Libraries:

- A custom subset of the DeepFashion dataset — relatively small to reduce the computational and memory overhead

We are assuming that you are familiar with the concepts of deep learning, as well as with Jupyter Notebooks and TensorFlow. If you’re new to Jupyter Notebooks, start with this tutorial. You are welcome to download the project code.

In the previous article, we trained the VGG16 model and evaluated its performance on the test image set. In this article, we’ll evaluate our trained network on some test images, as well as on images taken with a camera, to verify the model’s robustness when detecting real clothes in images that may contain more than one clothing category.

Evaluating on Test Images

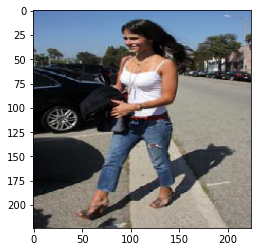

Let’s pass the network an image taken from the Jeans category and see whether it can classify the clothing item correctly. Note that the selected image is hard to classify because it contains more than one clothing type: for example, Jeans and Top. The image is loaded at 224×224 pixels with load_img and then processed with preprocess_input so that it matches the input format the network expects.

import numpy as np

import matplotlib.pyplot as plt

from keras.preprocessing import image

from keras.applications.vgg16 import preprocess_input

img_path = r'C:\Users\abdul\Desktop\ContentLab\P2\DeepFashion\Test\Jeans\img_00000052.jpg'

img = image.load_img(img_path, target_size=(224,224))

x = image.img_to_array(img)

x = np.expand_dims(x, axis=0)

x = preprocess_input(x)

plt.imshow(img)
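For reference, the resizing in the snippet above is done by load_img through target_size. The VGG16 version of preprocess_input, as the Keras documentation describes it, converts the channels from RGB to BGR and subtracts the ImageNet per-channel means without rescaling. The NumPy sketch below mimics that behavior for illustration only: the name vgg16_style_preprocess is hypothetical, and the mean values are the commonly cited ImageNet BGR means rather than something taken from this project.

```python
import numpy as np

# Commonly cited ImageNet channel means in BGR order (an assumption for illustration).
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def vgg16_style_preprocess(batch_rgb):
    """Mimic VGG16-style preprocessing on a batch shaped (N, H, W, 3) in RGB order."""
    batch = np.asarray(batch_rgb, dtype=np.float32)
    batch_bgr = batch[..., ::-1]             # reorder channels RGB -> BGR
    return batch_bgr - IMAGENET_BGR_MEAN     # zero-center each channel, no rescaling

# A dummy 224x224 gray image stands in for a real photo.
dummy = np.full((1, 224, 224, 3), 128, dtype=np.uint8)
out = vgg16_style_preprocess(dummy)
print(out.shape, out[0, 0, 0])
```

In the article's own code, the real preprocess_input should still be used; the sketch only makes the transformation visible.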

After selecting the image, we’ll pass it through the model and get the output (prediction).

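def get_class_string_from_index(index):

    # Look up the class name that the generator assigned to this index

    for class_string, class_index in test_generator.class_indices.items():

        if class_index == index:

            return class_string

# Run the preprocessed image through the trained model (full_model, also used later in this article)

c = full_model.predict(x)

# argmax returns an array with one entry per image; take the single entry as an int

Predicted_Class = int(np.argmax(c, axis=1)[0])

print('Predicted_Class is:', Predicted_Class)

true_index = 5

print("True label: " + get_class_string_from_index(true_index))

print("Predicted label: " + get_class_string_from_index(Predicted_Class))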

As seen in the above image, the model has successfully recognized the category as "Jeans".
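Because the image contains more than one clothing type, it can be informative to look at the runner-up classes as well as the top prediction. The following is a minimal sketch, assuming a score vector like the one returned by the model and a name-to-index mapping like test_generator.class_indices; the helper name top_k_labels and the example values are hypothetical.

```python
import numpy as np

def top_k_labels(scores, class_indices, k=3):
    """Return the k most likely (label, score) pairs for one image."""
    index_to_class = {index: name for name, index in class_indices.items()}
    scores = np.asarray(scores).ravel()
    best = np.argsort(scores)[::-1][:k]      # indices sorted by descending score
    return [(index_to_class[int(i)], float(scores[i])) for i in best]

# Hypothetical three-class mapping and scores, for illustration only.
example_indices = {'Jeans': 0, 'Tee': 1, 'Top': 2}
example_scores = [[0.6, 0.1, 0.3]]
print(top_k_labels(example_scores, example_indices, k=2))
```

With the real objects, the call would presumably take the model output for the image and test_generator.class_indices as arguments.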

Calculating the Number of Misclassified Images

Let’s further investigate the model’s robustness in detecting clothing categories. To do this, we’ll draw a random batch of images from the test set, pass it through the model to predict their classes, and then count the misclassified images.

test_generator = test_datagen.flow_from_directory(test_dir, target_size=(224, 224), batch_size=3, class_mode='categorical')

X_test, y_test = next(test_generator)

X_test=X_test/255

preds = full_model.predict(X_test)

pred_labels = np.argmax(preds, axis=1)

true_labels = np.argmax(y_test, axis=1)

print (pred_labels)

print (true_labels)

As you can see above, we defined a batch size of three to avoid computer memory issues. This means the generator yields only three images per batch, and the network classifies them so that we can count the misclassified images among these three. You can increase the batch size as you see fit.

Now, let’s calculate the number of misclassified images.

mispred_img = X_test[pred_labels!=true_labels]

mispred_true = true_labels[pred_labels!=true_labels]

mispred_pred = pred_labels[pred_labels!=true_labels]

print ('number of misclassified images:', mispred_img.shape[0])
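A single batch of three images gives a very noisy estimate. One way to keep memory use low and still get a more stable count is to accumulate the result over several small batches. The sketch below assumes each batch is an (images, one_hot_labels) pair and that predict_fn returns one score vector per image; count_misclassified, the fake batches, and the fake model are all hypothetical stand-ins.

```python
import numpy as np

def count_misclassified(batches, predict_fn):
    """Return (misclassified, total) over an iterable of (images, one_hot_labels)."""
    wrong, total = 0, 0
    for images, one_hot in batches:
        pred = np.argmax(predict_fn(images), axis=1)
        true = np.argmax(one_hot, axis=1)
        wrong += int(np.sum(pred != true))
        total += len(true)
    return wrong, total

# Stand-in data: two batches of three blank "images" with one-hot labels,
# and a fake model that always predicts class 0.
fake_batches = [
    (np.zeros((3, 4, 4, 3)), np.eye(3)[[0, 1, 0]]),
    (np.zeros((3, 4, 4, 3)), np.eye(3)[[2, 0, 0]]),
]
fake_predict = lambda images: np.tile([0.8, 0.1, 0.1], (len(images), 1))

wrong, total = count_misclassified(fake_batches, fake_predict)
print('misclassified {} of {}'.format(wrong, total))
```

With the article's objects, the batches could come from repeated calls to next(test_generator), with the same scaling applied as above, and predict_fn could be full_model.predict.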

If misclassified images are found, let’s plot them using this function:

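def plot_img_results(array, true, pred, i, n=1):

    # Plot n images starting at index i, three per row, titled with true and predicted class

    ncols = 3

    # Integer division: plt.subplot needs whole numbers of rows and columns

    nrows = n // ncols + 1

    fig = plt.figure(figsize=(ncols*2, nrows*2), dpi=100)

    for j in range(n):

        index = j + i

        plt.subplot(nrows, ncols, j+1)

        plt.imshow(array[index])

        plt.title('true: {} pred: {}'.format(true[index], pred[index]))

        plt.axis('off')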

plot_img_results(mispred_img, mispred_true, mispred_pred, 0, len(mispred_img))

To see which class each class number refers to, run the following:

Classes[13]
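Classes is assumed to be a list of class names defined earlier in the series. If it is not available in the current session, an equivalent lookup can be built from the generator's class_indices dictionary. The sketch below uses a hypothetical helper name and a shortened example mapping.

```python
def build_index_to_class(class_indices):
    """Turn a {class_name: index} mapping into a list of names ordered by index."""
    ordered = sorted(class_indices.items(), key=lambda item: item[1])
    return [name for name, _ in ordered]

# Shortened, hypothetical mapping; with the real generator,
# pass test_generator.class_indices instead.
example_indices = {'Blouse': 1, 'Blazer': 0, 'Cardigan': 2}
classes = build_index_to_class(example_indices)
print(classes[1])
```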

Evaluating the Model Using a Specific Dataset

Now we’ll write code that selects any image from any dataset (training, testing, or validation) and shows the "true vs predicted class" results for it. To make the results easier to interpret, we’ll show the category name (for example, "Jeans") rather than a class number (for example, "5").

def get_class_string_from_index(index):

    for class_string, class_index in test_generator.class_indices.items():

        if class_index == index:

            return class_string

test_generator = test_datagen.flow_from_directory(test_dir, target_size=(224, 224), batch_size=7, class_mode='categorical')

X_test, y_test = next(test_generator)

X_test=X_test/255

image = X_test[2]

true_index = np.argmax(y_test[2])

plt.imshow(image)

plt.axis('off')

plt.show()

prediction_scores = full_model.predict(np.expand_dims(image, axis=0))

predicted_index = np.argmax(prediction_scores)

print("True label: " + get_class_string_from_index(true_index))

print("Predicted label: " + get_class_string_from_index(predicted_index))

Evaluating the Model Using Camera Images

In this part, we’ll investigate the model performance on images taken by a camera. We took 12 images of clothes placed on a bed, as well as of individuals wearing different types of clothes, and let the trained model classify them. To keep things interesting, we selected men’s clothes, because most of the training images were of women’s clothes. The clothes were not categorized. We just fed them to the network and let it figure out what category these clothes belonged to.

The network did well with the images of good quality (high contrast images that have not been flipped). Some images were assigned the correct classes, some were assigned a similar class, and others were labeled incorrectly.
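The loop for feeding a folder of camera photos to the network is not shown in the article. A minimal sketch of how it might look follows; classify_folder is a hypothetical helper, and load_fn and predict_fn are placeholders for whatever image loader and trained model are used.

```python
from pathlib import Path
import numpy as np

def classify_folder(folder, load_fn, predict_fn, classes,
                    extensions=('.jpg', '.jpeg', '.png')):
    """Classify every image file in `folder`; return {file_name: predicted_label}."""
    results = {}
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() not in extensions:
            continue                                     # skip non-image files
        batch = np.expand_dims(load_fn(path), axis=0)    # add the batch dimension
        scores = predict_fn(batch)
        results[path.name] = classes[int(np.argmax(scores))]
    return results
```

Here load_fn could wrap load_img and img_to_array with the same 224×224 target size and scaling used for the test images, predict_fn could be full_model.predict, and classes could be the list of category names ordered by class index.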

Improving the Network Performance

As we showed in previous sections, the network performance was quite good. However, it can be improved. Is it about data? Yes, it is: the original DeepFashion dataset is huge, and we only used a very small portion of it.

Let’s use data augmentation to increase the volume of the network training data. This is likely to improve the performance of the network when tested on new images of various types and of different quality. The goal of data augmentation is to enhance the generalization capability of the network. This goal is achieved by training the network on augmented images that cover many of the variations the trained network may encounter when tested on real images.

In Keras, data augmentation is easy to implement. You simply pass the required augmentation options to the ImageDataGenerator constructor: rotation, zooming, shifting, flipping, and so on. Our DataLoad function with the augmentation implemented would look as follows:

import os

from tensorflow.keras.preprocessing.image import ImageDataGenerator

batch_size = 3

def DataLoad(shape, preprocessing):

    '''Create the training and validation datasets for

    a given image shape.

    '''

    imgdatagen = ImageDataGenerator(

        preprocessing_function = preprocessing,

        rotation_range=10, width_shift_range=0.1, height_shift_range=0.1, shear_range=0.15, zoom_range=0.1,

        channel_shift_range=10., horizontal_flip=True,

        validation_split = 0.1,

    )

    height, width = shape

    train_dataset = imgdatagen.flow_from_directory(

        os.getcwd(),

        target_size = (height, width),

        classes = ['Blazer', 'Blouse', 'Cardigan', 'Dress', 'Jacket',

        'Jeans', 'Jumpsuit', 'Romper', 'Shorts', 'Skirts', 'Sweater', 'Sweatpants', 'Tank', 'Tee', 'Top'],

        batch_size = batch_size,

        subset = 'training',

    )

    val_dataset = imgdatagen.flow_from_directory(

        os.getcwd(),

        target_size = (height, width),

        classes = ['Blazer', 'Blouse', 'Cardigan', 'Dress', 'Jacket',

        'Jeans', 'Jumpsuit', 'Romper', 'Shorts', 'Skirts', 'Sweater', 'Sweatpants', 'Tank', 'Tee', 'Top'],

        batch_size = batch_size,

        subset = 'validation'

    )

    return train_dataset, val_dataset

The code below shows how ImageDataGenerator augments images, with some examples.

import matplotlib.pyplot as plt

import numpy as np

import os

import random

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.preprocessing.image import ImageDataGenerator

%matplotlib inline

def plotImages(images_arr):

    fig, axes = plt.subplots(1, 10, figsize=(20,20))

    axes = axes.flatten()

    for img, ax in zip(images_arr, axes):

        ax.imshow(img)

        ax.axis('off')

    plt.tight_layout()

    plt.show()

gen = ImageDataGenerator(rotation_range=10, width_shift_range=0.1, height_shift_range=0.1, shear_range=0.15, zoom_range=0.1,

channel_shift_range=10., horizontal_flip=True)

Now, we can read any image and display it, along with its augmented derivatives.

image_path = r'C:\Users\abdul\Desktop\ContentLab\P2\DeepFashion\Train\Blouse\img_00000003.jpg'

image = np.expand_dims(plt.imread(image_path),0)

plt.imshow(image[0])

The augmented images derived from the above image are shown below.

aug_iter = gen.flow(image)

aug_images = [next(aug_iter)[0].astype(np.uint8) for i in range(10)]

plotImages(aug_images)
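To make the effect of two of these options more concrete, here is a simplified NumPy sketch of a horizontal flip followed by a horizontal shift. It is not how Keras implements augmentation: Keras fills the vacated pixels according to its fill_mode setting, while this sketch simply leaves them black. The function names and the toy image are hypothetical.

```python
import numpy as np

def flip_and_shift(img, shift_px, flip):
    """Optionally mirror an (H, W, C) image, then shift it horizontally by shift_px pixels."""
    out = img[:, ::-1, :] if flip else img
    shifted = np.zeros_like(out)                  # vacated pixels are left black
    if shift_px > 0:
        shifted[:, shift_px:, :] = out[:, :-shift_px, :]    # move content to the right
    elif shift_px < 0:
        shifted[:, :shift_px, :] = out[:, -shift_px:, :]    # move content to the left
    else:
        shifted = out.copy()
    return shifted

def random_augment(img, rng, max_shift_frac=0.1):
    """Apply a random flip and a random shift of up to max_shift_frac of the image width."""
    max_shift = int(img.shape[1] * max_shift_frac)
    shift_px = int(rng.integers(-max_shift, max_shift + 1))
    return flip_and_shift(img, shift_px, flip=bool(rng.integers(0, 2)))

rng = np.random.default_rng(0)
toy = np.arange(4 * 10 * 3, dtype=np.uint8).reshape(4, 10, 3)
print(random_augment(toy, rng).shape)
```

Each call produces a slightly different version of the same picture, which is the idea behind training on augmented images: the network sees more variation than the original files contain.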

Next Steps

In the next article, we’ll show you how to build a Generative Adversarial Network (GAN) for fashion design generation. Stay tuned!