Here I show how to build, train, and implement autoencoders for deep fake creation.

Deep fakes - the use of deep learning to swap one person's face onto another's in video - are one of the most interesting and frightening ways that AI is being used today.

While deep fakes can be used for legitimate purposes, they can also be used in disinformation. With the ability to easily swap someone's face into any video, can we really trust what our eyes are telling us? A real-looking video of a politician or actor doing or saying something shocking might not be real at all.

In this article series, we're going to explain how deep fakes work and implement them from scratch. We'll then take a look at DeepFaceLab, the all-in-one TensorFlow-powered tool often used for creating convincing deep fakes.

In the previous article we preprocessed our data by converting four videos into two facesets. In this article, we’ll build the autoencoders that will use those facesets. I’ve been working in Kaggle Notebooks since the beginning, so I’ll use the previous Notebook’s output as the input of this one. I’ll use this Notebook to tune the models’ hyperparameters, but the heavy-duty training will happen in the next article, where we’ll train our models on Google AI Platform using a Docker container.

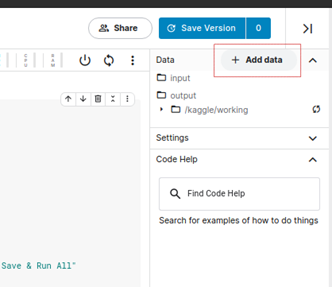

If you don’t know how to use a previous notebook’s output as the input of a new one, just create a new notebook and click the "Add data" button at the upper right corner:

Click "Notebook Output Files" and then "Your Datasets":

Finally, click "Add" next to the output file you want to import:

If you’re running this project locally, you’ll be using the images saved in the results_1 and results_2 folders. Once you’ve imported the required data, let’s jump into the actual code!

Setting up the Basics in the Notebook

Let’s start by importing the required libraries:

import numpy as np

import pandas as pd

import os

import matplotlib.pyplot as plt

import cv2

from tensorflow.keras.models import load_model

from sklearn.model_selection import train_test_split

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.callbacks import ModelCheckpoint

import tensorflow as tf

Now let’s load the face sets that we’re going to use and split them to start the training.

Dataset Creation and Splitting

Let’s create a function that loads the data in a shape our models can understand:

def create_dataset(path):

    images = []

    for dirname, _, filenames in os.walk(path):

        for filename in filenames:

            image = cv2.imread(os.path.join(dirname, filename))

            # cv2.imread returns None for files it cannot decode; skip those

            if image is None:

                continue

            image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

            image = image.astype('float32')

            image /= 255.0

            images.append(image)

    images = np.array(images)

    return images

Essentially this function reads every image in the respective folder as an array of integers, corrects the color channels from BGR to RGB, converts the array data type to float, and normalizes it to put the values into a range between 0 and 1 instead of 0 and 255. This will help our future models converge more easily.
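To make the preprocessing steps concrete, here is a minimal sketch that applies them to a tiny synthetic array instead of a real face image. The 2x2 "image" is made up for illustration, and reversing the channel axis with NumPy stands in for cv2.cvtColor so the snippet runs without OpenCV:

```python
import numpy as np

# Synthetic 2x2 "image" in OpenCV's BGR channel order, stored as uint8.
bgr = np.array([[[255, 0, 0], [0, 255, 0]],
                [[0, 0, 255], [128, 128, 128]]], dtype=np.uint8)

# Reversing the last axis swaps B and R, which is what COLOR_BGR2RGB does.
rgb = bgr[..., ::-1]

# Cast to float32 and scale from [0, 255] down to [0, 1].
normalized = rgb.astype('float32') / 255.0

print(normalized.dtype, normalized.min(), normalized.max())
print(normalized[0, 0])  # the pure-blue BGR pixel is now [0, 0, 1] in RGB
```

The values end up as float32 numbers between 0 and 1, which is the range the autoencoders are trained on.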

To call the function and start the data loading, run:

faces_1 = create_dataset('/kaggle/input/presidentsfacesdataset/trump/')

faces_2 = create_dataset('/kaggle/input/presidentsfacesdataset/biden/')

And now finally we’re ready to split our dataset:

X_train_a, X_test_a, y_train_a, y_test_a = train_test_split(faces_1, faces_1, test_size=0.20, random_state=0)

X_train_b, X_test_b, y_train_b, y_test_b = train_test_split(faces_2, faces_2, test_size=0.15, random_state=0)

Creating and Training the Autoencoders

We’ve reached the core of this series! As you may recall, we need to create and train two autoencoders and then swap their elements to get the resulting model that will do the face transformations for us. We’ll use the Keras Functional API to build our models.

Issue the following commands to create the encoder structure:

input_img = layers.Input(shape=(120, 120, 3))

x = layers.Conv2D(256,kernel_size=5, strides=2, padding='same',activation='relu')(input_img)

x = layers.MaxPooling2D((2, 2), padding='same')(x)

x = layers.Conv2D(512,kernel_size=5, strides=2, padding='same',activation='relu')(x)

x = layers.MaxPooling2D((2, 2), padding='same')(x)

x = layers.Conv2D(1024,kernel_size=5, strides=2, padding='same',activation='relu')(x)

x = layers.MaxPooling2D((2, 2), padding='same')(x)

x = layers.Flatten()(x)

x = layers.Dense(9216)(x)

encoded = layers.Reshape((3,3,1024))(x)

encoder = keras.Model(input_img, encoded,name="encoder")

Look carefully at the code above. The decoder will start with a Conv2D layer of 1024 filters, so we force the encoder to output tensors of shape (3,3,1024), whose depth matches that filter count. The last MaxPooling2D layer outputs a multidimensional tensor, so we flatten it with Flatten and then force it into shape (3,3,1024) with the Dense and Reshape layers (the Dense layer has 9216 = 3 x 3 x 1024 units). With the last line, we define this model’s input and output and give it a name. Let’s create the decoder structure:
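If you want to check the encoder's shapes by hand, the following sketch traces the spatial size through the three Conv2D and MaxPooling2D pairs. It relies only on the rule that a layer with padding='same' and stride s turns a size n into ceil(n / s); the helper name same_out is made up for this illustration:

```python
import math

def same_out(size, stride):
    """Spatial size after a layer with padding='same' and the given stride."""
    return math.ceil(size / stride)

size = 120
trace = [size]
for _ in range(3):            # three Conv2D + MaxPooling2D pairs
    size = same_out(size, 2)  # Conv2D with strides=2
    trace.append(size)
    size = same_out(size, 2)  # MaxPooling2D((2, 2)) strides by its pool size
    trace.append(size)

flat_units = size * size * 1024   # number of values Flatten produces
dense_units = 3 * 3 * 1024        # units Dense needs for Reshape((3, 3, 1024))

print(trace)        # [120, 60, 30, 15, 8, 4, 2]
print(flat_units)   # 4096
print(dense_units)  # 9216
```

So the convolutional stack ends at 2x2x1024, and the Dense layer expands those 4096 values to the 9216 needed for the (3,3,1024) latent tensor.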

decoder_input= layers.Input(shape=((3,3,1024)))

x = layers.Conv2D(1024,kernel_size=5, strides=2, padding='same',activation='relu')(decoder_input)

x = layers.UpSampling2D((2, 2))(x)

x = layers.Conv2D(512,kernel_size=5, strides=2, padding='same',activation='relu')(x)

x = layers.UpSampling2D((2, 2))(x)

x = layers.Conv2D(256,kernel_size=5, strides=2, padding='same',activation='relu')(x)

x = layers.Flatten()(x)

x = layers.Dense(np.prod((120, 120, 3)))(x)

decoded = layers.Reshape((120, 120, 3))(x)

decoder = keras.Model(decoder_input, decoded,name="decoder")

In this chunk of code, there’s an unusual layer: UpSampling2D. In the encoder we use MaxPooling2D layers to reduce the dimensionality of the input image and get its latent representation, so in the decoder we need a way to restore that dimensionality.

The UpSampling2D layer does this for us. Essentially it does upsampling in the simplest way possible: it doubles each row and column. For example, if we passed the array [[1,2],[3,4]] to this layer, then the output would be [[1,1,2,2],[1,1,2,2],[3,3,4,4],[3,3,4,4]]. Note that the strided Conv2D layers in the decoder still halve the spatial size, so the convolutional stack ends at shape (2,2,256); it is the final Dense and Reshape layers that take us from the decoder input shape of (3,3,1024) to the output shape of (120,120,3), which is the dimension of the original input image.
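The row-and-column doubling can be reproduced with plain NumPy. This is only a sketch of nearest-neighbor upsampling on a 2D array (the function name upsample_nearest is made up here), not the Keras layer itself, which works on batched 4D tensors:

```python
import numpy as np

def upsample_nearest(a, factor=2):
    """Repeat every row and every column `factor` times."""
    return np.repeat(np.repeat(a, factor, axis=0), factor, axis=1)

small = np.array([[1, 2],
                  [3, 4]])
upsampled = upsample_nearest(small)
print(upsampled)
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
```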

Finally, to compile the autoencoder with its two elements, issue the following commands:

auto_input = layers.Input(shape=(120,120,3))

encoded = encoder(auto_input)

decoded = decoder(encoded)

autoencoder = keras.Model(auto_input, decoded,name="autoencoder")

autoencoder.compile(optimizer=keras.optimizers.Adam(learning_rate=5e-5, beta_1=0.5, beta_2=0.999), loss='mae')

autoencoder.summary()

Model: "autoencoder"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_3 (InputLayer) [(None, 120, 120, 3)] 0

_________________________________________________________________

encoder (Functional) (None, 3, 3, 1024) 54162944

_________________________________________________________________

decoder (Functional) (None, 120, 120, 3) 86880192

=================================================================

Total params: 141,043,136

Trainable params: 141,043,136

Non-trainable params: 0

_________________________________________________________________

Notice that we have a huge number of model parameters that need to be trained from scratch, which means that this process will take hours or even days, so be prepared to wait. I’m using Adam as the optimizer and MAE (mean absolute error) as the loss to be reduced (because this task can be modeled as a regression).
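The totals in the summary can be reproduced by hand, which also shows where the parameters are concentrated. The sketch below uses the standard counts for Conv2D (kernel x kernel x input channels x filters, plus one bias per filter) and Dense (inputs x outputs, plus one bias per output); the helper names are made up for this illustration:

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units

encoder_params = (
    conv2d_params(5, 3, 256)
    + conv2d_params(5, 256, 512)
    + conv2d_params(5, 512, 1024)
    + dense_params(2 * 2 * 1024, 9216)         # Flatten output -> Dense(9216)
)
decoder_params = (
    conv2d_params(5, 1024, 1024)
    + conv2d_params(5, 1024, 512)
    + conv2d_params(5, 512, 256)
    + dense_params(2 * 2 * 256, 120 * 120 * 3)  # Flatten output -> Dense(43200)
)

print(encoder_params)                   # 54162944
print(decoder_params)                   # 86880192
print(encoder_params + decoder_params)  # 141043136
```

More than half of the parameters sit in the two Dense layers (about 37.8 million in the encoder and 44.3 million in the decoder).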

The model creation is all set. It’s time to start the training for both src and dst autoencoders. To do this, you can use the fit method just as you would when training a regular deep learning model. In this particular case, I’ll use the ModelCheckpoint callback from Keras to save the best model achieved in training. To start the src training, issue the command:

checkpoint1 = ModelCheckpoint("/kaggle/working/autoencoder_a.hdf5", monitor='val_loss', verbose=1, save_best_only=True, mode='auto')

history1 = autoencoder.fit(X_train_a, X_train_a, epochs=2700, batch_size=512, shuffle=True, validation_data=(X_test_a, X_test_a), callbacks=[checkpoint1])

I’m training for 2700 epochs in this notebook, but to achieve acceptable results you should train this model for at least 20000 iterations. At the end of the process, the model has obtained a loss of 0.01926, which could be even lower if you train for a longer period of time. To give you an idea of how good the metric achieved is, let’s plot a reference and its respective reconstruction:
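Epochs and iterations are not the same unit. Assuming "iterations" here means individual gradient updates (one per batch), the number of iterations per epoch depends on the dataset size and batch size. The sketch below shows the conversion; the sample count of 1000 is hypothetical, so plug in the length of your own training set:

```python
import math

def total_iterations(num_samples, batch_size, epochs):
    """Number of batches processed over a whole training run."""
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch * epochs

# Hypothetical faceset of 1000 training images, with the batch size and
# epoch count used in this article.
print(total_iterations(num_samples=1000, batch_size=512, epochs=2700))  # 5400
```

For a real run, use len(X_train_a) as num_samples.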

%matplotlib inline

plt.figure()

plt.imshow(X_test_a[30])

plt.show()

Loading the best model obtained during training and plotting the respective image reconstruction:

autoencoder_a = load_model("/kaggle/working/autoencoder_a.hdf5")

output_image = autoencoder_a.predict(np.array([X_test_a[30]]))

plt.figure()

plt.imshow(output_image[0])

plt.show()

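Not bad, huh? This is a randomly selected image and even though it looks good, the loss is still very high. That means the high quality of this reconstruction was lucky. We wouldn't normally expect such a good result when loss is high, and we shouldn't count on it. That being said, let’s start dst training. Note that the call below reuses the same autoencoder object, so dst training continues from the weights learned on src; if you want the dst model to start from a fresh initialization, re-run the code that builds encoder, decoder, and autoencoder first: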

checkpoint2 = ModelCheckpoint("/kaggle/working/autoencoder_b.hdf5", monitor='val_loss', verbose=1, save_best_only=True, mode='auto')

history2 = autoencoder.fit(X_train_b, X_train_b,epochs=2700,batch_size=512,shuffle=True,validation_data=(X_test_b, X_test_b),callbacks=[checkpoint2])

At the end of training, the best autoencoder model reached a loss of 0.02634, which is slightly higher than the src one. Keep in mind that this faceset is smaller, though. To give us an idea of how well it reconstructs images, let’s run:

plt.figure()

plt.imshow(X_test_b[0])

plt.show()

autoencoder_b = load_model("/kaggle/working/autoencoder_b.hdf5")

output_image = autoencoder_b.predict(np.array([X_test_b[0]]))

plt.figure()

plt.imshow(output_image[0])

plt.show()

As I said with the previous autoencoder, just because this reconstruction looks good doesn’t mean they’ll all look this way. In fact, since its loss is higher, this autoencoder performs worse than the previous one. If we needed to reduce the loss, a good approach would be to enlarge this faceset with more frames, use data augmentation, or train for many more epochs.

Swapping Encoders and Decoders in Keras

Now that we’ve trained our autoencoders, it’s time to swap their parts. If we want to obtain dst faces with src facial gestures, then we need to take the src encoder and the dst decoder and put them together. If we wanted the opposite, we’d combine the dst encoder with the src decoder. Let’s get hands-on with the code:

encoder_a = keras.Model(autoencoder_a.layers[1].input, autoencoder_a.layers[1].output)

decoder_a = keras.Model(autoencoder_a.layers[2].input, autoencoder_a.layers[2].output)

encoder_b = keras.Model(autoencoder_b.layers[1].input, autoencoder_b.layers[1].output)

decoder_b = keras.Model(autoencoder_b.layers[2].input, autoencoder_b.layers[2].output)

input_test = encoder_a.predict(np.array([X_test_a[30]]))

output_test = decoder_b.predict(input_test)

input_test = encoder_b.predict(np.array([X_test_b[30]]))

output_test = decoder_a.predict(input_test)

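After each pair of calls, output_test holds the resulting transformed image. Note that the second pair overwrites the result of the first, so plot or save output_test in between if you want to keep both.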
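Because the decoder's final Dense layer has no activation, its predictions are not guaranteed to stay inside the 0 to 1 range. Before displaying or saving a swapped face, it can help to clip the values and convert them back to 8-bit pixels. The helper below is a minimal sketch (the name to_displayable and the sample array are made up for illustration):

```python
import numpy as np

def to_displayable(image):
    """Clip a float image to [0, 1] and convert it to uint8 pixels."""
    clipped = np.clip(image, 0.0, 1.0)
    return (clipped * 255.0).round().astype(np.uint8)

# Stand-in for one predicted pixel with out-of-range values.
fake_prediction = np.array([[[-0.1, 0.2, 1.2]]], dtype=np.float32)
pixels = to_displayable(fake_prediction)
print(pixels)  # [[[  0  51 255]]]
```

With the real models you would call it as to_displayable(output_test[0]) and pass the result to plt.imshow.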

And with that, you’ve just completed the hardest part. See you in the next article, where I’ll give a brief intro to Docker containers before jumping into how to train your models on Google AI Platform.