
Deep fakes (the use of deep learning to swap one person's face onto another's in video) are one of the most interesting and frightening ways that AI is being used today.

While deep fakes can be used for legitimate purposes, they can also be used in disinformation. With the ability to easily swap someone's face into any video, can we really trust what our eyes are telling us? A real-looking video of a politician or actor doing or saying something shocking might not be real at all.

In this article series, we're going to show how deep fakes work and how to implement them from scratch. We'll then take a look at DeepFaceLab, the all-in-one, TensorFlow-powered tool often used for creating convincing deep fakes.

Google AI Platform is Google's fully managed, end-to-end platform for data science and machine learning operations. It's part of Google Cloud Platform and has been gaining popularity recently, largely because it's simple to understand and use. AI Platform makes it easy for ML engineers and data scientists to take their ML projects from experimentation to production quickly and cost-effectively.

That being said, it’s not free. It can become quite expensive, as can any other similar platform. But it's certainly cheaper (and faster) than hiring a CGI team!

Note that we're using Google Cloud because we have to deploy our AI containers somewhere to take an MLOps approach to model training. Don't interpret this to mean that Google Cloud is necessarily the best choice; to actually start training, we simply need to pick a specific platform to run it on. The same basic approach should work just as well on AWS or Azure.

In this article, I'll help you set up everything you need before starting your models' training with some help from Docker containers. Let's get hands-on!

Checking the Prerequisites

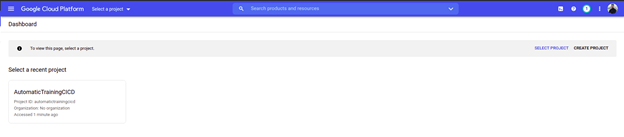

First, create your Google Cloud Platform account. It's pretty straightforward: go to cloud.google.com, click "Get started for free", choose your Google account from the list and log in as you normally would. That's it: you should now see the main dashboard. If you're asked to enter your billing information, go ahead and do it. This is completely normal: some services aren't covered by the free tier, and Google needs a way to charge you if you use them.

Next, in the Google Cloud Console, on the project selector page, make sure that you select or create a project.
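If you prefer the terminal, this step can also be scripted after the Cloud SDK (covered later in this article) is set up. The sketch below uses a hypothetical `use_project` helper: it creates the project only when `gcloud projects describe` can't find it, then makes it the default for later `gcloud` commands. `my-deepfake-project` is a placeholder; project IDs are globally unique, so pick your own.

```shell
# Hypothetical helper: select a GCP project, creating it first if needed.
# Usage: use_project <project-id>
use_project() {
  local project_id="$1"

  # "describe" fails when the project doesn't exist (or you can't access it).
  if ! gcloud projects describe "$project_id" >/dev/null 2>&1; then
    gcloud projects create "$project_id" || return 1
  fi

  # Make it the default project for subsequent gcloud commands.
  gcloud config set project "$project_id"
}

# Example (placeholder ID):
# use_project my-deepfake-project
```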

Then, check that billing is enabled for that project. As mentioned above, this is required so that Google can charge for anything you use outside the free tier.
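With the Cloud SDK installed, billing can be checked from a terminal as well. This is a minimal sketch, assuming your SDK version ships the `gcloud billing` command group (older releases expose it as `gcloud beta billing`) and that the `billingEnabled` field prints as `True` or `False`; `billing_enabled` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if billing is enabled for the project.
# Assumes "gcloud billing projects describe" is available in your SDK.
# Usage: billing_enabled <project-id>
billing_enabled() {
  local project_id="$1"
  local state

  # Assign separately from "local" so a gcloud failure isn't masked.
  state=$(gcloud billing projects describe "$project_id" \
    --format="value(billingEnabled)") || return 1

  [ "$state" = "True" ]
}

# Example:
# billing_enabled my-deepfake-project && echo "Billing is enabled"
```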

Lastly, enable the AI Platform Training & Prediction, Compute Engine and Container Registry APIs. This makes those services available to your project so you can perform the tasks we're about to do. Select your project in the dropdown list and click Continue to enable the APIs. You don't need to download the credentials.
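The same APIs can be enabled with `gcloud services enable` from a terminal where the Cloud SDK is available. In this sketch, `ml.googleapis.com` is the service name for AI Platform Training & Prediction, alongside those for Compute Engine and Container Registry; `enable_required_apis` is a hypothetical helper that acts on your current default project.

```shell
# Hypothetical helper: enable the APIs this series relies on
# for the current default project (see "gcloud config list").
enable_required_apis() {
  gcloud services enable \
    ml.googleapis.com \
    compute.googleapis.com \
    containerregistry.googleapis.com
}

# Example:
# enable_required_apis && gcloud services list --enabled
```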

Setting Everything up on Your Local Computer

We still need to install and configure a few things on your computer in order to interact remotely with GCP and prepare the Docker container.

Start by installing and initializing the Cloud SDK, the toolkit that lets you interact with GCP from your computer's terminal. Get the installer from the Cloud SDK installation page: select your OS and follow the instructions.
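Initialization is done with `gcloud init`, which walks you through logging in and choosing a default project. The hypothetical `check_gcloud` helper below is a quick sanity check for that setup: it confirms `gcloud` is on your `PATH` and that a default project is configured, using the same `gcloud config list` query we'll use later for the bucket name.

```shell
# Hypothetical helper: confirm the Cloud SDK is installed and configured.
check_gcloud() {
  if ! command -v gcloud >/dev/null 2>&1; then
    echo "gcloud not found on PATH; install the Cloud SDK first" >&2
    return 1
  fi

  # Read the default project from the active gcloud configuration.
  local project
  project=$(gcloud config list project --format "value(core.project)")

  if [ -z "$project" ]; then
    echo "No default project set; run 'gcloud init'" >&2
    return 1
  fi

  echo "gcloud is configured for project: $project"
}

# Example:
# check_gcloud
```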

Next, install Docker on your machine. Go to the official Docker website and get the version for your OS.

Once the installation is complete, if your computer runs a Linux-based OS, add your user to the `docker` group so you can run Docker commands without `sudo`. To do so, open a terminal window and run `sudo usermod -a -G docker ${USER}`, then restart the machine (logging out and back in is usually enough) so the new group membership takes effect.

Finally, to make sure Docker is up and running, run `docker run busybox date`. If it prints the current date and time, you're good to go!
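For repeating this check later (say, after a reboot), the hypothetical `check_docker` helper below wraps it. It adds `--rm` so the test container is removed once it exits, and prints a hint when the command fails, which usually means the Docker daemon isn't running or, on Linux, your user isn't in the `docker` group yet.

```shell
# Hypothetical helper: confirm Docker works without sudo.
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found on PATH; install Docker first" >&2
    return 1
  fi

  # --rm deletes the busybox container after it prints the date.
  if ! docker run --rm busybox date; then
    echo "docker run failed: is the daemon running, and is ${USER:-your user} in the docker group?" >&2
    return 1
  fi
}

# Example:
# check_docker && echo "Docker is ready"
```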

Creating the Local Variables Required to Submit the Training Job

There are some additional steps before jumping into the actual code. Let’s get started.

First, choose a name for the bucket where your model and its related files will be saved. We'll build it from your project ID, which keeps it recognizable and, since project IDs are globally unique, makes a clash with someone else's bucket unlikely. Run `PROJECT_ID=$(gcloud config list project --format "value(core.project)")` and then `BUCKET_NAME=${PROJECT_ID}-aiplatform`.
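Because bucket names share one global namespace and must follow Cloud Storage naming rules, it can help to check the derived name before creating the bucket. The hypothetical `is_valid_bucket_name` helper below is a minimal sketch of the basic rules only: 3 to 63 characters; lowercase letters, digits, dashes and underscores; starting and ending with a letter or digit; no `goog` prefix and no `google` in the name. It deliberately rejects dotted names, which Cloud Storage allows only after domain verification. It can't tell you whether a name is already taken.

```shell
# Hypothetical helper: basic Cloud Storage bucket-name check (no dotted names).
# Usage: is_valid_bucket_name <name>
is_valid_bucket_name() {
  local name="$1"
  local len=${#name}

  # Length must be between 3 and 63 characters.
  [ "$len" -ge 3 ] && [ "$len" -le 63 ] || return 1

  case $name in
    *[!a-z0-9_-]*) return 1 ;;          # only lowercase letters, digits, '-', '_'
    [!a-z0-9]*|*[!a-z0-9]) return 1 ;;  # must start and end with a letter or digit
    goog*|*google*) return 1 ;;         # reserved prefix / substring
  esac
  return 0
}

# Example:
# is_valid_bucket_name "$BUCKET_NAME" || echo "Pick a different bucket name" >&2
```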

Next, define the region where your training job will run: `REGION=us-central1`. The AI Platform Training documentation lists all available regions.

A few more variables are required. To create them, run:

`IMAGE_REPO_NAME=tf_df_custom_container`

`IMAGE_TAG=tf_df_gpu`

`IMAGE_URI=gcr.io/$PROJECT_ID/$IMAGE_REPO_NAME:$IMAGE_TAG`

`MODEL_DIR=df_model_$(date +%Y%m%d_%H%M%S)`

`JOB_NAME=df_job_$(date +%Y%m%d_%H%M%S)`
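These are plain shell variables, so they only exist in the terminal session where you defined them; open a new terminal and you'll need to set them again. One way to make that repeatable is to collect them in a function, as in the sketch below. `set_training_vars` is a hypothetical name, and the values are the ones used in this section. One small design change: it calls `date` once, so `MODEL_DIR` and `JOB_NAME` always share the same timestamp suffix, whereas two separate `date` calls can straddle a second boundary.

```shell
# Hypothetical helper: define every variable used to submit the training job.
# Usage: set_training_vars <project-id>
set_training_vars() {
  # Capture one timestamp so MODEL_DIR and JOB_NAME get the same suffix.
  local stamp
  stamp=$(date +%Y%m%d_%H%M%S)

  # Assignments without "local" remain visible after the function returns.
  PROJECT_ID="$1"
  BUCKET_NAME="${PROJECT_ID}-aiplatform"
  REGION=us-central1
  IMAGE_REPO_NAME=tf_df_custom_container
  IMAGE_TAG=tf_df_gpu
  IMAGE_URI="gcr.io/${PROJECT_ID}/${IMAGE_REPO_NAME}:${IMAGE_TAG}"
  MODEL_DIR="df_model_${stamp}"
  JOB_NAME="df_job_${stamp}"
}

# Example, reading the project ID from your gcloud configuration:
# set_training_vars "$(gcloud config list project --format "value(core.project)")"
```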

Creating the Bucket Required for the Project

Don't worry if you're not familiar with buckets. In GCP, as in other cloud providers such as AWS, a bucket is a container for storing data; you can think of it as a top-level directory in the cloud. To create the one required for our project, run `gsutil mb -l $REGION gs://$BUCKET_NAME`.
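Running `gsutil mb` a second time fails because the bucket already exists, so if you expect to re-run these steps, a small guard helps. The hypothetical `ensure_bucket` helper below checks for the bucket with `gsutil ls -b` first and only creates it if that check fails. Bucket names are global: if another account already owns the name, the check fails and `gsutil mb` then reports the name as unavailable, so you'd need a different `BUCKET_NAME`.

```shell
# Hypothetical helper: create the bucket only if it isn't already there.
# Usage: ensure_bucket <bucket-name> <region>
ensure_bucket() {
  local bucket="gs://$1"
  local region="$2"

  # "ls -b" lists the bucket itself rather than its contents.
  if gsutil ls -b "$bucket" >/dev/null 2>&1; then
    echo "Bucket $bucket already exists"
    return 0
  fi

  gsutil mb -l "$region" "$bucket"
}

# Example:
# ensure_bucket "$BUCKET_NAME" "$REGION"
```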

I know this is a lot to take in, but we're really close to the end, so stick with me! In the next article, I'll dive into creating the Docker container for training our deep fake models and submitting the job to Google AI Platform.