Here we give a short introduction to Docker Containers, then explain why it would be useful for our face recognition system. Then we show how to install Tensorflow image and additional software (Keras, MTCNN). Next we modify the Python code of face identification for launching on the Docker container and demonstrate the recognition on a video.

Introduction

Face recognition is one area of artificial intelligence (AI) where the modern approaches of deep learning (DL) have had great success during the last decade. The best face recognition systems can recognize people in images and video with the same precision humans can – or even better.

Our series of articles on this topic is divided into two parts:

- Face detection, where the client-side application detects human faces in images or in a video feed, aligns the detected face pictures, and submits them to the server.

- Face recognition (this part), where the server-side application performs face recognition.

We assume that you are familiar with DNN, Python, Keras, and TensorFlow. You are welcome to download this project code to follow along.

In the previous article, we focused on developing and testing a face identification algorithm along with the face detection module. In this article, we’ll show you how to create and use Docker containers for our face recognition system.

The Docker container technology can be very useful when developing and deploying AI-based software. Typically, a modern AI-based application requires some pre-installed software, such as a DNN framework (in our case, Keras with the TensorFlow backend). An application can also depend on DNN models and the corresponding libraries (MTCNN for our face detector). Moreover, as we are going to develop a web API for the server-side application, we need an appropriate framework to be installed on our PC. Containerizing our server software will help us develop, configure, test, and deploy the application.

Creating a Container Image

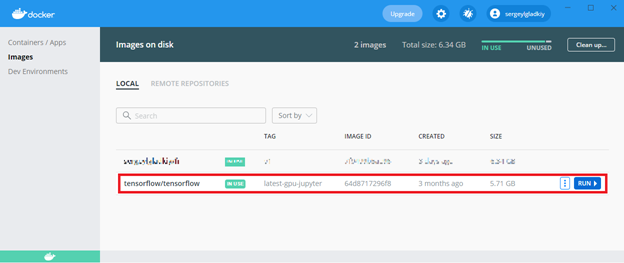

Let’s start with creating an image for the container. We’ll use the Docker Desktop software to manage images and containers. We can create the image for the application from scratch, but it’s faster and easier to create an image based on one of the existing AI containers. The most suitable for us is the TensorFlow container with the pre-installed latest version of the TensorFlow framework.

First, we must download the image to our PC:

docker pull tensorflow/tensorflow:latest-gpu-jupyter

After downloading the image, we can see it on the Images tab of Docker Desktop. Run the container by clicking the Run button.

Configuring Container

The next step is to configure the container for launching our face recognition server. On the Containers/Apps tab, run a terminal window for the container. Install the Keras framework and the MTCNN library with the following commands:

# pip install keras

# pip install mtcnn

A successful installation log is shown in the picture below.

Note that the latest Python OpenCV framework is installed with the MTCNN library. We only need to add the libGL library for completeness:

# apt install libgl1-mesa-glx

We’ll use the Flask microframework for the web API. We need to install this framework together with the jsonpickle package using simple pip commands.

# pip install Flask

# pip install jsonpickle

Now the container is configured to run the face recognition application. Before running it, we need to slightly modify the Python code developed in the previous article to enable launching the application without the UI. Here is the modified code of the video recognizer class:

class VideoFR:

def __init__(self, detector, rec, f_db):

self.detector = detector

self.rec = rec

self.f_db = f_db

def process(self, video, align=False, save_path=None):

detection_num = 0;

rec_num = 0

capture = cv2.VideoCapture(video)

img = None

frame_count = 0

dt = 0

if align:

fa = Face_Align_Mouth(160)

while(True):

(ret, frame) = capture.read()

if frame is None:

break

frame_count = frame_count+1

faces = self.detector.detect(frame)

f_count = len(faces)

detection_num += f_count

names = None

if (f_count>0) and (not (self.f_db is None)):

t1 = time.time()

names = [None]*f_count

for (i, face) in enumerate(faces):

if align:

(f_cropped, f_img) = fa.align(frame, face)

else:

(f_cropped, f_img) = self.detector.extract(frame, face)

if (not (f_img is None)) and (not f_img.size==0):

embds = self.rec.embeddings(f_img)

data = self.rec.recognize(embds, self.f_db)

if not (data is None):

rec_num += 1

(name, dist, p_photo) = data

conf = 1.0 - dist

names[i] = (name, conf)

print("Recognized: "+name+" "+str(conf))

if not (save_path is None):

percent = int(conf*100)

ps = ("%03d" % rec_num)+"_"+name+"_"+("%03d" % percent)+".png"

ps = os.path.join(save_path, ps)

cv2.imwrite(ps, f_img)

t2 = time.time()

dt = dt + (t2-t1)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

if dt>0:

fps = detection_num/dt

else:

fps = 0

return (detection_num, rec_num, fps)

In the process method of the class, we removed the code that had shown frames with the OpenCV windows. We also added the save_path parameter that specifies where to save the resulting images of the recognized faces.

We must also modify the code for creating and running a video recognizer in the container:

if __name__ == "__main__":

v_file = str(sys.argv[1])

m_file = r"/home/pi_fr/net/facenet_keras.h5"

rec = FaceNetRec(m_file, 0.5)

print("Recognizer loaded.")

print(rec.get_model().inputs)

print(rec.get_model().outputs)

save_path = r"/home/pi_fr/rec"

db_path = r"/home/pi_fr/db"

f_db = FaceDB()

f_db.load(db_path, rec)

db_f_count = len(f_db.get_data())

print("Face DB loaded: "+str(db_f_count))

d = MTCNN_Detector(50, 0.95)

vr = VideoFR(d, rec, f_db)

(f_count, rec_count, fps) = vr.process(v_file, True, save_path)

print("Face detections: "+str(f_count))

print("Face recognitions: "+str(rec_count))

print("FPS: "+str(fps))

In the code, we specify the path to a video file as the first command line argument. All other paths (to the FaceNet model, the face database, and the folder for saving the resulting images) are specified directly in the code. Therefore, we must preconfigure our file system and copy the required data to the container. This can be done with the docker cp command.

Running the Container

Now we can run our Python code from the terminal with the following command:

The output in the terminal will show the progress of the recognition process. The process saves the recognized data to the specified folder, which we can explore with the ls command.

You can copy the data to the host machine with the docker cp command. Here are some resulting face images.

Now our AI container is totally configured. We can run our recognition server in the container environment and test its performance. We can also improve the identification algorithms and develop additional AI-based software. We can even use the platform to create, train, and test new DNN models (based on Keras with the TensorFlow backend).

What’s great is that we can easily share our container with other developers. We just need to save the container as a Docker image and push it to the repository. You can use the following commands:

c:\>docker commit -m "Face Recognition image" -a "Sergey Gladkiy" beff27f9a185 sergeylgladkiy/fr:v1

c:\>docker push sergeylgladkiy/fr:v1

Pushing the image can take some time because it is large (about 6.3 GB). The published image is available for download by other Docker users. They can download our image as follows:

docker pull sergeylgladkiy/fr:v1

Next Step

In the next article, we’ll wrap the face identification model in a simple Web API, create a client application on the Raspberry Pi, and run the client-server system. Stay tuned!