Sometimes, a user does not have drivers for OpenCL, sometimes there is no Nvidia GPU in system. A simple tool called VectorizedKernel works very similar to kernels of OpenCL/CUDA in terms of usage and lets an algorithm written in scalar-like pattern to gain parallel-like performance.

Introduction

VectorizedKernel.h header-only tool aims to help quick prototyping for basic GPGPU problems, by using only CPU that does not require anything else than a C++ compiler and proper compiler flags set. The learning-curve of GPGPU on many platforms are generally affected by quality & availability of the API (such as CUDA having much shorter hello-world than OpenCL and OpenCL having much more diverse device selection) and this project aims to retain the availability by having dependency to:

- C++14 compiler (GCC v10 was used for all benchmarks for this project)

- Compiler's autovectorization capability

- At least an MMX CPU is required but an AVX512, AVX1024(future?), AVX2048(future?) will work too

With the addition of relative simplicity to start compared to a driver-API of CUDA or pure OpenCL code and higher performance than plain single-thread non-explicitly-vectorized code at the expense of verbosity.

The entrance to GPGPU may not be always available rightaway because of not-supported devices (such as GT1030 for CUDA or RX550 for OpenCL) and problematic drivers that add starting overhead. It can be easily solved by hiring cloud computers and managing operating system images with a cost of few dollars per hour. It is generally more acceptable to test a demo for free and quick.

Background

When Nvidia started developing its own general purpose computing framework, many developers were using OpenGL/DirectX/Glide APIs to harness the graphics pipelines for the "general purpose" work. Lighting, transform and other pipelines of graphics chip was used for matrix operations and graphics buffers were used as input/output buffers. Usage of GPUs like these had an attractive speedup ratio compared to the CPUs their time and there were numerous work involving pushing more general purpose work into a GPU.

After CUDA (of Nvidia) had great success, OpenCL (of Apple) followed it by a modest technological lag and was adopted by hardware vendors from Intel to Xilinx to Amd and many others including Nvidia.

While GPGPU frameworks and higher-level libraries evolved to be more and more useful for different types of tasks (including training a neural-network), they also increased their starting overhead for learning, installing and testing. The hello-world of the GPGPU stayed simplest with CUDA but required Nvidia card. The hello-world of OpenCL on the other hand, is much longer on number of lines of codes. There are multiple parts of a hello-world OpenCL program to initialize just to send a letter to GPU:

- query platforms

- query devices

- query supported API-features or versions

- query memory size or other features of graphics card

- create contex

- create kernel

- create gpu buffer

- create kernel parameters

- compute hello world

- get results back to RAM (if its not CPU already)

- release all the resources in the opposite order they were allocated

Both CUDA and OpenCL have highly dependent either on the code-reuse or the hardware to begin with. Despite the high preparation time, GPU run-time is very good and it is tolerated most of the time. The point of making a simulator is to let prototyping require a shorter code or no hardware dependency (except SSE/AVX that is found on nearly all of the desktop-CPU market) and especially quicker time to start having results.

Using the Code

VectorizedKernel.h header file defines a simple kernel builder function to create a SIMD kernel from a lambda function and it applies the lambda on all workitems of the kernel. Workitem is only a scalar-looking "lane" of a vector-processing task. If the task is to add 1 to all elements of an array, then a workitem is the computation of a[i] = a[i] + 1.

Kernel

createKernel factory function creates the kernel object and takes simd width and kernel lambda:

template<int SimdWidth, typename F, class...Args>

auto createKernel(F&& kernelPrm, KernelArgs<Args...> const& _prm_)

{

return Kernel<SimdWidth, F, Args...>(std::forward<F>(kernelPrm));

}

The Args... part lets users define their own kernel parameters while SimdWidth is given explicitly as a template integer parameter that is integer power of 2. F parameter takes the lambda function and converts it to two versions, one for body of computations that uses simd = user given value and one for the tail that uses simd = 1. This makes the work scheduling easy and optimized enough without breaking the rule of integer-power-of-2 for any future algorithm that uses current width value of simd inside the kernel function.

How a kernel is created:

auto kernelHelloWorld = Vectorization::createKernel<simd>(

[&](auto & factory, auto & idThread, int * prm1, float * prm2, int prm3){

const int currentSimdWidth = factory.width;

},

Vectorization::KernelArgs<int*,float*, int>{}

);

Hello world version:

#include "VectorizedKernel.h"

#include <iostream>

int main()

{

constexpr int simd = 8;

auto kernelHelloWorld = Vectorization::createKernel<simd>(

[&](auto & factory, auto & idThread, float * bufferIn, float * bufferOut){

const int currentSimdWidth = factory.width;

auto tmp = factory.template generate<float>();

tmp.readFromContiguous(bufferIn,idThread);

tmp.add(1.0f,tmp);

tmp.writeToContiguous(bufferOut,idThread);

}, Vectorization::KernelArgs<float*,float*>{});

const int n = 23;

float vecIn[n], vecOut[n];

for(int i=0;i<n;i++)

{

std::cin>>vecIn[i];

}

kernelHelloWorld.run(n,vecIn,vecOut);

for(int i=0;i<n;i++)

{

std::cout<<vecOut[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

When it is compiled by a modern compiler with -march=native and -mavx flags, the produced CPU instructions look like this:

.L2:

mov rsi, rbx

mov edi, OFFSET FLAT:_ZSt3cin

add rbx, 4

cmp rbx, rbp

jne .L2

vmovaps xmm0, XMMWORD PTR .LC0[rip]

lea rbx, [rsp+96]

lea rbp, [rsp+188]

vaddps xmm1, xmm0, XMMWORD PTR [rsp] <-- 4x add

vmovaps XMMWORD PTR [rsp+96], xmm1

vaddps xmm1, xmm0, XMMWORD PTR [rsp+16] <-- 4x add

vmovaps XMMWORD PTR [rsp+112], xmm1

vaddps xmm1, xmm0, XMMWORD PTR [rsp+32] <-- 4x add

vmovaps XMMWORD PTR [rsp+128], xmm1

vaddps xmm1, xmm0, XMMWORD PTR [rsp+48] <-- 4x add

vaddps xmm0, xmm0, XMMWORD PTR [rsp+64] <-- 4x add

vmovaps XMMWORD PTR [rsp+144], xmm1

vmovq xmm1, QWORD PTR .LC1[rip]

vmovaps XMMWORD PTR [rsp+160], xmm0

vmovq xmm0, QWORD PTR [rsp+80]

vaddps xmm0, xmm0, xmm1

vmovlps QWORD PTR [rsp+176], xmm0

vmovss xmm0, DWORD PTR .LC2[rip]

vaddss xmm0, xmm0, DWORD PTR [rsp+88]

vmovss DWORD PTR [rsp+184], xmm0

The vaddps instruction is computation of 4 values at once (addition for 32-bit floating-point). First five of these instructions compute the main body (20) of 23 workitems. The last vaddps and the vaddss computes the tail part (last 3 elements).

When an extra multiplication operation is added to kernel:

const int currentSimdWidth = factory.width;

auto tmp = factory.template generate<float>();

tmp.readFromContiguous(bufferIn,idThread);

tmp.add(1.0f,tmp);

tmp.mul(3.0f, tmp); <------ an extra multiplication!

tmp.writeToContiguous(bufferOut,idThread);

The instruction output looks like this:

vaddps xmm2, xmm0, XMMWORD PTR [rsp]

vmulps xmm2, xmm2, xmm1

vmovaps XMMWORD PTR [rsp+96], xmm2

vaddps xmm2, xmm0, XMMWORD PTR [rsp+16]

vmulps xmm2, xmm2, xmm1

vmovaps XMMWORD PTR [rsp+112], xmm2

vaddps xmm2, xmm0, XMMWORD PTR [rsp+32] <-- 4x add

vmulps xmm2, xmm2, xmm1 <-- 4x mul

vmovaps XMMWORD PTR [rsp+128], xmm2

vaddps xmm2, xmm0, XMMWORD PTR [rsp+48] <-- 4x add

vaddps xmm0, xmm0, XMMWORD PTR [rsp+64] <-- 4x add

vmulps xmm2, xmm2, xmm1 <-- 4x mul

vmulps xmm0, xmm0, xmm1 <-- 4x mul

vmovq xmm1, QWORD PTR .LC2[rip]

vmovaps XMMWORD PTR [rsp+144], xmm2

vmovaps XMMWORD PTR [rsp+160], xmm0

vmovq xmm0, QWORD PTR [rsp+80]

vaddps xmm0, xmm0, xmm1

vmovq xmm1, QWORD PTR .LC3[rip]

vmulps xmm0, xmm0, xmm1

vmovlps QWORD PTR [rsp+176], xmm0

vmovss xmm0, DWORD PTR .LC4[rip]

vaddss xmm0, xmm0, DWORD PTR [rsp+88]

vmulss xmm0, xmm0, DWORD PTR .LC5[rip]

vmovss DWORD PTR [rsp+184], xmm0

The compiler adds the necessary vmulps instruction after each vaddps, without extra buffer copying (directly through re-using the same cpu vector register). This part is equivalent to explicitly using C++ x86 intrinsics, with the difference of automatically doing it.

On a Cascadelake processor microarchitecture (currently the cloud servers of godbolt.org), with simd=16 setting and with proper compiler flags (-std=c++2a (c++14 required at least!!) -O3 -march=cascadelake -mavx512f -mprefer-vector-width=512 -ftree-vectorize -fno-math-errno -lpthread), the output of computation of main body (16 workitems with AVX512 + 4 with SSE) is shorter:

vbroadcastss zmm0, DWORD PTR .LC6[rip]

vmovq xmm1, QWORD PTR .LC4[rip]

vaddps zmm0, zmm0, ZMMWORD PTR [rbp-304] <--- 512 bit add operation (16 elements)

lea rbx, [rbp-176]

lea r12, [rbp-84]

vmulps zmm0, zmm0, DWORD PTR .LC7[rip]{1to16} <--- 512 bit mul (16 elements)

vmovaps ZMMWORD PTR [rbp-176], zmm0

vbroadcastss xmm0, DWORD PTR .LC6[rip]

vaddps xmm0, xmm0, XMMWORD PTR [rbp-240] <--- 128 bit add (4 elements)

vmulps xmm0, xmm0, DWORD PTR .LC7[rip]{1to4} <--- 128 bit mul (4 elements)

vmovaps XMMWORD PTR [rbp-112], xmm0

vmovq xmm0, QWORD PTR [rbp-224]

vaddps xmm0, xmm0, xmm1 <--- tail i=20, i=21

vmovq xmm1, QWORD PTR .LC5[rip]

vmulps xmm0, xmm0, xmm1

vmovlps QWORD PTR [rbp-96], xmm0

vmovss xmm0, DWORD PTR .LC6[rip]

vaddss xmm0, xmm0, DWORD PTR [rbp-216] <--- tail i=22

vmulss xmm0, xmm0, DWORD PTR .LC7[rip]

vmovss DWORD PTR [rbp-88], xmm0

vzeroupper

With a higher number of work items (i.e., 1000x1000 pixels for mandelbrot set), the main body of computations is much bigger than the non-aligned tail and the overall performance increases greatly.

Kernel Variables

Kernel variables are created with factory parameter of the lambda. factory is a templated object and to create any type of object, it requires explicit selection of variable's template:

auto aFloat = factory.template generate<float>();

auto arr = factory.template generateArray<int,5>();

auto anInt = factory.template generate<int>(idThread);

This is a costly operation because every scalar-looking variable has a plain array inside. This causes stack allocation which is not always free. Due to this reason, variable allocations should be left outside of innermost loops that do the most intense math calculations:

auto j = idThread.modulus(matrixWidth); while(anyTrue)

{

a.fusedMultiplyAdd(b,c,result); }

while(anyTrue)

{

auto j = idThread.modulus(matrixWidth); a.fusedMultiplyAdd(b,c,result); }

Once a variable is created, it is better to re-use it for many times to benefit from SIMD performance. Otherwise, the compiler adds many mov/vmov instructions to prepare the allocations and the real part of the algorithm is stalled and becomes even slower than scalar version if it has low compute-to-data ratio.

Another downside of using plain array inside a struct is not being able to evade the deep data copying on all copy/move - constructors. This causes extra work on the compiler's side and the CPU's side. To not use a plain array, project would be made of 100% define macros and have higher performance, but this is not the way of C++. Define macros should be used only when there is no other way to achieve it (like AVX intrinsics).

Work-Item Index

During kernel launch, every work-item is given an integer id value to indicate its position in the overall task. For a simple array processing task, it can be used for indexing the array. For a 2D matrix multiplication, it can be used to compute modulus by N and division by N to find X-Y coordinates of elements (work-items' own data) in the matrix.

Work scheduling in kernel object's run method is very simple. First part computes all work-items until there is one non-aligned chunk left. Second part computes the remaining tail by launching single work-item at a time:

void run(int n, Args... args)

{

const int nLoop = (n/SimdWidth);

const KernelDataFactory<SimdWidth> factory;

auto id = factory.template generate<int>();

for(int i=0;i<nLoop;i++)

{

id.idCompute(i*SimdWidth,[](const int prm){ return prm;});

kernel(factory, id, args...);

}

if((n/SimdWidth)*SimdWidth != n)

{

const KernelDataFactory<1> factoryLast;

const int m = n%SimdWidth;

auto id = factoryLast.template generate<int>();

for(int i=0;i<m;i++)

{

id.idCompute(nLoop*SimdWidth+i,[](const int prm){ return prm;});

kernel(factoryLast, id, args...);

}

}

}

The id values are computed contiguously between each work-item, starting with zero. When launching 1000 workitems, latest id value is 999. The id value (being another scalar-looking block data) can be used in any integer-based operation in the kernel:

auto kernel = Vectorization::createKernel<simd>(

work-item index

^ kernel parameters

| ^

| |

[&](auto & factory, auto & idThread, ... prms ...){

auto j = factory.template generate<int>();

idThread.modulus(width, j);

auto i = factory.template generate<int>();

idThread.div(width, i);

auto element = factory.template generate<int>();

i.mul(j,element);

element.writeTo(matrix,idThread);

...

}, ... prm types ...

);

I/O Patterns

There are three groups of methods to read/write data from/to buffers: Contiguous read/write methods, indexed gather/scatter methods and indexed contiguous read/write methods.

Contiguous Read/Write

void writeTo(Type * const __restrict__ ptr);

void readFrom(const Type * const __restrict__ ptr);

These methods start read/write operation from the given ptr address and every next work-item in same work-group (that is single SIMD group launched in run method) uses uniformly increasing indexing on the ptr address. First work item of the group accesses first item of ptr, last work item in the group accesses currentSimdWidth-1 index on the ptr. Current simd width is queried from factory parameter of kernel lambda (first parameter):

int currentSimdWidth = factory.width;

This indicates the width of read/write operations on the contiguous read/write methods. For the body chunk of task, it is equal to simd template parameter given to kernel creator function. For the tail chunk, it is equal to 1.

Read and write operations are vectorized similar to multiplication and addition methods. Since there is RAM latency, algorithms with low compute-to-data ratio tend to lose more performance on I/O.

auto returnValue = factory.template generate<float>();

a.add(b,returnValue);

returnValue.writeTo(img);

Indexed Contiguous Read/Write

void readFromContiguous(Type * const __restrict__ ptr, const KernelData<int,Simd> & id);

void writeToContiguous(Type * const __restrict__ ptr, const KernelData<int,Simd> & id);

As an indexed version of first group, they only pick a different target region on the I/O buffer. First work item in every simd group decides where to read/write. If simd size is given 32, then first 32 work-items read/write starting with ptr + id[0]:

returnValue.writeToContiguous(img, idThread);

Gather/Scatter

readFrom(Type * const __restrict__ ptr, const KernelData<int,Simd> & id);

writeTo(Type * const __restrict__ ptr, const KernelData<int,Simd> & id);

Gathering and scattering means every work-item chooses its own target element to read/write in the buffer. The following method can do random-access per work-item:

returnValue.writeTo(img, idThread);

This is implemented for convenience in writing scalar pattern codes and is not as fast as the contiguous versions. Even on AVX512 CPUs that support gather/scatter instructions, they have high latency due to the nature of randomly accessing memory and losing the benefit of cache-line. AVX1 and SSE do not have gather/scatter instructions and algorithms to run on these CPUs should be optimized with contiguous access always.

When an algorithm is highly dependent on RAM latency (due to data-set being larger than caches), simd size should be chosen bigger to hide loading/storing latencies. RAM is capable of serving multiple requests in parallel by pipelining. When there are multiple requests in flight, some of their latency is hidden.

For algorithms with high compute-to-data ratio, simd size should be chosen closer to (1-2 times) the SIMD hardware's width to benefit from physical registers of CPU core. If simd width is chosen too high, then physical registers of CPU are not enough and L1 cache is used instead. L1 cache has higher latency and lower bandwidth than registers.

Loop Structure

SIMD hardware of CPU core does not have independent lanes. Each lane is locked to all other lanes in same SIMD unit and all do same computation(instruction) on different data. Due to this work-flow, they have limited looping capability.

Fixed Number Of Iterations

When all work-items in same simd group are required to iterate same number of times at the same time, simple loops can be used:

for(int i=0;i<100;i++)

{

a.add(1.0f,a);

b.mul(1.1f,b);

a.fusedMultiplyAdd(b,result,result); }

This is only for per-work-group. Different groups can iterate different number of times. The first SIMD number of work-items can iterate for 100 times and next group can iterate for 5 times without any problem since all work groups are independent (they are launched one after another).

Variable Number of Iterations

When each work-item is required to follow a different number of iterations, then all work-items in same SIMD groups have to follow same number of iterations, with masked operations. Masking allows a loop-breaked work-item to continue looping without changing its last state such as the result of algorithm:

bool anyWorkRemaining = true;

auto k = factory.template generate<int>(0);

auto mask = factory.template generate<int>();

auto somethingToCompute = factory.template generate<float>();

const int N = 100;

while(anyWorkRemaining)

{

k.lessThan(N,mask); anyWorkRemaining = mask.isAnyTrue();

mask.ternary(newValueComputed,somethingToCompute,somethingToCompute);

k.add(variableIterationAmount,k);

}

bool anyWorkRemaining = true;

Parallel Factorial Sample

Compilers are generally smart and can (horizontally)parallelize even a factorial function like this:

template<typename Type>

Type factorial(Type input)

{

Type result = input--;

if(input+1 == 0)

return 1;

while(input>0)

{

result *= input--;

}

return result>0?result:1;

}

Entrance part of the produced instruction is:

vpbroadcastq zmm0, rax

mov rdi, r8

vpaddq zmm0, zmm0, ZMMWORD PTR .LC9[rip]

vpbroadcastq zmm1, QWORD PTR .LC2[rip]

shr rdi, 3

xor edx, edx

.L26:

vmovdqa64 zmm2, zmm0

inc rdx

vpmullq zmm1, zmm1, zmm2

vpaddq zmm0, zmm0, ZMMWORD PTR .LC10[rip]

cmp rdx, rdi

jne .L26

vextracti64x4 ymm0, zmm1, 0x1

vpmullq ymm1, ymm0, ymm1

vmovdqa xmm0, xmm1

vextracti64x2 xmm1, ymm1, 0x1

vpmullq xmm0, xmm0, xmm1

vpsrldq xmm1, xmm0, 8

vpmullq xmm0, xmm0, xmm1

vmovq rdx, xmm0

imul rax, rdx

mov rdx, r8

and edx, 7

and r8d, 7

je .L27

After this optimized part, it continues computation on scalar version:

.L25:

mov rdi, rdx

imul rax, rdx

dec rdi

je .L27

imul rax, rdi

mov rdi, rdx

sub rdi, 2

je .L27

repeats this many times

Scalar factorial applied on an array of elements(of type uint64_t) with value 10+k%3 runs at 24 cycles per element speed and is already partially vectorized on multiplications by the compiler (Cascadelake AVX512) as shown above. 24 cycle operation translates to 8 nanoseconds for the server CPU of godbolt.org. Considering that the RAM latency is included in 24 cycles, it is fairly fast (on GCC v11).

Benchmarking program for scalar version:

#include <algorithm>

#include <cstdint>

#include"VectorizedKernel.h"

#ifdef _MSC_VER

# include <intrin.h>

#else

# include <x86intrin.h>

#endif

inline

uint64_t readTSC() {

uint64_t tsc = __rdtsc();

return tsc;

}

template<typename Type>

Type factorial(Type input)

{

Type result = input--;

if(input+1 <= 1)

return 1;

while(input>0)

{

result *= input--;

}

return result>0?result:1;

}

constexpr int N = 1000000;

int main() {

std::vector<uint64_t> input(N);

std::vector<uint64_t> output(N);

for(int k=0;k<N;k++)

{

input[k]=10+k%3

}

for(int i = 0;i<100;i++)

{

auto t1 = readTSC();

for(int k=0;k<N;k++)

{

output[k] = factorial(input[k]);

}

auto t2 = readTSC();

std::cout << (t2 - t1)/(N) << " cycles per factorial" << std::endl;

}

for(int i=0;i<20;i++)

{

std::cout<<output[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

Vectorized kernel version:

With vectorization on elements instead of multiplications, AVX512 can complete it quicker and requires the parallel while-loop. Compiler with AVX512 flags enabled for Cascadelake CPU completes same 1 million element process at 12 cycles per element.

constexpr int simd = 32;

using maskType = uint64_t;

using dataType = uint64_t;

auto kernel = Vectorization::createKernel<simd>(

[&](auto & factory, auto & id, dataType * inputBuffer, dataType * outputBuffer){

auto result = factory.template generate<dataType>();

auto input = factory.template generate<dataType>();

input.readFromContiguous(inputBuffer,id);

result.readFrom(input);

input.sub(1,input);

auto maskEarlyQuit =

factory.template generate<maskType>(1); auto inputPlus1 = factory.template generate<dataType>();

input.add(1,inputPlus1);

inputPlus1.greaterThan(1,maskEarlyQuit);

maskEarlyQuit.ternary(result,(dataType)1,result);

maskEarlyQuit.ternary(input,(dataType)1,input);

auto maskedResult = factory.template generate<dataType>();

auto maskedInput = factory.template generate<dataType>();

bool workInProgress = true;

auto mask =

factory.template generate<maskType>(1); const dataType one = 1;

const maskType zero = 1;

while(workInProgress)

{

result.mul(input,maskedResult);

input.sub(one,maskedInput);

maskedInput.greaterThan(zero,mask);

mask.bitwiseAnd(maskEarlyQuit,mask);

workInProgress = mask.isAnyTrue();

mask.ternary(maskedResult,result,result);

mask.ternary(maskedInput,input,input);

}

auto gt = factory.template generate<maskType>();

result.greaterThan(0,gt);

gt.ternary(result,(dataType)1,result);

result.writeToContiguous(outputBuffer,id);

}, Vectorization::KernelArgs<dataType *, dataType *>{}

);

Benchmark program for vectorized version:

#include <algorithm>

#include <cstdint>

#ifdef _MSC_VER

# include <intrin.h>

#else

# include <x86intrin.h>

#endif

inline

uint64_t readTSC() {

uint64_t tsc = __rdtsc();

return tsc;

}

template<typename Type>

Type factorial(Type input)

{

Type result = input--;

if(input+1 <= 1)

return 1;

while(input>0)

{

result *= input--;

}

return result>0?result:1;

}

#include"VectorizedKernel.h"

constexpr int N = 1000000;

int main() {

constexpr int simd = 4;

using maskType = uint64_t;

using dataType = uint64_t;

auto kernel = Vectorization::createKernel<simd>(

[&](auto & factory, auto & id, dataType * inputBuffer, dataType * outputBuffer){

auto result = factory.template generate<dataType>();

auto input = factory.template generate<dataType>();

input.readFromContiguous(inputBuffer,id);

result.readFrom(input);

input.sub(1,input);

auto maskEarlyQuit =

factory.template generate<maskType>(1); auto inputPlus1 = factory.template generate<dataType>();

input.add(1,inputPlus1);

inputPlus1.greaterThan(1,maskEarlyQuit);

maskEarlyQuit.ternary(result,(dataType)1,result);

maskEarlyQuit.ternary(input,(dataType)1,input);

auto maskedResult = factory.template generate<dataType>();

auto maskedInput = factory.template generate<dataType>();

bool workInProgress = true;

auto mask = factory.template generate<maskType>(1); const dataType one = 1;

const maskType zero = 1;

while(workInProgress)

{

result.mul(input,maskedResult);

input.sub(one,maskedInput);

maskedInput.greaterThan(zero,mask);

mask.bitwiseAnd(maskEarlyQuit,mask);

workInProgress = mask.isAnyTrue();

mask.ternary(maskedResult,result,result);

mask.ternary(maskedInput,input,input);

}

auto gt = factory.template generate<maskType>();

result.greaterThan(0,gt);

gt.ternary(result,(dataType)1,result);

result.writeToContiguous(outputBuffer,id);

}, Vectorization::KernelArgs<dataType *, dataType *>{});

std::vector<dataType> input(N);

std::vector<dataType> output(N);

for(int k=0;k<N;k++)

{

input[k]=k%100;

}

for(int i = 0;i<101;i++)

{

if(i%2==0)

{

auto t1 = readTSC();

kernel.run(N,input.data(),output.data());

auto t2 = readTSC();

std::cout << (t2 - t1)/(N) << " cycles per factorial (vectorized)" << std::endl;

}

else

{

auto t1 = readTSC();

for(int k=0;k<N;k++)

{

output[k] = factorial(input[k]);

}

auto t2 = readTSC();

std::cout << (t2 - t1)/(N) << " cycles per factorial (scalar)" << std::endl;

}

}

for(int i=0;i<20;i++)

{

std::cout<<output[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

Even with the extra masking operations in the while-loop, it surpasses the performance of scalar(but AVX512 optimized) version. While AVX512 CPUs with two AVX512 units per core have enough performance for the naive factorial loop vectorization, AVX2 and earlier architectures lack the necessary SIMD width to compensate for the high-ratio masking operations, hence the naive loop vectorization has same performance on AVX2 and is slower on SSE. There are multiple ways to lose to a smart compiler and this is one of them, unless CPU has something better to offer that compiler does not take into consideration (yet).

Mandelbrot Set Generation

Mandelbrot set generation using complex numbers is something compiler cannot predict as efficient as the factorial function even with the similar simplicity:

int getPoint(int a, int b)

{

float x = static_cast<float>(b);

float y = static_cast<float>(a);

x = (x - width / 2 - width/4)/(width/3);

y = (height / 2 - y)/(width/3);

complex<float> c (x,y);

complex <float> z(0, 0);

size_t iter = 0;

while (abs(z) < 2 && iter <= 35)

{

z = z * z + c;

iter++;

}

if (iter < 34) return iter*255/33;

else return 0;

}

Compiler-produced instructions are 90% scalar (except some memory moves) and the computation performance is 1043 cycles per pixel on Cascadelake (AVX512) CPU, because compiler is unable to unroll the unknown pattern of data. To let it know what's happening, some of the standard structs have to be expanded. For example, std::complex and std::abs are not optimized out by compiler by default. Due to this reason, three different versions of naive scalar versions were tested:

- simple naive version in code block above

std::complex expanded explicitly into two floats, without using square root for the abs calculationstd::complex expanded again but with square root

Benchmark results show that the naive version can be optimized highly by simple changes in the code.

Performance (cycles per pixel)

Compiler CPU std::complex c; float creal,cimag; + std::sqrt

gcc 10 Fx-8150 1770 175 215

gcc 11 Xeon-8275CL 1075 91 111

clang 9 Xeon-8275CL 252(average 500+) 97 97

clang 14 Xeon-8275CL 255(average 500+) 77 79

icc 2021.5 Xeon-8275CL 800+ 66 70

icx 2022 Xeon-8275CL 800+ 79 91

msvc 19 Xeon-8275CL 490 71 137

The largest speedup was seen when std::complex was expanded into two floats (real component and imaginary component) and explicitly computed in complex multiplication. This helped the compiler see optimizations that weren't visible with std::complex. Then to test if it was the square root in abs function, it was added back to the loop condition computation and it marginally increased timings.

With plain simple naive version, msvc-19 performed best, at 490 cycles per pixel while clang occasionally achieved 252 cycles per cycle. When std::complex was explicitly computed by two floats, icc performed best. When square root calculation was added back explicitly, winner was icc again.

Even though GCC (the default compiler used in benchmarking in this project) was the worst in unoptimized code, it did perform best in the case of auto-vectorization on the KernelData objects that are very small arrays that have just enough elements to be replaced by a single AVX or AVX512 instruction.

It is unfair to compare an unoptimized naive version against fully optimized vectorized version of code.

Real speedup for FX8150 is from 175 cycles per pixel to 80 cycles per pixel (2.1x performance) because the compiler is able to do "horizontal" parallelization that work on pixel one at a time but computing faster in while-loop depth per pixel. Nevertheless, vertical parallelization is better than horizontal parallelization for some algorithms and Mandelbrot-Set generation looks like one of them.

Real speedup for Cascadelake CPU is from 91 cycles per pixel to 11 cycles per pixel (~8x). Since the depth of while-loop is limited (35) and the AVX512 is 2x the width of AVX2 and there are 2x FMA units in the core, there is a substantial speedup against the horizontal vectorization of compiler.

Test code for three naive scalar versions:

#include <vector>

#include <stdint.h>

#include <iostream>

#include <sstream>

#include <fstream>

#include <complex>

#ifdef _MSC_VER

# include <intrin.h>

#else

# include <x86intrin.h>

#endif

inline

uint64_t readTSC() {

uint64_t tsc = __rdtsc();

return tsc;

}

constexpr int width = 2000;

constexpr int height = 2000;

unsigned char getPoint(int a, int b)

{

float x = static_cast<float>(b);

float y = static_cast<float>(a);

x = (x - width / 2.0f - width/4.0f)/(width/3.0f);

y = (height / 2.0f - y)/(width/3.0f);

std::complex<float> c (x,y);

std::complex <float> z(0, 0);

size_t iter = 0;

while (std::abs(z) < 2 && iter <= 35)

{

z = z * z + c;

iter++;

}

if (iter < 34) return iter*255/33;

else return 0;

}

unsigned char getPointExplicit(int a, int b)

{

float x = static_cast<float>(b);

float y = static_cast<float>(a);

x = (x - width / 2.0f - width/4.0f)/(width/3.0f);

y = (height / 2.0f - y)/(width/3.0f);

float creal = x;

float cimag = y;

float zreal = 0;

float zimag = 0;

size_t iter = 0;

while ((zreal*zreal + zimag*zimag) < 4 && iter <= 35)

{

float znewreal = zreal*zreal - zimag*zimag + creal;

float znewimag = 2.0f*zreal*zimag + cimag;

zreal=znewreal;

zimag=znewimag;

iter++;

}

if (iter < 34) return iter*255/33;

else return 0;

}

unsigned char getPointExplicitWithSqrt(int a, int b)

{

float x = static_cast<float>(b);

float y = static_cast<float>(a);

x = (x - width / 2.0f - width/4.0f)/(width/3.0f);

y = (height / 2.0f - y)/(width/3.0f);

float creal = x;

float cimag = y;

float zreal = 0;

float zimag = 0;

size_t iter = 0;

while (std::sqrt(zreal*zreal + zimag*zimag) < 2 && iter <= 35)

{

float znewreal = zreal*zreal - zimag*zimag + creal;

float znewimag = 2.0f*zreal*zimag + cimag;

zreal=znewreal;

zimag=znewimag;

iter++;

}

if (iter < 34) return iter*255/33;

else return 0;

}

void createImage()

{

std::vector<unsigned char> image(width*height);

for(int k=0;k<20;k++)

{

auto t1 = readTSC();

for (size_t i = 0; i < height; i++)

{

for (size_t j = 0; j < width; j++)

{

image[j+i*width]= getPointExplicit(i, j);

}

}

auto t2 = readTSC();

std::cout<<(t2-t1)/(width*height)<<" cycles per pixel"<<std::endl;

}

std::ofstream fout;

fout.open("mandelbrot.ppm");

if (fout.is_open()) {

fout << "P5\n" << width << ' ' << height << " 255\n";

for(int i=0;i<width*height;i++)

{

fout << image[i];

}

fout.close();

} else {

std::cout << "Could not open the file!\n";

}

}

int main()

{

createImage();

return 0;

}

Following code sample is the vectorized kernel for the same Mandelbrot Set generation (without square root):

- vertically parallelized, 16-32 pixels are computed at once

- memory is accessed for 16-32 pixels at once

- fused-multiply-add method is used for fast a*b+c computation

- masking operand types are chosen as

int to be same width as float (4 bytes) as this may help on compiler to generate efficient CPU instructions without converting them to/from char values

#include <algorithm>

#include <cstdint>

#include <fstream>

#include <iostream>

#include "VectorizedKernel.h"

constexpr int width = 2000;

constexpr int height = 2000;

constexpr int simd = 64;

void Mandelbrot(std::vector<char> & image) {

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, char * img){

const int currentSimdWidth = factory.width;

auto j = factory.template generate<int>();

idThread.modulus(width, j);

auto i = factory.template generate<int>();

idThread.div(width, i);

auto x0 = factory.template generate<float>();

j.template castAndCopyTo<float>(x0);

auto y0 = factory.template generate<float>();

i.template castAndCopyTo<float>(y0);

const auto heightDiv2 = factory.template generate<float>(height/2.0f);

auto x = factory.template generate<float>();

x0.sub(width/2.0f, x0);

x0.sub(width/4.0f, x0);

x0.div(width/3.0f,x);

auto y = factory.template generate<float>();

heightDiv2.sub(y0,y0);

y0.div(width/3.0f, y);

const auto imagc = factory.template generate<float>(y);

const auto realc = factory.template generate<float>(x);

auto imagz = factory.template generate<float>(0);

auto realz = factory.template generate<float>(0);

bool anyTrue = true;

auto iteration = factory.template generate<int>(0);

auto imagzSquared = factory.template generate<float>();

auto absLessThan2 = factory.template generate<int>();

auto tmp1 = factory.template generate<float>();

auto whileLoopCondition = factory.template generate<int>();

auto tmp2 = factory.template generate<int>();

auto zzReal = factory.template generate<float>();

auto zzImag = factory.template generate<float>();

auto tmp3 = factory.template generate<float>();

auto tmpAdd1 = factory.template generate<float>();

auto tmpAdd2 = factory.template generate<float>();

auto tmp4 = factory.template generate<int>();

while(anyTrue)

{

imagz.mul(imagz, imagzSquared);

realz.fusedMultiplyAdd(realz,imagzSquared,tmp1);

tmp1.lessThan(4.0f, absLessThan2);

iteration.lessThanOrEquals(35, tmp2);

absLessThan2.logicalAnd(tmp2, whileLoopCondition);

anyTrue = whileLoopCondition.isAnyTrue();

realz.fusedMultiplySub(realz,imagzSquared, zzReal);

imagz.mul(realz, tmp3);

realz.fusedMultiplyAdd(imagz,tmp3, zzImag);

zzReal.add(realc, tmpAdd1);

zzImag.add(imagc, tmpAdd2);

whileLoopCondition.ternary(tmpAdd1, realz, realz);

whileLoopCondition.ternary(tmpAdd2, imagz, imagz);

whileLoopCondition.ternary(1,0, tmp4);

iteration.add(tmp4, iteration);

}

const auto thirtyFour = factory.template generate<int>(34);

auto ifLessThanThirtyFour = factory.template generate<int>();

iteration.lessThan(thirtyFour, ifLessThanThirtyFour);

auto conditionalValue1 = factory.template generate<int>();

iteration.mul(255, conditionalValue1);

conditionalValue1.div(33, conditionalValue1);

auto conditionalValue2 = factory.template generate<int>(0);

auto returnValue = factory.template generate<int>(0);

ifLessThanThirtyFour.ternary

(conditionalValue1, conditionalValue2, returnValue);

auto tmp5 = factory.template generate<int>();

i.mul(width, tmp5);

auto writeAddr = factory.template generate<int>(0);

j.add(tmp5, writeAddr);

auto returnValueChar = factory.template generate<char>(0);

returnValue.template castAndCopyTo<char>(returnValueChar);

returnValueChar.writeTo(img,writeAddr);

},Vectorization::KernelArgs<char*>{});

if(image.size()<width*height)

image.resize(width*height);

kernel.run(width*height,image.data());

}

#ifdef _MSC_VER

# include <intrin.h>

#else

# include <x86intrin.h>

#endif

inline

uint64_t readTSC() {

uint64_t tsc = __rdtsc();

return tsc;

}

int main() {

std::vector<char> image;

for(int i = 0;i<100;i++)

{

auto t1 = readTSC();

Mandelbrot(image);

auto t2 = readTSC();

std::cout << (t2 - t1)/(width*height) << " cycles per pixel" << std::endl;

}

std::ofstream fout;

fout.open("mandelbrot.ppm");

if (fout.is_open()) {

fout << "P5\n" << width << ' ' << height << " 255\n";

for(int i=0;i<width*height;i++)

{

fout << image[i];

}

fout.close();

} else {

std::cout << "Could not open the file!\n";

}

return 0;

}

This time, there are more computations than masking operations and the vectorization more efficient than the factorial sample. On FX8150 3.6GHz CPU and GCC v10, it has performance of 80 cycles per pixel. On Cascadelake and GCC v10, it has 11 cycles per pixel. There is nearly 100x speedup on the Cascadelake against untouched naive code performance and about 8x against the optimized version of it.

Part of the performance comes from parallel memory I/O. All 64 work-items are computed in parallel, then all results are written to output buffer at once. Doing the writing one by one causes extra RAM or cache latency per operation. When all are written at once, some of the total latency is hidden. Then there is the SIMD-based computation speedup that is up to 16x. Cascadelake CPU of godbolt.org has dual AVX512 fma units per core. This makes 4 times speedup compared to single AVX unit of FX8150 and architectural differences (such as L1 bandwidth, instruction latency, total physical registers, ...) make the difference 8x even with lower CPU frequency.

Thanks to the GCC v10's efficient auto-vectorization, VectorizedKernel made 100% speedup even against std::valarray version that is on equally optimized code path.

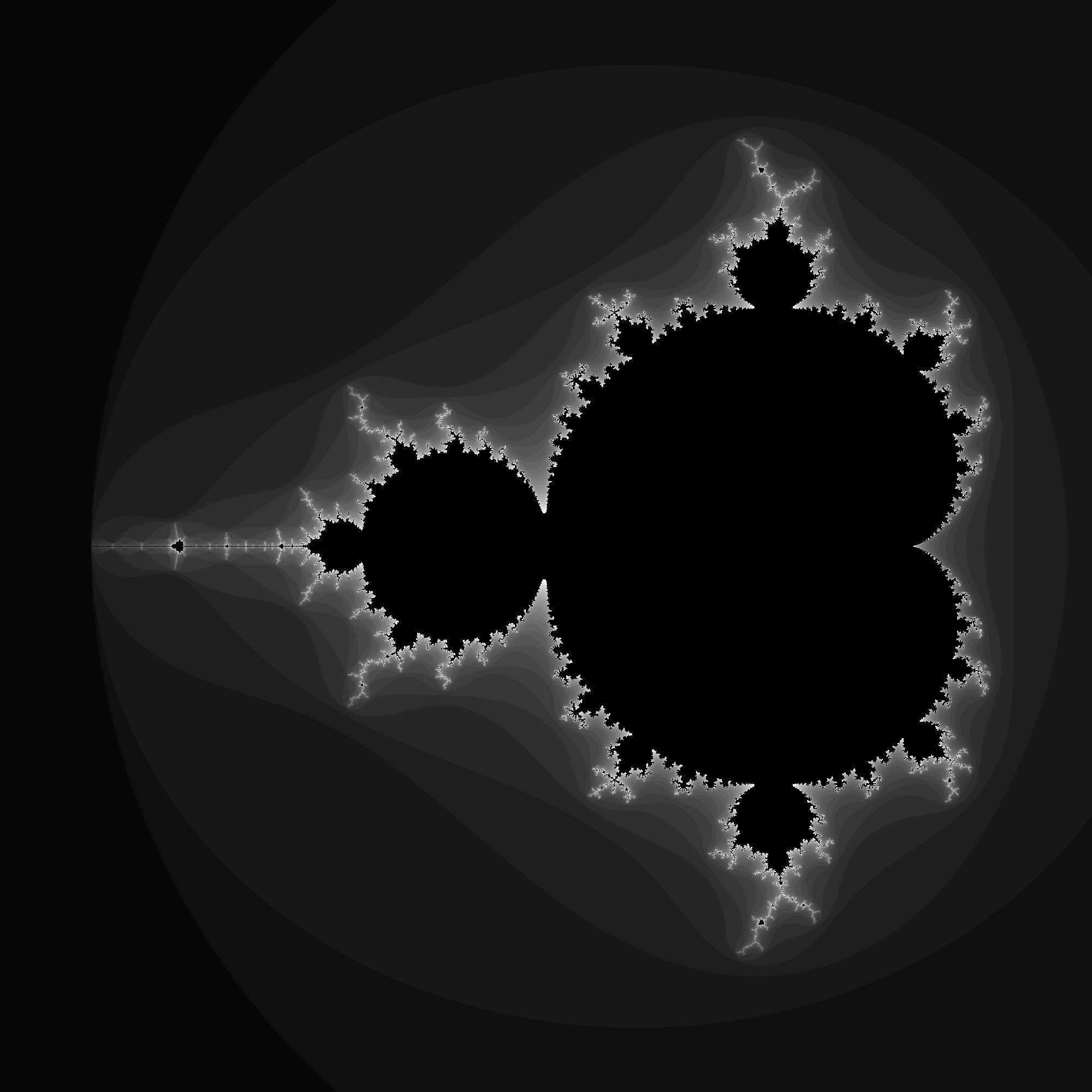

The program generates this image:

The image pattern mainly consists of uniform color distribution and this is good for scaling performance with SIMD usage. When image is zoomed, it may not scale as good as this. It depends on the brancing between neighboring pixels. The test program used scan-line computing. If it was tiled-computing, then it could have better scaling due to better packing of neighboring computations. When benchmarking the mandelbrot-set generation performance, the resulting image and the precision of calculations have to be same to have a fair comparison of different CPUs. There are also other factors such as AVX512 throttling issues with an unknown server-load. When another client is computing AVX512, current client's scalar code is throttled too. This is generally a bad side-effect for web servers but not for the high performance computing nor workstations. Xeon AVX512 core is similar to a mini GPU core (that has 192 pipelines at low frequency) that has free access to RAM.

Casting Variables To Different Types

Type conversion is supported by castAndCopyTo templated method. In the mandelbrot kernel, there are cast methods:

auto x0 = factory.template generate<float>(); j.template castAndCopyTo<float>(x0);

This converts all SIMD lane values to the explicitly called template type. When cast between integral variables and floating-point variables, this changes the bitwise representation of data. To keep bitwise representation same while changing the value, castBitwiseAndCopyTo method is used.

j.broadcast(100); auto x = factory.template generate<float>();

j.template castBitwiseAndCopyTo<float>(x);

Communication Between Lanes Of SIMD (So-called)Thread Group

There are also methods to broadcast value of a lane of a variable to all lanes of itself or to all lanes of another variable.

broadcast initializes all lanes to the same constant literal value.

auto x = factory.template generate<int>(0); x.broadcast(5);

broadcastFromLaneToVector copies a lane value to all lanes of a target variable:

#include <algorithm>

#include <iostream>

#include"VectorizedKernel.h"

constexpr int simd = 4;

int main() {

std::vector<int> data(500);

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

auto value = factory.template generate<int>();

idThread.broadcastFromLaneToVector(0,value);

value.writeTo(data,idThread);

},

Vectorization::KernelArgs<int*>{}

);

kernel.run(500,data.data());

for(int i=0;i<30;i++)

{

std::cout<<data[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

The output is as follows:

0 0 0 0 4 4 4 4 8 8 8 8 12 12 12 12 16 16 16 16 20 20 20 20 24 24 24 24 28 28

broadcastFromLane method copies a lane's value to all other lanes in same variable.

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>();

value.readFrom(idThread);

value.broadcastFromLane(0);

value.writeTo(data,idThread);

},

Vectorization::KernelArgs<int*>{}

);

gatherFromLane method does independent lane selection for each lane and copies values to result variable.

Left-shifting sample (works only for non-tail SIMD thread groups):

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>();

auto laneId = factory.template generate<int>();

idThread.modulus(currentSimdWidth, laneId);

laneId.add(1,laneId);

laneId.modulus(currentSimdWidth,laneId);

idThread.gatherFromLane(laneId,value);

value.writeTo(data,idThread);

},

Vectorization::KernelArgs<int*>{}

);

The output is as follows:

1 2 3 0 5 6 7 4 9 10 11 8 13 14 15 12 17 18 19 16 21 22 23 20 25 26 27 24 29 30

On Cascadelake CPU and simd=32 setting, each element is gathered from another lane and written to output at a speed of 3 cycles per element. On top of kernel launch latency, there are RAM access latency(partially hidden), two allocations for value and laneId variables, 2 modulus, 1 add, 1 gather and write operations, all completed in 3 cycles per element on average or 1 nanosecond (4GB/s, 200kB buffer).

Left-shifting lanes without gatherFromLane method can be made using lanesLeftShift:

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>(idThread);

auto value2 = factory.template generate<int>();

value.lanesLeftShift(10,value2);

value2.writeTo(data,idThread);

},

Vectorization::KernelArgs<int*>{}

);

Gather values from neighboring lanes (in same SIMD unit) makes it easier to calculate a reduction.

Reduction Sample

Naive and readable reduction algorithm is given as:

---64-element sum-reduction sample using 32 SIMD lanes---

Type data[64];

for(i = 32; i>0; i/=2)

{

if(current lane index < i)

{

data[current lane index] += data[current lane index + i];

}

}

data[0]---> has sum of all elements from 0 to 63

When there is an if, another mask is required for the instructions inside of its block. The += operator requires the masking:

Reduction In SIMD

Reduction within SIMD lanes uses only half of the lanes to add both halves then continues recursively by halving each new left half until first element is the only half part left.

constexpr int simd=64;

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>();

auto value2 = factory.template generate<int>();

auto value3 = factory.template generate<int>();

value.readFrom(data,idThread);

auto laneIndex = factory.template generate<int>();

auto laneIndexIncremented = factory.template generate<int>();

idThread.modulus(currentSimdWidth,laneIndex);

auto mask = factory.template generate<int>();

auto gatheredValue = factory.template generate<int>();

for(int i = 32; i>0; i/=2)

{

laneIndex.lessThan(i,mask);

laneIndex.add(i,laneIndexIncremented);

mask.ternary(laneIndexIncremented,laneIndex,laneIndexIncremented);

value.gatherFromLane(laneIndexIncremented,value2);

value.add(value2,value3);

mask.ternary(value3,value,value);

}

value.writeToMasked(data, idThread, mask);

},

Vectorization::KernelArgs<int*>{}

);

Reduction can also be run on a buffer to reduce twice number of elements:

Reduction On Buffer

constexpr int simd=64;

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>();

auto value2 = factory.template generate<int>();

auto value3 = factory.template generate<int>();

value.readFrom(data,idThread);

auto laneIndex = factory.template generate<int>();

auto laneIndexIncremented = factory.template generate<int>();

idThread.modulus(currentSimdWidth,laneIndex);

auto mask = factory.template generate<int>();

auto gatheredValue = factory.template generate<int>();

for(int i = 64; i>0; i/=2)

{

laneIndex.lessThan(i,mask);

laneIndex.add(i,laneIndexIncremented);

value.readFromMasked(data, laneIndex, mask);

value2.readFromMasked(data, laneIndexIncremented, mask);

value.add(value2,value3);

mask.ternary(value3,value,value);

value.writeToMasked(data,idThread,mask);

}

},

Vectorization::KernelArgs<int*>{}

);

The result has 8128 at position zero (sum of values 0,1,2,...127).

Since there is no overflowing in the buffer and the I/O is always contiguous, the masked read/write methods can be replaced with non-masked contiguous versions:

for(int i = 64; i>0; i/=2)

{

laneIndex.lessThan(i,mask);

laneIndex.add(i,laneIndexIncremented);

value.readFromContiguous(data, laneIndex);

value2.readFromContiguous(data, laneIndexIncremented);

value.add(value2,value3);

mask.ternary(value3,value,value);

value.writeToContiguous(data,idThread);

}

This is faster to run and completes every 128-element chunk in 1248 cycles on average (~3 nanoseconds per element), for Cascadelake CPU. This is the cost of 7 variable allocations, 6 loop iterations and 7 operations per iteration. This sample has low compute-to-data ratio (especially with allocations).

When only the reduction loop is measured:

#include <algorithm>

#include <iostream>

#include"VectorizedKernel.h"

constexpr int simd = 64;

#ifdef _MSC_VER

# include <intrin.h>

#else

# include <x86intrin.h>

#endif

inline

uint64_t readTSC() {

uint64_t tsc = __rdtsc();

return tsc;

}

int main() {

std::vector<int> data(512);

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data){

const int currentSimdWidth = factory.width;

auto value = factory.template generate<int>();

auto value2 = factory.template generate<int>();

auto value3 = factory.template generate<int>();

value.readFrom(data,idThread);

auto laneIndex = factory.template generate<int>();

auto laneIndexIncremented = factory.template generate<int>();

idThread.modulus(currentSimdWidth,laneIndex);

auto mask = factory.template generate<int>();

auto gatheredValue = factory.template generate<int>();

auto t1 = readTSC();

for(int i = 64; i>0; i/=2)

{

laneIndex.lessThan(i,mask);

laneIndex.add(i,laneIndexIncremented);

value.readFromContiguous(data, laneIndex);

value2.readFromContiguous(data, laneIndexIncremented);

value.add(value2,value3);

mask.ternary(value3,value,value);

value.writeToContiguous(data,idThread);

}

auto t2 = readTSC();

std::cout<<t2-t1<<" cycles per 128-element reduction"<<std::endl;

},

Vectorization::KernelArgs<int*>{}

);

for(int i=0;i<512;i++)

{

data[i]=i;

}

kernel.run(500,data.data());

for(int i=0;i<35;i++)

{

std::cout<<data[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

it is 198 cycles per reduction on FX8150 core and 28 cycles for Cascadelake (Godbolt.org server). Actual cycles are lower because readTSC functions (that are call twice) have their own latency too.

28 cycles for 6 loop iterations is equivalent to 0.67 cycles per method call. Since Cascadelake server is generally running 2.5GHz or 3.0Ghz, it is ~0.2 nanoseconds per method call on average (each method computes simd-number-of elements and roughly compares to 300 elements per nanosecond / 1.2 TB/s). The only issue with the sample is the allocation overhead of variables and its readability compared to real CUDA/OpenCL kernel code.

28 cycles for 128 value-reduction means less than 0.1 nanosecond per element and this is a naive reduction algorithm that is not work-efficient but is cache-friendly (6 steps for 128 elements work in L1 cache or registers depending on size&number of CPU physical registers).

8x8 Matrix Transposition Sample

Naive transposition algorithm applied on 100 matrices of sizes 8x8 on FX8150 CPU:

#include <algorithm>

#include <iostream>

#include"VectorizedKernel.h"

constexpr int simd = 8;

int main() {

constexpr int N = 100;

std::vector<int> data(simd*simd*N);

std::vector<int> dataOut(simd*simd*N);

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data, int * dataOut){

const int currentSimdWidth = factory.width; auto value = factory.template generate<int>();

auto indexRead = factory.template generate<int>(idThread);

auto indexWrite = factory.template generate<int>();

auto column = factory.template generate<int>();

auto row = factory.template generate<int>();

Vectorization::Bench bench(0);

for(int i=0;i<N;i++)

{

for(int j=0;j<currentSimdWidth;j++)

{

idThread.add(j*currentSimdWidth,indexRead);

value.readFrom(data+i*currentSimdWidth*currentSimdWidth,indexRead);

indexRead.div(currentSimdWidth,row);

indexRead.modulus(currentSimdWidth,column);

column.mul(currentSimdWidth,indexWrite);

indexWrite.add(row,indexWrite);

value.writeTo(dataOut+i*currentSimdWidth*currentSimdWidth,indexWrite);

}

}

},

Vectorization::KernelArgs<int*,int*>{}

);

for(int i=0;i<simd*simd*N;i++)

{

data[i]=i;

}

kernel.run(simd,data.data(),dataOut.data());

kernel.run(simd,data.data(),dataOut.data());

kernel.run(simd,data.data(),dataOut.data());

for(int i=0;i<100;i++)

{

std::cout<<dataOut[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

The output is as follows:

1.569e-05 seconds (for 100 matrices)

1.6099e-05 seconds (for 100 matrices)

1.6281e-05 seconds (for 100 matrices)

0 8 16 24 32 40 48 56 1 9 17 25 33 41 49 57 2 10 18 26 34 42 50 58 3 11 19

27 35 43 51 59 4 12 20 28 36 44 52 60 5 13 21 29 37 45 53 61 6 14 22 30 38

46 54 62 7 15 23 31 39 47 55 63 64 72 80 88 96 104 112 120 65 73 81 89 97

105 113 121 66 74 82 90 98 106 114 122 67 75 83 91 99 107 115 123 68 76 84 92

Each element of matrices moved for transposition at 2.5 nanoseconds on average (~1.6 GB/s). Naive solution for a linear algebra problem is not always fast. For Cascadelake CPU with simd=32 (to transpose 32x32 matrices), it is 1.1 nanoseconds (~3.9 GB/s).

Optimized codes should make the compiler produce SIMD shuffle instructions. gatherFromLane is a way of generating shuffle commands.

Slightly optimized version that does not use gatherFromLane but stores all matrix elements in single simd variable:

#include <algorithm>

#include <iostream>

#include"VectorizedKernel.h"

constexpr int matrixWidth = 2;

constexpr int simd = matrixWidth*matrixWidth;

int main() {

constexpr int N = 1000;

std::vector<int> data(simd*N);

std::vector<int> dataOut(simd*N);

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data, int * dataOut){

const int currentSimdWidth = factory.width; auto value = factory.template generate<int>();

auto indexRead = factory.template generate<int>(idThread);

auto indexWrite = factory.template generate<int>();

auto column = factory.template generate<int>();

auto row = factory.template generate<int>();

indexRead.div(matrixWidth,row);

indexRead.modulus(matrixWidth,column);

column.mul(matrixWidth,indexWrite);

indexWrite.add(row,indexWrite);

Vectorization::Bench bench(0);

for(int i=0;i<N;i++)

{

value.readFrom(data+i*currentSimdWidth,indexRead);

value.writeTo(dataOut+i*currentSimdWidth,indexWrite);

}

},

Vectorization::KernelArgs<int*,int*>{}

);

for(int i=0;i<simd*N;i++)

{

data[i]=i;

}

kernel.run(simd,data.data(),dataOut.data());

kernel.run(simd,data.data(),dataOut.data());

kernel.run(simd,data.data(),dataOut.data());

for(int i=0;i<100;i++)

{

std::cout<<dataOut[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

The output on FX8150 is as below:

3.155e-06 seconds

3.002e-06 seconds

2.826e-06 seconds

0 2 1 3 4 6 5 7 8 10 9 11 12 14 13 15 16 18 17 19 20 22 21 23 24 26 25 27

28 30 29 31 32 34 33 35 36 38 37 39 40 42 41 43 44 46 45 47 48 50 49 51 52

54 53 55 56 58 57 59 60 62 61 63 64 66 65 67 68 70 69 71 72 74 73 75 76 78

77 79 80 82 81 83 84 86 85 87 88 90 89 91 92 94 93 95 96 98 97 99

It is 0.7 nanoseconds per matrix element (5.7 GB/s). On Cascadelake CPU with simd=16 (matrixWidth=4), it is 0.11 nanoseconds per matrix element (36GB/s).

Second optimization with gatherFromLane:

#include <algorithm>

#include <iostream>

#include"VectorizedKernel.h"

constexpr int matrixWidth = 2;

constexpr int simd = matrixWidth*matrixWidth;

int main() {

constexpr int N = 1000;

std::vector<int> data(simd*N+64);

std::vector<int> dataOut(simd*N+64);

int * alignedInput = data.data();

int * alignedOutput = dataOut.data();

while(((size_t) alignedInput) % 64 !=0)

alignedInput++;

while(((size_t) alignedOutput) % 64 !=0)

alignedOutput++;

auto kernel = Vectorization::createKernel<simd>([&]

(auto & factory, auto & idThread, int * data, int * dataOut){

const int currentSimdWidth = factory.width; auto valueIn = factory.template generate<int>();

auto valueOut = factory.template generate<int>();

auto indexRead = factory.template generate<int>(idThread);

auto indexWrite = factory.template generate<int>();

auto column = factory.template generate<int>();

auto row = factory.template generate<int>();

indexRead.div(matrixWidth,row);

indexRead.modulus(matrixWidth,column);

column.mul(matrixWidth,indexWrite);

indexWrite.add(row,indexWrite);

{

Vectorization::Bench bench(0);

for(int i=0;i<N;i++)

{

valueIn.readFrom(data+i*simd);

valueIn.gatherFromLane(indexWrite,valueOut);

valueOut.writeTo(dataOut+i*simd);

}

}

},

Vectorization::KernelArgs<int*,int*>{}

);

for(int i=0;i<simd*N;i++)

{

alignedInput[i]=i;

}

for(int i=0;i<100000;i++)

kernel.run(simd,alignedInput,alignedOutput);

for(int i=0;i<100;i++)

{

std::cout<<alignedOutput[i]<<" ";

}

std::cout<<std::endl;

return 0;

}

- FX8150 (

simd=4): 0.28 nanoseconds per element (14 GB/s) - Cascadelake (

simd=4): 0.098 nanoseconds per element (40 GB/s) - Cascadelake (

simd=16): 0.094 nanoseconds per element (42 GB/s)

Matrix transposition is memory bandwidth bottlenecked and simple C++ scalar version can easily reach the RAM bandwidth bottleneck. Cascadelake L2-to-L1 cache bandwidth is 64 bytes per cycle. At 3GHz, it means 192 GB/s. The gatherFromLane method call is reducing the 192GB/s bandwidth to 42 GB/s. gatherFromLane produces "vpermd" instruction for Cascadelake CPU and it has 3 cycles latency (taken from instruction tables). Gathering 16 lane values (permuting for the instruction) taking 3 cycles is equivalent to ~5 integers/floats per cycle. This is 15 giga integers/floats at 3GHz or 45 GB/s bandwidth and is close to the measured 42 GB/s. transposeLanes method is slightly more efficient for the same task because the pattern of transposition is known in compile-time and produces one less mov command in the loop. Apparently, having two AVX512 FMA units does not help memory-only tasks. To be able to fully use FMA units during a matrix-matrix multiplication, multiple sub-matrices must be stored in AVX512 registers and selected by permutation or shuffling the register then computed using fusedMultiplyAdd or fusedMultiplySub methods.

Matrix Multiplication Sample

Naive version of matrix-matrix multiplication is straightforward to implement.

#include "VectorizedKernel.h"

#include<vector>

int main()

{

constexpr int simd = 8;

constexpr int matrixSize = 512;

auto kernelMatrixMultiplication = Vectorization::createKernel<simd>

([&](auto & factory, auto & idThread, float * bufferIn, float * bufferOut){

const int currentSimdWidth = factory.width;

auto ROW = factory.template generate<int>();

idThread.div(matrixSize, ROW);

auto COL = factory.template generate<int>();

idThread.modulus(matrixSize, COL);

auto tmpSum = factory.template generate<float>(0.0f);

auto A = factory.template generate<float>(0.0f);

auto B = factory.template generate<float>(0.0f);

auto AmulB = factory.template generate<float>(0.0f);

auto indexA = factory.template generate<int>(0);

auto indexB = factory.template generate<int>(0);

for(int i=0;i<matrixSize;i++)

{

ROW.mul(matrixSize, indexA);

indexA.add(i,indexA);

COL.add(i*matrixSize, indexB);

A.readFrom(bufferIn, indexA); B.readFrom(bufferIn, indexB);

A.mul(B,AmulB);

tmpSum.add(AmulB, tmpSum);

}

auto writeC = factory.template generate<int>();

ROW.mul(matrixSize, writeC);

writeC.add(COL, writeC);

tmpSum.writeTo(bufferOut,writeC);

},Vectorization::KernelArgs<float*,float*>{});

for(int j=0;j<25;j++)

{

std::vector<float> test1(matrixSize*matrixSize),test2(matrixSize*matrixSize);

for(int i=0;i<matrixSize*matrixSize;i++)

{

test1[i]=2.0f;

}

std::cout<< "matrix multiplication

("<<matrixSize*(size_t)matrixSize*matrixSize*2<<" operations total) ";

{

Vectorization::Bench bench(0);

kernelMatrixMultiplication.run

(matrixSize * matrixSize,test1.data(),test2.data());

}

for(int i=0;i<3;i++)

{

std::cout<<test2[i]<<" ";

}

std::cout<<std::endl;

}

return 0;

}

On Cascadelake CPU (simd=32), it takes 0.097 seconds (0.133 for FX8150 simd=8) to complete 268 million flops. 2.7 GFLOPs shows efficiency of strided reads (with stride = multiple rows of big matrix) and the lack of caching. Since there is no sub-matrix optimization, it doesn't even use L1 cache efficiently. To be able to use cache first, it can be made to do "tiled"/"sub matrix" computing.

Assuming that the second matrix is another matrix buffer and is already transposed, the data gathering part can be made fully contiguous and the mul&add methods can be joined together as a fused multiply add operation:

#include "VectorizedKernel.h"

#include<vector>

int main()

{

constexpr int simd = 4;

constexpr int matrixSize = 512;

auto kernelMatrixMultiplication = Vectorization::createKernel<simd>

([&](auto & factory, auto & idThread, float * bufferIn, float * bufferOut){

const int currentSimdWidth = factory.width;

auto ROW = factory.template generate<int>();

idThread.div(matrixSize, ROW);

auto COL = factory.template generate<int>();

idThread.modulus(matrixSize, COL);

auto tmpSum = factory.template generate<float>(0.0f);

auto A = factory.template generate<float>();

auto B = factory.template generate<float>();

auto AmulB = factory.template generate<float>();

auto indexA = factory.template generate<int>();

auto indexAmul = factory.template generate<int>();

auto indexB = factory.template generate<int>();

auto indexBmul = factory.template generate<int>();

ROW.mul(matrixSize, indexAmul);

COL.mul(matrixSize, indexBmul);

for(int i=0;i<matrixSize;i++)

{

indexAmul.add(i,indexA);

indexBmul.add(i,indexB);

A.readFrom(bufferIn, indexA); B.readFrom(bufferIn, indexB);

A.fusedMultiplyAdd(B,tmpSum,tmpSum);

}

auto writeC = factory.template generate<int>();

ROW.mul(matrixSize, writeC);

writeC.add(COL, writeC);

tmpSum.writeTo(bufferOut,writeC);

},Vectorization::KernelArgs<float*,float*>{});

for(int j=0;j<25;j++)

{

std::vector<float> test1(matrixSize*matrixSize),test2(matrixSize*matrixSize);

for(int i=0;i<matrixSize*matrixSize;i++)

{

test1[i]=2.0f;

}

std::cout<< "matrix multiplication

("<<matrixSize*(size_t)matrixSize*matrixSize*2<<" operations total) ";

{

Vectorization::Bench bench(0);

kernelMatrixMultiplication.run

(matrixSize * matrixSize,test1.data(),test2.data());

}

for(int i=0;i<3;i++)

{

std::cout<<test2[i]<<" ";

}

std::cout<<std::endl;

}

return 0;

}

Better memory access pattern leads to increased performances. FX8150 completes 512x512 multiplication in 0.053 seconds which is 5.1 GFLOPs. For every flops, 8 bytes are fetched from cache and 40GB/s bandwidth is achieved, without any explicit data re-use.

To experiment data re-use optimization, the following sample focuses on register-tiling using generateArray factory method to create arrays with vertical-memory-layout to achieve parallelism in simple array operations by vertical SIMD CPU instructions that are usually more efficient than horizontal versions (such as dot products):

#include "VectorizedKernel.h"

#include<vector>

int main()

{

constexpr int simd = 4;

constexpr int matrixWidth = 8;

constexpr int totalMatMatMultiplications = 10000;

constexpr int numMatrices = totalMatMatMultiplications*2;

constexpr int numElements = matrixWidth*matrixWidth*numMatrices;

size_t totalTime=0;

auto kernelMatrixMultiplication = Vectorization::createKernel<simd>

([&](auto & factory, auto & idThread, float * bufferIn, float * bufferOut){

auto privateMatrix1 = factory.template

generateArray<float,matrixWidth*matrixWidth>();

auto privateMatrix2 = factory.template

generateArray<float,matrixWidth*matrixWidth>();

auto privateMatrix3 = factory.template

generateArray<float,matrixWidth*matrixWidth>();

auto readIndexStart = factory.template generate<int>();

auto writeIndexStart = factory.template generate<int>();

auto readIndex = factory.template generate<int>();

auto writeIndex = factory.template generate<int>();

idThread.mul(matrixWidth*matrixWidth*2,readIndexStart);

idThread.mul(matrixWidth*matrixWidth,writeIndexStart);

for(int j=0;j<matrixWidth;j++)

{

for(int i=0;i<matrixWidth;i++)

{

readIndexStart.add(j*matrixWidth + i,readIndex);

privateMatrix1[i+j*matrixWidth].readFrom(bufferIn,readIndex);

privateMatrix2[i+j*matrixWidth].readFrom

(bufferIn+(matrixWidth*matrixWidth),readIndex);

}

}

size_t tmpTime;

{

Vectorization::Bench benchmark(&tmpTime);

for (int i = 0; i < matrixWidth; i++)

{

for (int j = 0; j < matrixWidth; j++)

{

privateMatrix1[i*matrixWidth].mul(privateMatrix2[j],

privateMatrix3[i*matrixWidth+j]);

for (int k = 1; k < matrixWidth; k++)

{

privateMatrix1[i*matrixWidth+k].fusedMultiplyAdd

(privateMatrix2[k*matrixWidth+j],

privateMatrix3[i*matrixWidth+j],privateMatrix3[i*matrixWidth+j]);

}

}

}

}

totalTime += tmpTime;

for(int j=0;j<matrixWidth;j++)

{

for(int i=0;i<matrixWidth;i++)

{

writeIndexStart.add(j*matrixWidth + i,writeIndex);

privateMatrix3[i+j*matrixWidth].writeTo(bufferOut,writeIndex);

}

}

},Vectorization::KernelArgs<float*,float*>{});

std::vector<float> test1(numElements),test2(numElements);

for(int i=0;i<numElements;i++)

test1[i]=i;

for(int i=0;i<100;i++)

{

totalTime=0;

kernelMatrixMultiplication.run(totalMatMatMultiplications,

test1.data(),test2.data());

std::cout<<"total time to do "<<totalMatMatMultiplications<<

" multiplications is "<<totalTime<<

" nanoseconds ("<<totalTime/((float)totalMatMatMultiplications)<<

" per multiplication)"<<

" ("<<1.0f/((totalTime/((float)totalMatMatMultiplications))/

(matrixWidth*(float)matrixWidth*matrixWidth*2.0))<<

" floating-point operations per nanosecond)"<<std::endl;

}

for(int i=0;i<15;i++)

std::cout<<test2[i]<<std::endl;

return 0;

}

Benchmarking was repeated for multiple simd - matrixWidth combinations:

Setting | 32-bit floating-point operations per nanosecond

simd matrixWidth | FX8150 Bulldozer Xeon 8275CL Cascadelake

1 1 | 0.04 0.10

1 2 | 0.32 0.76

1 4 | 2.03 3.28

1 8 | 3.47 16.25

2 1 | 0.08 0.18

2 2 | 0.61 1.45

2 4 | 3.12 4.41

2 8 | 3.98 14.65

4 1 | 0.17 0.4

4 2 | 1.14 2.67

4 4 | 6.73 9.14

4 8 | 14.42 30.12

8 1 | 0.33 1.0

8 2 | 2.67 5.33

8 4 | 10.67 24.81

8 5 | 14.40 11.33

8 6 | 16.84 58.31

8 7 | 17.59 !! 18.15

8 8 | 12.34 22.75

16 1 | 0.67 1.53

16 2 | 5.33 9.20

16 4 | 14.22 33.83

16 5 | 14.12 31.26

16 6 | 4.03 55.13

16 7 | 64.28 !!

16 8 | 4.12 35.31

1 16 | 2.87 21.06

2 16 | 5.51 8.50

4 16 | 7.55 14.76

8 16 | 5.33 16.31

16 16 | 3.63 31.50

32 2 | 8.00 12.65

2 32 | 2.18 5.06

32 32 | 3.75 19.85

32 4 | 23.22

32 5 | 47.65

32 6 | 42.45

32 7 | 39.02

32 8 | 25.24

64 4 | 35.04

64 5 | 28.11

64 6 | 22.18

Since Bulldozer architecture has only AVX-v1 and work like two SSE units (for single thread) joined together, best performance was achieved with simd=8 and matrixWidth=7. At 17.59 GFLOPs, it is 3x denser than naive loop (while only multiplying streaming matrices of sizes 7x7) and is 30% of theoretical peak performance (theoretical peak is 57.6 GFLOPS by using only FMA operations for equal number of additions and multiplications and no other operations to affect the pipeline).

Cascadelake architecture supports AVX512. This means, it has access to not only 32 architectural 512bit(64byte) registers but also 168 vector registers in renaming (as physical registers) and this lets it store more data before reaching L1 for re-using same data. Best performance was achieved for simd=16 and matrixWidth=7 setting. At 64.28 GFLOPs, it has 30% to 50% of the peak performance (depending on godbolt.org server's current load) of Cascadelake server-grade CPU that is shared between multiple clients. When an algorithm uses less register space than CPU supports, it can benefit more from pipelining and simd=32-64 settings depending on the pipelining efficiency.

In the last sample, factory.template generateArray<Type,NumElements>(); was used for simulating parallelized arrays. This has a vertical layout in memory where each element of a work-item has Simd stride between them. This lets the compiler parallelize the in-kernel array operations. Timing measurements of multiplications were made only around themselves and all I/O were excluded. To be able to use this performance, one needs to implement cache-tiling to feed the registers fast enough with new sub-matrices of a big matrix to be multiplied. Fastest matrix multiplications are only possible with register tiling, cache tiling and explicitly selecting efficient versions of CPU instructions by intrinsic functions. The implementation is not being developed with any intrinsics nor gnu language extensions. Maximum performance nearly always requires intrinsics (and experience on instruction tables) to be used. When they are not used, library is portable to any platform (with no define macro) as long as it has a C++ compiler that can auto-vectorize plain plain loops & plain arrays. Lastly, it will not require any code-rewrite other than just replacing simd=16 with simd=128 when an AVX-2048 is CPU is launched. The compiler will take care of all the plain loops iterating on plain arrays in each method of KernelData object.

How Fast are Sqrt and Other Math Functions?

Currently, the project only uses standard functions in auto-parallelized loops. Sqrt is one of the successfully auto-parallelized math functions, sinFast, cosFast, expFast are also added for less-accurate versions of sin, cos and exp functions with high speedup (see the last part in the article). There are also non-parallelized versions due to the standard implementation revealing no more than a function jump instruction. In future versions, Pow and Log methods will be given polynomial approximation versions with optimized coefficients (magic numbers found by genetic algorithm).

The best way to know performance is to benchmark. In below sample, Quake-3 (John Carmack) Fast Inverse Square Root is tested against plain 1.0f/std::sqrt(x) loop (which is effectively parallelized by compiler), VectorizedKernel version of inverse square root (computed by first division then square root, then a second version by a direct call to rsqrt) and VectorizedKernel version of Quake-3 Fast Inverse Square Root:

GCC v11, simd=8 for AVX, simd=16 for AVX512, n=20million

-------- Simple Loop ---------- ------- Kernel Version --------

(1/std::sqrt) Q_rsqrt Q_rsqrt (1/sqrt) rsqrt

FX8150 0.035 s 0.092 s 0.037 s 0.036 s 0.036 s

8275CL 0.012 s 0.014 s 0.013 s 0.012 s 0.012 s

CLANG v14(simd=16)

8275CL 0.016 s 0.014s 0.071 s 0.068 s 0.064 s

ICC 2021(simd=16)

8275CL 0.019 s 0.026 s 0.016 s 0.013 s 0.014 s

Since the simple 1/sqrt loop is auto-vectorized efficiently by compiler, it has equal performance to kernel version which does similar work-flow inside with the addition of kernel launch latency. Since ICC could not vectorize serial version efficiently, the kernel versions had a speedup.

Benchmark codes:

#include "VectorizedKernel.h"

constexpr int n = 20000000;

float Q_rsqrt( float number )

{

int i;

float x2, y;

const float threehalfs = 1.5F;

x2 = number * 0.5F;

y = number;

i = * ( int * ) &y;

i = 0x5f3759df - ( i >> 1 );

y = * ( float * ) &i;

y = y * ( threehalfs - ( x2 * y * y ) );

return y;

}

void Q_rsqrt_simd(std::vector<float> & input, std::vector<float> & output)

{

constexpr int simd = 16;

auto kernel = Vectorization::createKernel<simd>([&]

(const auto & factory, auto & idThread, float * bufIn, float * bufOut)

{

auto i = factory.template generate<unsigned int>();

auto x2= factory.template generate<float>();

auto y= factory.template generate<float>();

const auto threehalfs = factory.template generate<float>(1.5f);

auto threehalfsMinusX2 = factory.template generate<float>();

auto number = factory.template generate<float>();

auto iRightShifted = factory.template generate<unsigned int>();

auto iRightShiftedAndSubtractedFromMagicNumber =

factory.template generate<unsigned int>();

auto magicNumber = factory.template generate<unsigned int>(0x5f3759df);

for(int k = 0; k<n/simd; k++)

{

number.readFrom(bufIn,idThread);

number.mul(0.5f,x2);

y=number; y.readFrom(number);

y.template castBitwiseAndCopyTo<unsigned int>(i);

i.rightShift(1,iRightShifted);

magicNumber.sub(iRightShifted,i);

i.template castBitwiseAndCopyTo<float>(y);

x2.mul(y,x2);

x2.mul(y,x2);

threehalfs.sub(x2,threehalfsMinusX2);

y.mul(threehalfsMinusX2,y);

y.writeTo(bufOut,idThread);

idThread.add(simd,idThread);

}

},Vectorization::KernelArgs<float*,float*>{});

kernel.run(simd,input.data(),output.data());

}

void rsqrt_simd(std::vector<float> & input, std::vector<float> & output)

{

constexpr int simd = 16;

auto kernel = Vectorization::createKernel<simd>([&]

(const auto & factory, auto & idThread, float * bufIn, float * bufOut)

{

auto y= factory.template generate<float>();

const auto one= factory.template generate<float>(1.0f);

for(int k = 0; k<n; k+=simd)

{

y.readFrom(bufIn+k);

y.sqrt(y);

one.div(y,y);

y.writeTo(bufOut+k);

}

},Vectorization::KernelArgs<float*,float*>{});

kernel.run(simd,input.data(),output.data());

}

void rsqrt_simd2(std::vector<float> & input, std::vector<float> & output)

{

constexpr int simd = 16;

auto kernel = Vectorization::createKernel<simd>([&]

(const auto & factory, auto & idThread, float * bufIn, float * bufOut)

{

auto y= factory.template generate<float>();

for(int k = 0; k<n; k+=simd)

{

y.readFrom(bufIn+k);

y.rsqrt(y);

y.writeTo(bufOut+k);

}

},Vectorization::KernelArgs<float*,float*>{});

kernel.run(simd,input.data(),output.data());

}

int main() {

std::vector<float> input(n),output(n);

for(int i = 0; i<n; i++)

input[i]=1+i;

std::cout<<" ---------------------- 1.0f/std::sqrt ----------------------------- "

<<std::endl;

for(int k=0;k<20;k++)

{

{

Vectorization::Bench bench(0);

for(int i = 0; i<n; i++)

{

output[i]=1.0f/std::sqrt(input[i]);

}

}

std::cout<<output[0]<<" "<<output[1]<<" "<<output[2]<<" "<<output[3]<<"

"<<output[5]<<" "<<std::endl;

}

std::cout<<" ---------------------- Q_rsqrt ----------------------------- "

<<std::endl;

for(int k=0;k<10;k++)

{

{

Vectorization::Bench bench(0);

for(int i = 0; i<n; i++)

{

output[i]=Q_rsqrt(input[i]);

}

}

std::cout<<output[0]<<" "<<output[1]<<" "<<output[2]<<" "<<output[3]<<" "

<<output[5]<<" "<<std::endl;

}

std::cout<<" ---------------------- Q_rsqrt simd ----------------------------- "

<<std::endl;

for(int k=0;k<10;k++)

{

{

Vectorization::Bench bench(0);

Q_rsqrt_simd(input,output);

}