With this program, you can prepare a logo detection dataset, train an artificial neural network on that dataset, and finally test the trained network.

Introduction

In this article, I present my program (Logo Recognition System) and explain how it works by walking through the code step by step.

Note: To follow the article, you should have general knowledge of the basics of deep learning, such as the components of an artificial neural network (for example, layers and activation functions). You should also have advanced C# knowledge.

Before we get started, we need to install the required libraries. The following libraries are required to get our program working:

- Tensorflow.net and Tensorflow.Keras

Using the Package Manager Console, enter the following commands:

    NuGet\Install-Package TensorFlow.NET -Version 0.100.2
    NuGet\Install-Package TensorFlow.Keras -Version 0.10.2
    NuGet\Install-Package SciSharp.TensorFlow.Redist -Version 2.10.0
    NuGet\Install-Package SciSharp.TensorFlow.Redist-Windows-GPU -Version 2.10.0

- Emgu CV

Using the Package Manager Console, enter the following commands:

    NuGet\Install-Package EmguCV -Version 3.1.0.1
    NuGet\Install-Package Emgu.CV.runtime.windows -Version 4.6.0.5131
    NuGet\Install-Package Emgu.CV.Bitmap -Version 4.6.0.5131

- ScottPlot.WinForms

Using the Package Manager Console, enter the following command:

NuGet\Install-Package ScottPlot.WinForms -Version 4.1.60

- Newtonsoft.Json

Using the Package Manager Console, enter the following command:

NuGet\Install-Package Newtonsoft.Json -Version 13.0.2

Note: After installing the required libraries, we also need to change the target build platform from Any CPU to 64-bit, as follows:

Project->Properties->Build->General->Platform Target-> x64

Part 1: Create Our Own Convolution Neural Network Model

Since the convolution layer is specialized in image recognition and we mainly want to classify images, we will use convolution layers in our network. To speed up the learning process, we will also use the "maxpooling" layer. To reduce overfitting and keep the network from becoming overly dependent on a particular object property, we will use the "dropout" layer. As the final step in our CNN, we will use the "Dense" layer, which is a common classifier for neural networks. In this layer, every node is connected to every node in the previous layer. As activation functions, we will use the ReLU function in the hidden layers to speed up the training and the softmax function in the output layer, because we will have more than two outputs.
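To make the two activation functions concrete, here is a minimal sketch in plain C#. It is not part of the program and does not use TensorFlow.NET; the class name `ActivationSketch` is hypothetical and only serves the illustration.

```csharp
using System;
using System.Linq;

// Illustration only: hypothetical helper, not part of the Logo Recognition System.
public static class ActivationSketch
{
    // ReLU passes positive values through and clamps negative values to zero.
    public static double Relu(double x) => Math.Max(0.0, x);

    // Softmax turns raw scores into probabilities that sum to 1.
    // Subtracting the maximum first keeps Math.Exp from overflowing.
    public static double[] Softmax(double[] scores)
    {
        double max = scores.Max();
        double[] exps = scores.Select(s => Math.Exp(s - max)).ToArray();
        double sum = exps.Sum();
        return exps.Select(e => e / sum).ToArray();
    }
}
```

Calling `ActivationSketch.Softmax(new[] { 2.0, 1.0, 0.1 })` gives three probabilities in the same order as the scores, with the largest score receiving the largest probability. This is why softmax suits an output layer with one neuron per logo class.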

Step by Step Network Structure Implementation

- The network input is a 32 x 32 image with 3 RGB channels, so the input shape is 32 x 32 x 3

- Two convolutional layers with 32 filters each and a filter size of 3x3

Note 1: To avoid reducing the layer output size, we can set the padding property to "same".

- Max-pooling layer with a pool size of 2x2

- Dropout layer with a rate of 0.25

Note 2: This layer takes a value between 0 and 1 as its parameter. For example, 0.25 means that during training a fraction (25%) of the neurons in that layer, chosen at random, is switched off ("dropped out") and is not used in the next calculation step.

- We repeat the convolution, max-pooling, and dropout steps two more times, doubling the number of filters in the convolutional layers each time

- Dense layer with 256 neurons

- Dense layer with 128 neurons

- As output, we also use a dense layer, with softmax as the activation function:

    // Namespaces assumed for TensorFlow.NET 0.100.x / TensorFlow.Keras 0.10.x.
    using Tensorflow.Keras;
    using Tensorflow.Keras.Engine;
    using Tensorflow.Keras.Layers;
    using static Tensorflow.KerasApi;

    public class CNN
    {
        // Three Conv-Conv-MaxPool-Dropout blocks, followed by the dense classifier.
        ILayer Conv2D_1_;
        ILayer Conv2D_2_;
        ILayer MaxPooling2D_1_;
        ILayer Dropout_1_;
        ILayer Conv2D_3_;
        ILayer Conv2D_4_;
        ILayer MaxPooling2D_2_;
        ILayer Dropout_2_;
        ILayer Conv2D_5_;
        ILayer Conv2D_6_;
        ILayer MaxPooling2D_3_;
        ILayer Dropout_3_;
        ILayer Flatten;
        ILayer Dense_1_;
        ILayer Dense_2_;
        ILayer Dense_3_;
        int outputCount;

        public CNN(int outputCount)
        {
            // Remember the number of classes (the field was never assigned before).
            this.outputCount = outputCount;
            var layers = new LayersApi();
            // The filter count doubles from block to block: 32, 64, 128.
            Conv2D_1_ = layers.Conv2D(32, (3, 3), activation: "relu", padding: "same");
            Conv2D_2_ = layers.Conv2D(32, (3, 3), activation: "relu", padding: "same");
            Conv2D_3_ = layers.Conv2D(64, (3, 3), activation: "relu", padding: "same");
            Conv2D_4_ = layers.Conv2D(64, (3, 3), activation: "relu", padding: "same");
            Conv2D_5_ = layers.Conv2D(128, (3, 3), activation: "relu", padding: "same");
            Conv2D_6_ = layers.Conv2D(128, (3, 3), activation: "relu", padding: "same");
            MaxPooling2D_1_ = layers.MaxPooling2D(pool_size: (2, 2));
            MaxPooling2D_2_ = layers.MaxPooling2D(pool_size: (2, 2));
            MaxPooling2D_3_ = layers.MaxPooling2D(pool_size: (2, 2));
            Dropout_1_ = layers.Dropout(0.25f);
            Dropout_2_ = layers.Dropout(0.25f);
            Dropout_3_ = layers.Dropout(0.25f);
            Flatten = layers.Flatten();
            Dense_1_ = layers.Dense(256, activation: "relu");
            Dense_2_ = layers.Dense(128, activation: "relu");
            // One output neuron per label; softmax yields class probabilities.
            Dense_3_ = layers.Dense(outputCount, activation: "softmax");
        }

        public Model Build(string name)
        {
            var inputs = keras.Input(shape: (32, 32, 3), name: "img_" + name);
            // Block 1
            var x = Conv2D_1_.Apply(inputs);
            x = Conv2D_2_.Apply(x);
            x = MaxPooling2D_1_.Apply(x);
            x = Dropout_1_.Apply(x);
            // Block 2
            x = Conv2D_3_.Apply(x);
            x = Conv2D_4_.Apply(x);
            x = MaxPooling2D_2_.Apply(x);
            x = Dropout_2_.Apply(x);
            // Block 3
            x = Conv2D_5_.Apply(x);
            x = Conv2D_6_.Apply(x);
            x = MaxPooling2D_3_.Apply(x);
            x = Dropout_3_.Apply(x);
            // Classifier
            x = Flatten.Apply(x);
            x = Dense_1_.Apply(x);
            x = Dense_2_.Apply(x);
            return keras.Model(inputs, Dense_3_.Apply(x), name: name);
        }
    }

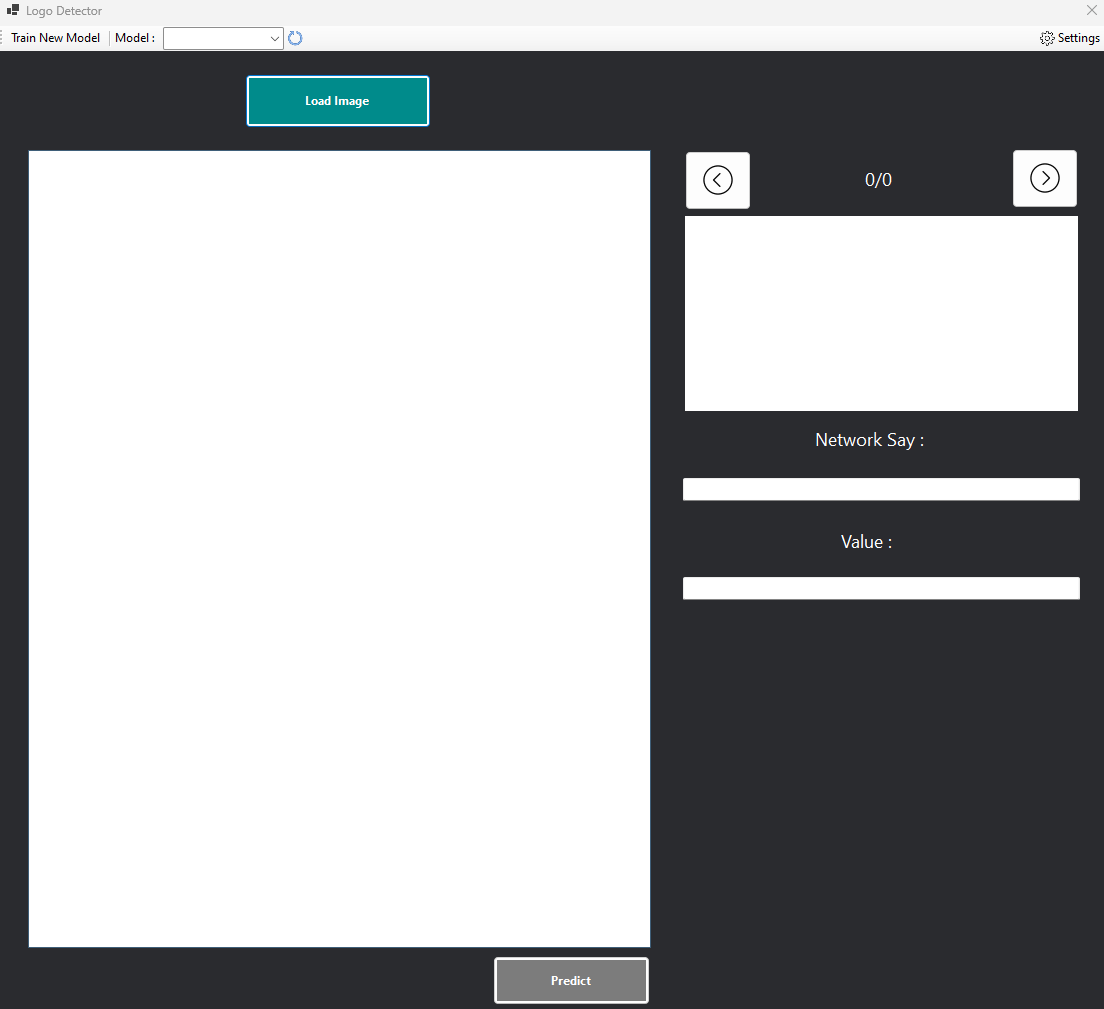
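As a rough check of the structure above, the following sketch traces how the feature-map size changes from block to block. It is plain C# and does not call TensorFlow.NET. It assumes that "same" padding leaves height and width unchanged and that the 2x2 pooling uses a stride equal to the pool size (the Keras default). The class name `ShapeTraceSketch` is hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Illustration only: hypothetical helper, not part of the Logo Recognition System.
public static class ShapeTraceSketch
{
    // Returns the (height, width, channels) after each Conv-Conv-MaxPool block.
    public static List<(int H, int W, int C)> Trace(int size, int[] filtersPerBlock)
    {
        var shapes = new List<(int H, int W, int C)>();
        int h = size, w = size;
        foreach (int filters in filtersPerBlock)
        {
            // Assumption: "same" padding keeps h and w through both convolutions,
            // and 2x2 max pooling with stride 2 halves them.
            h /= 2;
            w /= 2;
            shapes.Add((h, w, filters));
        }
        return shapes;
    }

    // Number of values handed to the first Dense layer after Flatten.
    public static int FlattenedSize((int H, int W, int C) shape) =>
        shape.H * shape.W * shape.C;
}
```

For a 32 x 32 input and filter counts 32, 64, and 128, `Trace(32, new[] { 32, 64, 128 })` yields 16 x 16 x 32, 8 x 8 x 64, and 4 x 4 x 128, so under these assumptions the Flatten layer passes 4 * 4 * 128 = 2048 values to the first dense layer.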

Part 2: The Program

To fully understand how to use the program, we need to know how it works in general. The program contains three parts: in the first, we prepare our own dataset; in the second, the training process, we train our own model; and in the last, we test our model on realistic objects.

First Part "Prepare Our Own Data Set"

For this part, I have developed a Snipping Image Tool with which we can do two things:

- The first is to select an object manually. The extracted object is then either exported as a new output, in which case a new label is added to the Ideal Set combo box, or assigned to an existing label in the Ideal Set combo box. The code below handles both cases, and its steps are described after it:

    // Ask whether the selection represents a new label or an existing one.
    DialogResult res = CustomMsgbox.InputBox.ShowDialog(
        "represent the Image a new Dataset ?", "Question",
        CustomMsgbox.InputBox.Icon.Question,
        CustomMsgbox.InputBox.Buttons.YesNo);
    if (res == System.Windows.Forms.DialogResult.Yes)
    {
        // New label: let the user type the ideal set value.
        res = CustomMsgbox.InputBox.ShowDialog(
            "Please Enter the Ideal set value !!", "Ideal set",
            CustomMsgbox.InputBox.Icon.Question,
            CustomMsgbox.InputBox.Buttons.OkCancel,
            CustomMsgbox.InputBox.Type.TextBox,
            new string[] { "Ideal set new Value" });
        if (res == System.Windows.Forms.DialogResult.OK)
        {
            // Cut the selected rectangle out of the displayed image.
            Bitmap image = ImageHelper.CutImage(rect, new Bitmap(this.docu_pb.Image));
            // Apply the color filter chosen in the settings.
            switch (Central_Static_Value.Settings.Filter)
            {
                case "Colored": image = ImageHelper.BGRFiler(image); break;
                case "Gray": image = ImageHelper.GrayFilter(image); break;
                case "BlackWeight": image = ImageHelper.BlackWhiteFilter(image); break;
                default: image = ImageHelper.BGRFiler(image); break;
            }
            string InputBox_ResultValue = CustomMsgbox.InputBox.ResultValue;
            int match = this.AssignIdentity(InputBox_ResultValue);
            string imageName = "image" +
                Central_Static_Value.Train_Model.to_Train_Images_dataGridView.Rows.Count.ToString();
            Central_Static_Value.Train_Model.to_Train_Images_dataGridView.Rows.Add(
                imageName, InputBox_ResultValue, ImageHelper.ResizeImage(32, 32, image));
            // The last argument marks the sample as belonging to a new label.
            TrainingSet trainingSet = new TrainingSet(
                imageName, match, ImageHelper.ResizeImage(32, 32, image), true);
            Central_Static_Value.Train_Model.TrainingSetList.Add(trainingSet);
            refrech_ideal_set_comboBox();
        }
    }
    else
    {
        // Existing label: let the user pick one of the known ideal set values.
        res = CustomMsgbox.InputBox.ShowDialog(
            "Please choose the Ideal set value !!", "Ideal set",
            CustomMsgbox.InputBox.Icon.Question,
            CustomMsgbox.InputBox.Buttons.OkCancel,
            CustomMsgbox.InputBox.Type.ComboBox,
            Central_Static_Value.Train_Model.neuron2identity.Select(x => x.Value).ToArray());
        if (res == DialogResult.OK)
        {
            string result = CustomMsgbox.InputBox.ResultValue;
            Bitmap image = ImageHelper.CutImage(rect, new Bitmap(this.docu_pb.Image));
            string imageName = "image" +
                Central_Static_Value.Train_Model.to_Train_Images_dataGridView.Rows.Count.ToString();
            int match = AssignIdentity(result);
            switch (Central_Static_Value.Settings.Filter)
            {
                case "Colored": image = ImageHelper.BGRFiler(image); break;
                case "Gray": image = ImageHelper.GrayFilter(image); break;
                case "BlackWeight": image = ImageHelper.BlackWhiteFilter(image); break;
                default: image = ImageHelper.BGRFiler(image); break;
            }
            Central_Static_Value.Train_Model.to_Train_Images_dataGridView.Rows.Add(
                imageName, result, ImageHelper.ResizeImage(32, 32, image));
            TrainingSet trainingSet = new TrainingSet(
                imageName, match, ImageHelper.ResizeImage(32, 32, image), false);
            Central_Static_Value.Train_Model.TrainingSetList.Add(trainingSet);
        }
    }

- After we select a new object with the mouse, the program asks whether the selected object already exists in the dataset.

- If the selected object is new, that is, it does not exist in the dataset yet, we have to enter the new ideal set value. Otherwise, we have to choose one of the available ideal set values.

- After we have entered a new ideal set value, it is saved into a dictionary in which the key is the current output count and the value is the actual ideal set value. The output count variable is then incremented by one. All of this happens only if the ideal set value is new. In both cases, the TrainingSetList is updated by adding the new training sample.
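The article does not show the body of `AssignIdentity`, so the following is only a sketch of how the dictionary bookkeeping described in the last step could look. The class `LabelRegistrySketch` and its members are hypothetical; only the dictionary layout (key: output index, value: ideal set value) is taken from the description.

```csharp
using System;
using System.Collections.Generic;

// Illustration only: a guess at the bookkeeping, not the program's actual code.
public class LabelRegistrySketch
{
    // Key: output neuron index. Value: label (the "ideal set value").
    public Dictionary<int, string> Neuron2Identity { get; } =
        new Dictionary<int, string>();

    // Number of distinct labels registered so far.
    public int OutputCount { get; private set; }

    // Returns the output index for a label and registers the label if it is new.
    public int AssignIdentity(string label)
    {
        foreach (KeyValuePair<int, string> pair in Neuron2Identity)
        {
            if (pair.Value == label)
            {
                return pair.Key; // Existing label: reuse its index.
            }
        }

        int index = OutputCount;        // New label: use the current output count as key,
        Neuron2Identity[index] = label; // store the label under it,
        OutputCount++;                  // and increment the output count by one.
        return index;
    }
}
```

With this sketch, registering "A", then "B", then "A" again returns 0, 1, and 0, and `OutputCount` ends at 2, which is the number of output neurons the network would need.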

- The second is to select objects automatically, using the Selective Search algorithm. In this process, the extracted object can only be assigned to an existing label in the Ideal Set combo box of the Snip Image form. After selective search has selected an object automatically, we take the ideal set value from ideal_set_cb and save the object as a new training sample with that label.

The code:

    string imageName = Image_name_tb.Text;
    string selecteditem = ideal_set_cb.SelectedItem.ToString();
    int match = AssignIdentity(selecteditem);
    Central_Static_Value.Train_Model.to_Train_Images_dataGridView.Rows.Add(
        imageName, selecteditem,
        ImageHelper.ResizeImage(32, 32, new Bitmap(sniped_img_pictureBox.Image)));
    TrainingSet trainingSet = new TrainingSet(
        imageName, match,
        ImageHelper.ResizeImage(32, 32, new Bitmap(sniped_img_pictureBox.Image)), false);
    Central_Static_Value.Train_Model.TrainingSetList.Add(trainingSet);

Second Part "Training Process"

After the dataset is prepared, the training process can be started. The complete training code comes first, followed by an explanation of its steps:

    // Convert the training images and their labels into ND arrays.
    NDArray x_train = ImageHelper.ImagesToNDArray(this.TrainingSetList, 32, 32);
    NDArray y_train = ImageHelper.IdelaValuesToNDArray(this.TrainingSetList, this.outputCount);
    // Convert to float and scale the pixel values to the range 0..1.
    x_train = x_train.astype(np.float32);
    x_train /= 255;
    Model = new CNN(outputCount).Build("LogoDetector");
    var opt = new Tensorflow.Keras.Optimizers.Adam(learning_rate: 0.001f);
    Model.compile(optimizer: opt,
        loss: keras.losses.CategoricalCrossentropy(),
        metrics: new[] { "acc" });
    // Call fit once for each epoch requested in the UI.
    for (int i = 0; i < (int)Epoches_numericUpDown.Value; i++)
    {
        Model.fit(x_train, y_train);
    }

- We already know that our network accepts an input of size 32x32, so during dataset preparation we first resize each image to 32x32. In the training process, we then convert the images into an integer ND array. A single converted image gives a 1x32x32x3 array, where the leading 1 is the image index and the trailing 3 means that each pixel has 3 color values. With more than one training image, we need an ND array of shape (image count x 32 x 32 x 3). The code:

    public static NDArray ImagesToNDArray(List<TrainingSet> bitmaps,
        int width, int height)
    {
        int[,,,] imagespixels = new int[bitmaps.Count, width, height, 3];
        for (int bi = 0; bi < bitmaps.Count; bi++)
        {
            Bitmap bitmap = bitmaps[bi].Image;
            int[,,] pixels = getPixels(bitmap);
            for (int x = 0; x < width; x++)
            {
                for (int y = 0; y < height; y++)
                {
                    for (int z = 0; z < 3; z++)
                    {
                        imagespixels[bi, x, y, z] = pixels[x, y, z];
                    }
                }
            }
        }
        return np.array(imagespixels);
    }

- After converting the images into an ND array, we also need to convert the ideal dataset values (the match values) into a 2D ND array. The first dimension is the index of the corresponding image, and the second dimension holds the match value, or ideal output, in one-hot form: a 1 at the position of the matching output and 0 everywhere else. The code:

    public static NDArray IdelaValuesToNDArray(List<TrainingSet> images, int outputCount)
    {
        int[,] idealvalues = new int[images.Count, outputCount];
        for (int i = 0; i < images.Count; i++)
        {
            for (int j = 0; j < outputCount; j++)
            {
                if (j == images[i].Match)
                    idealvalues[i, j] = 1;
                else
                    idealvalues[i, j] = 0;
            }
        }
        return np.array(idealvalues);
    }

- To improve the training result, we divide the dataset (our 4-dimensional ND array) by 255, which gives a matrix with values between 0 and 1.
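The training listing converts the array to float32 before dividing by 255. The sketch below shows the same scaling on a plain byte array. In plain C#, dividing two integers truncates (for example, `128 / 255` is `0`), so the division has to be done on floating-point values. `NormalizeSketch` is a hypothetical name, and the code is independent of the `NDArray` type used in the program.

```csharp
using System;

// Illustration only: hypothetical helper, not part of the Logo Recognition System.
public static class NormalizeSketch
{
    // Scales 8-bit channel values (0..255) to floats in the range 0..1.
    public static float[] ToUnitRange(byte[] channelValues)
    {
        if (channelValues == null)
        {
            throw new ArgumentNullException(nameof(channelValues));
        }

        var result = new float[channelValues.Length];
        for (int i = 0; i < channelValues.Length; i++)
        {
            // Dividing by the float literal 255f forces floating-point division.
            result[i] = channelValues[i] / 255f;
        }
        return result;
    }
}
```

A black channel value (0) stays 0, a fully saturated value (255) becomes 1, and everything in between lands strictly between the two.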

- To speed up the learning process and reduce memory requirements, we use the Adam optimizer.

- After that, the learning process can be started. The final code is the training listing shown at the beginning of this part.

Third Part "Testing Process"

- The program first needs a selective search algorithm. This algorithm selects all objects in the image and prepares them for the next step, namely predicting what kind of object each one is.

- The predict process: In this step, the program tells us what kind of object is in the image. To do so, it converts the image into a 3-dimensional ND array, in which the first dimension is the width, the second the height, and the last the RGB color values. We then pass the array to our CNN, which processes it and finally tells us what kind of object it is.
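The article does not show how the network output is turned into a label. One common way, sketched here under that assumption, is to take the index of the largest softmax probability and look it up in the index-to-label dictionary built during dataset preparation. `PredictionSketch`, `ArgMax`, and `ToLabel` are hypothetical names, and the probabilities are passed in as a plain float array rather than a TensorFlow.NET tensor.

```csharp
using System;
using System.Collections.Generic;

// Illustration only: hypothetical helper, not part of the Logo Recognition System.
public static class PredictionSketch
{
    // Returns the index of the largest probability.
    public static int ArgMax(float[] probabilities)
    {
        if (probabilities == null || probabilities.Length == 0)
        {
            throw new ArgumentException("At least one probability is required.");
        }

        int best = 0;
        for (int i = 1; i < probabilities.Length; i++)
        {
            if (probabilities[i] > probabilities[best])
            {
                best = i;
            }
        }
        return best;
    }

    // Maps the winning output neuron to its label, or "unknown" if it has none.
    public static string ToLabel(float[] probabilities,
        IReadOnlyDictionary<int, string> neuron2identity)
    {
        int index = ArgMax(probabilities);
        return neuron2identity.TryGetValue(index, out string label) ? label : "unknown";
    }
}
```

For example, with the labels `{0: "A", 1: "B"}` and the output `{0.1f, 0.9f}`, `ToLabel` returns "B". The softmax output always names some class, even for an object the model has never seen.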

Note

If we try to predict images from outside the range of the dataset on which the model was trained, false predictions are possible. I would like to point out that the model must be trained on all objects that the selective search algorithm selects, because only then will the program return correct predictions.

In the following video, I will show you how to use the program:

History

- 19th February, 2023: Initial version

- 28th March, 2023: Article updated