The Cheetah Optimizer mimics the hunting strategies of cheetahs, which include searching, sitting-and-waiting, and attacking. This article walks through an implementation of the Cheetah Optimizer in Python.

Introduction

The Cheetah Optimizer has been implemented in MATLAB and is available on the MATLAB Central File Exchange. It is a metaheuristic algorithm intended for complex optimization problems across multiple domains.

Recently, while working on an ML project, I wanted to improve the performance of an SVM model. (To understand the basic concept of SVM, you can go through my introductory article, Understanding SVM, published in the DEV Community.) I was looking for a recent metaheuristic algorithm that could help, and I came across the Cheetah Optimizer on MATLAB Central. Since my project is in Python and no Python version was available, I developed one myself.

You can refer to my Medium article to understand the basic concepts behind metaheuristic algorithms.

In this article, I’ll demonstrate the Cheetah Optimizer implementation in Python. You can use any dataset of your choice. For the sake of simplicity, I have used a diabetes dataset.

Background

In optimization and machine learning, various optimization algorithms and techniques are used to find the best solution to a given problem. Common optimization algorithms include gradient descent, genetic algorithms, particle swarm optimization, and many others. These algorithms are applied to tasks such as training machine learning models, finding the optimal configuration of parameters, and solving complex optimization problems in various domains.

You can read more about Cheetah optimizer here.

Using the Code

Below is the stepwise implementation of Cheetah Optimizer in Python.

1. Import Required Libraries

import numpy as np

import pandas as pd

from sklearn.svm import SVC

from sklearn.metrics import accuracy_score

Many of you may already be familiar with the libraries imported above. For those who are not, here is a brief explanation.

import pandas as pd: This line imports the Pandas library and gives it the alias "pd." Pandas is a powerful data manipulation and analysis library in Python, often used for handling structured data in data science and machine learning tasks.

from sklearn.svm import SVC: Here, we are importing the SVC (Support Vector Classifier) class from the Scikit-Learn library. Scikit-Learn is a popular Python library for machine learning and provides various tools for tasks like classification, regression, clustering, etc. SVC is a class that allows you to create and train Support Vector Machine (SVM) models for classification.

import numpy as np: This line imports the NumPy library and gives it the alias "np." NumPy is another fundamental library for numerical and mathematical operations in Python. It provides support for handling arrays and matrices, which are often used in machine learning for data manipulation and mathematical operations.

from sklearn.metrics import accuracy_score: This line imports the accuracy_score function from Scikit-Learn's metrics module. accuracy_score is used to calculate the accuracy of a classification model's predictions by comparing them to the true labels. It's a common metric for evaluating the performance of classification models.
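As a rough illustration of what accuracy_score measures, the fraction of matching predictions can be computed by hand with NumPy. The labels below are made-up values for the example, not taken from the diabetes dataset.

```python
import numpy as np

# Hypothetical labels, for illustration only
y_true = np.array([1, 0, 1, 1])
y_pred = np.array([1, 0, 0, 1])

# Accuracy is the share of predictions that match the true labels
manual_accuracy = float(np.mean(y_true == y_pred))
print(manual_accuracy)
```

Three of the four predictions match, so the accuracy here is three quarters.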

2. Read Dataset

data = pd.read_csv("diabetesdataset.csv")

X = data.iloc[:, 2:-1].values

y = data.iloc[:, 1].values

X = data.iloc[:, 2:-1].values: This line extracts the features from the DataFrame data and stores them in a NumPy array called X. It uses .iloc to select columns by position: all rows (denoted by :) and the columns from the 3rd column (index 2) up to, but not including, the last column (denoted by -1). These selected columns are the features for the machine learning model.

y = data.iloc[:, 1].values: This line extracts the labels from the DataFrame data and stores them in a NumPy array called y. It selects all rows (denoted by :) from the 2nd column (index 1) of the DataFrame. These selected values are considered as the labels or target variable for the machine learning model.
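Since .iloc follows the same positional, end-exclusive slicing rules as NumPy, the two selections can be tried on a small array. The table below is hypothetical and only mimics a layout with an ID column, a label column, two feature columns, and a trailing column.

```python
import numpy as np

# Hypothetical table: columns are [id, label, feature_a, feature_b, extra]
table = np.array([
    [101, 0, 5.1, 3.5, 9],
    [102, 1, 6.2, 2.9, 9],
    [103, 0, 4.7, 3.2, 9],
])

X_demo = table[:, 2:-1]  # all rows, columns 2 and 3 (last column excluded)
y_demo = table[:, 1]     # all rows, column 1 only

print(X_demo.shape)
print(y_demo.shape)
```

The feature slice keeps two columns per row, and the label slice is a one-dimensional array with one value per row.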

3. Set Target Variable

y = data['CLASS']

In a typical machine learning task, we have a DataFrame with multiple columns, where one column represents the target variable (the thing we want to predict) and the others represent features (the attributes used to make predictions). Here, y is set to the "CLASS" column, replacing the value assigned to y in step 2. It is the target variable used when training and evaluating the model (in our case, an SVC).

4. Split Data

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

from sklearn.model_selection import train_test_split: This line imports the train_test_split function from Scikit-Learn's model_selection module. This function is commonly used to split a dataset into training and testing sets to assess the performance of machine learning models.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42): This line splits the data into training and testing subsets. It takes two arrays as input: X, which contains the feature data, and y, which holds the target variable.

The train_test_split function returns four subsets:

X_train: This is the subset of features used for training the machine learning model.

X_test: This is the subset of features used for testing the machine learning model.

y_train: This is the subset of labels corresponding to X_train and is used for training the model.

y_test: This is the subset of labels corresponding to X_test and is used for evaluating the model's performance.

test_size=0.2: This specifies that 20% of the data will be reserved for testing, and the remaining 80% will be used for training.

random_state=42: This sets a random seed for reproducibility. Setting random_state ensures that the same random split is generated each time you run the code. You can use any integer value for random_state.
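To make the roles of test_size and the random seed concrete, here is a minimal NumPy sketch of a seeded shuffle split. It is not scikit-learn's implementation, and simple_split is a hypothetical helper written only for this illustration.

```python
import numpy as np

def simple_split(n_samples, test_size=0.2, seed=42):
    """Return (train_idx, test_idx) from a seeded shuffle. Illustrative only."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_size))
    # The first n_test shuffled indices form the test set, the rest the training set
    return shuffled[n_test:], shuffled[:n_test]

train_idx, test_idx = simple_split(10)
train_again, test_again = simple_split(10)

print(len(train_idx), len(test_idx))
print(np.array_equal(test_idx, test_again))
```

With ten samples, two indices land in the test set and eight in the training set, and calling the helper again with the same seed reproduces the same split.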

5. Define Objective Function

def objective_function(params):

    # Expect exactly two hyperparameters: C and gamma
    if len(params) != 2:

        raise ValueError("params must contain two values: C and gamma")

    C, gamma = params

    model = SVC(C=C, gamma=gamma)

    model.fit(X_train, y_train)

    y_pred = model.predict(X_test)

    score = accuracy_score(y_test, y_pred)

    # Negate the accuracy so that a lower return value means a better model
    return -score

This objective function drives the hyperparameter tuning. In our case, we want to optimize the hyperparameters C and gamma of an SVM model. The function returns the negated accuracy, so the goal is to find the values of C and gamma with the lowest return value, which is equivalent to the highest accuracy on the test data.
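The sign convention is easy to get backwards, so a tiny check may help. The accuracies below are invented for three hypothetical (C, gamma) candidates; the point is only that the candidate with the lowest negated accuracy is the same one with the highest accuracy.

```python
import numpy as np

# Hypothetical accuracies for three candidate (C, gamma) pairs
accuracies = np.array([0.70, 0.90, 0.80])

# Same convention as objective_function: return the negated accuracy
objective_values = -accuracies

best_by_accuracy = int(np.argmax(accuracies))
best_by_objective = int(np.argmin(objective_values))
print(best_by_accuracy, best_by_objective)
```

Both indices point at the second candidate, which is why a search over this objective should look for the minimum rather than the maximum.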

6. Initialize Cheetah

bounds = np.array([[0.01, 100], [0.0001, 10]])

n_cheetahs = 30

n_iterations = 50

cheetahs = np.random.uniform(bounds[:, 0], bounds[:, 1], size=(n_cheetahs, len(bounds)))

best_position = None

best_score = -np.inf

cheetahs is a NumPy array of shape (n_cheetahs, len(bounds)). It initializes the positions of the "cheetahs" randomly within the specified parameter bounds.

np.random.uniform(bounds[:, 0], bounds[:, 1], size=(n_cheetahs, len(bounds))) generates random values for each cheetah's position within the specified bounds. Each row in the array corresponds to a cheetah, and each column corresponds to a parameter.

best_position is initially set to None, indicating that the best position found so far is unknown.

best_score starts at negative infinity (-np.inf) as a placeholder meaning that no score has been recorded yet. It is overwritten during the first iteration of the optimization loop, as soon as the population has been evaluated.
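The per-parameter bounds work because np.random.uniform broadcasts the lower and upper bound vectors across the rows of the requested shape. A small sketch, assuming a fixed seed (chosen here only for repeatability) and a population of five instead of thirty:

```python
import numpy as np

np.random.seed(0)  # hypothetical seed, only to make the demo repeatable

demo_bounds = np.array([[0.01, 100], [0.0001, 10]])  # rows: C, gamma
population = np.random.uniform(
    demo_bounds[:, 0], demo_bounds[:, 1], size=(5, len(demo_bounds))
)

print(population.shape)
print(population[:, 0].min() >= 0.01, population[:, 1].max() <= 10)
```

Column 0 holds candidate values for C and column 1 holds candidate values for gamma, each drawn from its own range.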

7. Set Constants

alpha = 0.5

beta = 0.5

delta = 0.5

8. Execute Optimization Function

for i in range(n_iterations):

    # objective_function returns the negated accuracy, so lower scores are better
    scores = np.array([objective_function(cheetah) for cheetah in cheetahs])

This loop repeatedly evaluates the objective function for each "cheetah" in the optimization process for a specified number of iterations (n_iterations). The scores obtained for each "cheetah" at each iteration will be used to guide the optimization algorithm in updating the positions of the "cheetahs" and potentially finding a better solution as the optimization progresses.

    best_idx = np.argmin(scores)

    if best_position is None or scores[best_idx] < best_score:

        best_score = scores[best_idx]

        best_position = cheetahs[best_idx].copy()

Because objective_function returns the negated accuracy, the best cheetah in an iteration is the one with the lowest score. This block finds it with np.argmin and, if nothing has been recorded yet or the new score beats the best score so far, updates best_score and best_position. Taking the maximum instead would select the least accurate cheetah.

    print(f'Iteration {i+1}: Best score = {-best_score}')

    new_cheetahs = np.zeros_like(cheetahs)

    for j in range(n_cheetahs):

        r = np.random.rand()

        if r < alpha:

            s = np.random.rand()

            if s < 0.5:

                f = np.random.choice([np.sin, np.cos, np.tan])

                # Lowest score = highest accuracy, because the objective is negated
                leader = cheetahs[np.argmin(scores)]

                new_cheetahs[j] = leader + f((i+1) / n_iterations) * (leader - cheetahs[j])

            else:

                new_cheetahs[j] = cheetahs[j] + np.random.uniform(-0.01, 0.01, size=len(bounds))

        elif r < alpha + beta:

            new_cheetahs[j] = cheetahs[j] + np.random.rand() * (best_position - cheetahs[j])

        else:

            new_cheetahs[j] = cheetahs[0] + np.random.uniform(-delta, delta, size=len(bounds))

Select a Strategy Based on Random Probability (alpha and beta): For each cheetah, the code generates a random number r between 0 and 1. Depending on the value of r, it selects one of three strategies:

Searching or Sitting-and-Waiting Strategy: If r < alpha, it generates a second random number s between 0 and 1. If s < 0.5, it chooses the searching strategy and computes the new position from the leader's position, offset by the distance between the leader and the cheetah, scaled by a randomly chosen trigonometric function (sin, cos, or tan) of the iteration progress. Otherwise, it chooses the sitting-and-waiting strategy and moves the cheetah by only a tiny random amount (at most 0.01 in each dimension).

Attacking Strategy: If alpha <= r < alpha + beta, it chooses the attacking strategy and moves the cheetah a random fraction of the way towards the best position found so far (best_position).

Leaving-the-Prey-and-Going-Back-Home Strategy: If r >= alpha + beta, it chooses the strategy of leaving the prey and going back home. It resets the position to that of the first cheetah in the current population (cheetahs[0]) plus a random offset of up to delta in each dimension. Note that with alpha = 0.5 and beta = 0.5, alpha + beta equals 1, and np.random.rand() always returns a value below 1, so this branch is never reached unless one of the constants is lowered.
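The branch structure described above can be isolated in a few lines. pick_strategy is a hypothetical helper written for this illustration; it only reports which branch a given pair of random numbers would take.

```python
def pick_strategy(r, s, alpha=0.5, beta=0.5):
    """Mirror the branch structure of the update loop (names are illustrative)."""
    if r < alpha:
        return "search" if s < 0.5 else "sit_and_wait"
    elif r < alpha + beta:
        return "attack"
    return "leave_prey"

print(pick_strategy(0.2, 0.1))
print(pick_strategy(0.2, 0.9))
print(pick_strategy(0.7, 0.0))
print(pick_strategy(0.999, 0.0))                     # still below alpha + beta = 1.0
print(pick_strategy(0.9, 0.0, alpha=0.4, beta=0.4))  # now the last branch is reachable
```

With the default constants, every r below 1 falls into one of the first two branches; lowering alpha or beta so that they sum to less than 1 is one way to make the third strategy take part in the search.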

        new_cheetahs[j] = np.clip(new_cheetahs[j], bounds[:, 0], bounds[:, 1])

After updating the positions, it clips the positions to ensure they remain within the specified parameter bounds (bounds[:, 0] and bounds[:, 1]).
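A quick way to see the clipping at work is to pass in a position that violates both bounds. The candidate values below are made up for the example.

```python
import numpy as np

demo_bounds = np.array([[0.01, 100], [0.0001, 10]])  # rows: C, gamma
candidate = np.array([150.0, -0.5])  # hypothetical out-of-range position

# Each coordinate is clipped against its own lower and upper bound
clipped = np.clip(candidate, demo_bounds[:, 0], demo_bounds[:, 1])
print(clipped)
```

The first coordinate is pulled down to the upper bound for C, and the second is pulled up to the lower bound for gamma.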

    cheetahs = new_cheetahs

Finally, it replaces the old positions of the "cheetahs" with the newly calculated positions stored in the new_cheetahs array.
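The steps above can also be assembled into one function and tried on a toy problem. The sketch below minimizes a simple sphere function instead of training an SVM, so it runs in a fraction of a second. The names cheetah_minimize and sphere, the seed, and beta=0.3 (chosen so that all three strategies are reachable) are assumptions made for this illustration, not part of the original MATLAB code.

```python
import numpy as np

def cheetah_minimize(objective, bounds, n_cheetahs=20, n_iterations=40,
                     alpha=0.5, beta=0.3, delta=0.5, seed=0):
    """Minimize `objective` with the update rules described in this article.

    Illustrative sketch: beta=0.3 and the seed are assumptions made here so
    that every branch is reachable and the run is repeatable.
    """
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)
    low, high = bounds[:, 0], bounds[:, 1]
    cheetahs = rng.uniform(low, high, size=(n_cheetahs, len(bounds)))
    best_position, best_score = None, np.inf

    for i in range(n_iterations):
        scores = np.array([objective(c) for c in cheetahs])
        leader_idx = int(np.argmin(scores))  # lowest objective value is best
        if scores[leader_idx] < best_score:
            best_score = float(scores[leader_idx])
            best_position = cheetahs[leader_idx].copy()

        leader = cheetahs[leader_idx]
        new_cheetahs = np.zeros_like(cheetahs)
        for j in range(n_cheetahs):
            r = rng.random()
            if r < alpha:
                if rng.random() < 0.5:  # searching
                    f = [np.sin, np.cos, np.tan][rng.integers(3)]
                    step = f((i + 1) / n_iterations) * (leader - cheetahs[j])
                    new_cheetahs[j] = leader + step
                else:  # sitting and waiting: tiny random move
                    new_cheetahs[j] = cheetahs[j] + rng.uniform(-0.01, 0.01, size=len(bounds))
            elif r < alpha + beta:  # attacking: move toward the best-so-far
                new_cheetahs[j] = cheetahs[j] + rng.random() * (best_position - cheetahs[j])
            else:  # leaving the prey: restart near the first cheetah
                new_cheetahs[j] = cheetahs[0] + rng.uniform(-delta, delta, size=len(bounds))
            new_cheetahs[j] = np.clip(new_cheetahs[j], low, high)
        cheetahs = new_cheetahs

    return best_position, best_score

def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return float(np.sum(np.asarray(x) ** 2))

best_pos, best_val = cheetah_minimize(sphere, [[-5, 5], [-5, 5]])
print(best_pos, best_val)
```

Swapping sphere for objective_function and passing the C and gamma bounds would give the same search as the step-by-step code, at the cost of one SVM fit per cheetah per iteration.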

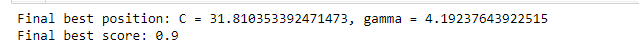

9. Finding Best Position and Score

print(f'Final best position: C = {best_position[0]}, gamma = {best_position[1]}')

print(f'Final best score: {-best_score}')

cValue = best_position[0]

gammaValue = best_position[1]


10. Training SVM Model

from sklearn.svm import SVC

model = SVC(C = cValue, gamma = gammaValue)

This line creates an instance of the SVC class and configures it with specific hyperparameters:

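C: The regularization parameter, cValue, controls the trade-off between a wide margin and a low classification error on the training data. A small C regularizes more strongly and tolerates some misclassified points in exchange for a wider margin, while a large C penalizes misclassification more heavily and yields a narrower margin.

gamma: The kernel coefficient, gammaValue, determines how far the influence of a single training example reaches. A small gamma gives a smoother, simpler decision boundary, while a large gamma lets the boundary bend closely around individual points, which can lead to overfitting.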
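The effect of gamma can be seen directly in the RBF kernel, which SVC uses by default: the similarity between two points is exp(-gamma * squared distance). The helper and the two points below are hypothetical and serve only to compare a small and a large gamma.

```python
import numpy as np

def rbf_kernel_value(x1, x2, gamma):
    """RBF similarity exp(-gamma * ||x1 - x2||^2) between two points."""
    diff = np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))

a, b = [0.0, 0.0], [1.0, 1.0]  # hypothetical points, squared distance 2
small_gamma = rbf_kernel_value(a, b, gamma=0.01)
large_gamma = rbf_kernel_value(a, b, gamma=10.0)
print(small_gamma, large_gamma)
```

With the small gamma the two points still look nearly identical to the kernel, while with the large gamma their similarity is close to zero, so each training point affects only its immediate neighborhood.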

model.fit(X_train, y_train)

This line trains (fits) the SVM model on the training data. The training data consists of feature vectors stored in X_train and their corresponding labels stored in y_train. The fit method adjusts the model's parameters to learn a decision boundary that best separates the classes in the training data.


11. Check for Model Accuracy

from sklearn.metrics import accuracy_score

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f"The accuracy of the model is {accuracy:.2f}")


Conclusion

We have now implemented the Cheetah Optimizer algorithm in Python. Using it, we searched for good values of the C and gamma parameters, which the SVM model needs in order to make accurate predictions.

References

You can learn more about Cheetah Optimizer through the below articles:

- The Cheetah Optimizer (CO) - https://optim-app.com/projects/co

- Cheetah Optimizer - Seyedali Mirjalili (2023). Cheetah Optimizer (https://www.mathworks.com/matlabcentral/fileexchange/130404-cheetah-optimizer), MATLAB Central File Exchange. Retrieved .

You can download the source code here.

History

- 30th September, 2023: Initial version