Introduction

Completing task Asynchronously in case of long running query

processing is very helpful in some scenario. It ensures maximum use of hardware

resources as well. In case of non set based modern high level programming

language such as C# or Java has versatile facilities, libraries and

patterns for asynchronous programming.

But what about SET based language such as SQL? There is no straight forward way

to execute SQL statements in parallel

mode. In SQL Server there some way to do such stuff like using SQL Server

Service Broker or through CLR stored procedure. Service Broker actually is a

process of sending and receiving messages which can be sent to same or any

remote database of another SQL Server instance. Whereas CLR needs different set

of programming expertise, it also has some deployment issue. Today I am going

to show you the same implementation using SQL Server Agent Job. In this article

you also came to know, how huge number of long running SQL statements will be

executed in some smaller configurable chunks.

Background

In my recent data ware

house project, client has a requirement to validate a table data against some

wide range of predefined rules. The table has more than 150 columns. Each

column's data need to be validated with these rules. Let us be familiar with

some of them.

|

SL#

|

Column

|

Rule

|

|

1

|

Customer

DOB

|

DOB

Must be a valid date

|

|

DOB

must be less than the purchase date.

|

|

The

date format should be MM-DD-YYYY

|

|

2

|

Credit

Card Number

|

Card

Number should not be an Empty value

|

|

Should

be a valid Card Number

|

|

Should

be a valid Card Type

|

|

Expiry

Date must be within a range of 1997 To 2020

|

Table 1: Rule against

column.

In addition, another

requirement is to keep the garbage data into another table for reporting

purpose so that client can view the garbage data and fix them. To accomplish

the job we have dedicated to created stored procedures for each rule. As a result

we had to created about 35 distinct stored procedures to validate the customer

table data. Client usually sends us customer data in text files and we bulk

load them into staging tables. But our main challenge was to validate the data

of all 150 columns by running on average 4 rules (4 stored procedures) against

each columns. Our customer table contains more than 3 million of records and

each validation rule stored procedure took considerable time to select the garbage

value and save them to a Error Log Table. In this situation running these

stored procedures in parallel mode and 10-12 procedures at a time (a chunk) was an splendid solution for us to get the

job done. Alright, no more talk, let us

jump to the actual implementation.

Metadata Management

As I spoke earlier that

we will use SQL Server Agent Job to run our stored procedure Asynchronously .So

in run time you will have very minimal control over the running stored procedures or

statements. In this situation we have used a metadata table that stores the

stored procedures execution status like when they started and

finished or any error message during execution period. The table creation

scripts would be:

CREATE TABLE AsyncProcessStatusLog

(

Id INT IDENTITY(1, 1) NOT NULL PRIMARY KEY,

StoredProcedureName VARCHAR(100) NOT NULL,

StartTime DATETIME NOT NULL,

EndTime DATETIME NULL,

ProcessStatus CHAR(1) NOT NULL

CHECK (([ProcessStatus]='F' OR [ProcessStatus]='S' OR [ProcessStatus]='R')),

ErrorMessage VARCHAR(2000) NULL

)

Process Status:

- "R" indicates the procedures is

running state.

- "F" indicates the procedure failed and

an exception occurred.

- "S" means the procedures successfully

executed.

After that we need to create a stored procedure named "InsertUpdate_AsyncProcessStatusLog_usp

" that will insert/update status into the "AsyncProcessStatusLog

" table.

CREATE PROCEDURE InsertUpdate_AsyncProcessStatusLog_usp

@StoredProcedureName VARCHAR(100),

@ProcessStatus CHAR(1),

@ErrorMessage VARCHAR(2000)

AS

BEGIN

DECLARE @now DATETIME = GETDATE()

IF NOT EXISTS (

SELECT 1

FROM AsyncProcessStatusLog

WHERE StoredProcedureName = @StoredProcedureName

)

INSERT INTO AsyncProcessStatusLog

(

StoredProcedureName,

StartTime,

EndTime,

ProcessStatus

)

VALUES

(

@StoredProcedureName,

@now,

NULL,

'R'

)

ELSE

UPDATE AsyncProcessStatusLog

SET EndTime = @now,

ProcessStatus = @ProcessStatus,

ErrorMessage = @ErrorMessage

WHERE StoredProcedureName = @StoredProcedureName

END

How to write The

Stored Procedures/Statements for parallel execution

Now let me prepare some sample stored procedures that will

be run in parallel. Some convention need to be followed when creating

them. Have a look below:

CREATE PROCEDURE CheckValidDate_usp

AS

BEGIN

BEGIN TRY

EXEC InsertUpdate_AsyncProcessStatusLog_usp @StoredProcedureName =

'CheckValidDate_usp',

@ProcessStatus = 'R',

@ErrorMessage = NULL

WAITFOR DELAY '00:00:10'

EXEC InsertUpdate_AsyncProcessStatusLog_usp @StoredProcedureName =

'CheckValidDate_usp',

@ProcessStatus = 'S',

@ErrorMessage = NULL

END TRY

BEGIN CATCH

DECLARE @ErrorMsg VARCHAR(2000) = ERROR_MESSAGE()

EXEC InsertUpdate_AsyncProcessStatusLog_usp @StoredProcedureName =

'CheckValidDate_usp',

@ProcessStatus = 'F',

@ErrorMessage = @ErrorMsg

END CATCH

END

Maintaining Stored Procedures execution status

by executing "InsertUpdate_AsyncProcessStatusLog_usp"

stored procedure.

Setting Initial Running Status (@ProcessStatus ='R') by the

top most statement.

Updating with Success Status (@ProcessStatus

='S') by the statement placed just above the END

TRY block.

Updating with Failed Status (@ProcessStatus = 'F') by the

statement inside CATCH block with additional parameter @ErrorMessage.

·

Business Logic Block:

This block usually contains main TSQL code to satisfy

the business logic. I have used WAITFOR DELAY '00:00:10' here to have an impression that the stored

procedure takes 10 seconds to finish for demo purpose. (In real life situation

this will be replaced by actual business TSQL logic.)

Sample Stored

Procedures:

I have written 7

stored procedures against each rule by following the convention mentioned above.

Please have a look on the attached TestStoredProcedures.sql file (After extracting AsyncSQLScripts.zip).

| SL#

| Rule

| Associated Stored Procedure

|

| 1

| DOB Must be a valid date

| CheckValidDate_usp

|

| 2

| DOB must be greater than the purchase date.

| CheckDateGreaterThanAnotherDate_usp

|

| 3

| The date format should be MM/DD/YYYY

| CheckValidDateFormat_usp

|

| 4

| Card Number should be an Empty value

| CheckIsRequired_usp

|

| 5

| Should be a valid Card Number

| CheckValidCreditCardNumber_usp

|

| 6

| Should be a valid Card Type

| CheckCardTypeValid_usp

|

| 7

| Expiry Date must be within a range of 1997 To 2020

| CheckIsDateInValidRange_usp

|

Table 2: Rule wise stored procedure.

The main attraction!! The script that executes Stored Procedures Asynchronously via Agent Job:

We have our Stored Procedures and Metadata table ready.

Now let us have a look on the main attraction, the scripts which will create

SQL Agent Job steps on the fly and run our Stored Procedures In parallel mode.

CREATE PROCEDURE ExecuteSQL_ByAgentJob_usp(

@SqlStatemet VARCHAR(4000),

@SPNameOrStmntTitle VARCHAR(100),

@JobRunningUser VARCHAR(100) = NULL,

@JobIdOut UNIQUEIDENTIFIER OUTPUT

)

AS

BEGIN

SET NOCOUNT ON;

DECLARE @JobId UNIQUEIDENTIFIER,

@JobName VARCHAR(250) = NULL,

@DBName VARCHAR(100) = DB_NAME(),

@ServerName VARCHAR(100) = @@SERVERNAME

SET @JobName = @SPNameOrStmntTitle + '_' + CONVERT(VARCHAR(64), NEWID())

IF @JobRunningUser IS NULL

SET @JobRunningUser = SUSER_NAME()

EXECUTE msdb..sp_add_job @job_name = @JobName, @owner_login_name = @JobRunningUser,

@job_id = @JobId OUTPUT

EXECUTE msdb..sp_add_jobserver @job_id = @JobId, @server_name = @ServerName

EXECUTE msdb..sp_add_jobstep @job_id = @JobId, @step_name = 'Step1', @command

= @SqlStatemet,@database_name = @DBName, @on_success_action = 3

DECLARE @sql VARCHAR(250) = 'execute msdb..sp_delete_job @job_name=''' + @JobName + ''''

EXECUTE msdb..sp_add_jobstep @job_id = @JobId, @step_name = 'Step2', @command = @sql

EXECUTE msdb..sp_start_job @job_id = @JobId

SET @JobIdOut = @JobId

END

Main logic taken from here

The stored procedure

works as follows:

- Creates a job with an unique name, register it to the sever.

The has two steps.

- In Step-1:

the job executes the provided SQL Statement.

- In Step-2 : the job executes command to delete

itself.

- The procedures also return the JobId for further

reference.

Breaking the queries into small chunks

Now

we have everything to run the our stored procedures asynchronously. But what

about to run them in a small chunk. Now let me say few words about the benefits

of executing resource intensive talks in a smaller chunk.

- if we run 100 parallel tasks there is a

significant chance for the server to go out of resource with a error like " Insufficient System

Memory To Run This Query".

- If we need to forcefully stop query execution

only current chunk will be stopped and it ensures no further processing.

We have total 7 stored

procedures here, we will run 3 procedures at a time. In that case the chunk

execution scenario will be as follows:

| SL#

| Stored Procedure

| Chunk #

|

| 1

| CheckValidDate_usp

| 1

|

| 2

| CheckDateGreaterThanAnotherDate_usp

|

| 3

| CheckValidDateFormat_usp

|

| 4

| CheckIsRequired_usp

| 2

|

| 5

| CheckValidCreditCardNumber_usp

|

| 6

| CheckCardTypeValid_usp

|

| 7

| CheckIsDateInValidRange_usp

| 3

|

Table 3: Chunk wise

script distribution

This chunk size actually

depends on case to case so I prefer in real life, better to keep this value will be in a configuration

table so that any time this value can be changed.

Arrangements for running the SPs asynchronously:

To the run the queries

asynchronously we need to keep in mind the following arrangements.

- Prepare and keep all queries/stored

procedures(with required parameters) in a temp table or table variable.

- Execute the first chunk of using "ExecuteSQL_ByAgentJob_usp" SP with

the dynamic statement prepared at previous step and wait until first chunk to

be finished and run the subsequent chunks.

- Monitor the scripts by querying the metadata

table "AsyncProcessStatusLog".

- Notify user for any untoward situation during run time.

Now let us discuss each

steps in details.

CREATE PROCEDURE Customer_Validator_usp

AS

BEGIN

SET NOCOUNT ON;

DELETE FROM AsyncProcessStatusLog

DECLARE @Scripts TABLE (

Id INT NOT NULL IDENTITY(1, 1) PRIMARY KEY,

Script NVARCHAR(4000)

)

INSERT INTO @Scripts

SELECT 'EXEC CheckValidDate_usp'

UNION ALL

SELECT 'EXEC CheckDateGreaterThanAnotherDate_usp'

UNION ALL

SELECT 'EXEC CheckValidDateFormat_usp'

UNION ALL

SELECT 'EXEC CheckIsRequired_usp'

UNION ALL

SELECT 'EXEC CheckValidCreditCardNumber_usp'

UNION ALL

SELECT 'EXEC CheckCardTypeValid_usp'

UNION ALL

SELECT 'EXEC CheckIsDateInValidRange_usp'

DECLARE @sql NVARCHAR(4000),

@Itr INT,

@RecCount INT,

@ScriptTitle VARCHAR(200),

@JobId UNIQUEIDENTIFIER

DECLARE @TotalScriptSentToJob INT = 0,

@MaxValidatonProcedureToRunAtaTime INT = 3,

@IsChunkProcessing BIT

SET @Itr = 1

SET @RecCount = (

SELECT COUNT(*)

FROM @Scripts

)

WHILE (@Itr <= @RecCount)

BEGIN

SELECT @sql = t.Script

FROM @Scripts t

WHERE id = @Itr

SET @ScriptTitle = LEFT(REPLACE(@sql, 'EXEC ', ''), 10)

EXEC ExecuteSQL_ByAgentJob_usp

@SqlStatemet = @sql,

@SPNameOrStmntTitle = @ScriptTitle,

@JobRunningUser = 'sa',

@JobIdOut = @JobId OUTPUT

SET @TotalScriptSentToJob = @TotalScriptSentToJob + 1

IF (@TotalScriptSentToJob = @MaxValidatonProcedureToRunAtaTime)

BEGIN

SET @TotalScriptSentToJob = 0

SET @IsChunkProcessing = 1

END

IF (@IsChunkProcessing = 1)

BEGIN

DECLARE @Result INT

EXEC @Result = Wait_Unitl_Chunk_ToBe_Finished_usp

PRINT 'I am waiting the chunk to be finished.'

IF (@Result = -1)

BEGIN

RAISERROR ('Exception occured in some srored procedure.', 17, 2)

RETURN -1

END

ELSE

BEGIN

SET @IsChunkProcessing = 0

END

END

SET @sql = ''

SET @Itr = @Itr + 1

END

EXEC @Result = Wait_Unitl_Chunk_ToBe_Finished_usp

PRINT 'I am waiting the last chunk to be finished.'

IF (@Result = -1)

BEGIN

RAISERROR ('Exception occured in some srored procedure.', 17, 2)

RETURN -1

END

PRINT 'I am finished!'

END

PART-1 Preparing and Storing Scripts

in a table variable

The

scripts are kept into a table variable in a straight forward way just to reduce

complexity. But in real life situation please find out your won suitable way to

prepare the scripts with associated parameters dynamically and store them to

the table variable.

PART-2 Variable declaration Section

Here required variables have been declared. Not all are

needed to be described here but I would like to tell few words about "@TotalScriptSentToJob"

and "@MaxValidatonProcedureToRunAtaTime"

variables. The "@MaxValidatonProcedureToRunAtaTime" variable stores

the value of how many Stored Procedures will run at a time(Chunk Size) and

"@TotalScriptSentToJob"

keeps the incremented value of how many Stored Procedures already sent to SQL

Agent for asynchronous processing. The purpose of "@IsChunkProcessing" is to

decide whether the system will go to wait state to stop processing any other

queries until the current chunk to be finished.

PART-3 Beginning

of the WHILE LOOP

Prepare Job name, and

sending the command to the SQL Agent job for parallel processing.

PART-4 Decides

to go for WAIT state

After

sending scripts to the Agent Job the value of "@TotalScriptSentToJob" get

incremented and get compared with "@MaxValidatonProcedureToRunAtaTime" to determine whether max number of Stored Procedures already been sent against a chunk or not. If max number of Stored

Procedures already sent then it reinitialize "@TotalScriptSentToJob" with

"0" and "@IsChunkProcessing " with "1" .

PART-5 Wait

State:

If

get "@IsChunkProcessing

=1". The system goes to wait state until the current asynchronously

running stored procedures to be finished. How system goes to WAIT state after

finishing a chunk, has been described in details later in this article. This

PART also terminate the whole process for any error occurs during run time.

PART-6 WAIT

State When Last chunk value is always less than the actual chunk

At

the end, once again the system check for any running/active statements and waits for them to be completed. This situation takes place when the last chunk value

is always less than the actual chunk

value.

To

get a clear idea about this, just have a look on the Table 3 (Chunk wise script

distribution) where we have total 7 stored procedures and we have divided them

into 3 chunks like 3+3+1. In the last chunk only one procedures will run. In

this situation the following code block never comply with this situation.

IF (@TotalScriptSentToJob >= @MaxValidatonProcedureToRunAtaTime)

Because the value of "@TotalScriptSentToJob" will be "1" but our initial

chunk size is set to 3 (@MaxValidatonProcedureToRunAtaTime=3) . So the value of "@IsChunkProcessing" will never a get a value "1". Thus no WAIT state will happen for this chunk inside the WHILE LOOP. To get rid

from this , the "EXEC @Result = Wait_Unitl_Chunk_ToBe_Finished_usp" statement

has been used once again after END block of WHILE loop.

How system maintains WAIT state after finishing a chunk

The system goes in wait

state by executing "EXEC @Result = Wait_Unitl_Chunk_ToBe_Finished_usp". This

procedures run a infinite loop and checking the status of each procedures running

under this chunk by querying the "AsyncProcessStatusLog " metadata table.

Let us see the implementation:

CREATE PROCEDURE Wait_Unitl_Chunk_ToBe_Finished_usp

AS

BEGIN

WHILE (1 = 1)

BEGIN

WAITFOR DELAY '00:00:3'

IF EXISTS(

SELECT 1

FROM AsyncProcessStatusLog aps

WHERE aps.ProcessStatus = 'F'

)

RETURN -1

IF NOT EXISTS(

SELECT 1

FROM AsyncProcessStatusLog aps

WHERE aps.ProcessStatus = 'R'

)

RETURN 1

END

END

You may remember from "How to write The Stored

Procedures/Statements" section that when the each procedures start running it

writes a row in the metadata table with an initial status (ProcessStatus)

"R" indicates "Running". For any exception the status is

updated with a value of "F" indicates "Failed". If the

procedures runs successfully the status becomes "S" means "Success". The infinite loop breaks when there is no

active (running) queries in the job. The

procedure return 1 in normal situation and -1 if any exception occurs.

Using the code

So far we have enough idea about the architecture. Now let's

run the scripts and see the how queries are running in parallel and chunk by chunk.

- Please make sure SQL Agent Service is running.

- Extract AsyncSQLScripts.zip and execute DB_Metadata_Table.sql. This will

create a test database with metadata table. Afterwards, execute TestStoredProcedures.sql,

ExecuteSQL_ByAgentJob_usp.sql , Wait_Unitl_Chunk_ToBe_Finished_usp.sql sequentially.

- Finally execute Customer_Validator_usp.sql. Now

we are ready to test our code. Close all open window of SSMS.

- Open

two new blank query tab. Write following script in the respective tabs.

Query Tab 1

USE TestAyncDB

GO

EXEC Customer_Validator_usp

Query Tab 2

SELECT apsl.StoredProcedureName,

apsl.StartTime,

apsl.EndTime,

DATEDIFF(second, apsl.StartTime, apsl.EndTime) AS ElapsedSeconds

FROM AsyncProcessStatusLog apsl

ORDER BY

apsl.StartTime

- Go to Window Menu and Press "New Vertical

Tab Group" just to see the

windows side by side.

- Now execute the scripts of Query Tab 1 ( "EXEC Customer_Validator_usp") afterwards, quickly switch to Query

Tab 2 and press F5 continuously after couple of seconds.

- You will see 3 stored procedures (First Chunk)

start running at the same time(In Parallel)

like below:

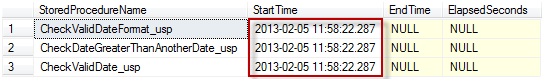

Figure 1: Query Running in parallel mode for first chunk.

in the image below, the second chunk has stared and one stored

procedure already finished and other two procedures is running in parallel.

Figure 2: Second chunk is running.

The next image shows all Stored procedures finished running

asynchronously in 3 separate chunks.

Figure 3: All stored procedures has finished running in 3 chunks.

- Now go back to Query Tab 1 and see the

"Messages" tab in the result

pane. You will find something similar like this.

Figure 4: Message generated by SSMS.

System goes to wait state after executing each chunk

(3+3+1).

The Real Benefit

If

we would run the query synchronously(one after another) the first stored procedure will wait for

the second procedures to be finished. In that case, it will take 1 minute 10 seconds

to finish (as we have put several WAITFOR DELAY inside the stored

procedures).

| Stores Procedure

| WAITFOR DELAY (Seconds)

|

| CheckValidDate_usp

| 10

|

| CheckDateGreaterThanAnotherDate_usp

| 10

|

| CheckValidDateFormat_usp

| 15

|

| CheckIsRequired_usp

| 5

|

| CheckValidCreditCardNumber_usp

| 10

|

| CheckCardTypeValid_usp

| 10

|

| CheckIsDateInValidRange_usp

| 10

|

| Total

| 70 Seconds

|

Table 4: WAITFOR DELAY setting inside each stored procedures.

Now let us run another query

to determine how much time it took to complete all 7 stored procedures in asynchronous mode.

SELECT MIN(apsl.StartTime) MinStartTime,

MAX(apsl.EndTime) MaxEndTime,

DATEDIFF(second, MIN(apsl.StartTime), MAX(apsl.EndTime)) AS

ElapsedSeconds

FROM AsyncProcessStatusLog apsl

The output will be:

| MinStartTime

| MaxEndTIme

| ElapsedSeconds

|

| 2013-02-05 12:00:58.287

| 2103-02-05 12:01:38.283

| 40

|

It took only 40 seconds to finish.Thus we have saved 30 seconds and got almost 42% performance improvement.

Lesson Learned During

from Real Life Implementation

- Please be 100% careful

about the following 2 exception inside the Stored Procedures code that you should

consider for parallel execution. Because SQL Server TRY CATCH block does not honor this type of exception.

- Compile errors, such as syntax errors that

prevent a batch from executing.

- Errors that occur during statement-level

recompilation, such as object name resolution errors that happen after

compilation due to deferred name resolution.

In

this situation, the stored procedure's running ("R") state for ever and will never

changes thus the whole process will hang on by WAIT State. You must have to

manually kill the process to break situation then.

- Please add an extra

code block inside " Wait_Unitl_Chunk_ToBe_Finished_usp" stored procedure to

break to loop after a defined time. Because if the system went into a long wait state

for a long running query or any non recognized system exception mentioned above so that other query can have slot or resource to execute.

- Have a look on the

SQL Agent Job history and clean up any non deleted job(for exception)

periodically. The ExecuteSQL_ByAgentJob_usp

stored procedures gives JobId as an output parameter you can save this to the

metadata table for future reference to identify which job got exception and delete them automatically.

Conclusion

Executing long running queries asynchronously not only save

time but also ensure maximum usages

of CPU and memory. On top of this, not running all queries at a time,

running them in a smaller chunks also prevent the queries from occupying all

system resources so that that the queries do not suffer from lack of resources.

Thus the overall system runs in a managed and optimized fashion.