Introduction

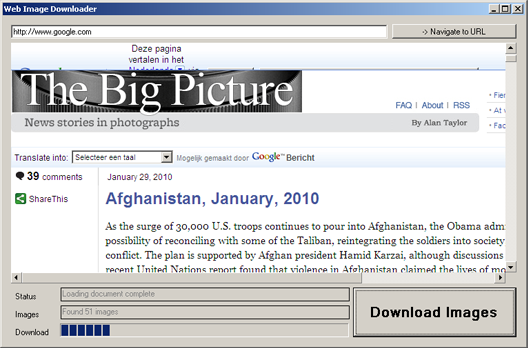

There is a Boston Globe website called 'Big Picture' (http://www.boston.com/bigpicture/), and I love it. It shows pictures of people, animals, buildings, events, and all kinds of other things happening around the world. The image quality is excellent, and I use them as desktop backgrounds a lot.

I found myself using 'Save As...' on a lot of images and wanted something to do it for me. The .NET Framework makes this kind of thing so easy that I wrote an application to download the images automatically in a matter of minutes.

Note: I use the pictures as desktop backgrounds. Please respect the photographers' rights, and don't use this app for anything illegal.

Solution

Making an application like this is really easy. All you need to do is:

- Get the website contents (HTML) of a specified page

- Get the image URLs from the HTML

- Download all the images
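The three steps above can be sketched as a single method. This is a minimal sketch, not the code from the application: the names `ImagePipeline` and `Run` are hypothetical, fetching and downloading are passed in as delegates so the `WebBrowser`/`WebClient` parts can be swapped out, and step 2 uses a simple regular expression over raw HTML instead of the browser's DOM (an assumption that works for ordinary `<img src="...">` markup but is not a full HTML parser).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Hypothetical helper: the three steps wired together.
public static class ImagePipeline
{
    // Simplified <img ... src="..."> matcher (regex swapped in for the WebBrowser DOM).
    private static readonly Regex ImgSrc = new Regex(
        @"<img\b[^>]*?\bsrc\s*=\s*[""']([^""']+)[""']",
        RegexOptions.IgnoreCase);

    // getHtml: page URL -> HTML (e.g. a WebBrowser's DocumentText, or a WebClient call)
    // downloadImage: called once per image URL found on the page
    public static int Run(string pageUrl,
                          Func<string, string> getHtml,
                          Action<string> downloadImage)
    {
        // 1. Get the website contents (HTML).
        string html = getHtml(pageUrl);

        // 2. Get the image URLs, ignoring repeats of the same src value.
        List<string> imageUrls = ImgSrc.Matches(html)
            .Cast<Match>()
            .Select(m => m.Groups[1].Value)
            .Distinct()
            .ToList();

        // 3. Download all the images.
        foreach (var url in imageUrls)
            downloadImage(url);

        return imageUrls.Count;
    }
}
```

Note that the extracted `src` values may still be relative (for example `a.jpg`), so a real download step would have to resolve them against the page address first.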

Here is how I did it:

- What I did was add a `WebBrowser` component to my Windows Form, starting up in Google. The user can then navigate to whatever page he or she likes. To get the source of the current document, I use the `WebBrowser.Document` property.

- Then, I extract the `IMG` tags from the HTML using this method:

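```csharp
using System.Collections.Generic;
using System.Windows.Forms;

public static List<string> GetImagesFromWebBrowserDocument(HtmlDocument document)
{
    var result = new List<string>();

    // Body can be null, e.g. when no page has been loaded yet.
    if (document?.Body == null)
        return result;

    foreach (HtmlElement item in document.Body.GetElementsByTagName("img"))
    {
        // GetAttribute returns an empty string when the attribute is missing.
        var src = item.GetAttribute("src");
        if (!string.IsNullOrEmpty(src))
            result.Add(src);
    }

    return result;
}
```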

- Finally, to download the images, I use the `WebClient` class:

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Net;

public static void DownloadFile(string url, string destinationFolder)
{
    using (var web = new WebClient())
    {
        var destination = destinationFolder;
        try
        {
            // Use the last path segment as the file name. Uri.LocalPath contains
            // only the path, so a query string such as "?size=large" is dropped.
            var fileName = Path.GetFileName(new Uri(url).LocalPath);

            // Path.Combine adds a directory separator only when one is missing.
            destination = Path.Combine(destinationFolder, fileName);

            web.DownloadFile(url, destination);
            Debug.WriteLine(string.Format(
                "Download OK - {0} ==> {1}", url, destination));
        }
        catch (Exception ex)
        {
            // Log the reason instead of silently swallowing the failure.
            Debug.WriteLine(string.Format(
                "Download FAILED - {0} ==> {1} ({2})", url, destination, ex.Message));
        }
    }
}
```
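Between the extraction step and the download step, the raw `src` values may need some cleanup: they can be relative, repeated, or `data:` URIs, and the last URL segment is not always a usable file name. The helpers below are a minimal sketch of that cleanup, assuming Windows file-name rules; the class `ImageUrlHelpers`, its methods `Normalize` and `FileNameFromUrl`, and the fallback name `image.jpg` are all hypothetical and not part of the original application.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helpers for cleaning up extracted image URLs.
public static class ImageUrlHelpers
{
    // Characters Windows does not allow in file names
    // (hard-coded so the result is the same on every OS).
    private static readonly char[] InvalidNameChars = "<>:\"/\\|?*".ToCharArray();

    // Resolves relative src values (e.g. "img/a.jpg") against the page address
    // and drops blanks, non-http(s) URIs such as data: images, and duplicates.
    public static List<string> Normalize(Uri pageUri, IEnumerable<string> srcValues)
    {
        var seen = new HashSet<string>(StringComparer.Ordinal);
        var result = new List<string>();
        foreach (var src in srcValues)
        {
            if (string.IsNullOrWhiteSpace(src))
                continue;
            if (!Uri.TryCreate(pageUri, src.Trim(), out var absolute))
                continue;
            if (absolute.Scheme != Uri.UriSchemeHttp && absolute.Scheme != Uri.UriSchemeHttps)
                continue;
            if (seen.Add(absolute.AbsoluteUri))
                result.Add(absolute.AbsoluteUri);
        }
        return result;
    }

    // Takes the last path segment (the query string is not part of AbsolutePath),
    // replaces illegal characters with '_', and falls back when the path ends in '/'.
    public static string FileNameFromUrl(string url, string fallback = "image.jpg")
    {
        var lastSegment = new Uri(url).AbsolutePath.Split('/').Last();
        var name = Uri.UnescapeDataString(lastSegment);
        var cleaned = new string(name
            .Select(c => char.IsControl(c) || InvalidNameChars.Contains(c) ? '_' : c)
            .ToArray());
        return string.IsNullOrWhiteSpace(cleaned) ? fallback : cleaned;
    }
}
```

For example, `FileNameFromUrl("http://www.boston.com/bigpicture/2009/04/bp1.jpg?size=large")` yields `bp1.jpg`, which could then be passed to `Path.Combine` with the chosen download folder.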

I also added a way to select the download folder and some events for updates, but that's all there is to it.
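The update events themselves aren't shown here, so the following is only a guess at their shape: a minimal sketch using the standard `EventHandler<T>` pattern. The names `ImageDownloader`, `DownloadProgressEventArgs`, and `ProgressChanged` are hypothetical, and the actual download is injected as a delegate that returns `true` when a file was saved, so the class can be exercised without a network.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical event payload: which file was just attempted and how far along the batch is.
public class DownloadProgressEventArgs : EventArgs
{
    public DownloadProgressEventArgs(string url, bool succeeded, int completed, int total)
    {
        Url = url;
        Succeeded = succeeded;
        Completed = completed;
        Total = total;
    }

    public string Url { get; }
    public bool Succeeded { get; }
    public int Completed { get; }
    public int Total { get; }
}

public class ImageDownloader
{
    private readonly Func<string, bool> _download; // returns true when the file was saved

    public ImageDownloader(Func<string, bool> download) => _download = download;

    // Raised once per image, after the attempt, so a form can update a progress bar or log.
    public event EventHandler<DownloadProgressEventArgs> ProgressChanged;

    public void DownloadAll(IReadOnlyList<string> urls)
    {
        for (int i = 0; i < urls.Count; i++)
        {
            bool ok = _download(urls[i]);
            ProgressChanged?.Invoke(this,
                new DownloadProgressEventArgs(urls[i], ok, i + 1, urls.Count));
        }
    }
}
```

If the downloads run on a background thread, a Windows Forms handler for `ProgressChanged` has to marshal back to the UI thread (for example with `Control.BeginInvoke`) before it touches any controls.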

Stuff I might want to do in the future

What I could do is add a minimum image size threshold, so that logos and the like aren't downloaded every time. Also, there is no check for duplicate file names yet, but hey, it works fine like this.
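Both ideas are small additions. The sketch below is one possible approach, not something the application already does: `GetUniquePath` appends " (1)", " (2)", and so on until it finds a file name that doesn't exist yet, and `KeepIfLargeEnough` deletes a downloaded file that is smaller than a caller-chosen byte threshold. The class `DownloadChecks`, both method names, and the threshold value are hypothetical.

```csharp
using System.IO;

// Hypothetical helpers for the two "future" ideas.
public static class DownloadChecks
{
    // Returns a path in folder that does not exist yet, appending " (1)", " (2)", ...
    // to the file name (before the extension) until the name is free.
    public static string GetUniquePath(string folder, string fileName)
    {
        string path = Path.Combine(folder, fileName);
        string stem = Path.GetFileNameWithoutExtension(fileName);
        string extension = Path.GetExtension(fileName);

        for (int i = 1; File.Exists(path); i++)
            path = Path.Combine(folder, $"{stem} ({i}){extension}");

        return path;
    }

    // Size threshold: deletes the file if it is smaller than minBytes
    // (e.g. logos and icons) and returns whether it was kept.
    public static bool KeepIfLargeEnough(string path, long minBytes)
    {
        if (new FileInfo(path).Length >= minBytes)
            return true;

        File.Delete(path);
        return false;
    }
}
```

Checking the size after the download is the simplest option; reading the `Content-Length` response header first would save bandwidth, but servers don't always send it.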