Introduction

The original FDomain and FGrid classes are not available anymore. FDomain and FGrid are dummy data structures in this source code. Hence, dummy data is written during the computational phase to illustrate the concept of parallel I/O. The performance of the parallel I/O in this source code is probably not optimum due to the choose data size and data structure. In general, the parallel I/O read and write operations can be applied to many parallel applications.

Pre-requisite to run the code: MPI and parallel HDF5 library are installed. phdf5.h5 (simulation initialization file) is located in the same directory as the other source codes. HDFView is not required to run the codes, but will help visualization and modification of the *.h5 file.

Command to run the code in Linux: mpiexec -n 5 ./phdf5

Note: The code needs to be run with 5 processes because there is a checking in the phdf5.h5. The offset_array in phdf5.h5 needs to be changed accordingly if the number of processes to run the code is not 5.

Introduction

This project explores the efficient parallel I/O access using the parallel HDF5 library available at http://www.hdfgroup.org/HDF5/. The code will read and write simulation data in parallel. The parallel I/O functionality is implemented in the FHDF class. The two main functions of the FHDF class:

- parallel read initial simulation data stored in the HDF5 file to restart simulation

- parallel write simulation data during computational phase to the HDF5 file

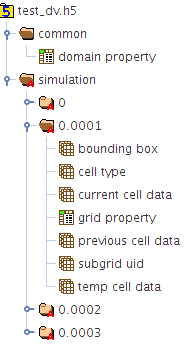

The design of the parallel I/O file structure using the parallel HDF5 library is shown below:

Note: The HDF5 (serial version) is a great data storage and management tools for many serial applications. Depending on the application data structure, the I/O performance could be better than the typical file pointer read/write in addition to great visualization capability.

Background

One of the purposes of performing simulation is to analyze the evolution of some physical properties such as the velocity across time and space. Hence, it is customary to write the numerical values of these physical properties, during the simulation, into files for further post processing and analysis. Proper data storage and management are becoming a critical issue especially for simulation which generates a vast amount of data. With the advance of supercomputer facilities to accommodate compute-intensive simulation, more data files are generated unfortunately and these files require more memory and disk for storage and management.

In the sequential approach (figure on the left), all processes send data to one process, for example process 0, and process 0 write the data to a file. During the reading phase, process 0 read from the file in the storage hardware and then distribute the data to all other processes. From the perspective of file I/O access, this approach works more efficiently when the number of processes is small. As the number of processes increases, there exists a bottleneck at process 0 to read from or write to the storage hardware. The lack of parallelism in file I/O limits the scalability performance. Furthermore, a good communication scheme is required to ensure process 0 receives or distributes the data correctly to other processes. Indirectly, this creates a high memory demand at process 0 to store all the data. This approach will not work if the memory demand is higher than the available memory at the single process in some supercomputer centers.

|  |

To overcome the limitation of communication and memory demand, some parallel applications read or write individual data file (figure on the right). The main disadvantages of this approach is a huge number of files are required/created as the number of processes used for the computation increases. Furthermore, a high demand is placed in the post processing phase because information needs to be collected from each individual file and assembled into a final data file. In file system of some supercomputer centers, for example, the supermuc at LRZ, this approach is prone to I/O error due to timeout error or crashes because the parallel file system at supermuc is tuned for high bandwidth but not optimal to handle a large quantity of small files located at a single directory, for example, a parallel program or simultaneously running job which generates ~ 1000 files per directory.

With the introduction of parallel I/O in MPI-2, this provides the possibility of writing a single file by all participating parallel processes. This is achieved by setting the file view through MPI_File_set_view, where a region in the file is defined for each process to read or write. The data will be written in binary format, a machine readable format. Thus it always requires a post processing step to transform the binary format into ASCII format if there is a need to analyze the data, for example, for debugging. Additionally, the binary files may not be portable to other supercomputers due to different endianness.

A high level I/O library, for example the HDF5 library, provides an interface between the application program and the parallel MPI-IO operation. The experts of the library encapsulate the MPI-IO library, optimize and add other useful features to build a high level parallel I/O library. The user of the library requires only to apply this knowledge in their parallel program. This will in general save the development and optimization time for parallel I/O in an application program.

Using the Code

The parallel read and write operation are implemented in the FHDF class. It uses the FDomain and FGrid classes which are unavailable due to legal reason. Both the FDomain and FGrid classes define the data structure of a CFD code, which varies from one CFD code to another CFD code. However, the design and implementation concept of the FHDF can be applied to many simulations.

MPI_Comm_size(MPI_COMM_WORLD, &comp_size);

int rank;

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

bool success;

fd = new FDomain();

FHDF *fhdf = new FHDF(MPI_COMM_WORLD, comp_size, rank, fd, success);

fhdf->writeDomainData(offset_array);

fhdf->writeGridData(offset_array);

hid_t grid_tid = H5Tcreate(H5T_COMPOUND, sizeof(FGrid);

H5Tinsert(grid_tid, "mytag", HOFFSET(FGrid, mytag), H5T_NATIVE_INT);

H5Tinsert(grid_tid, "depth", HOFFSET(FGrid, depth), H5T_NATIVE_SHORT);

hsize_t vector_dim[] = {3};

hid_t vector_DT_INDEX_tid = H5Tarray_create(H5T_NATIVE_UINT, 1, vector_dim);

H5Tinsert(grid_tid, "n", HOFFSET(FGrid, n), vector_DT_INDEX_tid);

H5Tclose(vector_DT_INDEX_tid);

H5Dwrite(dataset, datatype, dataspace(memory space), dataspace(file space), transfer property, buffer); ;

H5Dread(dataset, datatype, dataspace(memory space), dataspace(file space), transfer property, buffer); ;

The available report explains the design and implementation, advantages and problem encountered during this project. Most importantly, it gives you the main concept of an efficient parallel I/O design and implementation. Reading the report will help you understand the code.

The code can be easily adapted to other simulations by replacing the data structures in this code with the corresponding data structures of other simulations.

Points of Interest

With the vastly available multi-core processes, parallel programming plays an important role in shaping the programming trend for efficient application today and in the future. We observe exponential growth in computing power according to Moore's law since the last two to three decades. A crucial part in efficient parallel programming is the input and output. However, the performance of I/O access, even in parallel I/O access, is lacking behind. The growing imbalance has prompted more researches in efficient and scalable I/O, especially in parallel programming.

It is a well known fact that three dimensional (3D) array has inferior performance typically than one dimensional (1D) array in term of memory layout, memory access and cache efficiency in computation. However, the performance comparison between a 3D array and a 1D array data structure in file I/O access is less known. The result in the report.pdf shows that the performance of parallel I/O using the HDF5 library can be better than the writing in binary using the typical C/C++ file pointer.

A general approach in the current parallel computing is to distribute the computation domain/data size to the processes such that they can fit into the cache. Such strategies may observe superlinear scaling. This is the optimum solution from a computational perspective.

Unfortunately, as we have observed earlier, writing a small data array to the file I/O incurs bad I/O performance. Hence, we have two optimum solutions independently but are contradict each other nevertheless. Thus, a balance needs to be maintained among them or a new approach needs to be discovered to overcome this limitation.

The new trend in parallel programming is the new programming model of combining both the distributed and shared memory access. Together with the advanced interconnect technology, for example, the Intel Quick Path Interconnect technology, an improved I/O scalability performance can be observed without heavily relying on the communication efficiency and the I/O performance of a single process.

History

- 23rd April, 2013: First submission