Introduction

One of the main actions in a C program, (which underlies many other

programming languages) is memory management. When the scope of this

resource is global (remains after function exit) but is unknown at

compile time, we use the malloc(), calloc(), realloc() set of resources,

or their new() dispose() C++ avatars. if we are programming Java code,

a hidden gnome does that for us.

To fully understand the importance of this subject, let us think about

terms like "trash collection", "smart pointers" or "Java" itself. Such

very productive, but time wasting and past prone to errors technologies

are built among other reasons to deal with this subject.

Background

Where does "C" language come from? "C" language is a bit above

assembler and started to run in low resource computers, where memory was

really valuable. Even nowadays, you can find "C" or a subset of "C++"

code running in tiny microcontrollers like your car keyfob, washing

machine or shaver. Programming microcontrollers is great delight for me,

but we must understand that saving 32 bytes of memory when you only has

2.000 is not the same thing as when you have 4.000.000.000. Well, it

seems the compiler does not mind this subject, and the algorithm is

almost the same in both cases. There is little optimization. In fact malloc() takes memory in chunks not smaller than a chosen power of two

(16, 32, 64, ... bytes) mainly for alignment purposes, which made

processor more efficient. You must include the size of the internal

control data in the block. This is one of the reasons your program does

not hang when you ask for a bit less memory than you need (forgetting

the trailing null size in a string, for example) and also the reason of

packages like valgrind to detect this fact in your code.

Fresh

memory allocation is not a problem. libc only needs to take a piece of

memory on the top of the heap and shorten the remaining block. The

problem comes when you free a used memory block, not located on the top.

This creates a "hole" which must be reused. The heap solves this keeping

a linked list of deleted blocks. When you need a new block, malloc()

must iterate this list, in search for your block. Additionally, adjacent

blocks are merged together to keep the heap as unfragmented as

possible.

Benchmarks

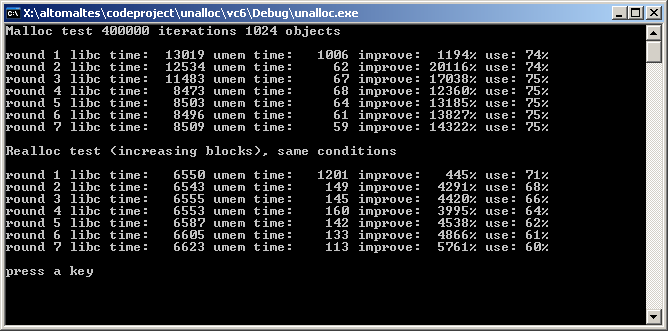

To prevent you from stop reading, and make sure

you do your homework, here you are an snapshot of my test program

(included on article). Look at the improve column. It shows the increase of speed compared to standard memory functions, and the use is the amount of memory used by program in our version.

A funny fact is that vc6 is 120 times faster, and

MinGW "only" times 21 than standard malloc(), but in

realloc() the ratios are 45 .. 300 times. The reason is explored below, but

realloc() is not as important as malloc(), free(), because in real programs (especially underlying new() and

delete() in C++ ) is almost no used.

Compiled under Visual Studio:

Compiled under mingw:

As you can see, Visual Studio does fast reallocs also, this made me suspect they does no "real" reallocs also, but always growing reallocs.

Proposed improvement.

Let's take an speculative approach to this issue.

malloc()-free() are normally used in a particular fashion from all

possible situations. Both functions underlies new() and delete()

operators,

and the type of objects we have in a real program is limited. An

examination of the heap as C++ program "warms" will show that the size

of blocks is organized in sets of fixed sizes, corresponding to objects.

alternated by free memory blocks of those sizes, form deleted objects.

when you request a block, malloc() steps the linked deleted list in

search for a big enough block. free() concerns on keeping the memory unfragmented, merging adjacent free blocks becomes useless many times,

because the fixed nature of the blocks requested.

I propose to

use only blocks of fixed sizes (powers of two) and keep the freed

blocks in single linked lists in a LIFO fashion. This allows that both

inserting (free) and removing (alloc) of a block can be done in a single

step, highly improving the speed of operations. When the memory block

needed is not present, will be requested to standard malloc() and once in the list never exited (till the program end).

The

cost of this way, is the not used memory is each block is more or less a

25% of the total block size. As a lateral effect the realloc() is a hundred times faster in the benchmark.

This is a memory layout based in power of two chunk sizes. There are

another possible layouts with bigger chunk tables and so, less unused

pad memory. This is a table showing possibilities for several

combinations, allowing allocating memory block sizes from 32 bytes to 8

MB.

| Chunk table size | Average used memory |

| |

| 10 pointers | 75% ( this example ) |

| 20 pointers | 88% |

| 40 pointers | 93% |

| 80 pointers | 97% |

Above table possibilities, needs a little more coding (not covered in this article), and this will penalize speed a little on unalloc(). The realloc() function is also compromised on growing blocks, since the possibility of need of moving increases at more accurate blocks. free() remains almost

unaffected. The theory is simple. More block sizes to choose from means<code>

Using the code

That's really simple. You must use

unalloc(), unfree(), and unrealloc() instead of

malloc(), free(), and realloc(). Here you are the code I've used to do the test, which may be a good working example. Win32 lacks

times() and so you have a workaround in the demo code to emulate it.

#define POOL_SIZE 1024

#define ITERATIONS 1000000

#define ROUNDS 7

unsigned randSize( ) { unsigned rnd= rand(); return(( rnd & 0x7FFF ) << 1 ); }

unsigned randIdx( ) { unsigned rnd= rand(); return( rnd & (POOL_SIZE-1) ); }

void mallocTest( int round )

{ static void * buffer[ POOL_SIZE ]; static void * unbuffer[ POOL_SIZE ];

int loop;

unsigned tm1,tm2;

for( loop= 0, tm1= times( NULL )

; loop < ITERATIONS

; loop++ )

{ int idx= randIdx();

if ( buffer[ idx ] )

{ free( buffer[ idx ] );

}

buffer[ idx ]= malloc( randSize() );

}

tm1= times( NULL ) - tm1;

for( loop= 0, tm2= times( NULL )

; loop < ITERATIONS

; loop++ )

{ int idx= randIdx();

if ( unbuffer[ idx ] )

{ unfree( unbuffer[ idx ] );

}

unbuffer[ idx ]= unalloc( randSize() );

}

tm2= times( NULL ) - tm2;

printf("round %d libc ticks: %6u umem ticks: %6u ", round, tm1, tm2 );

tm1 *= 100; tm1 /= tm2; tm1-=100;

printf("improving: %5u%%\n", tm1 );

}You can try several values for POOL_SIZE 1024 and ITERATIONS and

randSize() to test the improvements.

The reason I think realloc() is so fast in VC6 is that this compiler uses yet the "non shrinking size" optimization, so there are no need of move

blocks of memory, that is time consuming. This is only a theory.

You can start both

MinGW and VC6 executables and blame MS for it.

File list

Files included in ualloc_source.zip:

- main.c: Test program

- memory.c: Source code for

- memory.h: Include file for above

- windows.c: Windows emulation for UNIX

times() function - unalloc.cbp: Project file for code:::blocks

- build.bat: Build file for windows.

- build: Build file for UNIX.

- bin/debug/unalloc.exe: MinGW 32 bit executable

- vc6: Visual

Studio project

- vc6/debug/unalloc.exe: Visual Studio 32 bit executable

Files included in

ualloc_demo.zip:

- mingw.exe: MinGW 32 bit executable

- vc6.exe: MinGW 32 bit executable

Points of Interest

This is a subset of a bit bigger project. The main goal of the complete

code is give the "C++" delete() concept ( execute shutdown code before

free memory resources) to "C" code, implement also a "cascade disposal"

tree, by giving a parental relationship among objects, and when you

destroy one of the items, all of their children will be also destroyed,

and a system to recover the memory freed by globally allocated objects

no longer needed, but this is another history.

History

One of the reason of writing articles in this programmers forum is

making stronger my communication skills. This is a job I've done

successfully in my native language, but using another language with no

loss of hues and nuances is challenging. I would like for a several

years technological work experience abroad for a time and showing some

of my hidden cards might help.