ABSTRACT

This article presents a detailed overview of the multicore and parallel programming options available within the C# programming language. In addition, other programming languages and libraries which support multicore programming, such as OpenMP, Threading Building Blocks (TBB), Message Passing Interface (MPI), Cilk++, and OpenCL, are compared and contrasted.

THE C# PROGRAMMING LANGUAGE

The C# programming language is primarily designed for the Windows operating system and is available in the Visual Studio Integrated Development Environment (IDE). The language is well suited for rapid application development, as its IDE is very feature rich and the language itself is supported by an extremely large collection of tools and classes. In terms of performance, C# falls between C++ and Java, with some benchmarks showing up to a 17% performance gain over Java [1]. “The reference C# compiler is Microsoft Visual C#, which is closed-source. However, the language specification does not state that the C# compiler must target a Common Language Runtime, or generate Common Intermediate Language (CIL), or generate any other specific format. Theoretically, a C# compiler could generate machine code like traditional compilers of C++ or Fortran.” [2]

Applications designed in C# typically only function on the Windows operating system, with the exception of applications deployed on the World Wide Web. However, there are many open source compilers available for C#. The most widely used is Mono, whose compiler is dual-licensed under GPLv3 and MIT/X11 and whose libraries are licensed under LGPLv2 [3]. Using an open source compiler, applications developed in C# can be deployed on other operating systems such as Linux. The .NET Framework is required for executing C# applications unless an open source alternative such as Mono is deployed.

PRIMARY TYPES OF MULTICORE DEVELOPMENT

Starting with low level concepts such as “bit parallelism” (i.e. 32 vs. 64 bit processing), many different types of parallelism exist, including Instruction Level Parallelism, Data Parallelism, SIMD (Single Instruction Multiple Data), Task Parallelism, and Accelerators. However, in terms of multicore software development, most projects can be divided into two primary categories: Asymmetric Multiprocessing (AMP) and Symmetric Multiprocessing (SMP). Of course, many exceptions to this general rule exist. However, for purposes of this document, understanding the core distinction between AMP and SMP is critical for choosing an optimal multicore development platform and architecture.

Asymmetric Multiprocessing

Multicore development projects which implement asymmetric multiprocessing are typically deployed for very low-level, specialized tasks. The hardware upon which asymmetric multiprocessing applications execute includes a collection of two or more processors utilizing heterogeneous operating systems which do not typically have shared memory. AMP systems achieve high levels of Data Parallelism by dedicating one or more processors to handling very specific data processing tasks. Under this type of multicore development scenario, a pure C# implementation is most likely not the optimal choice. While there are third party libraries which allow C# to deploy AMP solutions [6], and the .NET 4.5 framework has included optimizations for Non-Uniform Memory Access (NUMA) architectures [5], the effort currently required to deploy an AMP solution in C# is similar to that of a C++ implementation, which would possibly deliver higher performance.

Symmetric Multiprocessing

The most common form of multicore development is Symmetric Multiprocessing (SMP). Under the SMP architecture, high levels of Task Parallelism are achieved through distribution of different applications, processes, or threads to different processors, typically using shared memory and homogeneous operating systems. The C# programming language excels primarily in rapid SMP application development, offering high levels of performance and one of the largest collections of parallel classes and thread-safe data structures available. The C# suite of multicore development features distinguishes itself from other multicore development libraries such as OpenMP by offering both lower-level thread programming support and higher-level parallel programming constructs such as the Parallel class with its Parallel.For() and Parallel.ForEach() methods. In addition to a large number of multicore processing constructs, C# also includes a large variety of concurrent data structures, queues, bags, and other thread-safe collections. Using the multicore development features available in C#, common parallel programming abstractions such as Fork-Join, Pipeline, Locking, Divide and Conquer, Work Stealing, and MapReduce can be quickly implemented, drastically reducing project timelines when compared to other multicore development languages.

MULTICORE DEVELOPMENT ALTERNATIVES

While there are a very large number of other development alternatives in the multicore marketplace, some of the more well-known offerings include OpenMP, OpenCL, Threading Building Blocks (TBB), Message Passing Interface (MPI), and Cilk++. The following sections present high-level feature overviews and comparisons for each library. Understanding these alternatives is critical for choosing the best multicore development solution.

OpenMP

OpenMP is the most widely accepted standard for SMP systems. It supports three languages (Fortran, C, and C++) and has been implemented by many vendors [7]. OpenMP is a relatively small and simple specification, and it supports incremental parallelism: directives can be added one at a time, allowing gradual parallelization [8]. A great deal of research is done on OpenMP, keeping it up to date with the latest hardware developments. OpenMP is also easier to program and debug than MPI [8]. However, OpenMP does not support thread level control or processor affinity [9].

OpenCL

The Open Computing Language (OpenCL) is a lower level “close-to-silicon” multicore development library [4]. OpenCL introduces the concept of uniformity by abstracting away underlying hardware using an innovative framework for building parallel applications. “The current supported hardwares range from CPUs, GPUs, DSP (Digital Signal Processors) to mobile CPUs such as ARM.” [10] While OpenCL offers “parallel computing using all possible resources on end system” [4], multicore development using OpenCL can be quite complex, with a steep learning curve. OpenCL requires configuration of various new abstractions such as “Work Groups”, “Work Items”, “Host Programs”, and “Kernels” to implement its concept of uniformity [4].

Threading Building Blocks

The Threading Building Blocks (TBB) library is Intel’s alternative for multicore development. TBB supports task level parallelism with cross-platform support and scalable runtimes [9]. OpenMP and TBB are similar in that the concept of threads and thread pools has been abstracted away within the library. With either alternative, the developer simply submits tasks without concern for how individual threads or the thread pool are being managed. This approach has both advantages and disadvantages. Using C#, multicore development can be done at either level using the Thread class, the Parallel class, or other available solutions such as LINQ’s AsParallel() method [11].

Message Passing Interface

The Message Passing Interface (MPI) is an AMP multicore development solution. MPI runs on either shared or distributed memory architectures and can be used on a wider range of problems than OpenMP [8]. Unlike the SMP libraries, each MPI process has its own local variables, which helps avoid the overhead of locking. In addition, distributed memory computers are less expensive than large shared memory computers [8], which can be an important factor for large scale multicore development projects. However, being a lower level implementation, MPI can be extremely difficult to code, involving many low level implementation details [8]. In addition, when a distributed memory architecture is used, performance can be limited by the communication network connecting the processors.

Cilk++

Cilk++ is a second multicore development alternative provided by Intel for supporting lower level implementation scenarios which may not be possible using Threading Building Blocks. Development in Cilk++ is a quick and easy way to harness the power of both multicore and vector processing, with the library providing support for both task and data parallelism constructs [12]. With only three keywords, the Cilk++ library is relatively easy to learn, and it provides an efficient work-stealing scheduler and powerful hyperobjects which allow for lock-free programming [12].

PARALLEL PROGRAMMING ABSTRACTIONS AND EXAMPLES USING C#

The beauty of multicore development in C# is the simplicity with which parallel programming abstractions can quickly be implemented. The following section explains some of the primary types of parallel programming abstractions giving examples of how these abstractions can be implemented using the C# language.

Fork-Join

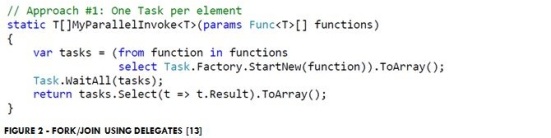

Using the Fork-Join pattern, various chunks of work are “forked” so that each individual chunk of work is executed asynchronously in parallel. After each asynchronous chunk of work is completed, the parallel chunks of work are then “joined” back together. In C#, Func<T, TResult> is a generic “delegate” type which encapsulates a method that has one parameter and returns a value of the type specified by the TResult parameter.

The following examples show four different approaches to the Fork-Join pattern using C#:
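As a minimal sketch of the first approach (not the article's original listing), an array of functions can be started as tasks and then joined with Task.WaitAll(). The three lambdas and the variable names are hypothetical stand-ins for real subroutines.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical units of work; each Func<int> stands in for one subroutine.
Func<int>[] work =
{
    () => 1 + 1,
    () => 2 * 3,
    () => 10 - 4,
};

// Fork: start every function as its own task.
Task<int>[] tasks = work.Select(f => Task.Run(f)).ToArray();

// Join: block until all of the tasks have completed.
Task.WaitAll(tasks);

int[] results = tasks.Select(t => t.Result).ToArray();
Console.WriteLine(string.Join(", ", results)); // 2, 6, 6
```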

In Figure 2 above, an array of functions (each representing an individual subroutine) is started as a set of asynchronous tasks. Using this pattern, the Task.WaitAll() method is then called, joining each of the tasks. All of the individual tasks run simultaneously, and control does not return to the caller until every task has completed. The same pattern is demonstrated below, using a single “parent” task to manage each of the functions, which are attached to the parent once they are started.
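One way the parent-task variant might look is sketched here, assuming the children are attached with TaskCreationOptions.AttachedToParent. The parent is created with Task.Factory.StartNew() because Task.Run() does not allow children to attach. The counter and lambdas are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Threading;
using System.Threading.Tasks;

int completed = 0;

// Hypothetical subroutines; each one simply records that it ran.
Action[] work =
{
    () => Interlocked.Increment(ref completed),
    () => Interlocked.Increment(ref completed),
    () => Interlocked.Increment(ref completed),
};

// Fork: the parent starts one attached child task per subroutine.
Task parent = Task.Factory.StartNew(() =>
{
    foreach (Action action in work)
    {
        Task.Factory.StartNew(action, TaskCreationOptions.AttachedToParent);
    }
});

// Join: the parent does not complete until every attached child has completed.
parent.Wait();
Console.WriteLine(completed); // 3
```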

Similar to OpenMP or Threading Building Blocks, the Fork-Join abstraction can be accomplished using a Parallel.ForEach() loop to avoid dealing with the management of individual threads and tasks:
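A short sketch of the loop-based form follows; the work items and the ConcurrentBag used to collect results are assumptions made for illustration.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading.Tasks;

Func<int>[] work = { () => 1 + 1, () => 2 * 3, () => 10 - 4 };
var results = new ConcurrentBag<int>(); // thread-safe, unordered result store

// Fork and join in one call: ForEach returns only after every item is processed.
Parallel.ForEach(work, f => results.Add(f()));

Console.WriteLine(results.Sum()); // 14
```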

The C# LINQ libraries take this abstraction one step further, accomplishing Fork-Join in a single line:
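With Parallel LINQ the same idea might be sketched as below, where AsParallel() forks the work and ToArray() joins it. The work items are hypothetical, and result order is not guaranteed unless AsOrdered() is added.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Linq;

Func<int>[] work = { () => 1 + 1, () => 2 * 3, () => 10 - 4 };

// Fork-Join in one line: AsParallel() forks, ToArray() joins.
int[] results = work.AsParallel().Select(f => f()).ToArray();

Console.WriteLine(results.Sum()); // 14
```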

Pipelines

In a pipeline scenario, there is typically a producer thread managing one or more worker threads producing data. There is also a consumer thread managing one or more worker threads which consume the data being created by the producer.

The following figure demonstrates a very simple pipeline using the SemaphoreSlim class:
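A minimal sketch of such a queue is given here, assuming one producer and one consumer. The SemaphoreSlim counts the items waiting in the queue, so the consumer blocks while the queue is empty. The Enqueue and Dequeue helpers are hypothetical names.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

var queue = new Queue<int>();
var itemsAvailable = new SemaphoreSlim(0); // counts items waiting in the queue
var received = new List<int>();

// Producer side: store the item, then signal that one more is available.
void Enqueue(int item)
{
    lock (queue) { queue.Enqueue(item); }
    itemsAvailable.Release();
}

// Consumer side: wait for a signal, then remove the item.
int Dequeue()
{
    itemsAvailable.Wait();
    lock (queue) { return queue.Dequeue(); }
}

Task producer = Task.Run(() => { for (int i = 1; i <= 5; i++) Enqueue(i); });
Task consumer = Task.Run(() => { for (int i = 0; i < 5; i++) received.Add(Dequeue()); });

Task.WaitAll(producer, consumer);
Console.WriteLine(string.Join(", ", received)); // 1, 2, 3, 4, 5
```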

While the simple BlockingQueue above provides the most basic support for exchanging data between threads in a pipeline, these architectures can quickly become very complex when considering factors such as speed differences between the producer and consumer and notifications between managing threads when production and consumption have started or completed.

The C# System.Collections.Concurrent.BlockingCollection provides robust support for all of these pipeline implementation details which is demonstrated in the three stage pipeline below:
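A three stage pipeline along these lines could be sketched as follows. The in-memory lines array stands in for file input, and the upper-casing in stage 2 is a placeholder for real processing; both are assumptions.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical input; a real pipeline might read these lines from a file.
string[] lines = { "alpha", "beta", "gamma" };

// Bounded buffers make a fast producer wait for a slow consumer.
var stage1Output = new BlockingCollection<string>(boundedCapacity: 2);
var stage2Output = new BlockingCollection<string>(boundedCapacity: 2);
var results = new List<string>();

// Stage 1: produce lines, then signal that production has finished.
Task stage1 = Task.Run(() =>
{
    foreach (string line in lines) stage1Output.Add(line);
    stage1Output.CompleteAdding();
});

// Stage 2: transform each line as it arrives.
Task stage2 = Task.Run(() =>
{
    foreach (string line in stage1Output.GetConsumingEnumerable())
        stage2Output.Add(line.ToUpperInvariant());
    stage2Output.CompleteAdding();
});

// Stage 3: consume the transformed lines.
Task stage3 = Task.Run(() =>
{
    foreach (string line in stage2Output.GetConsumingEnumerable())
        results.Add(line);
});

Task.WaitAll(stage1, stage2, stage3);
Console.WriteLine(string.Join(" ", results)); // ALPHA BETA GAMMA
```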

In the parallel pipeline example above, the BlockingCollection is used to manage the communication between the different producer / consumer threads. Using the BlockingCollection’s GetConsumingEnumerable() method, consumers can continue to wait for new work items until the producer has notified the BlockingCollection that production has completed. In addition, bounded capacities can be set to help manage memory and resolve speed differences between producers and consumers.

The pipeline example can be taken one step further by executing any of the producer / consumer processes in parallel. Furthermore, the BlockingCollection is thread-safe, so no additional locking effort is required when calling its Add() method. The Stage 2 processing in the example above can easily be executed in parallel. Notice in the example below that the .AsOrdered() method is also used to ensure file lines are still processed in order while executing in parallel.
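A parallel second stage might be sketched like this, assuming PLINQ is applied to the consuming enumerable. The sample lines and the upper-casing transform are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

string[] lines = { "alpha", "beta", "gamma", "delta" };
var stage1Output = new BlockingCollection<string>();
var results = new List<string>();

Task producer = Task.Run(() =>
{
    foreach (string line in lines) stage1Output.Add(line);
    stage1Output.CompleteAdding();
});

// Stage 2 in parallel: AsOrdered() keeps results in source order even though
// individual lines may be transformed on different threads.
Task consumer = Task.Run(() =>
{
    var transformed = stage1Output.GetConsumingEnumerable()
        .AsParallel()
        .AsOrdered()
        .Select(line => line.ToUpperInvariant());

    foreach (string line in transformed) results.Add(line);
});

Task.WaitAll(producer, consumer);
Console.WriteLine(string.Join(" ", results)); // ALPHA BETA GAMMA DELTA
```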

Locking

The C# multicore development environment offers many types of locks for thread synchronization. The following section includes several of the most common lock types available and their use cases:

The “lock” Keyword

The “lock” statement can be used to protect critical sections of parallel C# code and is the most common form of mutual exclusion used for thread-safety in C#:
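A minimal sketch, assuming a shared counter updated from a parallel loop; the gate object and total variable are hypothetical names.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Threading.Tasks;

object gate = new object(); // private object used only for locking
int total = 0;

// 1,000 parallel iterations each add 1; the lock serializes the update.
Parallel.For(0, 1000, i =>
{
    lock (gate)
    {
        total += 1; // critical section: one thread at a time
    }
});

Console.WriteLine(total); // 1000
```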

The Interlocked Class

Use the Interlocked class for high-performance, thread-safe increment, decrement, or exchange of variables.
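For illustration, the sketch below increments a shared counter atomically and then swaps in a new value with Interlocked.Exchange(); the variable names are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Threading;
using System.Threading.Tasks;

int counter = 0;

// Atomic increment; no lock statement is needed.
Parallel.For(0, 1000, i => Interlocked.Increment(ref counter));

// Atomically store a new value and receive the old one.
int previous = Interlocked.Exchange(ref counter, 0);

Console.WriteLine(previous); // 1000
Console.WriteLine(counter);  // 0
```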

The SpinLock Class

A “spin lock” can be much faster than a regular lock when the lock is held only very briefly. However, a waiting thread never releases the CPU; it spins in a loop until the lock becomes available, which consumes more CPU resources. Use this lock type with caution to achieve high performance in certain low-level locking situations where only one or two lines of code require a lock.
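The usual Enter / Exit pattern for the SpinLock structure can be sketched as follows; the shared total is a hypothetical example of a very small protected region.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Threading;
using System.Threading.Tasks;

// SpinLock is a struct: keep it in a single variable and never copy it.
var spin = new SpinLock(enableThreadOwnerTracking: false);
int total = 0;

Parallel.For(0, 1000, i =>
{
    bool lockTaken = false;
    try
    {
        spin.Enter(ref lockTaken); // busy-waits instead of blocking
        total += 1;                // keep the protected region tiny
    }
    finally
    {
        if (lockTaken) spin.Exit();
    }
});

Console.WriteLine(total); // 1000
```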

The ReaderWriterLock Class

Use the ReaderWriterLock class (or its lighter-weight successor, ReaderWriterLockSlim) to lock a resource exclusively only while data is being written, and to permit multiple clients to read data simultaneously when it is not being updated [15].
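The sketch below uses ReaderWriterLockSlim, the newer type in System.Threading, rather than the ReaderWriterLock class cited above. The settings dictionary and the Read and Write helpers are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

var rwLock = new ReaderWriterLockSlim();
var settings = new Dictionary<string, int>();

// Writers take the lock exclusively.
void Write(string key, int value)
{
    rwLock.EnterWriteLock();
    try { settings[key] = value; }
    finally { rwLock.ExitWriteLock(); }
}

// Any number of readers may hold the lock at the same time.
int Read(string key)
{
    rwLock.EnterReadLock();
    try { return settings[key]; }
    finally { rwLock.ExitReadLock(); }
}

Write("timeout", 30);

int sum = 0;
Parallel.For(0, 100, i => Interlocked.Add(ref sum, Read("timeout")));

Console.WriteLine(sum); // 3000
```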

Divide and Conquer

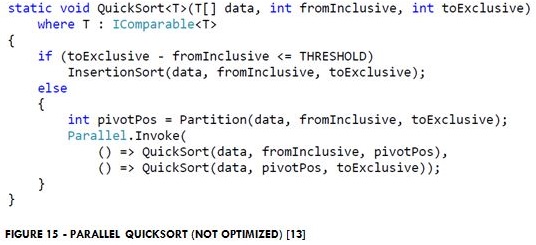

The most popular example of the divide and conquer algorithm is QuickSort, which executes with an average time complexity of O(n log n), using recursive calls to divide sorting work into buckets until the bucket size becomes 1, which is implicitly sorted. However, it is important to note that the InsertionSort algorithm outperforms QuickSort for small values of n. Therefore, stopping the recursion and switching to InsertionSort once n falls below a suitable threshold is optimal.

Using the Parallel class in C#, the QuickSort algorithm is “quickly” converted to execute in parallel:
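A naive parallel version might be sketched as below, assuming Parallel.Invoke() is used to sort the two partitions concurrently. The Lomuto partition scheme and the function names are choices made for this sketch, not the article's original listing.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Threading.Tasks;

// Sorts items[low..high] in place; the two halves are sorted in parallel.
void ParallelQuickSort(int[] items, int low, int high)
{
    if (low >= high) return; // zero or one element: already sorted

    int pivotIndex = Partition(items, low, high);

    Parallel.Invoke(
        () => ParallelQuickSort(items, low, pivotIndex - 1),
        () => ParallelQuickSort(items, pivotIndex + 1, high));
}

// Lomuto partition: moves the pivot (last element) to its final position.
int Partition(int[] items, int low, int high)
{
    int pivot = items[high];
    int store = low;
    for (int i = low; i < high; i++)
    {
        if (items[i] < pivot)
        {
            (items[i], items[store]) = (items[store], items[i]);
            store++;
        }
    }
    (items[store], items[high]) = (items[high], items[store]);
    return store;
}

int[] data = { 9, 3, 7, 1, 8, 2, 5, 4, 6, 0 };
ParallelQuickSort(data, 0, data.Length - 1);
Console.WriteLine(string.Join(" ", data)); // 0 1 2 3 4 5 6 7 8 9
```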

When using multiple threads to perform QuickSort in parallel, it is important to realize that the overhead associated with parallel processing can quickly saturate the processors, because QuickSort’s divide and conquer strategy can generate a very large number of recursive calls. There is also overhead associated with each Task created to execute the individual calls to Parallel.Invoke().

The following version of QuickSort has been optimized to account for both the number of Parallel.Invoke() tasks executing at any given time and the size of “n” for each bucket. When these thresholds are exceeded, the algorithm switches from parallel to serial execution. However, it is possible for a serial execution to recursively switch back to parallel at some point when the CONC_LIMIT is no longer exceeded.

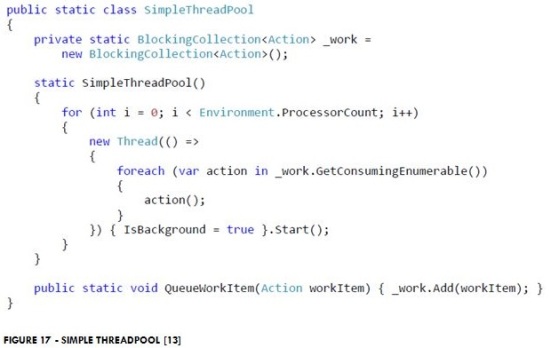

Thread Pools and Work Stealing

In .NET Framework 4.0 and later, Tasks are executed by a TaskScheduler, which by default runs them on the ThreadPool. The ThreadPool maintains not only a global queue but also a local queue per thread, where threads can place their work instead of in the global queue. When threads look for work, they look first locally and then globally. If there is still no work, threads are able to steal work from their peers [13]. While most parallel calls from LINQ and the Parallel class (Parallel.For / ForEach) rely on the default scheduler and thread pool, custom thread pools and task schedulers can be created when needed.

The following example demonstrates the creation of a simple thread pool using a BlockingCollection to manage work items in the queue:

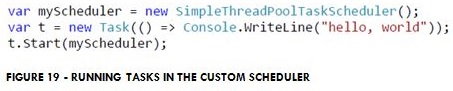

In the next example, a simple task scheduler is created to schedule work items within the simple thread pool we previously created:

Now, running tasks on an instance of the scheduler is very simple:

Thread Safe Data Structures and Collections

The following list details other important thread safe data structures and collections in C# which are worth mentioning:

- ConcurrentDictionary – Thread-safe Dictionary.

- ConcurrentBag – Unordered Thread-safe Collection. This is often the fastest collection when processing order does not matter, particularly when the same threads both add and remove items.

- ConcurrentQueue – FIFO Thread-safe Collection.

- ConcurrentStack – LIFO Thread-safe Collection.

- BlockingCollection – Wraps around a Bag, Queue, or Stack providing blocking functionality in a Producer / Consumer pipeline.
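A few of these collections in use are sketched below; the key name and sample values are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

var counts = new ConcurrentDictionary<string, int>();
var queue = new ConcurrentQueue<int>();
var stack = new ConcurrentStack<int>();

// Many threads update one key; AddOrUpdate retries until its update succeeds.
Parallel.For(0, 1000, i => counts.AddOrUpdate("hits", 1, (key, old) => old + 1));

// Same three items in; FIFO and LIFO hand them back in opposite orders.
foreach (int n in new[] { 1, 2, 3 })
{
    queue.Enqueue(n);
    stack.Push(n);
}

queue.TryDequeue(out int first);
stack.TryPop(out int last);

Console.WriteLine(counts["hits"]); // 1000
Console.WriteLine(first);          // 1
Console.WriteLine(last);           // 3
```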

MapReduce

“Map reduction processing provides an innovative approach to the rapid consumption of very large and complex data processing tasks. The C# language is also very well suited for map reduction processing. This type of processing is described by some in the C# community as a more complex form of producer / consumer pipelines.” [16] A parallel MapReduce pipeline can easily be created using many of the concepts and multicore development components previously discussed in this document.

The following diagram shows how each of these components will work together in a simple parallel MapReduce pipeline for counting the occurrence of unique words within a document:

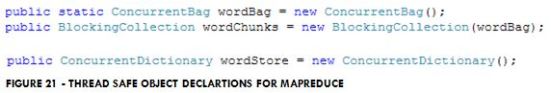

The following thread-safe objects will be used to exchange data between the “map” and “reduce” components of the pipeline and to house the final reduction results:

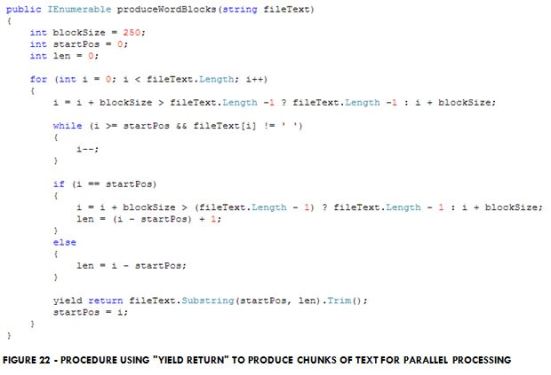

First, a “chunking” function will be used to read text from a fileText variable, breaking the text into smaller chunks of approximately 250 characters which are “yield returned” to downstream worker threads for further processing as they are produced:

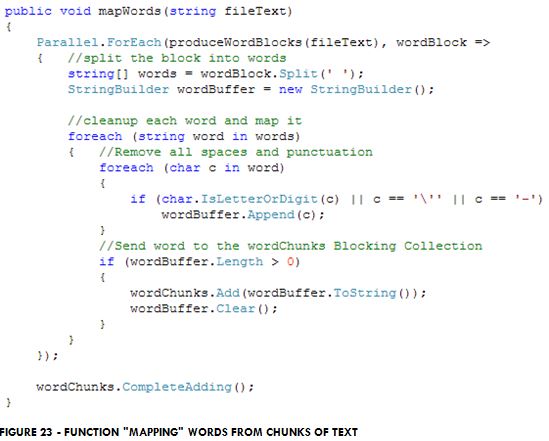

Next, the “mapping” function will “map” each block of text into words in parallel. As words are identified by individual threads, they are placed into the thread-safe BlockingCollection for further downstream reduction processing:

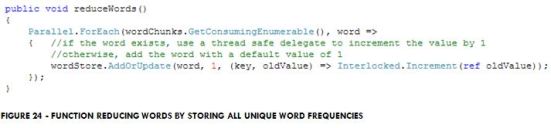

The “reduce” function in this process identifies unique words and keeps track of their frequencies. This is accomplished by using a thread-safe ConcurrentDictionary. The ConcurrentDictionary is a high performance collection of key-value pairs in which keys are managed using a hash table implementation, providing extremely fast lookups of and access to each key’s value. The ConcurrentDictionary also provides a simple AddOrUpdate() method which checks for a key, adding the key when it does not exist and updating its value otherwise. Since we are only incrementing a counter here, the Interlocked.Increment() function is also used to ensure very efficient thread-safe updates to each counter variable.

Both the map and reduction processes utilize Parallel.ForEach() to perform MapReduce processing in Parallel:

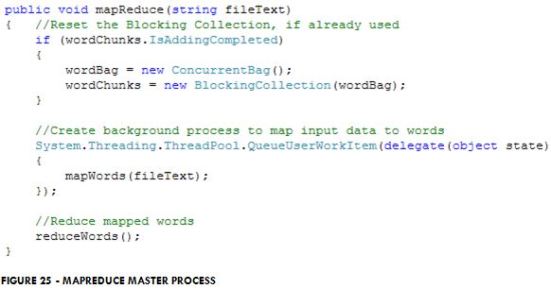

Finally, the entire MapReduce pipeline is tied together by one master process which asynchronously executes the mapping function in the background while simultaneously performing the reduction function in the foreground to achieve the highly parallel MapReduce pipeline:
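The whole pipeline might be tied together roughly as sketched here. This is a simplified version under stated assumptions: the chunks array stands in for the output of the chunking function, words are split on spaces only, and the counters are updated with AddOrUpdate() alone rather than with Interlocked.Increment(). The Map and Reduce names are hypothetical.

```csharp
// Sketch written as a top-level program (C# 9 or later); all names are illustrative.
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical input standing in for the chunks produced by the chunking step.
string[] chunks = { "the quick brown fox", "jumps over the lazy dog", "the end" };

var words = new BlockingCollection<string>();
var wordCounts = new ConcurrentDictionary<string, int>();

// Map: split every chunk into words in parallel and queue them for reduction.
void Map()
{
    Parallel.ForEach(chunks, chunk =>
    {
        foreach (string word in chunk.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries))
            words.Add(word);
    });
    words.CompleteAdding(); // tells the reducer that no more words are coming
}

// Reduce: count each word as it arrives from the map step.
void Reduce()
{
    Parallel.ForEach(words.GetConsumingEnumerable(), word =>
        wordCounts.AddOrUpdate(word, 1, (key, count) => count + 1));
}

Task mapping = Task.Run(() => Map()); // map runs in the background
Reduce();                             // reduce runs in the foreground, overlapping the map
mapping.Wait();

Console.WriteLine(wordCounts["the"]); // 3
Console.WriteLine(wordCounts.Count);  // 9
```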

CONCLUSION

Multicore development support within the C# language is extremely powerful and versatile, making it one of the best languages available for the rapid development and prototyping of highly parallel Symmetric Multiprocessing (SMP) applications. I would highly recommend the C# multicore development suite for tasks well suited to homogeneous operating systems and shared memory situations. Although C# is primarily designed for the Windows operating system, parallel applications can be deployed on many other non-Windows systems via the World Wide Web or using an open source compiler such as Mono. Considering its reasonable execution performance and the very rapid development timelines afforded by the robust Visual Studio IDE and multicore development classes, C# would remain my first choice for multicore development unless Asymmetric Multiprocessing or non-uniform memory access were key project requirements. In addition, I would recommend that developers consider alternative multicore development solutions such as OpenCL and MPI for very specialized low level projects requiring large amounts of data parallelism using dedicated processors.

REFERENCES

[1] Onur Gumus, C# versus C++ versus Java performance comparison, http://reverseblade.blogspot.com/2009/02/c-versus-c-versus-java-performance.html, accessed on 12/13/2013.

[2] C#, http://en.wikipedia.org/wiki/C_Sharp_(programming_language), accessed on 12/13/2013.

[3] Mono, CSharp Compiler, http://www.mono-project.com/CSharp_Compiler, accessed on 12/17/2013.

[4] Oshana, Rob, Multicore Software Development, SMU – Fall 2013.

[5] Microsoft, NUMA Support, http://msdn.microsoft.com/en-us/library/windows/desktop/aa363804(v=vs.85).aspx, accessed on 12/17/2013.

[6] Stack Overflow, Complete .NET OpenCL Implementations, http://stackoverflow.com/questions/5654048/complete-net-opencl-implementations, accessed on 12/17/2013.

[7] OpenMP, Why Should I Use OpenMP, http://openmp.org/openmp-faq.html#Problems, accessed on 12/17/2013.

[8] Dartmouth, Pros and Cons of OpenMP, http://www.dartmouth.edu/~rc/classes/intro_mpi/parallel_prog_compare.html, accessed on 12/17/2013.

[9] Intel, Compare Windows* threads, OpenMP*, Intel® Threading Building Blocks for parallel programming, http://software.intel.com/en-us/blogs/2008/12/16/compare-windows-threads-openmp-intel-threading-building-blocks-for-parallel-programming/, accessed on 12/17/2013.

[10] Xu, J.Y., OpenCL – The Open Standard for Parallel Programming of Heterogeneous Systems, http://it029000.massey.ac.nz/notes/59735/seminars/05119308.pdf, accessed on 12/17/2013.

[11] Microsoft, Parallel LINQ – Running Queries On Multi-Core Processors, http://msdn.microsoft.com/en-us/magazine/cc163329.aspx, accessed on 12/17/2013.

[12] Cilk++, Why Use Intel® Cilk™ Plus?, https://www.cilkplus.org/, accessed on 12/17/2013.

[13] Microsoft, PATTERNS OF PARALLEL PROGRAMMING – UNDERSTANDING AND APPLYING PARALLEL PATTERNS WITH THE .NET FRAMEWORK 4 AND VISUAL C#, http://www.microsoft.com/en-us/download/details.aspx?id=19222, accessed on 12/17/2013.

[14] Microsoft, Thread Synchronization, http://msdn.microsoft.com/en-us/library/ms173179.aspx, accessed on 12/17/2013.

[15] Microsoft, ReaderWriter Class, http://msdn.microsoft.com/en-us/library/system.threading.readerwriterlock.aspx, accessed on 12/17/2013.

[16] Drew, Jake, MapReduce / Map Reduction Strategies Using C#, http://www.codeproject.com/Articles/524233/MapReduceplus-2fplusMapplusReductionplusStrategies, accessed on 12/17/2013.

RESOURCES