On Article's Update

Initially, I wrote this article to present a small framework for machine and process control. Following a wise reader's suggestion, I decided to widen its scope: the framework also provides a simple means for parallel computing.

Introduction

During my software development career, I have several times dealt with projects related to process and machine control. Each time I took on such a project, I observed a strikingly similar picture. A relatively small company designs and manufactures a machine or process to be controlled. The company has very good experts in its field, including advanced electronics and software engineers. The mechanisms of the machine are adequately controlled at the low level. But when it comes to PC-based flow control software, the project runs into problems. This software is difficult to understand and maintain, not to mention adapting it to new versions or generations of the machine. So, what's wrong with the flow control software?

IMHO, the problem is rooted in the general approach to the design and development of such software. Every company I have seen so far develops its own flow control software from scratch. And indeed it looks very simple at first glance: just implement a few connections between well-defined operations! The main efforts of the PC software engineers are focused on developing the operations themselves: implementing the protocol for communication with controllers, programming the motion of parts, controlling cameras and analyzing the resulting images, etc. And when all these stages of development are successfully accomplished, the interaction between the operations suddenly does not work as expected. When trying to achieve proper machine behavior, developers are faced with inflexible, ad hoc code that is very difficult to debug and fix. To make things worse, all developers need to share the same expensive hardware to debug the software. And in some cases, debugging on hardware is simply not possible. Consider, for example, a servo motor with an operating cycle of working for n seconds and then being idle for m seconds. Increasing its continuous working time leads to overheating and subsequent destruction. So a developer cannot stop the software at a breakpoint for debugging while the motor keeps working under load for a long time.

Failure to develop adequate operations flow control software causes losses that are tremendous relative to these companies' budgets. So what should be done to prevent these losses and improve the quality of the flow control software?

Background

To attain this goal, I suggest following a few simple rules while developing flow control software.

- Split the entire process into separate operations (referred to below as commands).

- Use a general flow control engine. The engine processes commands and analyzes the results of the processing. This engine is based on a generic framework. The framework provides a uniform mechanism for both sequential and parallel command execution, machine state analysis and generation of new commands based on this analysis. In addition, the framework supports features such as command priority, suspension and resumption of command execution, error handling and logging.

- Clearly separate a command from its processor. Besides cleaner logic and easier understanding, this rule provides a great organizational advantage: development tasks are divided between software people who are comfortable with parallel processing and thread synchronization, and engineers who understand the nature of a command and are able to write its execution method.

- Each command provides virtual methods for its execution and error handling. These methods are implemented in derived classes for specific commands and are called by the command processor.

- Each command may be executed either in real mode or in mock mode. Command mocking allows a developer to test her/his software by simulating the execution of some or all commands. Mock methods may either target a machine emulator or simulate the machine themselves.

- The control engine is managed by a singleton processor manager. It is mainly responsible for creating and maintaining processors, and for processing the state changed events caused by command execution.

- Built-in error handling and logging mechanisms are vital for the engine.
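The command-related rules above can be sketched as follows. This is only a minimal illustration, not the framework's code: the type names `SketchCommand`, `SketchState` and `MoveAxisCommand`, the `Execute(bool mock)` entry point and the `OnError` hook are assumptions made for the example. Only the names `ProcessReal()` and `ProcessMock()` are taken from the article.

```csharp
using System;

// Hypothetical sketch: type and member names are illustrative,
// not the framework's actual API.
public enum SketchState { NotYetProcessed, ProcessedOK, Failed }

public abstract class SketchCommand
{
    public string Name { get; }
    public SketchState State { get; private set; } = SketchState.NotYetProcessed;

    protected SketchCommand(string name) { Name = name; }

    // Implemented by derived classes for a specific operation.
    protected abstract void ProcessReal();   // talks to the real machine
    protected abstract void ProcessMock();   // simulates the operation

    // Default error handler; derived classes may override it.
    protected virtual void OnError(Exception e) { }

    // Called by the command processor, not by the command's author.
    public void Execute(bool mock)
    {
        try
        {
            if (mock) ProcessMock(); else ProcessReal();
            State = SketchState.ProcessedOK;
        }
        catch (Exception e)
        {
            State = SketchState.Failed;
            OnError(e);
        }
    }
}

// Example command for a made-up "move axis" operation.
public sealed class MoveAxisCommand : SketchCommand
{
    public string LastAction { get; private set; } = "";

    public MoveAxisCommand() : base("MoveAxis") { }

    protected override void ProcessReal()
    {
        // A real implementation would call the controller here.
        throw new InvalidOperationException("No hardware attached");
    }

    protected override void ProcessMock() { LastAction = "mock move"; }

    protected override void OnError(Exception e) { LastAction = "error: " + e.Message; }
}
```

With this split, a field expert writes only the two `Process...` methods, while the choice between real and mock mode and the error handling stay with the code that calls `Execute()`.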

I believe that by following the above rules, a small machine manufacturing company will be able to achieve good results in developing flow control software for machine operations. In this article, I present a simple and generic framework based on the above rules, and provide two examples of its usage.

When designing a framework, a developer usually faces a dilemma: either provide the user with as many tools and methods as possible, or offer only the most crucial and hard-to-develop means and leave the user maximum flexibility. In real life, this is always a trade-off. For this small flow control framework, I chose a design closer to the latter approach: a "minimalistic" framework that has only those features which are vital for operation flow management. I think that in most cases simplicity and maintainability of code are more important than some performance advantages. Microsoft Robotics Developer Studio [1] may serve as an example. Despite its very useful and advanced features (such as decentralized lightweight services, a visual programming language and an amazing visual simulation environment), this monstrous framework has gained relatively little traction in the industry because it is complex to install, use and learn.

Design

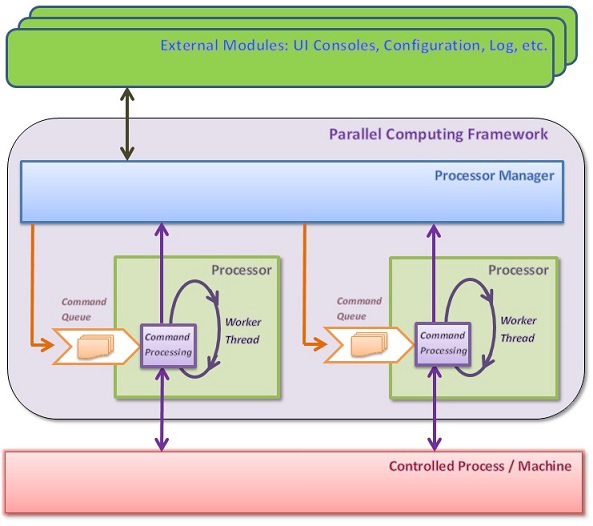

The following block-diagram illustrates design.

The framework mainly consists of five major types, namely:

- ProcessorManager

- Processor

- Command

- ParallelCommand and

- Log

ProcessorManager is a singleton class responsible for creating and managing Processors. It has an internal class, ProcessorManager.PriorityQueue, which handles processors according to their priority. For each priority, ProcessorManager holds an instance of PriorityQueue that provides a queue of available processors and a dictionary of the currently running processors of this priority. The PriorityQueue type also supports a mechanism to suspend and resume the execution of all processors with priorities below the running one. This feature is active by default, but it can be switched off by creating ProcessorManager with the Initialize() method with its parameter set to false. The actual suspending and resuming of processors according to their priorities is performed by ProcessorManager. A crucial function of the ProcessorManager type is to take decisions regarding the command execution flow based on the current state of the controlled process. The method OnStateChanged() is used for this purpose. It implements synchronized calls of decision-making delegates provided by the user. The delegates are supplied through the appropriate indexer of the ProcessorManager type.
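The idea of supplying decision delegates through an indexer and calling them in a synchronized way might look roughly like the sketch below. All names here (`SketchManager`, `SketchProcessorState`) and the delegate shape `Action<string>` are assumptions for illustration. The framework's own delegates receive a processor and an event argument, as the SampleA listing later in the article shows.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of a manager that stores user-supplied decision
// delegates per state and invokes them under a lock. Names are illustrative.
public enum SketchProcessorState { CommandProcessed, Error }

public sealed class SketchManager
{
    private readonly object _sync = new object();
    private readonly Dictionary<SketchProcessorState, Action<string>> _handlers =
        new Dictionary<SketchProcessorState, Action<string>>();

    // Indexer used to supply (or read back) the delegate for a given state.
    public Action<string> this[SketchProcessorState state]
    {
        get
        {
            lock (_sync)
            {
                Action<string> handler;
                return _handlers.TryGetValue(state, out handler) ? handler : null;
            }
        }
        set
        {
            lock (_sync) { _handlers[state] = value; }
        }
    }

    // Called by every processor thread after a command ends. The lock
    // serializes the calls, so the delegate should return quickly.
    public void OnStateChanged(SketchProcessorState state, string commandName)
    {
        lock (_sync)
        {
            Action<string> handler;
            if (_handlers.TryGetValue(state, out handler) && handler != null)
                handler(commandName);
        }
    }
}
```

Because every processor passes through the same lock, a slow delegate in this sketch would delay all processors, which is why the delegates are expected to be short.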

The user may decide to create several instances of Processor with the same priority at once (normally at the beginning of the application). This may be useful because creating a new processor implies creating its thread, which is a relatively expensive operation. The method IncreaseProcessorPool() of the ProcessorManager type creates new processors. The PriorityQueue type maintains a pool of processors. A processor taken from the pool can be returned to it, so that later requests reuse the existing processor and its thread instead of creating new ones.
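The pooling behavior described above can be illustrated with a small per-priority pool. This is a sketch under assumptions: `SketchPool` and `SketchWorker` are hypothetical names, and a plain object with an Id stands in for a processor that would own a thread in the real framework.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of a per-priority pool. A real processor would own a
// thread; here a plain object with an Id stands in for it.
public sealed class SketchWorker
{
    public int Id { get; }
    public SketchWorker(int id) { Id = id; }
}

public sealed class SketchPool
{
    private readonly object _sync = new object();
    private readonly Dictionary<int, Queue<SketchWorker>> _idle =
        new Dictionary<int, Queue<SketchWorker>>();
    private int _nextId = 1;

    // Pre-create workers for a priority (compare IncreaseProcessorPool()).
    public void Increase(int priority, int count)
    {
        lock (_sync)
        {
            var queue = GetQueue(priority);
            for (int i = 0; i < count; i++)
                queue.Enqueue(new SketchWorker(_nextId++));
        }
    }

    // Reuse an idle worker if one exists; otherwise create a new one.
    public SketchWorker Take(int priority)
    {
        lock (_sync)
        {
            var queue = GetQueue(priority);
            return queue.Count > 0 ? queue.Dequeue() : new SketchWorker(_nextId++);
        }
    }

    // Put a worker back so that a later Take() can reuse it.
    public void Return(int priority, SketchWorker worker)
    {
        lock (_sync) { GetQueue(priority).Enqueue(worker); }
    }

    public int IdleCount(int priority)
    {
        lock (_sync) { return GetQueue(priority).Count; }
    }

    // Must be called with _sync held.
    private Queue<SketchWorker> GetQueue(int priority)
    {
        Queue<SketchWorker> queue;
        if (!_idle.TryGetValue(priority, out queue))
        {
            queue = new Queue<SketchWorker>();
            _idle[priority] = queue;
        }
        return queue;
    }
}
```

A worker that is returned and taken again keeps its Id, which mirrors the processor reuse that the article later points out in the flow log.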

The main job of the Processor type is to handle its own Command queue and execute dequeued commands. Command execution takes place in a separate thread owned by each instance of the Processor type. The instance is characterized by its unique Id and its priority, which is essentially an integer value (for convenience, an appropriate enumeration may be used for some well-known values). A Processor instance encapsulates the entire mechanism of command execution in its thread, including queue management, synchronization, error handling, logging and calls of the appropriate callbacks. After executing each command, the processor calls, in the context of its thread, the synchronized method ProcessorManager.OnStateChanged() described above. Since this method is called synchronously by all processors, the delegates it executes should be fast to ensure good performance. After ProcessorManager.OnStateChanged(), the processor may call its own post-command-execution OnStateChangedEvent delegate if the user provided one. Thus, the collaboration of ProcessorManager with Processors provides a command execution flow with user-supplied callbacks, while effectively hiding from the user the somewhat tricky details of queuing and thread synchronization. The delegate should be assigned to the processor before it is ready for command execution, i.e., before the method StartProcessing() is called. This is achieved by getting an instance of the Processor type with the second parameter of the appropriate indexer set to false, then assigning the OnStateChangedEvent delegate, followed by a call of the StartProcessing() method. This is shown in the code samples. Enqueuing commands to a processor implies their asynchronous execution: the enqueuing method returns immediately, and the actual command processing takes place later in the processor's thread.
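A processor of this kind, with one thread, one queue and a callback after each command, can be sketched with standard .NET types. The sketch below is an illustration and not the framework's Processor: `SketchProcessor` is a hypothetical name, commands are reduced to plain `Action` delegates, and the callback receives only the exception (or null) of the command that has just ended.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical sketch of a processor: one thread, one command queue, and a
// callback raised after every command. Names are illustrative only.
public sealed class SketchProcessor : IDisposable
{
    private readonly BlockingCollection<Action> _queue = new BlockingCollection<Action>();
    private readonly Thread _thread;
    private bool _started;

    // Assign before StartProcessing() so that no command completion is missed.
    public Action<Exception> OnStateChanged { get; set; }

    public SketchProcessor()
    {
        _thread = new Thread(Run) { IsBackground = true };
    }

    public void StartProcessing()
    {
        _started = true;
        _thread.Start();
    }

    // Returns immediately; the command runs later on the processor's thread.
    public void EnqueueCommand(Action command) { _queue.Add(command); }

    private void Run()
    {
        // Blocks while the queue is empty and ends after CompleteAdding().
        foreach (var command in _queue.GetConsumingEnumerable())
        {
            Exception error = null;
            try { command(); }
            catch (Exception e) { error = e; }   // keep the thread alive on failure
            var callback = OnStateChanged;
            if (callback != null) callback(error);
        }
    }

    // Lets already queued commands finish, then stops the thread.
    public void Dispose()
    {
        _queue.CompleteAdding();
        if (_started) _thread.Join();
        _queue.Dispose();
    }
}
```

Commands enqueued before `StartProcessing()` simply wait in the queue, which is why assigning the callback first and starting afterwards is safe in this sketch.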

The types ProcessorManager and Processor described above are not meant to be changed by the user; only decision-making callbacks should be provided. Types derived from the Command type provide the process- or machine-specific functionality. Unlike ProcessorManager and Processor, Command-based classes are spared from synchronization and other tricky stuff, so they are rather straightforward. The commands deal solely with the controlled object and may therefore be developed by a domain expert, even one with limited software skills. An important feature of the commands is their ability to be executed in either real or mock mode. The appropriate virtual methods ProcessReal() and ProcessMock() should be implemented by derived classes.

One of the vital features of a control framework is its support for parallel execution of commands. This is achieved with the special command type ParallelCommand : Command. In its ProcessParallel() method, which is called by ProcessReal() and ProcessMock(), ParallelCommand enqueues each of the commands to be executed in parallel to a separate, newly obtained processor. These processors are automatically returned to the processor pool when their commands have been executed.
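The fan-out-and-wait behavior of such a parallel command can be approximated with standard tasks. Note the substitution: in the sketch below `Task.Run` stands in for "a separate newly obtained processor", whereas the framework uses its own processors and pool. `SketchParallel` and `RunAll` are hypothetical names.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical sketch: Task.Run replaces the framework's pooled processors.
public static class SketchParallel
{
    // Starts every command concurrently and returns only when all have ended,
    // so the caller's next sequential command cannot start earlier.
    // If any command throws, Task.WaitAll rethrows as an AggregateException.
    public static void RunAll(params Action[] commands)
    {
        Task[] tasks = commands.Select(c => Task.Run(c)).ToArray();
        Task.WaitAll(tasks);
    }
}
```

Waiting for all children before returning is what keeps the enclosing processor's queue in order: its next command starts only after the whole parallel group has ended.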

Logging is implemented using the same processor-command approach. The generic class Log implements the interface ILog and is parameterized with a user-provided class derived from the abstract class CommandLogBase : Command. In its constructor, the Log class creates a dedicated log processor, and each of the overloaded ILog.Write() methods enqueues a CommandLogBase-based command to it. The priority of the log processor is fixed, and the priority of user-created processors should be chosen to ensure the desired balance between the execution of control commands and logging. After playing with the framework for some time, I recommend using, in addition to the ordinary log file, one more log file of a special format. Each command outputs a record to this additional log file at its begin and at its end. The records of each processor are placed in a separate column. Thus, this log file illustrates command execution per processor in chronological order. Although such logging is currently not part of the framework, it is very useful for understanding the command flow and for debugging. The code of SampleA produces such a file.

Code Samples

The framework classes described above are located in the ParallelCompLib project. Two samples placed under the folder Samples use the framework. Both applications implement field-specific and log-related command classes derived from Command, which are executed by processors with different priorities. The SampleA application demonstrates the execution of several sequential and parallel commands. The commands have different processing durations (defined with the Thread.Sleep() method). The sample also shows the return of processors to the pool. Logging in SampleA produces the file _test.log, containing routine log output, and the file _flow.log, which shows the timing of command execution per processor and illustrates the return of processors to the pool and their subsequent reuse. Every line in _flow.log presents either the begin or the end of the processing of a command. The processor ID is written in parentheses before the information about each command. The lines in the file are in chronological order. So if initially only one processor of a certain priority was placed into the pool, the reuse of processors can be clearly seen. It is interesting to observe the differences in command execution flow with the conditional compilation symbols at the beginning of the file Program.cs commented and uncommented. To run SampleA from Visual Studio, build and start the SampleA project.

Let's have a closer look at SampleA. Its code (with some minor omissions) is given below:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Drawing;
using System.Threading;
using System.Threading.Tasks;
using IL.ParallelCompLib;

namespace SampleA
{
    class Program
    {
        static void Main(string[] args)
        {
#if _NO_LOWER_PRIORITY_SUSPEND
            // Switch off suspension of lower-priority processors.
            ProcessorManager.Initialize(false);
#endif
            ProcessorManager.Instance.Logger = new Log<CommandLog>(LogLevel.Debug);

            var priority = PriorityLevel.Low;

#if _BIG_PROCESSOR_POOL
            // Create the processor pool for this priority in advance.
            ProcessorManager.Instance.IncreaseProcessorPool(priority, 15);
#endif
            var evSynch = new AutoResetEvent(false);

            // Release the main thread when the log reports the end of command Z.
            CommandLog.dlgtEndLogging = (s) =>
            {
                if (!string.IsNullOrEmpty(s) && s.Contains("Z") && s.Contains("End"))
                    evSynch.Set();
            };

            var processor1 = ProcessorManager.Instance[priority];

            // Manager-level decision delegate, called after each processed command.
            ProcessorManager.Instance[ProcessorState.CommandProcessed] = (pr, e) =>
            {
                if (e.Cmd != null && e.Cmd.ProcessState != Command.State.NotYetProcessed &&
                    !string.IsNullOrEmpty(e.Cmd.Name))
                {
                    if (e.Cmd.Name == "M")
                    {
                        processor1.EnqueueCommand(new CommandS("Z"));
                        return;
                    }

                    if (e.Cmd.Name.Contains("->1"))
                        ProcessorManager.Instance.ReturnToPoolAsync(e.Cmd.Priority,
                                                                    e.Cmd.ProcessorId);
                }
            };

            // Get processor2 without starting it, so that its delegate
            // can be assigned before StartProcessing() is called.
            var processor2 = ProcessorManager.Instance[priority, false];
            processor2.OnStateChangedEvent += (pr, e) =>
            {
                if (e.Cmd != null && e.Cmd.ProcessState != Command.State.NotYetProcessed &&
                    !string.IsNullOrEmpty(e.Cmd.Name))
                {
                    if (e.Cmd.Name == "_ParallelCommand" &&
                        e.Cmd.ProcessState == Command.State.ProcessedOK)
                        processor1.EnqueueCommand(new CommandS("H"));

                    if (e.Cmd.Name == "K")
                        evSynch.Set();
                }
            };
            processor2.StartProcessing();

            int si = 0;   // index of the next sequential command name
            int pi = 0;   // index of the next parallel command name

            processor2.EnqueueCommand(new CommandS(GetName("S", si++)));
            processor2.EnqueueCommands(new Command[]
                { new CommandS(GetName("S", si++)), new CommandS(GetName("S", si++)) });
            processor2.EnqueueCommandsForParallelExecution(new CommandP[]
                { new CommandP(GetName("P", pi++)), new CommandP(GetName("P", pi++)) });
            processor2.EnqueueCommands(new CommandS[2]
                { new CommandS(GetName("S", si++)), new CommandS(GetName("S", si++)) });
            processor2.EnqueueCommand(new CommandS("K"));

            // Wait until command K has ended.
            evSynch.WaitOne();

            ProcessorManager.Instance.ReturnToPool(ref processor2);
            int processorsInPool = ProcessorManager.Instance.ProcessorsCount(priority);

            // Take a processor from the pool again and enqueue the second batch.
            processor2 = ProcessorManager.Instance[priority];
            processor2.EnqueueCommands(new CommandS[2]
                { new CommandS(GetName("S", si++)), new CommandS(GetName("S", si++)) });
            processor2.EnqueueCommandsForParallelExecution(new CommandP[]
                { new CommandP(GetName("P", pi++)), new CommandP(GetName("P", pi++)) });
            processor2.EnqueueCommand(new CommandS("M"));

            // Wait until the end of command Z has been logged.
            evSynch.WaitOne();

            ProcessorManager.Instance.Dispose();
        }

        static string GetName(string name, int n)
        {
            return string.Format("{0}{1}", name, n);
        }
    }
}
```

Now let us discuss SampleA's _flow.log file. The format of the file's most informative part is quite simple. Each column denotes a separate processor with its thread. The processor's ID is given in the first field, in parentheses. Then the appropriate word indicates the begin or end of a command, followed (after a dash) by the command ID and the command name. For simplicity, the log processor (its ID equals 0 in our case), log commands and ParallelCommand are omitted from the log. Every processor and every command gets its unique ID by incrementing the ID of the previous one. The command IDs given in the file are not consecutive because the "missing" numbers belong to log commands.
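To make the column-per-processor idea concrete, here is a small formatting sketch. It is not the code that SampleA uses: the class `SketchFlowLog`, the column width of 24 characters, the words "Begin" and "End" and the exact spacing of a record are all assumptions based on the verbal description above.

```csharp
using System;

// Hypothetical sketch of a column-per-processor flow log line. The exact
// record layout and the column width are assumptions.
public static class SketchFlowLog
{
    public const int ColumnWidth = 24;

    // column: zero-based column assigned to the processor.
    public static string Format(int column, int processorId, bool begin,
                                int commandId, string commandName)
    {
        string record = string.Format("({0}) {1} - {2} {3}",
            processorId, begin ? "Begin" : "End", commandId, commandName);

        // Indent the record so that it lands in the processor's column.
        return new string(' ', column * ColumnWidth) + record;
    }
}
```

Writing one such line per event in chronological order yields a file in which each processor's activity can be read down its own column.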

A fragment of SampleA's _flow.log file, produced when _BIG_PROCESSOR_POOL was commented out, is depicted below. In this case, the processor pool for the given priority was not created in advance.

Here we can see that processors returned to the processor pool were reused. processor1 was the first processor created, but its commands (namely H and Z) were assigned to it only after some time, in the state change event handlers. So this processor has ID (1) but appears only in the last column. processor2, with ID (2), started with sequential processing of three commands of CommandS type (namely S0, S1 and S2). Then the same processor was assigned two CommandP commands (P0 and P1) for parallel execution. An instance of ParallelCommand executed these commands in the newly created processors (3) and (4) respectively. Each CommandP command created another processor, (5) and (6) respectively, to execute two successive commands of type CommandS. Interestingly, processor (2) proceeded with its next sequential commands S3, S4 and K only after all parallel commands had ended. This is the desired behavior, and it results from the ParallelCommand design. However, the sequential commands initiated by the parallel commands in processors (5) and (6) kept running even after the parallel commands had ended. The end of the ParallelCommand command caused command H to be enqueued to processor (1). The enqueue operation took place before processor (2) started command S3, but the actual execution of command H by processor (1) started later. So the begin of S3 preceded the begin of H. All processors (3)-(6) were returned to the processor pool. Processors (3) and (4) were returned by ParallelCommand, whereas processors (5) and (6) were returned by the ProcessorManager state change event handler calling the method ReturnToPoolAsync(). Because these four processors were returned to the pool asynchronously, their new order in the pool is unpredictable. In the sample, the call evSynch.WaitOne() on the AutoResetEvent made the main thread wait until the end of command K. Then processor (2) was returned to the processor pool by calling ProcessorManager's method ReturnToPool(). The subsequent commands were executed by the already familiar processors retaken from the pool.

When _BIG_PROCESSOR_POOL was uncommented, a processor pool of 15 processors for the given priority was created in advance. Used processors are still returned to the pool, but the number of processors created in advance is large enough that their reuse is not observed. It is interesting that in this case the command IDs shown start from a much higher number than in the previous case. This happens because the creation of processors caused many log commands, which are not shown in the _flow.log file.

The SampleB application controls the Simulator WinForms application. The Simulator uses the DynamicHotSpotControl described in [2] (with some modernizations). The Simulator, acting as a host for a WCF service, receives commands from SampleB. To observe changes in the Simulator's state, the "smart polling" technique described in [3] is used. This approach was chosen to illustrate the usage of a processor's OnStateChangedEvent and of a long-lasting command with low (below log) priority. In the beginning, commands cause the Simulator to create its visual objects with the appropriate states and dynamics. Then the Simulator informs the SampleB application about each left mouse click over a visual object. ProcessorManager, in its appropriate callback, processes the Simulator state change and responds to the Simulator with CommandChangeState and CommandChangeDynamics. To run SampleB and the Simulator from Visual Studio, build them and run them simultaneously as multiple startup projects.

Demo

Unzip the file containing the demo. To run the SampleA demo, start SampleA.exe. For SampleB, first start Simulator.exe (since it hosts the WCF service) and then SampleB.exe.

Discussion

The flexibility of the presented framework should be used with caution. Using too many processors, with commands enqueued at many points of the application, may degrade performance and even lead to an unexpected flow of operations. The "keep it simple" principle should not be ignored just because we have tools that make complex things possible.

Conclusions

This article presents a simple framework for parallel computing that is applicable to operations flow management in machine and process control, gaming, simulators, etc. The framework provides a mechanism for sequential and parallel command execution, analysis of the controlled process state, error handling and logging. Its usage allows developers to clearly separate commands from the execution flow, and to mock (simulate) some of the commands while actually executing others. The suggested general framework is easy to use, and it may serve as a foundation for parallel computing applications in various fields.

References