Introduction

This article shows the implementation of 4 variants of the "Iterative Closest Point" algorithm.

What this article handles:

-a brief comparison of the ICP variants regarding the mathematical background

-discussion and samples for the scaling transformation, especially the inhomogenous scaling

-samples and comparison of translation, rotation and scaling transformations for all discussed ICP variants

-point cloud samples where the ICP algorithm fails

My goal is also to show which convergence problems the ICP algorithm may still have on basic level - mainly for finding the right correspondance of points between source and target clouds. The work is in progress: Perhaps some people will invest a bit of time in comments. From my point of view, these convergence and principal problems should be solved before approaching the nonlinear problems in ICP like outliers etc. which are highly investigated in the literature.

There is a lot of literature on this field, also some source code, but I did not find a systematic comparison of the different methods with source included, for later usage in own implementations.

Background

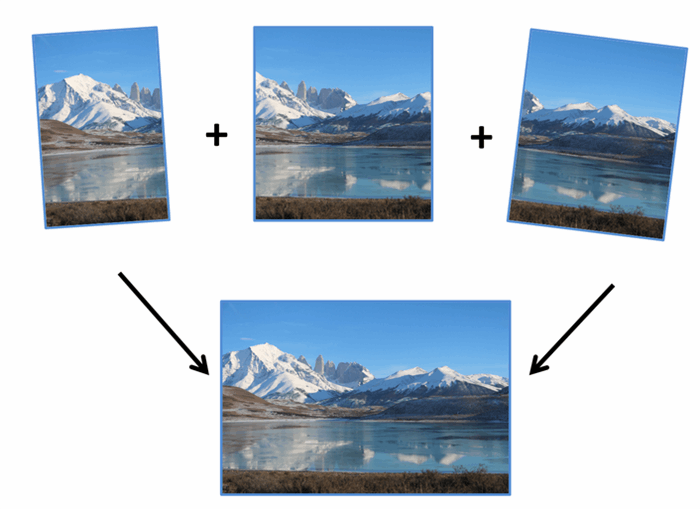

The ICP algorithm is used to align (stitch, register) point clouds taken from different angles to a single 3D point cloud.

What does it mean to "align" (register, stitch) point clouds?

It means to match one 2D or 3D point cloud (source cloud) into another (target cloud).

In 2D this problem is well known and solved, lots of programs can "stitch" photos taken from different angles together to form a panorama photo.

Brief description of the Iterative Closest Point method

Problem Statement: Match one point cloud (source) into another one (target):

-

For each point in the source point cloud, find the closest point in the target point cloud. This is efficiently done by a “k-d tree” search algorithm

-

Calculate a transformation (combination of rotation, translation and scaling)

This is done by minimizing a mean squared error cost function, as described in references [1] - [5]. It involves solving e.g. an eigenvalue system or using quaternion maths, which is on the level of higher college math.

-

Transform the source points using the obtained transformation.

- Check if the clouds match on a desired threshold. If not, go to step 1 - Iterate again

Mathematical background of the different ICP methods discussed

There are lots of different ICP methods implemented in hundreds of publications. The difference between them can be categorized regarding the 4 steps of the ICP methods (see the 4 points in "Brief Description of the ICP method").

1. Different algorithms for finding the point correspondance between source and target.

If one assumes that there are N points in the source and N points in the target cloud, a "brute force" method requires N*N operations, which is very slow. A k-d tree does this work in (N ln N) operations. There are also Octree or other variants, with basically the same speed.

2. Different way of calculating the transformation between two point clouds

For rigid (translation, rotation) and affine (scaling) transformations, most methods use the mathematically driven solution, since the affine transformations has a mathematically closed solution. Some, but very few use other, e.g. probabilistic methods (like Monte Carlo).

For the rotation and translation, the original ICP article of Horn [1] proposes a quaternion method. This has been proven (in the article of reference [6]) to be basically equivalent to others methods like:

-a SVD (Singular Value Decomposition) method used first proposed in [7], used also in [3] and [4] and [5]

-a method using orthonormal matrices methods see [2]

-dual quaternion method [8]

There is however a difference regarding scale transformations. The original ICP method from 1987 did not include scale transformations, but Horn added this in his publication from 1988 [2].

In real world the scale transformations is important, just imagine that you have a point cloud from one angle, then move towards the target to take the second point cloud. This is basically a scale transformation.

A somewhat comprehensible discussion of scale transformations is done in [3] and [4].

In the code I implemented, there are four different methods to include scaling

-as in the "Horn" method of [2] on orthonormal matrix level

-the "Umeyama" [3] method deduces the scale factor on eigenvalue level (his mathematical proof is nice :) )

-the "Zinsser" [4] method deduces the scale factor as a partial derivative

-the "Du" [5] method is similar to [4], but deduces three scale factors (for the 3 geometry axes) as partial derivates

3. Other differences of ICP regard how you treat small measurement errors, outliers and missing points.

Measurement errors occur quite often - due to the missing scan precision: The same point in space is represented by slightly different coordinates in the source and target cloud. To some extent this is covered in the base algorithm.

Outliers are erroneous measured points, which should be ignored.

Missing points are the points of one point cloud with no correspondence in the other cloud. This is wanted effect: By scanning the target from another angle, you will of course have points not present in the first scan.

As these three effects are highly non-linear, a lot of different implementations are published, with weight factors, etc. The methods cannot be really compared, since implementation details are not complete in lots of cases, and of course, they publish mostly working samples of point clouds. It would be nice to have also not - working examples published, this would be good for a method comparison.

There are also some methods which find some subset of identical points in source and target (e.g. by comparing color, or light intensity).

Comparison of the ICP methods of [1] - [4]

-The Umeyama [3] and Zinsser [4] behave the same, although the method is different. They give the same results at translations, rotations and uniform scaling. (Uniform scaling means that the scale factor is the same in all 3 spatial directions)

-The Horn [2] method behaves a bit different than [3] and [4] at scaling: The converge behaviour is different (sometimes faster, sometimes much slower), and the performance of a single iteration is slower, at least in the implementation I used, which is ported from the VTK library. I found some cases where [3] and [4] go into false local minima, but the Horn [2] method does OK, and some others where it is the other way around, see also samples below.

-The Du [5] method is the only one which works for inhomogenous scaling transformations. This is clear, as the others have by definition the same scale factor for all 3 directions. This should basically be better for real objects, where you have to take into account non-linear transformations of the scanned object.

The convergence of the Du method is slower than the other methods.

Using the code

To run the samples, you would have to first install NUnit and then configure the path of the NUnit exe in the Visual Studio project, in order to run the tests.

Hint: I did not manage to use NUnit 2.6 which uses .NET 2.0, but compiled NUnit using .NET 4.5. Possible that NUnit 3.0 (alpha version) works as well – I did not try that.

An overview of the testcases:

The testcases discussed below are in the folder "UI" and "ExpectedError"

The other ones are work in progress, or the "Automated" ones: A small set of testcases which run quite fast and have to work after each program change.

1. Cube code gallery (testcases in class: ICP_Test5_Cube)

From left to right: Translation, rotation, uniform scaling, inhomogenous scaling, translation + rotation + scaling

Color coding:

-the source point cloud is white

-the target is green

-red is used for the transformed point cloud.

If there is nothing red on the screen, then the target (green) overlaps the transformed point cloud, so the ICP result is "success", matching is 100%.

1.a Cube translation sample

In testcase:

ICPTest5_Cube.Cube_Translate

The Code of this method, for a short explanation:

[TestFixture]

[Category("UnitTest")]

public class ICPTest5_Cube : ICPTestBase

{

[Test]

public void Cube_Translate()

{

Reset();

IterativeClosestPointTransform.ICPVersion = ICP_VersionUsed.Horn;

IterativeClosestPointTransform.FixedTestPoints = true;

IterativeClosestPointTransform.ResetVertexToOrigin = false;

ICPTestData.Test5_CubeTranslation(ref verticesTarget, ref verticesSource,

ref verticesResult);

this.ShowResultsInWindow_CubeLines(false);

Assert.IsTrue(ICPTestData.CheckResult(verticesTarget, verticesResult, 1e-10));

}

Code explained:

IterativeClosestPointTransform.ICPVersion = ICP_VersionUsed.Horn;

The ICP method used is the "Horn" method (see [2])

-IterativeClosestPointTransform.FixedTestPoints = true;

For the cube it is assumed that the relation of source and target points is known, this is configured in the parameter FixedTestPoints

-IterativeClosestPointTransform.ResetVertexToOrigin = false;

There is the possibility to transform all point clouds to the origin of the coordinate system. This is basically a translation (see e.g. ref. [2]), which in this case would mean that no further ICP has to be done. Therefore this parameter is set to false, so that the effect of translation of ICP can be seen.

If you run the unit test, you will see something like this:

1.b Cube rotation

ICPTest5_Cube.Cube_Rotate

1.c Cube - homogenous scaling (equal scale factor on all 3 axes)

ICPTest5_Cube.Cube_Scale_Uniform

1.d Cube - inhomogenous scale (different scale factor on all 3 axes)

ICPTest5_Cube.Cube_ScaleInhomogenous_Du

1.e Translate, rotate and uniform scaling

ICPTest5_Cube.Cube_RotateTranslate_ScaleUniform_Umeyama

2. Bunny, transformed

ICPTest6_Bunny.Horn

3. A face - scanned by the Microsoft Kinect, transformed

Testcase:

ICPTest6_Bunny.Horn

The face is rotated by 30 degrees, translated by a small vector and scaled by a factor of 0.8

This is done in the method: ICPTestData.Test7_Face_KnownTransformation

VertexUtils.ScaleByFactor(myVerticesSource, 0.8);

Matrix3d R = new Matrix3d();

R = R.Rotation30Degrees();

VertexUtils.RotateVertices(myVerticesSource, R);

VertexUtils.TranslateVertices(myVerticesSource, -0.15, 0.05, 0.02);

The convergence behaviour is different in the 4 methods investigated. It converges best with the Umeyama [2] and Zinsser [3] method - after 35 iterations. The overlap between target and result is 100%. Using the Horn or Du method, after 50 iterations, the average mean distance between result and target points is still larger than 1 mm.

ICP samples, not working

If ICP is not converging, the transformed (red) cloud will not overlay the green one (target). Therefore you explicitly see 3 objects.

1. Inhomogenous scale transformation with Horn method

Expected error: This will not converge using methods 1-3, which is obvious. It will converge only with the Du [5] method, see the explanation in the Background part.

ICPTest5_Cube_ExpectedError.Cube_ScaleInhomogenous_Horn()

2. The Bunny object, rotated by 65 degrees

Will also not converge to the target. I assume this is an issue of finding the right correspondance of points in the k-d tree algorithm.

ICPTest6_Bunny_ExpectedError.Horn()

3. Face - two scans from different angles

Not converging

ICPTest9_Face_ExpectedError.Umeyama

Conclusion

Some principal differences between 4 main ICP methods are shown, especially the behaviour of scaled point clouds is important.

In my implementation, even for some simple transformation of models, the ICP method does not converge. More cases can be easily constructed. The cause is mainly finding the right correspondance between source and target points. Mechanisms which assume a subset of point correspondance KNOWN (e.g. by color, light intensity) help.

I am eager to get input from readers on how to improve the code and possibly what I have done wrong. I am grateful for any article link or hint!

References

- “Horn” ICP method

-Berthold K. P. Horn (1987), “Closed-form solution of absolute orientation using unit quaternions” in Journal of the Optical Society of America A, vol. 4, page 629-642. See article link - Horn BKP, Hilden HM, Negahdaripour S (1988), "Closed-form solution of

absolute orientation using orthonormal matrices", J Opt Soc Am Ser A, vol 5, page 1127–1135

-Original python implementation of this method done by David G. Gobbi, later a C++ port in the VTK library, this was ported to C# by me. - “Umeyama” ICP Method

Umeyama (1991), "Least-squares estimation of transformation parameters between two point patterns", IEEE Transactions On Pattern Analysis And Machine Intelligence, vol. 13, no. 4, page 376–380 - “Zinsser” ICP Method

Timo Zinßer, Jochen Schmidt, Heinrich Niemann (2003), “A REFINED ICP ALGORITHM FOR ROBUST 3-D CORRESPONDENCE ESTIMATION” in IEEE International Conference on Image Processing, September 2003, Barcelona, Spain - “Du” ICP Method

Shaoyi Du, Nanning Zheng, Shihui Ying, Qubo You, Yang Wu (2007), “AN EXTENSION OF THE ICP ALGORITHM CONSIDERING SCALE FACTOR”, in Conference Image Processing, 2007. ICIP 2007. IEEE International Conference on, Volume: 5, see link -

Comparison of ICP Methods

D.W. Eggert, A. Lorusso, R.B. Fisher (1997), “Estimating 3-D rigid body transformations: a comparison of four major algorithms”, Machine Vision and Applications, vol. 9, page 272–290, Springer-Verlag.

-

K. S. Arun, T. S. Huang, and S. D. Blostein. Least square fitting of two 3-d point sets. IEEE Transactions on Pattern Analysis and Machine Intelligence, 9(5):698 – 700, 1987.

-

M. W. Walker, L. Shao, and R. A. Volz. Estimating 3-d location parameters using dual number quaternions. CVGIP: Image Understanding, 54:358 – 367, November 1991.

Other Code used

- VTK library - parts of the code was ported, basically for the Horn [1] method

- OpenTK control from my OpenTK Code Project article and the list of code used there:

Points of Interest

Further work:

-investigate and improve convergence

-apply the method for scanned data, investigate further convergence problems

-inclusion of inhomogenous transformations. e.g. by extension of the "Du" for other transformations

-speed up iteration convergence

-inclusion of outliers, precision improvement

See also

Point cloud viewer article

History

Version 0.9.0.6 submitted on 1/16/2015