1. Introduction

General Machine Intelligence System (hereafter “GMIS”) is a general-purpose robot operating system. Through a quasi-natural language, it gives ordinary users the freedom to drive robots programmatically to meet their individual needs.

Unlike existing operating systems, GMIS does not manage hardware; it focuses on the intelligence of a device’s logical behavior. It reduces the granularity of the computer’s execution unit from the application level to the function level, so that task logic can be compiled in real time, on demand.

On this basis, we have implemented a quasi-natural language to drive GMIS, which means an ordinary user gains the freedom to control a robot’s behavior in real time. We only need to talk to it as we would to Siri, and GMIS will work like a programmer to carry out the request.

More crucially, all of GMIS’s behaviors can be assembled into a logic tree for execution. This makes it easy to implement dynamic restructuring of logic, memory, learning, prediction, division of labor and so on, all of which are extremely important features for achieving strong AI. We are confident that there is no alternative solution.

To verify the theory, we have built a prototype, and we believe it achieves what we set out to do.

Through GMIS, we can turn any device based on a modern operating system into a robot, including but not limited to computers, mobile phones, home appliances, cars, aircraft, and various kinds of industrial and military equipment. The robot era will come within reach of ordinary users.

2. Background

Robot hardware is advancing rapidly, but traditional operation modes such as mouse-and-menu or remote control greatly limit the practical value of robots. The only way to solve this problem is to make a breakthrough in artificial intelligence.

Although various artificial intelligence theories have been proposed since 1956, none of them has succeeded in practice.

One widely used theory is the family of so-called neural algorithms, which claim to simulate the nerves of the human brain. It has been very misleading, leading people to think that, given enough computing power, we can achieve intelligence as high as that of humans.

In fact, we do not yet fully understand how nerves work. Technically, this kind of algorithm is an ordinary means of data analysis and processing. Like the other algorithms we use, it can solve some application problems, but it does not help to achieve true intelligence.

To achieve true intelligence, we must first understand what the essence of intelligence is.

Simply put, we believe intelligence derives from an agent, not from an algorithm. A river eventually flows into the sea not because it is able to find the way to the sea, but as an inevitable result of the interplay of gravity and the environment. A person appears to have intelligence not because he is born with knowledge, but as an inevitable result of the interaction between his instincts and the objective environment.

Hence, so-called intelligence is nothing but an interaction between an agent and the objective environment. The innate abilities, or instincts, of the agent determine the extent of its interaction with the objective world, and thus the degree of intelligence it can have.

The essence of artificial intelligence is to try to create an agent with certain instincts (organs), and to refine it until it meets our requirements.

Further, if the agent produces a behavior-feedback loop when interacting with the objective world, and the loop is self-sustaining, we say that the agent has autonomous intelligence.

Based on these viewpoints, we divide a complete robot design into two parts. One part is hardware-related: it determines what instincts (organs) a robot should have according to its specific field of application, and it is what diversifies robot species. The other part can be designed as a unified general system that handles all input and output messages, drives the robot’s instincts to interact with the objective world, and produces the loop that we want. This is the design goal of GMIS.

When a piece of information is received, GMIS decides how to use it to compile instincts into logical behaviors, and how to perform those behaviors so as to achieve features like those the human brain shows, such as dynamic logic recombination, logic reentrancy, memory, prediction, learning and so on.

More importantly, the logical behavior of GMIS supplies the logical context of the information, which is a prerequisite for intelligent interaction. From then on, a robot processes information no longer to please human beings, but to provide a basis for its own behavior.

If we use natural language as the input information, an ordinary user can use natural language to drive the robot to meet their needs instead of learning professional programming. This will open the door for robots to enter ordinary homes.

A unified system also means that robots based on GMIS are able to interconnect, learn from each other and divide labor logically.

If we build a system whose instincts (organs) are similar to those of humans, it may be possible to achieve autonomous intelligence much like that of humans.

3. Customizing Instincts

We now have a GMIS prototype, which will help us verify the viewpoints above.

With this prototype, we can turn hardware based on a modern operating system into a robot. To achieve this, the first thing we need to do is customize some instincts for the device.

An instinct here refers to a basic behavior or function of the hardware that can be combined with others to implement more complex actions.

From the programmer’s perspective, the difference between an instinct and a function is that a function is just a piece of code that is meaningful to programmers, whereas an instinct is a behavior that is meaningful to the robot itself or to its user. In other words, a single function can be an instinct, but an instinct can also be a program that includes many functions.

Though an instinct can be regarded as an application feature, it is usually implemented with certain hardware (an organ) as its carrier. For example, if a robot is to walk, it must have a hardware feature similar to a leg; if it is to hear sounds, it must have an organ like an ear.

Which instincts a robot should have depends on its intended purpose, but they should be as few as possible, because the efficiency of the brain is inversely proportional to their number.

In general, special-purpose robots should have very few instincts, because we demand efficiency from them rather than intelligence; some do not even have to interact with human users. Robots of this kind will make up the majority of robot species.

GMIS supports downward compatibility. GMIS-based robots always have the ability to communicate with each other, so we hope GMIS can become a unified standard for the IoT in the future.

Only a very few robots need human-like intelligence. They are the most complex kind of robot and the greatest challenge in the field of artificial intelligence.

With this objective in mind, we customized the instincts of the GMIS prototype on a PC running the Microsoft Windows operating system. Of course, “customized” here does not mean changing the PC hardware or giving it a leg, but using some software features of the operating system as its instincts. Through them we can demonstrate math operations, memory, logic control, natural language understanding and so on, so as to verify the design philosophy of GMIS.

If this works well, the only thing left to do is to add specific instincts (organs) to other GMIS-based robots.

The following discussion of GMIS is based entirely on the customized prototype. The details of the instincts are not listed here; you can find them in the appendix of the prototype’s manual.

4. Driving GMIS with a Quasi-Natural Language

As mentioned above, to use robots freely, we must allow users to drive them with natural language, and the robots must compile the meaning of that language into their own logical behavior to perform. This is the core objective of GMIS.

At present, we may not be able to get robots to directly understand human natural language, which has evolved over tens of thousands of years, but we should at least try to make them understand a quasi-natural language.

A quasi-natural language is a minimal subset of natural language: its syntax is as simple as possible, but its usage and form stay as consistent as possible with ordinary natural language.

Below, we demonstrate it using the GMIS prototype.

4.1 Initialization

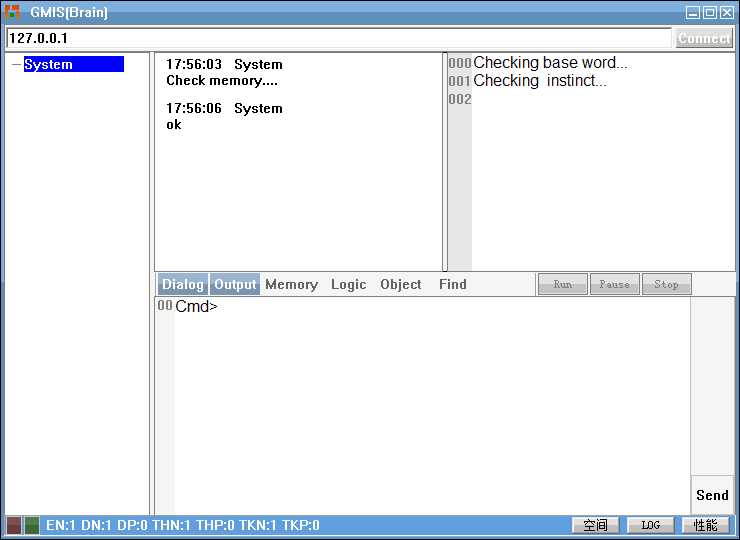

First, we open the main program of GMIS, that is, we activate the brain of the prototype.

For simplicity, we use a single input and output interface as an information organ integrated into GMIS, which makes the main program look a bit like an IM program.

[Figure: main window of the GMIS prototype]

Users enter commands into the edit box at the bottom and view the output, or other data they need to know, in the panes at the top. All logical threads or task dialogues that the brain currently owns are displayed on the left side.

By default, GMIS always has a system dialogue which, like the central nervous system, spawns child dialogues to perform the commands the user enters rather than performing them itself, so as to keep the brain itself running safely.

The remaining question is: how will GMIS interpret our input?

As mentioned earlier, GMIS was designed to be independent of information type, and initially it has only instincts. To use these instincts, the user must first mark them in GMIS’s memory with information familiar to the user; we can imagine this step as pasting labels onto goods in a supermarket. Using a label then means invoking the corresponding instinct. This is acquired learning: the labels gradually develop into a language, much as when we teach newborn babies.
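The labeling idea can be pictured as a simple lookup table. The Python sketch below is only an illustration under our own assumptions: the names `memory`, `teach`, `recall` and the identifier `INSTINCT_DEFINE` are hypothetical and are not taken from the GMIS source.

```python
# Hypothetical label memory: each taught label points to an instinct identifier.
memory = {}


def teach(label, instinct):
    """Paste a label (in any language) onto an instinct."""
    memory[label.strip().lower()] = instinct


def recall(label):
    """Return the instinct a label refers to, or None if it was never taught."""
    return memory.get(label.strip().lower())


# The same instinct can carry labels from different languages.
teach("define", "INSTINCT_DEFINE")
teach("定义", "INSTINCT_DEFINE")
```

A label that was never taught returns `None`, which corresponds to the case where GMIS has to tell the user it did not understand.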

What these pieces of information are depends on the robot’s information organ, just as dolphins can perceive ultrasound but humans cannot.

Of course, for the PC version of the GMIS prototype, the only information organ is a text editor, in which the user can directly enter any natural language.

For the convenience of users, when GMIS is first opened and initialized, it memorizes some English words and phrases as tags for its instincts, so that users can command GMIS in English right away. On this basis, speakers of other mother tongues can teach it any language.

As Chinese is my mother tongue, it has also learned Chinese, so it can even understand mixed English-Chinese input.

4.2 Final C Language

Now, let us experience what the quasi-natural language is and how it drives GMIS.

Enter a command to test:

Define int 32;

Here “define” is the predicate verb, “int” (short for integer) is the object, and “32” is the object complement. The command does just what its literal meaning says.

Do an addition:

Define int 4, define int 5, use operator +;

What is worth noting here is the form of the commands rather than their effect. From now on, no matter how complex the logic is, every command entered into GMIS takes this form: each action logic is one sentence ending with a period or a semicolon; each sentence consists of one or more clauses; each clause is one behavior, and clauses are separated by commas. Nothing more is required.
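To make the sentence form concrete, here is a minimal Python sketch of how such input could be split. It is our own illustration, not the GMIS parser: the function name `split_sentences` is hypothetical, only the semicolon terminator is handled, and quoted strings are assumed to contain no commas or semicolons.

```python
def split_sentences(text):
    """Split input into sentences (ended by ';') and clauses (separated by ',')."""
    sentences = []
    for raw_sentence in text.split(";"):
        # Each clause is one behavior; drop empty fragments left by trailing separators.
        clauses = [clause.strip() for clause in raw_sentence.split(",") if clause.strip()]
        if clauses:
            sentences.append(clauses)
    return sentences


# One sentence with three clauses.
print(split_sentences("Define int 4, define int 5, use operator +;"))
```

Each inner list is one sentence, and each string in it is one clause that would be mapped to an instinct.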

Does this mean that users can freely use natural language to command GMIS to do anything? We hope so, but in reality the prototype does not yet have such a mature ability.

The first restriction is that GMIS only understands the commands we have taught it. But this is just a beginning.

Another restriction is that, at present, GMIS cannot understand most natural language syntax other than the subject-predicate-object clause (the subject is assumed to be the robot itself, so a clause usually consists of only a predicate and an object). This is not a serious problem either: when understanding fails, GMIS prompts us.

But the biggest limitation is that, when we compose commands that drive the robot to do complex tasks, we have to bend to the realities of programming and deviate from some intuitions of natural language use.

To understand this point, we must first explain how GMIS’s instincts are executed.

In short, each instinct is like a function. During task execution, GMIS provides a pipeline, like a nerve, that connects the functions and supplies the parameters they require. If a function outputs any data when it completes, GMIS puts that data back into the pipeline for the next instinct, and so on, round after round, until the entire task logic is finished.

Take the above command as an example:

[Figure: pipeline execution of the addition example]

Before the execution pipeline enters any instinct (function), the types of the data in the pipeline must match what the instinct requires. GMIS checks this automatically and interrupts the task if any data does not match. So to compose complex logic from instincts, the user must know clearly how each instinct changes the data in the pipeline when it finishes, as well as what data types the next instinct requires. The user has to adjust the data in the pipeline where necessary.
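The pipeline and its type check can be sketched in Python as follows. This is a simplified model under our own assumptions, not GMIS code: the pipeline is a plain list, and the names `PipelineError`, `define_int`, `add` and `run` are hypothetical.

```python
class PipelineError(Exception):
    """Raised when pipeline data does not match what an instinct requires."""


def define_int(value):
    """Build an instinct that puts an integer into the pipeline."""
    def instinct(pipe):
        pipe.append(int(value))
    return instinct


def add(pipe):
    """Take the first two numbers out of the pipeline and put back their sum."""
    if len(pipe) < 2 or not all(isinstance(x, (int, float)) for x in pipe[:2]):
        raise PipelineError("operator + requires two numbers in the pipeline")
    a, b = pipe.pop(0), pipe.pop(0)
    pipe.append(a + b)


def run(instincts):
    """Pass one pipeline through the instincts in series and return it."""
    pipe = []
    for instinct in instincts:
        instinct(pipe)
    return pipe


# Model of: Define int 4, define int 5, use operator +;
result = run([define_int(4), define_int(5), add])
```

If a string were put into the pipeline before `add`, the check would raise `PipelineError`, which mirrors GMIS interrupting the task on a type mismatch.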

Therefore, the language that GMIS accepts is still not true natural language but a quasi-natural language. We call it Final C, namely the final C programming language. It is an intermediate language between traditional high-level programming languages and natural language. In our view, GMIS will be able to complete the transition to natural language in the future; as a prototype, it achieves only this first step.

One obvious benefit of Final C is that it makes it possible to control robots directly by voice, in real time, to do logical tasks, rather than by keyboard or mouse. (You may not have noticed, but even a program written in the most advanced scripting language is hard to read aloud.)

This may appear to be only an improvement in form, but form is one of the main barriers restricting robot development. We all know that it is very hard to express complex logic by controlling robots with a keyboard or mouse; a high-level programming language can express it, but hardly in real time, and we cannot make everyone learn professional programming. With Final C, ordinary users gain the freedom to drive robots.

4.3 However, Can It Really Perform Any Logic?

Yes, it can.

To prove this, we let GMIS perform a slightly more complex task: output “hello world” 9 times in a loop.

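According to structured programming theory, any program logic can be built from sequence, selection and iteration. Since this task exercises all three, if GMIS performs it well, it can in principle handle logic of any complexity.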

Before GMIS begins to execute the task, let us recall some physics that we learned in high school:

- Series and parallel circuits: Series circuits need no explanation. For parallel circuits, we only need to know that current flows through all the branches at the same time. This is very important for GMIS, which uses the same concept: the relationship between logical behaviors is either series or parallel, and in the parallel case the execution pipeline replicates itself to provide the same data to each branch.

- Capacitor: A capacitor absorbs energy from the current when it is empty, and releases the energy back into the current when it is full. GMIS simulates this behavior to implement temporary data storage.

- Resistor: A resistor consumes energy from the current. For GMIS, it means erasing a specified number of data items from the execution pipeline.

- Diode: A diode lets current pass in one direction only. GMIS extends this a little: its diode selectively lets the current through.

With the knowledge points above, let us draw a circuit diagram for the task:

[Figure: circuit diagram of the loop example]

Each command in the diagram is one of GMIS’s instincts, and its role is easy to understand from its literal meaning.

The trick to understanding this diagram is to read it like a circuit diagram, knowing what data the current carries at each node.

Let us briefly trace the execution process:

- (1) First, define an integer 0. The execution pipeline then contains the data: 0.

- (2) The pipeline meets the empty capacitor “cp”. When the pipeline flows through an empty capacitor, all data in the pipeline is saved into it.

- (3) Set the logical break point “lab1”, which has no effect on the pipeline.

- (4) Reference the capacitor “cp” created above. At this point the capacitor is charged, so it discharges: all data in the capacitor is put back into the pipeline, which now contains the data: 0.

- (5) Define an integer 1, which is the loop step size. After this, the pipeline contains the data: 0 and 1.

- (6) Execute an addition. It takes the first two data items out of the pipeline, which must be numbers or the system reports an error, and pushes the result back, so the pipeline now contains only the data: 1.

- (7) (8) We now enter a parallel body. The pipeline copies itself so that each branch receives the same data. In (7), the pipeline flows into the capacitor “cp”; since “cp” has already discharged, it stores all the data of the pipeline again, namely: 1. In (8), the pipeline flows through a zero resistor, which changes no data. When (7) and (8) are both done, the two pipelines are merged in order into one. The net effect is that the loop count is stored in the capacitor “cp” and the single outgoing pipeline still contains the data: 1.

- (9) Define the loop termination count 10. The pipeline then contains the data: 1, 10.

- (10) Execute a comparison. It removes two numbers from the pipeline and pushes the result of the comparison back into the pipeline, either 0 (false) or 1 (true).

- (11) (12) This is another parallel body. Each branch begins with a diode, which takes a number out of its own pipeline and checks whether it equals the diode’s value. If they are equal, the branch continues to the next step; otherwise the branch is cut off and executes no further. With the data 1 in the pipeline, branch (12) continues, and it keeps being taken for as long as the loop condition is met. When (12) is done, the pipeline contains no data.

- (14) Define a string. The pipeline now contains one string: “Hello World”.

- (15) Print all data in the pipeline, which gives us the output we want.

- (16) Define a resistor of 10, which deletes the first ten data items in the pipeline. The pipeline obviously does not hold that much data; this is essentially a precaution that clears all data in the pipeline.

- (17) Finally, jump back to (3), where we set the logical break point, and repeat the above steps until execution enters parallel branch (11), which completes the entire task.
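The trace above can be replayed with a small Python simulation. It is a sketch under our own assumptions rather than GMIS code: the pipeline is modeled as a plain list, and the names `Capacitor`, `resistor`, `diode`, `add`, `less_than` and `run_loop` are hypothetical. The step numbers in the comments refer to the trace.

```python
class Capacitor:
    """Stores the whole pipeline when empty; releases it when charged."""

    def __init__(self):
        self.charge = None  # None means the capacitor is empty

    def flow(self, pipe):
        if self.charge is None:
            self.charge = list(pipe)   # charge: absorb all pipeline data
            return []
        released, self.charge = self.charge, None
        return list(pipe) + released   # discharge: put the data back


def resistor(pipe, n):
    """Erase the first n data items from the pipeline."""
    return pipe[n:]


def diode(pipe, value):
    """Take one number; pass the rest on if it equals value, else cut the branch."""
    if pipe and pipe[0] == value:
        return pipe[1:]
    return None


def add(pipe):
    return pipe[2:] + [pipe[0] + pipe[1]]


def less_than(pipe):
    return pipe[2:] + [1 if pipe[0] < pipe[1] else 0]


def run_loop(limit=10):
    printed = []
    cp = Capacitor()
    pipe = cp.flow([0])                            # (1)(2) define int 0, charge cp
    while True:                                    # (3) label lab1
        pipe = cp.flow(pipe)                       # (4) discharge cp
        pipe = add(pipe + [1])                     # (5)(6) add the step size
        pipe = cp.flow(pipe) + resistor(pipe, 0)   # (7)(8) parallel body, merged in order
        pipe = less_than(pipe + [limit])           # (9)(10) compare with the limit
        loop_branch = diode(pipe, 1)               # (12) taken while the condition holds
        if loop_branch is None:                    # (11) the diode 0 branch ends the task
            return printed
        pipe = loop_branch + ["hello world"]       # (14) define the string
        printed.extend(pipe)                       # (15) output the pipeline
        pipe = resistor(pipe, 10)                  # (16) clear the pipeline
        # (17) jump back to lab1
```

Calling `run_loop()` returns the list of printed items, one “hello world” per pass through branch (12).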

Now, let us turn this diagram into Final C. Just as people pondering a problem break complex logic down into smaller, simpler tasks, GMIS does the same here.

Let us first enter:

think logic circle;

This command puts the brain into thinking mode, which means the next command entered will not be executed immediately; instead it creates a temporary logic named “circle”.

Then enter:

use logic a, set label "lab1", use logic b, use logic c;

The temporary logic “circle” has now been created, and we can see it in the logic view. It consists of three child logics: logic “a” defines the loop’s starting count, logic “b” judges the loop condition, and logic “c” carries out the actual work.

Now let us implement these three logics:

think logic a;

define int 0, use capacitor cp;

think logic b;

reference capacitor cp, define int 1, use operator +, reference capacitor cp, and use resistor 0, define int 10, use operator <;

The above sentences can be entered together.

Note: in “reference capacitor cp, and use resistor 0”, an “and” is inserted between the two clauses, meaning that the two commands are in a parallel logical relation and are logically executed at the same time.
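One way to picture how “and” marks a parallel relation is to group clauses before execution. The Python sketch below is our own illustration; the function name `group_parallel` is hypothetical, and the real GMIS parser may treat “and” differently.

```python
def group_parallel(clauses):
    """Group clauses: one starting with 'and ' runs in parallel with the previous one."""
    groups = []
    for clause in clauses:
        clause = clause.strip()
        if clause.lower().startswith("and ") and groups:
            # Join the previous group as an additional parallel branch.
            groups[-1].append(clause[4:].strip())
        else:
            # Start a new group that runs in series after the previous one.
            groups.append([clause])
    return groups


groups = group_parallel([
    "reference capacitor cp",
    "and use resistor 0",
    "define int 10",
])
```

A group with more than one clause is a parallel body; groups themselves run in series.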

think logic c;

use logic c1, and use logic c2;

Logic “c” is likewise divided into two child logics in a parallel relation: logic “c1” handles the case where the loop condition is met, and logic “c2” handles the case where the loop termination condition is met.

think logic c1;

use diode 1, define string "hello world", view pipe, use resistor 1000, goto label "lab1";

think logic c2;

use diode 0, use resistor 1000;

Finally, let us enter the command below to perform the whole task.

use logic circle;

5. The Biggest Advantage: Logic Tree

So far, we have shown that Final C can express any logic, but is it really necessary to do it this way?

A high-level programming language might complete the loop example above in a single line of code, whereas Final C took 17 steps.

As the intelligence of GMIS improves in the future, we believe this complexity can be eliminated, just as high-level programming languages eliminated the complexity of assembly language.

The reason we have to use Final C is that it gives us an essential condition for achieving artificial intelligence: the logic tree. This is the core of the whole story.

In order to display the tree, let us enter:

debug;

The brain then switches into debug execution mode.

Let us execute the previous loop task once again:

use logic circle;

This time, you can see a logic tree in the debug output window.

[Figure: logic tree in the debug output window]

We can click the “Step” button to execute the instincts one by one, viewing in the output window what data the execution pipeline currently holds.

Note that this execution mode is not specially prepared for debugging; it is a normal behavior that robots should have. GMIS can switch freely between the two modes at any time, just as human beings, on encountering something unexpected, slow down and make adjustments until they are sure no other problem has occurred, and then return to the normal execution mode to continue the task.

So what special benefits does the logic tree bring us?

Dynamically Change Logic at Runtime

We believe this is the key for future robots to achieve autonomous artificial intelligence.

A logic tree has an important property: no matter how its branches are combined, added or deleted, it is still a logic tree. This means robots can deal with dynamic real-time scenes rather than working rigidly. It is a real simulation of the human brain.
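This closure property can be illustrated with a small tree structure in Python. The sketch is our own and makes assumptions that are not from the GMIS source: the class name `Node`, its `kind` values and the `leaves` method are hypothetical, and the tree models the “circle” logic from the loop example.

```python
class Node:
    """A logic-tree node: a leaf instinct, or a 'series'/'parallel' group of children."""

    def __init__(self, kind, label="", children=None):
        self.kind = kind                  # "instinct", "series" or "parallel"
        self.label = label
        self.children = children or []

    def leaves(self):
        """Return the labels of all instincts in the tree, in order."""
        if self.kind == "instinct":
            return [self.label]
        return [leaf for child in self.children for leaf in child.leaves()]


# The "circle" logic: a, label lab1, b in series, then c1 and c2 in parallel.
b = Node("instinct", "b")
c = Node("parallel", "c", [Node("instinct", "c1"), Node("instinct", "c2")])
circle = Node("series", "circle", [Node("instinct", "a"), Node("instinct", "lab1"), b, c])

before = circle.leaves()

# Change the logic at runtime: add a branch and delete another.
c.children.append(Node("instinct", "c3"))
circle.children.remove(b)

after = circle.leaves()
```

After both changes, `circle` is still a valid tree that can be walked in the same way, which is the property the text relies on.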

Easy to Memorize and Forecast

A logic tree is easy to memorize and to forecast from, which helps in understanding natural language within its logical context. After memorizing a certain amount of logic, GMIS can more easily figure out what you really mean, resolve word ambiguity at its root, and forecast the next action, which lays the foundation for autonomous intelligence.

Logic Reentrancy

Also thanks to the logic tree execution mode, we can easily suspend a logic task, save its execution state, and later resume it from the point of suspension. The same thing often happens in our lives. From childhood on, we try to do many things, but most of them have to be suspended because the conditions to complete them are not there; they are all stored in our memory and constitute our life experience. We can pick them up again when necessary and change the retrieved logic to meet our current needs.
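Suspending and resuming a task can be sketched with a Python generator, which keeps its execution state between steps. This is only an analogy under our own assumptions: the function name `run_steps` and the way instincts are represented are hypothetical, and GMIS stores its state in the logic tree rather than in a generator.

```python
def run_steps(instincts, pipe):
    """Execute instincts one at a time, pausing after each so the task can be resumed."""
    for name, instinct in instincts:
        instinct(pipe)
        yield name, list(pipe)   # execution is suspended here until the next request


task = run_steps(
    [
        ("define int 4", lambda p: p.append(4)),
        ("define int 5", lambda p: p.append(5)),
        ("use operator +", lambda p: p.append(p.pop(0) + p.pop(0))),
    ],
    [],
)

first = next(task)          # run one step, then the task is suspended
# ... other work can happen here while the task keeps its state ...
rest = list(task)           # resume from the suspended point and finish
```

The same stepping pattern also resembles pressing the “Step” button in debug execution mode.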

Inborn Parallel Execution

As mentioned above, GMIS’s parallel execution works like a parallel circuit, which gives GMIS an inborn ability to execute in parallel.

We only need to say “do something A and do something B” to build a parallel task. This substantially lowers the threshold for using parallel computing.

Cooperate with Other Robots

The logic tree execution mode lets one GMIS invoke other GMIS instances in its task logic just as it invokes its own instincts, thereby achieving cooperation between robots.

And because of its natural ability to execute in parallel, the cooperation is two-way: while a GMIS calls other robots, it can itself be called by other robots at the same time. As a result, robots can achieve a human-like division of labor.

A typical application is that GMIS allows an arbitrary number of computing devices (such as mobile phones and PCs) to be assembled in real time into several supercomputers that serve many users at the same time.

To increase the practical value of GMIS, we have given it the ability to use external objects. External objects here are the various executable components that already exist in today’s IT world, such as operating system APIs, APIs provided by websites, various applications and so on. All of them can be wrapped as external objects for GMIS to use, through which a robot can acquire special skills.

To show the features of GMIS more intuitively, we have recorded some demo videos.

The GMIS prototype is fully open source: you can download the prototype and all the code from gmis.github.io.

6. Conclusion

In a word, GMIS’s support for linearly expressed messages makes it possible for it to directly understand natural language in the future, and the execution mechanism based on the logic tree makes it possible to eventually achieve the transformation from passive intelligence to independent intelligence.

You may be a little disappointed that you did not find intelligent feats like those of Siri or Google Now in GMIS. But can you imagine an intelligent robot that lacks the abilities of GMIS described above?

The philosophical foundation on which GMIS is based is so simple that we believe it is hard to find an alternative solution.