Mingliang dev homepage / Project page

Introduction

I recently had to process video clips and extract some features for further analysis. The basic idea was straightforward: extract each frame of the video into a bitmap and then do whatever I wish with it. I did not want to get bogged down in the details of video decoding. I just wanted an existing, easy-to-use framework to extract the frames. That's when DirectShow, the widely used framework, came under consideration.

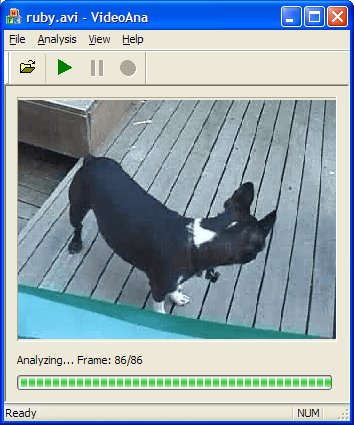

I searched the web for solutions and did find some. Unfortunately, most of them focus on grabbing a single frame, which is far from efficient for my task. The typical extraction speed of such methods is around 5 to 8 frames per second on my machine, as discussed later in this article. Keep in mind that the typical playback rate of a video is 25 frames per second! Thus, I had to dig into the DirectShow framework and write something myself. Fortunately, I ultimately achieved a speed above 300 frames per second on the DirectShow sample video ruby.avi, which admittedly has a small frame size and is not heavily compressed :-).

Background

Here are the approaches I found that could -- or are supposed to -- finish the task.

IMediaDet

This is probably the easiest solution to use. There is an article that demonstrates it, but unfortunately it is written in Chinese, so most readers of my article will probably not be able to follow it. The main idea of this approach is:

- Create an instance of the IMediaDet interface.
- Open a video file.
- Enumerate all streams from the video using get_OutputStreams() and find the video stream.
- Get information regarding the video stream, e.g. duration or the frame image size.
- Use GetBitmapBits() to obtain the frame image at some specified media time, or WriteBitmapBits() if you simply want to save a snapshot of that frame to a file.

Perhaps this is the simplest way to enumerate all of the frames of a video file. Unfortunately, its performance is unsatisfactory, as I mentioned before.
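
To make the enumeration step concrete: with this approach, each frame is requested by its own media time. The sketch below is only an illustration under stated assumptions. FrameTimes is a made-up helper name, not a DirectShow API, and it assumes a constant frame rate and that the duration and frame rate have already been read from the video stream. It computes the list of media times that would be handed to GetBitmapBits(), one call per frame.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper (not part of DirectShow): lists the media time, in
// seconds, of every frame in a stream of the given duration, assuming a
// constant frame rate.
std::vector<double> FrameTimes(double durationSec, double framesPerSec)
{
    assert(framesPerSec > 0.0);

    std::vector<double> times;
    if (durationSec <= 0.0)
        return times;

    // Round to the nearest whole frame to absorb floating-point error.
    const long frameCount = std::lround(durationSec * framesPerSec);
    times.reserve(static_cast<std::size_t>(frameCount));
    for (long i = 0; i < frameCount; ++i)
        times.push_back(i / framesPerSec);

    // With IMediaDet, each of these times would be passed to
    // GetBitmapBits() in turn.
    return times;
}
```

For example, FrameTimes(2.0, 25.0) yields 50 media times, from 0 s up to 1.96 s.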

ISampleGrabber, One-shot Mode

There is a good article demonstrating this approach. It is not much more difficult to use than IMediaDet, but its performance is also not much better. The advantages of using this over IMediaDet are:

- Ease of use.

- Ease of process control, as you can freely jump to any part of the video or just finish the job.

So, this is suitable for just getting some snapshots from the video.

Write a Transform Filter

By writing your own transform filter, you can do your job inside the DirectShow framework itself. This approach is the most powerful and efficient one. On the other hand, you need to know DirectShow quite well to get it to work, and implementing a transform filter is quite difficult. There are several MSDN articles explaining how to write a transform filter, as well as an example implementing a sample grabber trans-in-place filter. Note that the sample grabber demonstrated there is much like ISampleGrabber working in callback mode, not in one-shot mode. This seemed too difficult for me, however, and there appeared to be too many things to consider, since I am only a beginner at DirectShow. In the end, I decided not to use this approach.

Alternatives to DirectShow

As far as I know, there is another widely used framework that could complete my task: OpenCV. OpenCV is a well-known, open source, cross-platform computer vision library, of which video handling is merely a small part. I ran into some build problems with it initially, but maybe I should look into it later.

Using the Code

My Framework

In the end, I chose ISampleGrabber in callback mode rather than one-shot mode. That is, I run the DirectShow graph and it continuously decodes the frames of a video file. Each time a frame is decoded, DirectShow calls a user-defined callback and passes it the image data. The analysis work can be done in that callback.
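
The callback idea can be illustrated without any DirectShow headers. The sketch below is a portable stand-in, not the real API: FrameCallback only mimics the shape of a sample grabber callback (sample time, buffer pointer, buffer length), and RunFakeGraph is a made-up function that plays the role of the running graph by handing synthetic frames to the callback one after another.

```cpp
#include <cstddef>
#include <vector>

typedef unsigned char BYTE;

// Stand-in for a sample grabber callback interface (hypothetical).
struct FrameCallback
{
    virtual ~FrameCallback() {}
    // Called once per decoded frame; return false to stop early.
    virtual bool OnFrame(double sampleTime, BYTE *pBuffer, long bufferLen) = 0;
};

// Example analyzer: counts frames and sums the first byte of each one.
struct CountingAnalyzer : FrameCallback
{
    long frameCount;
    long firstByteSum;
    CountingAnalyzer() : frameCount(0), firstByteSum(0) {}

    bool OnFrame(double, BYTE *pBuffer, long bufferLen)
    {
        ++frameCount;
        if (bufferLen > 0)
            firstByteSum += pBuffer[0];
        return true;
    }
};

// Stand-in for the running graph (hypothetical): "decodes" nFrames
// synthetic frames of frameBytes bytes each and pushes every one to the
// callback. Returns the number of frames delivered.
long RunFakeGraph(FrameCallback &callback, long nFrames,
                  long frameBytes, double framesPerSec)
{
    std::vector<BYTE> buffer(static_cast<std::size_t>(frameBytes));
    long delivered = 0;
    for (long i = 0; i < nFrames; ++i)
    {
        // Fill the frame with a value derived from its index.
        for (std::size_t k = 0; k < buffer.size(); ++k)
            buffer[k] = static_cast<BYTE>(i);
        ++delivered;
        if (!callback.OnFrame(i / framesPerSec, buffer.data(), frameBytes))
            break;
    }
    return delivered;
}
```

The point of the design is that the decoder drives the loop and the analysis code only reacts to each frame as it arrives, instead of asking for frames one at a time.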

Setup of the Graph

The analysis framework needs a few extra components, so the DirectShow graph for analysis looks like this: an ISampleGrabber is added right after the source filter, which does the decoding work. The ISampleGrabber is followed by a NullRenderer, which simply does no further rendering work. The most commonly used code for connecting filters that I found on the Internet looks like this:

    HRESULT ConnectFilters(IGraphBuilder *pGraph,
        IBaseFilter *pFirst, IBaseFilter *pSecond)
    {
        IPin *pOut = NULL, *pIn = NULL;
        HRESULT hr = GetPin(pFirst, PINDIR_OUTPUT, &pOut);
        if (FAILED(hr)) return hr;
        hr = GetPin(pSecond, PINDIR_INPUT, &pIn);
        if (FAILED(hr))
        {
            pOut->Release();
            return E_FAIL;
        }
        hr = pGraph->Connect(pOut, pIn);
        pIn->Release();
        pOut->Release();
        return hr;
    }

For some video files, though, the call pGraph->Connect(pOut, pIn) fails. I checked and found that the source filter for such files has more than one output pin, and GetPin() just returns the first one. The returned output pin may not be the one that outputs video frame data. So, I modified the code to try each output pin in turn until one connects, which made it work on the video files supported by DirectShow that I tried:

    HRESULT ConnectFilters(IGraphBuilder *pGraph,
        IBaseFilter *pFirst, IBaseFilter *pSecond)
    {
        IPin *pOut = NULL, *pIn = NULL;
        HRESULT hr = GetPin(pSecond, PINDIR_INPUT, &pIn);
        if (FAILED(hr)) return hr;

        IEnumPins *pEnum = NULL;
        hr = pFirst->EnumPins(&pEnum);
        if (FAILED(hr))
        {
            pIn->Release();
            return hr;
        }

        // Report failure unless one of the output pins connects.
        hr = E_FAIL;
        bool bConnected = false;
        while (!bConnected && pEnum->Next(1, &pOut, NULL) == S_OK)
        {
            PIN_DIRECTION PinDirThis;
            if (SUCCEEDED(pOut->QueryDirection(&PinDirThis))
                && PINDIR_OUTPUT == PinDirThis)
            {
                hr = pGraph->Connect(pOut, pIn);
                bConnected = SUCCEEDED(hr);
            }
            // Release every enumerated pin exactly once.
            pOut->Release();
        }

        pEnum->Release();
        pIn->Release();
        return hr;
    }

However, if we mistakenly open an audio file such as an MP3, the code above blocks and ConnectFilters() never returns. I don't know how to avoid this :-(.

Control the Analyzing Process

Controlling the analysis process in my framework is much the same as writing a video player using DirectShow. After the graph is set up, call pControl->Run() to start the analysis. After that, you may call pEvent->WaitForCompletion(INFINITE, &evCode) to wait until the analysis finishes. Alternatively, you may call other control methods of IMediaControl to pause or stop the analysis.

Analyzing the Video

Most of the video handling in my framework is encapsulated in CVideoAnaDoc. All you have to do to analyze the video is write your own analysis code in CVideoAnaDoc::ProcessFrame(). The framework calls CVideoAnaDoc::ProcessFrame() every time a new frame arrives and passes the frame image data as its parameters. An example is shown below:

    HRESULT CVideoAnaDoc::ProcessFrame(double SampleTime,
        BYTE *pBuffer, long nBufferLen)
    {
        // Save every 10th frame as a bitmap file.
        if (0 == m_nCurFrame % 10)
        {
            CString strFilename;
            strFilename.Format("C:\\Snap%d.bmp", m_nCurFrame / 10);
            FILE *pfSnap = fopen(strFilename, "wb");
            if (pfSnap != NULL)  // skip the snapshot if the file cannot be created
            {
                fwrite(&m_Bfh, sizeof(m_Bfh), 1, pfSnap);
                fwrite(&m_Bih, sizeof(m_Bih), 1, pfSnap);
                fwrite(pBuffer, nBufferLen, 1, pfSnap);
                fclose(pfSnap);
            }
        }

        // Read the pixel at column x, row y. This assumes 24-bit bottom-up
        // frames: rows are padded to 4 bytes and pixels are stored as B, G, R.
        int x = 0;
        int y = 0;
        int nLineBytes = (m_Bih.biWidth * 24 + 31) / 32 * 4;
        BYTE *pLine = pBuffer + (m_Bih.biHeight - y - 1) * nLineBytes;
        BYTE *pPixel = pLine + 3 * x;
        BYTE B = *pPixel;
        BYTE G = *(pPixel + 1);
        BYTE R = *(pPixel + 2);

        m_nCurFrame++;
        return S_OK;
    }
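
The pixel addressing at the end of ProcessFrame() is easier to check in isolation. Here is a minimal sketch, assuming the buffer holds a 24-bit, bottom-up bitmap as in the example above. RowStride and PixelAt are illustrative names and are not part of the demo project.

```cpp
#include <cassert>

typedef unsigned char BYTE;

// Bytes per scan line: each row is padded to a multiple of 4 bytes.
inline int RowStride(int width, int bitsPerPixel)
{
    return (width * bitsPerPixel + 31) / 32 * 4;
}

// Pointer to the pixel at column x, row y, where y = 0 is the TOP row of
// the image. Assumes 24 bits per pixel stored as B, G, R and rows stored
// bottom-up, so the top row sits at the end of the buffer.
inline const BYTE *PixelAt(const BYTE *pBuffer, int width, int height,
                           int x, int y)
{
    assert(x >= 0 && x < width && y >= 0 && y < height);
    const int stride = RowStride(width, 24);
    return pBuffer + (height - y - 1) * stride + 3 * x;
}
```

For a 3-pixel-wide image, RowStride(3, 24) is 12 rather than 9, because three padding bytes follow each row. Forgetting that padding is a common cause of skewed images when the width is not a multiple of 4.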

About the Demo Project

The demo project I provided is in Visual Studio 8.0 (Visual Studio 2005) format. The DirectShow SDK, which used to be part of the DirectX SDK, is now included in the Platform SDK. The Platform SDK I downloaded from Microsoft no longer supports Visual Studio 6.0 (refer to this), so I was not able to provide a Visual Studio 6.0 version. I also do not have Visual Studio 7.x installed on my system, so there is no Visual Studio 7.x version either. However, if you create a proper project in Visual Studio 7.x and copy all of the code from my project into it, it should work.

History

- Version 3, 2008-03-02:
  - Fixed a bug that may cause resource leaks.
  - Now uses notifications instead of a thread waiting for processing to complete.
  - A tiny update to the memory management.

- Version 2, 2007-06-12:
  - Fixed a bug that caused resource leaks. (Thanks to bowler_jackie_lin)
  - Fixed a bug that may have caused a false frame count to be retrieved at the beginning of analysis.

- Version 1, 2006-07-02: