Download WcfAsyncRestApi.zip - 30.18 KB

Introduction

At 9 AM, during the peak traffic for

your business, you get an emergency call that the website you built is down.

It’s not responding to any request. Some people can see a page after waiting

for a long time but most can’t. So, you think it must be some slow query or the

database might need some tuning. You do the regular checks like looking at CPU

and Disk on database server. You find nothing is wrong there. Then you suspect

it must be webserver running slow. So, you check CPU and Disk on webservers.

You find no problem there either. Both web servers and database servers have

very low CPU and Disk usage. Then you suspect it must be the network. So, you

try a large file copy from webserver to database server and vice versa. Nope,

file copies perfectly fine, network has no problem. You also quickly check RAM

usage on all servers but find RAM usage is perfectly fine. As the last resort, you run some diagnostics

on Load Balancer, Firewall, and Switches but find everything to be in good

shape. But your website is down. Looking at the performance counters on the

webserver, you see a lot of requests getting queued, and there’s very high

request execution time, and request wait time.

So, you do an IIS restart. Your website comes back

online for a couple of minutes and then goes down again. After restarting

several times you realize it’s not an infrastructure issue. You have a

scalability issue in your code. All the things you have read

about scalability, which you dismissed as fairy tales that would

never happen to you, are now happening right in front of you. You realize you

should have made your services async.

What is the problem here?

There’s high Request Execution Time. It means requests

are taking awfully long to complete. Maybe the external service you are

consuming is too slow or down. As a result, threads aren’t getting released at

the same rate as incoming requests are coming in. So, requests are getting

queued (hence the Requests in Application queue > 0) and not getting

executed until some thread is freed from the thread pool.

In an N-tier application you can have multiple layers

of services. You can have services consuming data from external services. This

is common in Web 2.0 applications gathering data from various internet services

and also in Enterprise Applications where you are part of an organization

offering some services to your own apps but those services need to consume

other services exposed by other parts of the organization. For instance, you

have a service layer built using WCF talking to an external service layer which

may or may not be a WCF service layer.

In such architecture you need to consider using Async

services for better performance if you have high number of requests, say around

100 requests/sec per server. If the external service is over WAN or internet or

takes long time to execute, then using Async service is no longer optional to

achieve scalability, because long running services will exhaust threads from

.NET thread pool. Calling another async WCF service from an async WCF service

is easy, you can easily chain them. But if the external service is not WCF and

supports only HTTP (e.g. REST), then it gets outrageously complicated. Let me show

you how you can chain multiple async WCF services and chain plain vanilla HTTP

services with async WCF services.
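To see why a slow external service exhausts threads so quickly, a rough estimate helps. The sketch below applies Little's law (requests in flight = arrival rate × time per request). The 100 requests/sec figure comes from the paragraph above; the 6-second latency is an assumed value for a slow external call, and `ThreadDemand` is a hypothetical name used only for illustration.

```csharp
using System;

public static class ThreadDemand
{
    // Little's law: average requests in flight = arrival rate x time per request.
    // In a sync service every in-flight request holds one thread.
    public static double RequestsInFlight(double requestsPerSecond, double secondsPerRequest)
    {
        return requestsPerSecond * secondsPerRequest;
    }

    public static void Main()
    {
        // 100 requests/sec is the load mentioned in the text;
        // 6 seconds is an assumed latency for the external service.
        double inFlight = RequestsInFlight(100, 6);
        Console.WriteLine("Threads held by a sync service: about {0}", inFlight);
    }
}
```

Under these assumptions a sync service would need roughly 600 threads just to keep up, which is why releasing the thread during the external call matters.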

What is an Async Service?

An async service does not occupy a thread while it is

waiting on an IO operation. You can fire an external async IO operation like calling

an external webservice, or hitting some HTTP URL or reading some file

asynchronously. While the IO operation is happening, the thread that’s

executing the WCF request is released so that it can serve other requests. As a

result, even if the external operation takes long to return a result, you

aren’t occupying threads from .NET Thread Pool. This prevents thread congestion

and improves scalability of your application. You can read details about how to

make services Async from this MSDN article.

In a nutshell, sync services are what we usually do, we just call a service and

wait for it to return the result.

Here your client app is calling your service which in

turn makes a call to an external service to get the data. Until the external

service responds, the server has a thread occupied. When the result arrives

from StockService, the server returns the

result to client and releases the thread.

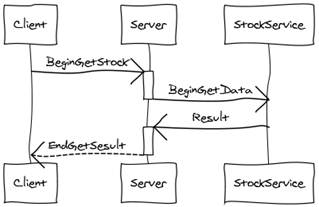

But in an async service model, server does not wait

for the external service to return result. It releases the thread right after

firing the request to StockService.

As you see from the diagram, as soon as Server calls

BeginGetData on StockService, it releases the thread represented by the

vertical box on the server lifeline. But client does not get any response yet.

Client is still waiting. WCF will hold the request and not return any response

yet. When the StockService completes its execution, Server picks a free thread

from the ThreadPool and then executes the rest of the code in Server and

returns the response back to Client.

That’s how Async Services work.

Show me the service

Let’s see a typical async WCF service. Say you have

this service:

public interface IService

{

[OperationContractAttribute] string GetStock(string code);

}

If you want to make this async, you create a Begin and End pair.

public interface IService

{

[OperationContractAttribute(AsyncPattern = true)]

IAsyncResult BeginGetStock(string code, AsyncCallback callback, object asyncState);

string EndGetStock(IAsyncResult result);

}

Now if you have done async services in the good old

ASMX service in .NET 2.0, you know that you can get it done in a couple of lines.

public class Service : IService

{

public IAsyncResult BeginGetStock(string code, AsyncCallback callback, object asyncState)

{

ExternalStockServiceClient client = new ExternalStockServiceClient();

var myCustomState = new MyCustomState { Client = client, WcfState = asyncState };

return client.BeginGetStockResult(code, callback, myCustomState);

}

public string EndGetStock(IAsyncResult result)

{

MyCustomState state = result.AsyncState as MyCustomState;

using (state.Client)

return state.Client.EndGetStockResult(result);

}

}

But surprisingly this does not work in WCF. You will get this exception on the client when you call EndGetStock:

An error occurred while receiving the HTTP response to http://localhost:8080/Service.svc. This could be due to the service endpoint binding not using the HTTP protocol. This could

also be due to an HTTP request context being aborted by the server (possibly due to the service shutting down). See server logs for more details.

The InnerException is totally useless as well.

The underlying connection was closed: An unexpected error occurred on a receive.

You will find that the EndGetStock method on the service never gets hit although you call it.

It works perfectly fine in the good old ASMX service.

I have done this so many times in creating async webservices. For instance, I

have built an AJAX Proxy Service using ASMX in order to overcome the cross

domain HTTP call restriction in browsers from Javascript. You can read about it

from here

and get the code. The solution that works in WCF is impossibly difficult to

remember and unbelievably head twisting to understand.

WCF async service is awfully complicated

In WCF the traditional async pattern does not work

because, if you study the code carefully, you will see that the code never

hands the asyncState passed to the BeginGetStock method back to WCF. It only tucks it away inside MyCustomState.

public IAsyncResult BeginGetStock(string code, AsyncCallback callback, object asyncState)

{

ExternalStockServiceClient client = new ExternalStockServiceClient();

var myCustomState = new MyCustomState { Client = client, WcfState = asyncState };

return client.BeginGetStockResult(code, callback, myCustomState);

}

The difference between old ASMX and new WCF is that

the asyncState is null in ASMX, but not in

WCF. The asyncState has a very useful object in

it:

As you see the MessageRpc is

there which is responsible for managing the thread that runs the request. So,

it’s absolutely important that we carry this along with the other async calls

so that ultimately the MessageRpc

responsible for the thread running the request gets a chance to call the EndGetStock.

Here’s the code that works in WCF async pattern:

public IAsyncResult BeginGetStock(string code, AsyncCallback wcfCallback, object wcfState)

{

ExternalStockServiceClient client = new ExternalStockServiceClient();

var myCustomState = new MyCustomState

{

Client = client,

WcfState = wcfState,

WcfCallback = wcfCallback

};

var externalServiceCallback = new AsyncCallback(CallbackFromExternalStockClient);

var externalServiceResult = client.BeginGetStockResult(code, externalServiceCallback, myCustomState);

return new MyAsyncResult(externalServiceResult, myCustomState);

}

private void CallbackFromExternalStockClient(IAsyncResult externalServiceResult)

{

var myState = externalServiceResult.AsyncState as MyCustomState;

myState.WcfCallback(new MyAsyncResult(externalServiceResult, myState));

}

public string EndGetStock(IAsyncResult result)

{

var myAsyncResult = result as MyAsyncResult;

var myState = myAsyncResult.CustomState;

using (myState.Client)

return myState.Client.EndGetStockResult(myAsyncResult.ExternalAsyncResult);

}

It’s very hard to understand from the code who is

calling who and how the callbacks get executed. Here’s a sequence diagram that

shows how it works:

Here’s a walkthrough of what’s going on here:

- Client, which can be a WCF proxy, makes a call to the WCF service.
- The WCF runtime (maybe hosted by IIS) picks up the call, gets a thread from the thread pool, and calls BeginGetStock on it, passing a callback and a state object that belong to WCF.
- The BeginGetStock method in Service, which is our own code, makes an async call to StockService, which is another WCF service. However, it does not pass along the WCF callback and state. It passes a new callback, which will be fired back on the Service, and a new state that contains what Service needs to complete its EndGetStock call. The mandatory item is the instance of the StockService, because inside EndGetStock in Service we need to call StockService.EndGetStockResult.
- Once BeginGetStockResult on StockService is called, it starts some async operation and immediately returns an IAsyncResult.
- BeginGetStock on Service receives the IAsyncResult, wraps it inside a MyAsyncResult instance, and returns that to the WCF runtime.
- After some time, when the async operation that StockService started finishes, it fires the callback directly on the Service.
- The callback then fires the WCF callback, which in turn fires EndGetStock on the Service.
- EndGetStock then gets the instance of the StockService from the MyAsyncResult and calls EndGetStockResult on it to get the response. It then returns the response to the WCF runtime.
- The WCF runtime then returns the response to the Client.

It’s very difficult to follow and it’s very difficult

to do it correctly for hundreds of methods. If you have a complex service layer

that calls many other services and hundreds of operations on them, then imagine

writing this much code for each and every operation.

I have come up with a way to reduce the pain using

delegates and some kind of “Inversion of Code” pattern. Here’s what it looks

like when you follow this pattern:

public IAsyncResult BeginGetStock(string code, AsyncCallback wcfCallback, object wcfState)

{

return WcfAsyncHelper.BeginAsync<ExternalStockServiceClient>(

new ExternalStockServiceClient(),

wcfCallback, wcfState,

(service, callback, state) => service.BeginGetStockResult(code, callback, state));

}

public string EndGetStock(IAsyncResult result)

{

return WcfAsyncHelper.EndAsync<ExternalStockServiceClient, string>(result,

(service, serviceResult) => service.EndGetStockResult(serviceResult),

(exception, service) => { throw exception; },

(service) => (service as IDisposable).Dispose());

}

In this approach, all the plumbing work for creating CustomAsyncResult, CustomState

etc, creating a callback function to receive callback from external service and

then fire the EndXXX and then dealing with AsyncResults

and state again in EndXXX, are

totally moved into the generic helper. The amount of code you have to write for

each and every operation you want to make is, I believe, quite reasonable now.

Let’s see what the WcfAsyncHelper

class looks like:

public static class WcfAsyncHelper

{

public static bool IsSync<TState>(IAsyncResult result)

{

return result is CompletedAsyncResult<TState>;

}

public static CustomAsyncResult<TState> BeginAsync<TState>(

TState state,

AsyncCallback wcfCallback, object wcfState,

Func<TState, AsyncCallback, object, IAsyncResult> beginCall)

{

var customState = new CustomState<TState>(wcfCallback, wcfState, state);

var externalServiceCallback = new AsyncCallback(CallbackFromExternalService<TState>);

var externalServiceResult = beginCall(state, externalServiceCallback, customState);

return new CustomAsyncResult<TState>(externalServiceResult, customState);

}

public static CompletedAsyncResult<TState> BeginSync<TState>(

TState state,

AsyncCallback wcfCallback, object wcfState)

{

var completedResult = new CompletedAsyncResult<TState>(state, wcfState);

wcfCallback(completedResult);

return completedResult;

}

private static void CallbackFromExternalService<TState>(IAsyncResult serviceResult)

{

var serviceState = serviceResult.AsyncState as CustomState<TState>;

serviceState.WcfCallback( new CustomAsyncResult<TState>(serviceResult,

serviceState));

}

public static TResult EndAsync<TState,

TResult>(IAsyncResult result,

Func<TState, IAsyncResult, TResult> endCall,

Action<Exception, TState> onException,

Action<TState> dispose)

{

var myAsyncResult = result as CustomAsyncResult<TState>;

var myState = myAsyncResult.CustomState;

try

{

return endCall(myState.State, myAsyncResult.ExternalAsyncResult);

}

catch (Exception x)

{

onException(x, myState.State);

return default(TResult);

}

finally

{

try

{

dispose(myState.State);

}

finally

{

}

}

}

public static TResult EndSync<TState,

TResult>(IAsyncResult result,

Func<TState, IAsyncResult, TResult> endCall,

Action<Exception, TState> onException,

Action<TState> dispose)

{

var myAsyncResult = result as

CompletedAsyncResult<TState>;

var myState = myAsyncResult.Data;

try

{

return endCall(myState, myAsyncResult);

}

catch (Exception x)

{

onException(x, myState);

return default(TResult);

}

finally

{

try

{

dispose(myState);

}

finally

{

}

}

}

}

As you see, BeginAsync and EndAsync have taken over

the plumbing work to deal with CustomState and

CustomAsyncResult. I have made a similar

BeginSync and EndSync,

which you can use in case your BeginXXX call

needs to return a response immediately without making an asynchronous call. For

example, you might have the data cached and you don’t want to make an external

service call to get the data again, instead just return it immediately. In that

case, the BeginSync and EndSync

pair help.
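The helper relies on three small types that are not listed here: CustomState, CustomAsyncResult and CompletedAsyncResult. The following is a minimal sketch of what they could look like, inferred only from how WcfAsyncHelper constructs and reads them; the versions in the download may differ. The key assumption is that AsyncState must return the state object WCF originally passed in, so that WCF can find its own context again.

```csharp
using System;
using System.Threading;

// Carries WCF's callback and state alongside whatever the End method needs later.
public class CustomState<TState>
{
    public CustomState(AsyncCallback wcfCallback, object wcfState, TState state)
    {
        WcfCallback = wcfCallback;
        WcfState = wcfState;
        State = state;
    }
    public AsyncCallback WcfCallback { get; private set; }
    public object WcfState { get; private set; }
    public TState State { get; private set; }
}

// Wraps the external call's IAsyncResult but reports WCF's own state as AsyncState.
public class CustomAsyncResult<TState> : IAsyncResult
{
    public CustomAsyncResult(IAsyncResult externalAsyncResult, CustomState<TState> customState)
    {
        ExternalAsyncResult = externalAsyncResult;
        CustomState = customState;
    }
    public IAsyncResult ExternalAsyncResult { get; private set; }
    public CustomState<TState> CustomState { get; private set; }

    public object AsyncState { get { return CustomState.WcfState; } }
    public WaitHandle AsyncWaitHandle { get { return ExternalAsyncResult.AsyncWaitHandle; } }
    public bool CompletedSynchronously { get { return ExternalAsyncResult.CompletedSynchronously; } }
    public bool IsCompleted { get { return ExternalAsyncResult.IsCompleted; } }
}

// An already-completed result for the BeginSync/EndSync path.
public class CompletedAsyncResult<TState> : IAsyncResult
{
    private readonly ManualResetEvent done = new ManualResetEvent(true);

    public CompletedAsyncResult(TState data, object wcfState)
    {
        Data = data;
        AsyncState = wcfState;
    }
    public TState Data { get; private set; }
    public object AsyncState { get; private set; }
    public WaitHandle AsyncWaitHandle { get { return done; } }
    public bool CompletedSynchronously { get { return true; } }
    public bool IsCompleted { get { return true; } }
}

public static class Program
{
    public static void Main()
    {
        object wcfState = "state handed in by WCF";   // stand-in for WCF's real state object
        var state = new CustomState<string>(null, wcfState, "client");
        var inner = new CompletedAsyncResult<string>("cached", wcfState);
        var wrapped = new CustomAsyncResult<string>(inner, state);

        // The wrapper exposes WCF's state, not the custom state.
        Console.WriteLine(ReferenceEquals(wrapped.AsyncState, wcfState));
    }
}
```

Delegating the wait handle and completion flags to the external IAsyncResult keeps the wrapper passive: it adds the WCF state and nothing else.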

An Async AJAX Proxy made in WCF using Async Pattern

If you want to get data from external domains directly

from javascript, you need an AJAX proxy. The javascript hits the AJAX proxy

passing the URL it eventually needs to hit and the proxy then transmits the

data from the requested URL. This way you can overcome the cross-domain XmlHttp call restriction in browsers. This is needed in most

of the Web 2.0 start pages like My Yahoo, iGoogle, my previous startup – Pageflakes and so on. You can see AJAX

proxy in use from my open source Web 2.0 start page project Dropthings.

Here’s the code of a WCF AJAX proxy that can get data

from an external URL and return it. It also supports caching the data. So, once

it fetches the data from external domain, it then caches it. The proxy is fully

streaming. This means the proxy does not first download the entire response,

then cache it, and then return it. Doing that would make the delay almost twice that of

hitting the external URL directly from the browser, if that were possible. The code I

have here will directly stream the response from the external URL and it will

also cache the response on the fly.

First is the BeginGetUrl

function that receives the call and opens an HttpWebRequest

on the requested URL. It calls BeginGetResponse

on HttpWebRequest and returns.

[ServiceBehavior(InstanceContextMode=InstanceContextMode.PerCall, ConcurrencyMode=ConcurrencyMode.Multiple), AspNetCompatibilityRequirements(RequirementsMode= AspNetCompatibilityRequirementsMode.Allowed), ServiceContract]

public partial class AsyncService

{

[OperationContract(AsyncPattern=true)]

[WebGet(BodyStyle=WebMessageBodyStyle.Bare)]

public IAsyncResult BeginGetUrl(string url, int cacheDuration, AsyncCallback wcfCallback, object wcfState)

{

if (IsInCache(url))

{

return WcfAsyncHelper.BeginSync<WebRequestState>(new WebRequestState

{

Url = url,

CacheDuration = cacheDuration,

ContentType = WebOperationContext.Current.IncomingRequest.ContentType,

},

wcfCallback, wcfState);

}

else

{

HttpWebRequest request = WebRequest.Create(url) as HttpWebRequest;

request.Method = "GET";

return WcfAsyncHelper.BeginAsync<WebRequestState>(

new WebRequestState

{

Request = request,

ContentType = WebOperationContext.Current.IncomingRequest.ContentType,

Url = url,

CacheDuration = cacheDuration,

},

wcfCallback, wcfState,

(myState, externalServiceCallback, customState) =>

myState.Request.BeginGetResponse(externalServiceCallback, customState));

}

}

First it checks if the requested URL is already in

cache. If it is, then it will return the response synchronously from cache.

That’s where the BeginSync and EndSync come in handy. If data is not in cache, then it will

make an HTTP request to the requested URL.

When the response arrives, the EndGetUrl

gets fired.

public Stream EndGetUrl(IAsyncResult asyncResult)

{

if (WcfAsyncHelper.IsSync<WebRequestState>(asyncResult))

{

return WcfAsyncHelper.EndSync<WebRequestState, Stream>(

asyncResult, (myState, completedResult) =>

{

CacheEntry cacheEntry = GetFromCache(myState.Url);

var outResponse = WebOperationContext.Current.OutgoingResponse;

SetResponseHeaders(cacheEntry.ContentLength, cacheEntry.ContentType,

cacheEntry.ContentEncoding,

myState, outResponse);

return new MemoryStream(cacheEntry.Content);

},

(exception, myState) => { throw new ProtocolException(exception.Message, exception); },

(myState) => { });

}

else

{

return WcfAsyncHelper.EndAsync<WebRequestState, Stream>(asyncResult,

(myState, serviceResult) =>

{

var httpResponse = myState.Request.EndGetResponse(serviceResult) as HttpWebResponse;

var outResponse = WebOperationContext.Current.OutgoingResponse;

SetResponseHeaders(httpResponse.ContentLength,

httpResponse.ContentType, httpResponse.ContentEncoding,

myState, outResponse);

if (myState.CacheDuration > 0)

return new StreamWrapper(httpResponse.GetResponseStream(),

(int)(outResponse.ContentLength > 0 ? outResponse.ContentLength : 8 * 1024),

buffer =>

{

StoreInCache(myState.Url, myState.CacheDuration, new CacheEntry

{

Content = buffer,

ContentLength = buffer.Length,

ContentEncoding = httpResponse.ContentEncoding,

ContentType = httpResponse.ContentType

});

});

else

return httpResponse.GetResponseStream();

},

(exception, myState) => { throw new ProtocolException(exception.Message, exception); },

(myState) => { });

}

}

Here it checks if the request is completed

synchronously because the URL was already cached. If it’s completed

synchronously then it returns the response directly from cache. Otherwise it

reads the data of the HttpWebResponse

and returns the data as a stream.

I have created a StreamWrapper

which not only returns the data from the original stream, it also stores the

buffers in a MemoryStream so that the data can

be cached while the data is read from the original stream. Thus it adds no

delay in first downloading the entire data and then caching it and only then

sending it back to the client. Both returning the response and caching the

response happens in a single pass.
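The single-pass idea can be tried outside WCF with a small self-contained stream. This is a minimal sketch under a hypothetical name, `TeeReadStream`, and not the StreamWrapper from the download: it fills in the Stream members needed to compile, and it adds a guard (my own addition) so the completion callback fires only once.

```csharp
using System;
using System.IO;

// Read-only stream that copies every byte it hands out into an in-memory buffer.
public class TeeReadStream : Stream
{
    private readonly Stream source;
    private readonly MemoryStream copy = new MemoryStream();
    private readonly Action<byte[]> onCompleteRead;
    private bool completed;

    public TeeReadStream(Stream source, Action<byte[]> onCompleteRead)
    {
        this.source = source;
        this.onCompleteRead = onCompleteRead;
    }

    public override int Read(byte[] buffer, int offset, int count)
    {
        int bytesRead = source.Read(buffer, offset, count);
        if (bytesRead > 0)
        {
            copy.Write(buffer, offset, bytesRead);   // keep a copy while streaming
        }
        else if (!completed && count > 0)
        {
            completed = true;                        // end of stream: report once
            onCompleteRead(copy.ToArray());
        }
        return bytesRead;
    }

    public override bool CanRead { get { return true; } }
    public override bool CanSeek { get { return false; } }
    public override bool CanWrite { get { return false; } }
    public override long Length { get { throw new NotSupportedException(); } }
    public override long Position
    {
        get { throw new NotSupportedException(); }
        set { throw new NotSupportedException(); }
    }
    public override void Flush() { }
    public override long Seek(long offset, SeekOrigin origin) { throw new NotSupportedException(); }
    public override void SetLength(long value) { throw new NotSupportedException(); }
    public override void Write(byte[] buffer, int offset, int count) { throw new NotSupportedException(); }

    protected override void Dispose(bool disposing)
    {
        if (disposing) { source.Dispose(); copy.Dispose(); }
        base.Dispose(disposing);
    }
}

public static class Program
{
    public static void Main()
    {
        byte[] cached = null;
        var payload = new byte[] { 1, 2, 3, 4, 5 };   // stand-in for an HTTP response body
        using (var tee = new TeeReadStream(new MemoryStream(payload), bytes => cached = bytes))
        {
            var buffer = new byte[2];
            while (tee.Read(buffer, 0, buffer.Length) > 0) { }   // the consumer drains the stream
        }
        Console.WriteLine(cached.Length);   // number of bytes captured for the cache
    }
}
```

The consumer reads at its own pace and the cache copy is complete at the moment the source reports end of stream, so no second pass over the data is needed.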

public class StreamWrapper : Stream, IDisposable

{

private Stream WrappedStream;

private Action<byte[]> OnCompleteRead;

private MemoryStream InternalBuffer;

public StreamWrapper(Stream stream, int internalBufferCapacity, Action<byte[]> onCompleteRead)

{

this.WrappedStream = stream;

this.OnCompleteRead = onCompleteRead;

this.InternalBuffer = new MemoryStream(internalBufferCapacity);

}

.

.

.

public override int Read(byte[] buffer, int offset, int count)

{

int bytesRead = this.WrappedStream.Read(buffer, offset, count);

if (bytesRead > 0)

this.InternalBuffer.Write(buffer, offset, bytesRead);

else

this.OnCompleteRead(this.InternalBuffer.ToArray());

return bytesRead;

}

public new void Dispose()

{

this.WrappedStream.Dispose();

}

}

Time to load test this and prove it really scales.

It does not scale at all!

I did a load test to compare the improvement of the

async implementation over a sync implementation. The sync implementation is

simple, it just uses WebClient to

get the data from given URL. I made a console app which will launch 50 threads

and hit the service using 25 threads and use the other 25 threads to hit some

ASPX page to make sure the ASP.NET site is functional while the service call is

going on. The service is hosted on another server and it is intentionally made

to respond very slowly, taking 6 seconds to complete the request. The load test

is done using three HP ProLiant BL460c G1 Blade servers, 64 bit hardware, 64

bit Windows 2008 Enterprise Edition.

The client side code launches 50 parallel threads and

then hits the service first so that the threads get exhausted running the long

running service. While the service requests are executing, it launches another

set of threads to hit some ASPX page. If the page responds timely, then we have

overcome the threading problem and the WCF service is truly async. If it does

not, then we haven’t solved the problem.

static TimeSpan HitService(string url,

string responsivenessUrl,

int threadCount,

TimeSpan[] serviceResponseTimes,

TimeSpan[] aspnetResponseTimes,

out int slowASPNETResponseCount,

string logPrefix)

{

Thread[] threads = new Thread[threadCount];

var serviceResponseTimesCount = 0;

var aspnetResponseTimesCount = 0;

var slowCount = 0;

var startTime = DateTime.Now;

var serviceThreadOrderNo = 0;

var aspnetThreadOrderNo = 0;

for (int i = 0; i < threadCount/2; i++)

{

Thread aThread = new Thread(new ThreadStart(() => {

using (WebClient client = new WebClient())

{

try

{

var start = DateTime.Now;

Console.WriteLine("{0}\t{1}\t{2} Service call Start", logPrefix, serviceThreadOrderNo,

Thread.CurrentThread.ManagedThreadId);

var content = client.DownloadString(url);

var duration = DateTime.Now - start;

lock (serviceResponseTimes)

{

serviceResponseTimes[serviceResponseTimesCount++] = duration;

Console.WriteLine("{0}\t{1}\t{2} End Service call. Duration: {3}",

logPrefix, serviceThreadOrderNo,

Thread.CurrentThread.ManagedThreadId, duration.TotalSeconds);

serviceThreadOrderNo++;

}

}

catch (Exception x)

{

Console.WriteLine(x);

}

}

}));

aThread.Start();

threads[i] = aThread;

}

Thread.Sleep(500);

for (int i = threadCount / 2; i < threadCount; i ++)

{

Thread aThread = new Thread(new ThreadStart(() => {

using (WebClient client = new WebClient())

{

try

{

var start = DateTime.Now;

Console.WriteLine("{0}\t{1}\t{2} ASP.NET Page Start", logPrefix, aspnetThreadOrderNo,

Thread.CurrentThread.ManagedThreadId);

var content = client.DownloadString(responsivenessUrl);

var duration = DateTime.Now - start;

lock (aspnetResponseTimes)

{

aspnetResponseTimes[aspnetResponseTimesCount++] = duration;

Console.WriteLine("{0}\t{1}\t{2} End of ASP.NET Call. Duration: {3}",

logPrefix, aspnetThreadOrderNo,

Thread.CurrentThread.ManagedThreadId, duration.TotalSeconds);

aspnetThreadOrderNo++;

}

if (serviceResponseTimesCount > 0)

{

Console.WriteLine("{0} WARNING! ASP.NET requests running slower than service.", logPrefix);

slowCount++;

}

}

catch (Exception x)

{

Console.WriteLine(x);

}

}

}));

aThread.Start();

threads[i] = aThread;

}

foreach (Thread thread in threads)

thread.Join();

var endTime = DateTime.Now;

var totalDuration = endTime - startTime;

Console.WriteLine(totalDuration.TotalSeconds);

slowASPNETResponseCount = slowCount;

return totalDuration;

}

When I do this for the sync service, it demonstrates

the expected behaviour, ASP.NET page takes longer than the service calls to

execute because ASP.NET requests aren’t getting chance to run.

[SYNC] 15 116 End of ASP.NET Call. Duration: 10.5145348

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 16 115 End of ASP.NET Call. Duration: 10.530135

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 17 114 End of ASP.NET Call. Duration: 10.5457352

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 12 142 End Service call. Duration: 142

[SYNC] 18 112 End of ASP.NET Call. Duration: 10.608136

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 19 113 End of ASP.NET Call. Duration: 10.608136

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 20 111 End of ASP.NET Call. Duration: 11.0293414

[SYNC] WARNING! ASP.NET requests running slower than service.

[SYNC] 21 109 End of ASP.NET Call. Duration: 11.0605418

When I run the test for the async service, surprisingly, it’s the same thing:

[ASYNC] 13 134 End of ASP.NET Call. Duration: 12.0745548

[ASYNC] WARNING! ASP.NET requests running slower than service.

[ASYNC] 14 135 End of ASP.NET Call. Duration: 12.090155

[ASYNC] WARNING! ASP.NET requests running slower than service.

[ASYNC] 15 136 End of ASP.NET Call. Duration: 12.1057552

[ASYNC] WARNING! ASP.NET requests running slower than service.

[ASYNC] 14 111 End Service call. Duration: 111

[ASYNC] 15 110 End Service call. Duration: 110

[ASYNC] 16 137 End of ASP.NET Call. Duration: 12.5737612

[ASYNC] WARNING! ASP.NET requests running slower than service.

[ASYNC] 17 138 End of ASP.NET Call. Duration: 12.5893614

[ASYNC] WARNING! ASP.NET requests running slower than service.

[ASYNC] 18 139 End of ASP.NET Call. Duration: 12.6049616

The statistics show that we have the same number of

slow ASP.NET page executions and there’s really no visible difference in

performance or scalability between the sync and the async version.

Regular service slow responses: 25

Async service slow responses: 25

Regular service average response time: 19.39416864

Async service average response time: 18.5408377

Async service is 4.39993564993564% faster.

Regular ASP.NET average response time: 10.363836868

Async ASP.NET average response time: 10.4503867776

Async ASP.NET is 0.828198146555863% slower.

Async 95%ile Service Response Time: 14.5705868

Async 95%ile ASP.NET Response Time: 12.90994551

Regular 95%ile Service Response Time: 15.54793933

Regular 95%ile ASP.NET Response Time: 13.06594751

95%ile ASP.NET Response time is better for Async by 1.19395856963763%

95%ile Service Response time is better for Async by 6.28605829528921%

There’s really no significant difference. The slight

difference we see may well be due to network hiccups or just some threading

delay. It’s not something that really shows us that there’s significant

improvement.
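The percentages in the report can be reproduced from the averages. The sketch below assumes “X% faster” means the difference divided by the regular (sync) figure, which matches the roughly 4.4% reported above for the service averages. The percentile uses a nearest-rank definition, which is an assumption; the test client may compute it differently. `LoadTestStats` and the sample values are hypothetical.

```csharp
using System;
using System.Linq;

public static class LoadTestStats
{
    // Improvement of the async figure relative to the regular (sync) figure, in percent.
    public static double PercentFaster(double regular, double asyncValue)
    {
        return (regular - asyncValue) / regular * 100.0;
    }

    // Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
    public static double Percentile(double[] values, double percentile)
    {
        var sorted = values.OrderBy(v => v).ToArray();
        int rank = (int)Math.Ceiling(percentile * sorted.Length / 100.0);
        return sorted[Math.Max(rank, 1) - 1];
    }

    public static void Main()
    {
        // Average service response times taken from the report above.
        double faster = PercentFaster(19.39416864, 18.5408377);
        Console.WriteLine("Async service is {0:F2}% faster", faster);

        // Illustrative sample durations in seconds, not measurements from the test.
        var samples = new double[] { 12.1, 10.5, 11.0, 10.6, 12.6 };
        Console.WriteLine("95th percentile: {0}", Percentile(samples, 95));
    }
}
```

A difference of this size on a 19-second average is well within what run-to-run noise can produce, which is consistent with the conclusion that the async version brought no real gain here.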

However, when I do this for an async ASMX service, the

good old ASP.NET 2.0 ASMX service, the result is as expected. There’s no delay in

ASP.NET request execution no matter how many service calls are in progress. The

scalability improvement is significant. At the 95th percentile, service response time is 33% faster and,

most importantly, the responsiveness of the ASP.NET web pages is over 73%

better.

Regular service slow responses: 25

Async service slow responses: 0

Regular service average response time: 23.25053808

Async service average response time: 13.90445826

Async service is 40.1972624798712% faster.

Regular ASP.NET average response time: 11.2884919224

Async ASP.NET average response time: 2.211796356

Async ASP.NET is 80.4066267557752% faster.

Async 95%ile Service Response Time: 14.1493814

Async 95%ile ASP.NET Response Time: 3.7596482

Regular 95%ile Service Response Time: 21.2318722

Regular 95%ile ASP.NET Response Time: 14.06436031

95%ile ASP.NET Response time is better for Async by 73.2682602185126%

95%ile Service Response time is better for Async by 33.3578251285819%

So, does this mean all the articles we read about WCF

supporting Async, making your services more scalable for long running calls,

are all lies?

I thought I must have written the client wrong. So, I

used HP Performance Center to run some load test to see how the sync and async

services compare. I have used 50 vusers to hit the service and another 50

vusers to hit the ASPX page at the same time. After running the test for 15

minutes, the result is the same, both sync and async implementation of the WCF

service is showing the same result. This means there’s no improvement in the

async implementation.

Figure: Async service load test result

Figure: Sync service load test result

As you can see, both perform more or less the same.

This means WCF, off the shelf, does not support async service.

Reading some

MSDN blog posts, I get the confirmation. By default, WCF has a synchronous

HTTP handler that holds the ASP.NET thread until the WCF request completes. In

fact it’s worse than good old ASMX when WCF is hosted on IIS because it uses

two threads per request. Here’s what the blog says:

In .NET 3.0 and 3.5, there

is a special behavior that you would observe for IIS-hosted WCF services.

Whenever a request comes in, the system would use two threads to process the

request:

One thread is the CLR ThreadPool thread

which is the worker thread that comes from ASP.NET.

Another thread is an I/O thread that is

managed by the WCF IOThreadScheduler (actually created by ThreadPool.UnsafeQueueNativeOverlapped).

The blog post gives you a hack where you can

implement a custom HttpModule of

your own, which replaces the default sync HttpHandler for

processing WCF request with an Async HttpHandler. As

per the blog, it’s supposed to support asynchronous processing of WCF requests

without holding the ASP.NET thread. After implementing the hack and running the

load test, the result is the same, the hack also does not work.

Figure: Load test report after implementing the custom HttpModule

I have successfully installed the HttpModule by

configuring the module in the web.config:

<system.webServer>

<validation validateIntegratedModeConfiguration="false"/>

<httpErrors errorMode="Detailed">

</httpErrors>

<modules>

<remove name="ServiceModel"/>

<add name="MyAsyncWCFHttpModule" type="AsyncServiceLibrary.MyHttpModule" preCondition="managedHandler" />

</modules>

</system.webServer>

But still no improvement.

Is that end of the road? No, .NET 3.5 SP1 fixed the

problem.

The final solution that really works

Finally, I found the

MSDN post which confirms in .NET 3.5 SP1, Microsoft has released Async

implementation of Http Handlers. Using a

tool, you can install the async handlers on IIS to be the default and only

then the WCF async services truly become async.

Besides the existing synchronous WCF HTTP

Module/Handler types, WCF introduced the asynchronous versions. Now there are

four types of HTTP modules and handlers in total:

Synchronous Module: System.ServiceModel.Activation.HttpModule, System.ServiceModel, Version=3.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089

Asynchronous Module (new): System.ServiceModel.Activation.ServiceHttpModule,

System.ServiceModel, Version=3.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089

Synchronous Handler: System.ServiceModel.Activation.HttpHandler, System.ServiceModel, Version=3.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089

Asynchronous Handler (new): System.ServiceModel.Activation.ServiceHttpHandlerFactory, System.ServiceModel, Version=3.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089

The following command installs the async HTTP modules and sets the throttle to 200, which means no more than 200 requests will be queued. This ensures async requests do not queue up indefinitely and leave the server short of memory.

WcfAsyncWebUtil.exe /ia /t 200

Once the tool has been run and I rerun my test client, I can see a dramatic improvement in response times.

| Metric | After the tool is run | Before the tool is run |
|---|---|---|
| Regular service slow responses | 0 | 25 |
| Async service slow responses | 0 | 25 |
| Regular service average response time | 16.46133104 | 19.39416864 |
| Async service average response time | 12.45831972 | 18.5408377 |
| Regular ASP.NET average response time | 2.1384130152 | 10.363836868 |
| Async ASP.NET average response time | 2.214292388 | 10.4503867776 |
| Async 95%ile Service Response Time | 7.1448916 | 14.5705868 |
| Async 95%ile ASP.NET Response Time | 3.62782651 | 12.90994551 |
| Regular 95%ile Service Response Time | 15.32017641 | 15.54793933 |
| Regular 95%ile ASP.NET Response Time | 3.30022231 | 13.06594751 |

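After running the tool, WCF service responsiveness is up to 2x better (the async service's 95th-percentile response time drops from about 14.6 to about 7.1) and ASP.NET page responsiveness is roughly 4x better.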

The load test report also confirms significant

improvement:

Figure: Transactions per second improved by 2x after the WCF tool is run

Figure: Average response time for both the service and

the ASP.NET page is nearly half after the WCF tool is run.

So, this appears to prove that WCF supports async services as expected and that .NET 3.5 SP1 has made a dramatic improvement in supporting true async services.

Wait, it is showing the same improvement for both sync and async!

You are right. It did not just make async better; it made sync better as well, and both show the same improvement. The reason is that the tool just increases the number of threads available to serve requests. It does not make async services any better than sync ones. You should definitely use the tool to increase the capacity of your server if you find the server is not giving its best. But it does not solve the original problem: async services are not giving significantly better results than sync services.

So, I exchanged some emails with Dustin from the WCF team at Microsoft. Here is how he explained it:

Hi Omar,

I think this may help to illustrate what’s

happening in your test.

First, in Wenlong’s blog post WCF Request Throttling and Server Scalability, he talks

about why there are two threads per request: one worker thread and one IO

thread. Your test illustrates this well and it’s visible in the profile above.

There is a highlighted thread (4364) which I’m

showing in particular. This is a worker thread pool thread. It is waiting on

HostedHttpRequestAsyncResult.ExecuteSynchronous as highlighted in the stack

trace. You can also tell that this is a worker thread pool thread by the three

sleeps of 1 second each that happen as soon as that thread is available. What

you can also see is that this worker thread is unblocked by a thread

immediately below it (3852).

The interesting thing about thread 3852 is that

it does not start at the same time as 4364. The reason for this is because

you’re using the async pattern in WCF. However, once the back end service

(Echo) starts returning data, an IO thread is grabbed from the IO thread pool

and used to read the data coming back. Since your back end is streamed and has

many pauses in there to return the data, it takes a long time to get the whole

thing. Thus, an IO thread is blocked.

If you were to use the synchronous pattern with

WCF, you would notice that the IO thread is blocked for the entire time that

the worker thread is blocked. But it ends up not making much of a difference

because of the fact that an IO thread is going to be blocked for a long period

of time anyway in either the sync or async case.

When using the async pattern, the worker thread

pool will become a bottleneck. If you simply increase the minWorkerThreads on

the processModel, all the ASP.NET

requests will go through (on worker threads) before the WCF async requests,

which is what I assume you’re trying to do with your test code.

Now, let’s say you switch to .Net 4.0. Now you

don’t have the same problem of using two threads per request. Here’s a profile

using .Net 4.0 just on the middle tier:

Notice how 7 of the ASP.NET requests are all happening at the same time (shown

as 1 second sleeps with blue color). There are three 1 second sleeps that

happen just a bit later and this is because there was not enough worker

threads available. You’ll also notice the IO threads by the distinctive purple

color, which is indicating waiting for IO. Because the Echo service on the back

end is streaming and has pauses in it, the WCF requests have to block a thread

while receiving the data. I’m running with default settings and only 2 cores so

the default min IO thread count is 2 (and one of those is taken by the timer

thread). The rest of the service calls have to wait for new IO threads to be

created. Again, this is showing the WCF async case. If you were to switch to

the sync case, it wouldn’t be much different because it would block an IO

thread, but just for less time.

I hope this helps explain what you’re seeing in

your test.

Thanks

Dustin

But what puzzled me was this: if WCF 3.5 supports async services, and the async HTTP modules are installed, why do we still have the same scalability problem? What makes WCF 4.0 perform so much better than WCF 3.5?

So, I tried various combinations of the minWorkerThreads, maxWorkerThreads, minIOThreads, maxIOThreads, minFreeThreads, and minLocalRequestFreeThreads settings to see whether the async HTTP module available in WCF 3.5 SP1 really makes any difference. Here are my findings:

| Threads | maxWorkerThreads | maxIOThreads | minWorkerThreads | minIOThreads | minFreeThreads | minLocalRequestFreeThreads | ASP.NET Better | Service Better |
|---|---|---|---|---|---|---|---|---|
| 100 | 200 | 200 | 40 | 30 | 20 | 20 | -1.49% | 66% |
| 100 | 100 | 100 | 40 | 30 | 352 | 304 | 0% | 66% |
| 100 | auto | auto | auto | auto | auto | auto | 0% | 66% |
| 100 | 100 | 100 | 50 | 50 | 352 | 304 | -7% | 66% |
| 300 | auto | auto | auto | auto | auto | auto | 1.26% | 37% |
| 300 | 200 | 200 | 40 | 30 | 20 | 20 | 0% | 38% |
| 300 | 200 | 200 | 40 | 30 | 352 | 304 | 0% | 39% |
| 300 | 100 | 100 | 40 | 30 | 352 | 304 | 0% | 39% |
| 300 | 100 | 100 | 40 | 30 | 20 | 20 | | |

It makes no difference.

So back to Dustin:

Hi Omar,

So I

went through your example more thoroughly and I believe I’ve figured out what

was causing you problems. Here is an example output from a test run I did of

your sample with some modifications:

Regular service slow responses: 0

Async service slow responses: 0

Regular service average response time: 11.6486576

Async service average response time: 2.7771104

Async service is 76.1593953967709% faster.

Regular ASP.NET average response time: 1.018747336

Async ASP.NET average response time: 1.016686512

Async ASP.NET is 0.202290001374735% faster.

Async 95%ile Service Response Time: 2.55532172

Async 95%ile ASP.NET Response Time: 1.03106226

Regular 95%ile Service Response Time: 11.41521426

Regular 95%ile ASP.NET Response Time: 1.03451364

95%ile ASP.NET Response time is better for Async by 0.333623440673065%

95%ile Service Response time is better for Async by 77.6147721646006%

This

was a run using 100 threads. The full log is attached. There were a number of

things that I took note of that were preventing you from seeing a difference

between async and sync:

- The most important one was the

backend service Echo.ashx. Even if ASP.NET was letting all the requests

through, because this was doing a number of Thread.Sleep()s, it was blocking

worker threads. The worker threads ramp up at 2 per second so if you throw 50

requests at it all at once, you’re going to have to wait. Whether you used

async or not in the middle tier was not going to make a difference.

- You have a 10 second sleep between

async and sync. This actually skews the results in sync’s favor. The threads

that were created to handle the async work will not die due to inactivity for

at least 15 seconds. I increased this sleep to 30 seconds to make things fair.

- Your Echo.ashx begins returning data after 1 second and then takes another 1.5 seconds to finish returning data. This blocks an IO thread in the middle tier, which leads to two problems:

  - If the minIoThread count is not high enough, then you’ll still be waiting for the 2 per second gate to allow you to have more threads to process the simultaneous work.

  - Because of the bug mentioned here, the minIoThread setting may not have an effect over time.

In

order to get your test working properly I made a number of changes:

- Increased the minWorkerThreads on

the backend Echo.ashx service so that it could handle all the traffic

simultaneously.

- Increased the minWorkerThreads on

the middle tier service so that it could handle all the ASP.NET requests

simultaneously.

- Used the workaround from the blog

post above to use SynchronizationContext to move the work coming in on the

AsyncService in the middle tier from the IO thread pool to the worker thread

pool. If everything goes according to plan, all the ASP.NET requests should

finish before the Echo.ashx starts returning data, so there should be plenty of

worker threads available.

I did

not make changes to minFreeThreads or minLocalRequestFreeThreads.

Please

let me know if there is anything that is unclear.

Thanks

Dustin

So, we have an undocumented behavior in WCF's IO thread pool management. The problem is that threads are slow to start up: they are added gradually, even if you suddenly queue 100 work items in the thread pool. You can see this from the performance counters. If you watch the thread count of the w3wp process, you see a slow ramp-up:

WCF uses the .Net CLR I/O Completion Port thread pool

for executing your WCF service code. The problem is encountered when the .Net

CLR IO Completion Port thread pool enters a state where it cannot create

threads quickly enough to immediately handle a burst of requests. The response

time increases unexpectedly as new threads are created at a rate of 1 per

500ms.
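A minimal sketch for observing this kind of gradual thread injection on your own machine follows. It is an illustration under stated assumptions, not the WCF code path: it queues blocking work on the CLR worker pool through ThreadPool.QueueUserWorkItem rather than on WCF's I/O completion threads, the class and method names are made up for this example, and it needs .NET 4.0 or later for CountdownEvent.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper, not part of the article's sample code.
public static class ThreadPoolRampUpDemo
{
    // Queues `burst` work items that each block a pool thread for `blockMs`
    // milliseconds, and returns the elapsed milliseconds until every item
    // has started running on some thread.
    public static long MeasureBurstStartLatency(int burst, int blockMs)
    {
        var stopwatch = Stopwatch.StartNew();
        using (var allStarted = new CountdownEvent(burst))
        {
            for (int i = 0; i < burst; i++)
            {
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    allStarted.Signal();    // this item has been given a thread
                    Thread.Sleep(blockMs);  // simulate a blocking call
                });
            }

            // Returns only once the pool has found a thread for every item.
            allStarted.Wait();
            return stopwatch.ElapsedMilliseconds;
        }
    }
}
```

Calling `ThreadPoolRampUpDemo.MeasureBurstStartLatency(100, 3000)` and comparing it with a burst at or below the pool's minimum thread count gives a rough feel for the ramp-up delay. The exact numbers depend on the runtime version, the configured minimums, and the number of cores.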

The most obvious thing to

do then would be to increase the minimum number of IO threads. You can do this

in two ways: use ThreadPool.SetMinThreads or use the <processModel> tag

in the machine.config. Here is how to do the latter:

<system.web>
  <processModel
    autoConfig="false"
    minIoThreads="101"
    minWorkerThreads="2"
    maxIoThreads="200"
    maxWorkerThreads="40"
  />
</system.web>

Be sure to turn off the autoConfig setting, or the other options will be ignored. If we run this test again, we get a much better result. Compare the previous perfmon snapshot with this one:

But if you keep the load test running for a while, you

will see it goes back to the old behaviour:

So, there is really no way to fix this problem by tweaking configuration settings. This corroborates my earlier experiments, where various combinations of thread pool settings made no difference.
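For completeness, the programmatic option mentioned earlier, ThreadPool.SetMinThreads, can be sketched as follows. This is only an illustration: the class and method names are hypothetical. It targets the same thread pool minimum as the minIoThreads setting, so there is no particular reason to expect it to escape the behaviour described above under sustained load.

```csharp
using System;
using System.Threading;

// Hypothetical helper, not part of the article's sample code.
public static class ThreadPoolMinimums
{
    // Raises the minimum number of I/O completion threads to at least
    // `minIoThreads`, leaving the worker-thread minimum unchanged.
    // Returns true if the minimum is already high enough or the pool
    // accepted the new value.
    public static bool EnsureMinIoThreads(int minIoThreads)
    {
        int workerMin, ioMin;
        ThreadPool.GetMinThreads(out workerMin, out ioMin);

        if (ioMin >= minIoThreads)
        {
            return true; // nothing to do
        }

        // SetMinThreads returns false if a value is negative or exceeds
        // the configured maximum for that kind of thread.
        return ThreadPool.SetMinThreads(workerMin, minIoThreads);
    }
}
```

In an IIS-hosted application, a call such as `ThreadPoolMinimums.EnsureMinIoThreads(101)` would typically go in application startup code, for example Application_Start in Global.asax.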

So, Dustin recommended the custom SynchronizationContext implementation by Juval Lowy. Using it, you can move requests from the WCF IO thread pool to the CLR worker thread pool, which does not have this problem. The solution for making WCF execute requests on the CLR worker thread pool is documented here: http://support.microsoft.com/kb/2538826
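The core of that idea can be sketched as below. This is a simplified illustration and not the code from the KB article: the class name is hypothetical, and the WCF wiring is left out. In a real service, an instance would be assigned to the DispatchRuntime.SynchronizationContext property from a custom service or contract behavior, so that WCF posts incoming calls to it.

```csharp
using System;
using System.Threading;

// Hypothetical name; a sketch of a context that hands every callback
// to the CLR worker thread pool instead of running it on the calling
// (I/O completion) thread.
public sealed class WorkerPoolSynchronizationContext : SynchronizationContext
{
    // Asynchronous dispatch: queue the callback on a worker pool thread.
    public override void Post(SendOrPostCallback d, object state)
    {
        ThreadPool.QueueUserWorkItem(s => d(s), state);
    }

    // Synchronous dispatch: run the callback on the calling thread.
    public override void Send(SendOrPostCallback d, object state)
    {
        d(state);
    }

    public override SynchronizationContext CreateCopy()
    {
        return new WorkerPoolSynchronizationContext();
    }
}
```

Because Post returns immediately, the I/O thread that delivered the request is released as soon as the work has been queued, and the service operation itself runs on a worker thread.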

Conclusion

WCF itself supports the async pattern everywhere, and it can be used to build faster and more scalable web services than ASMX. It is the default installation of WCF that is not configured to support the async pattern properly. So, to enjoy the benefits of WCF at its best, you need to tweak the IIS configuration by running the tool and apply the IO thread pool fix.