Introduction

People who want to trim video and audio usually go looking for a utility that can do it. But what about implementing this task programmatically? This article shows how it looks from the programmer's side. First, the platform and the media interfaces: we use Win32 and DirectShow, the core Windows media technology. We also need the «K-Lite Codec Pack» installed, together with Media Player Classic, which is used to play the media files.

Our application consists of two parts:

- A DirectShow Transform Filter, which actually does the necessary media splitting

- A Console Application, which demonstrates how this filter can be used

The code shown in this article illustrates the idea behind the process and is not a complete application. However, it can serve as a solid foundation for popular application types such as media (video and audio) splitters.

Background

Let's look at DirectShow transform filters: what are they? A transform filter lets a programmer manipulate the media samples that pass through it. So what do we need to do to exclude specified intervals from a media stream? The first answer is to discard every sample we don't want to see in the output. If we stop there, the output file will lack the specified intervals, but each cut will be filled with the last sample played before the cut started, which is not the result we want. To avoid this, we must not only drop the time blocks from the stream but also correct the time stamps of the following samples, so that they continue from the point where the cut was made. The samples then pass through our filter without pauses, and no break effects appear. The same rule applies to every excluded interval: each following block of samples is moved back by the total duration of all the intervals cut before it. The application code shows this in more detail.
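The rule above can be expressed as a mapping from original times to output times. The following is a minimal, DirectShow-free sketch, assuming the cut intervals are sorted, non-overlapping, half-open `[start, end)` and given in 100-ns units; the names `Cut` and `RemapSampleTime` are hypothetical and not part of the filter. A time inside a cut is dropped, and any later time is moved back by the total length of the cuts that precede it.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical type: one excluded interval [start, end) in 100-ns units,
// the unit DirectShow uses for reference times.
struct Cut {
    std::int64_t start;
    std::int64_t end;
};

// Maps an original time to its output time.
// Returns false if the time lies inside a cut (the sample would be dropped).
// Assumes the cuts are sorted by start time and do not overlap.
bool RemapSampleTime(const std::vector<Cut>& cuts, std::int64_t t,
                     std::int64_t* outTime) {
    std::int64_t removed = 0;  // total length of the cuts already passed
    for (const Cut& c : cuts) {
        assert(c.start <= c.end);
        if (t < c.start)
            break;                   // this cut and all later ones lie ahead of t
        if (t < c.end)
            return false;            // t is inside [start, end): drop it
        removed += c.end - c.start;  // cut passed: shift back by its length
    }
    *outTime = t - removed;
    return true;
}
```

With cuts at 1-3 s and 5-9 s, a time of 4 s maps to 2 s and a time of 10 s maps to 4 s. A real filter works on whole samples rather than single instants, so the shift it applies can differ from the nominal cut length by up to one sample duration.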

Using the Code

To run the demo, copy the chicken.wmv file to C:\. This is necessary because rendering uses a manually built graph (Graph.GRF, loaded from the current working directory), which reads its input from that fixed location. We don't build the graph in code because this is a demo application aimed at the core principles rather than at every need of a real DirectShow application. Next, register the filter in your system with a shell command such as regsvr32 DShowMediaSplitFilt.ax. Then start Demo.exe; when it finishes, you will find the proceed.wmv file in C:\, which is the result of the time-block exclusion.

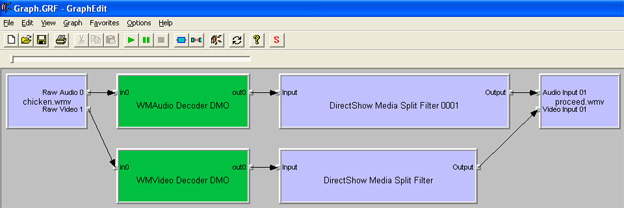

The graph used here is shown below:

We use two filter instances, one for video and one for audio, so that both streams are cut at the same points. All filters in the graph are also synchronized to a single reference clock (IReferenceClock). Here is the filter code:

```cpp
// DShowMediaSplitFilt.h
#ifndef __DSHOWMEDIASPLITFILT_H__
#define __DSHOWMEDIASPLITFILT_H__

#pragma warning (disable:4312)

#include <streams.h>  // DirectShow base classes (includes windows.h)
#include <initguid.h> // Makes DEFINE_GUID define (not just declare) the GUIDs below
#include <vector>

using std::vector;

DEFINE_GUID (CLSID_DShowMediaSplit, 0x1a21958d, 0x8e93, 0x45de, 0x92, 0xda,
             0xdd, 0x95, 0x44, 0x29, 0x7b, 0x41);

// One interval to exclude, in 100-ns reference-time units.
struct TimeBlock
{
    TimeBlock (LONGLONG startTime, LONGLONG endTime)
        : startTime (startTime), endTime (endTime) {}

    LONGLONG startTime;
    LONGLONG endTime;
};

DEFINE_GUID (IID_IDShowMediaSplitFilt, 0xec634576, 0x4c86, 0x464e, 0x99, 0xd7,
             0xac, 0x7a, 0xfe, 0xfb, 0x2f, 0xf0);

// Custom configuration interface. Passing std::vector through it only works
// when the filter and the application use the same compiler and C++ runtime.
interface IDShowMediaSplitFilt : public IUnknown
{
    STDMETHOD (SetIntervalList) (const vector <TimeBlock> &lst) = 0;
};

#endif
```

```cpp
// Filter implementation
#include "DShowMediaSplitFilt.h"

const AMOVIESETUP_MEDIATYPE sudPinTypes =
    { &MEDIATYPE_NULL, &MEDIASUBTYPE_NULL };

const AMOVIESETUP_PIN psudPins [] = {
    { L"Input",  FALSE, FALSE, FALSE, FALSE, &CLSID_NULL, L"", 1, &sudPinTypes },
    { L"Output", FALSE, TRUE,  FALSE, FALSE, &CLSID_NULL, L"", 1, &sudPinTypes }
};

const AMOVIESETUP_FILTER sudTransformSample =
    { &CLSID_DShowMediaSplit, L"DirectShow Media Split Filter", MERIT_DO_NOT_USE, 2, psudPins };

class CDShowMediaSplitFilt : public CTransInPlaceFilter, public IDShowMediaSplitFilt
{
public:
    static CUnknown *WINAPI CreateInstance (LPUNKNOWN pUnk, HRESULT *pHr);

    DECLARE_IUNKNOWN;
    STDMETHODIMP NonDelegatingQueryInterface (REFIID riid, void **ppv);

    // IDShowMediaSplitFilt: replaces the list of intervals to exclude.
    STDMETHODIMP SetIntervalList (const vector <TimeBlock> &lst);

private:
    CDShowMediaSplitFilt (TCHAR *tszName, LPUNKNOWN pUnk, HRESULT *pHr)
        : CTransInPlaceFilter (tszName, pUnk, CLSID_DShowMediaSplit, pHr),
          removedTime_ (0) {}

    HRESULT Transform (IMediaSample *pSample);
    HRESULT CheckInputType (const CMediaType *pMediaTypeIn);
    HRESULT StartStreaming ();

    vector <TimeBlock> timeBlockList_;
    REFERENCE_TIME removedTime_; // total duration of the samples dropped so far
};

CFactoryTemplate g_Templates [] = {
    {
        L"DirectShow Media Split Filter",
        &CLSID_DShowMediaSplit,
        CDShowMediaSplitFilt::CreateInstance,
        NULL,
        &sudTransformSample
    }
};

int g_cTemplates = sizeof (g_Templates) / sizeof (g_Templates [0]);

CUnknown *WINAPI CDShowMediaSplitFilt::CreateInstance (LPUNKNOWN pUnk, HRESULT *pHr)
{
    CDShowMediaSplitFilt *pNewObject = new CDShowMediaSplitFilt (
        NAME ("DirectShow Media Split Filter"), pUnk, pHr);
    if (pNewObject == NULL && pHr != NULL)
        *pHr = E_OUTOFMEMORY;
    return pNewObject;
}

STDMETHODIMP CDShowMediaSplitFilt::NonDelegatingQueryInterface (REFIID riid, void **ppv)
{
    if (riid == IID_IDShowMediaSplitFilt)
        return GetInterface (static_cast <IDShowMediaSplitFilt *> (this), ppv);
    return CTransInPlaceFilter::NonDelegatingQueryInterface (riid, ppv);
}

STDMETHODIMP CDShowMediaSplitFilt::SetIntervalList (const vector <TimeBlock> &lst)
{
    CAutoLock lock (&m_csReceive); // Transform runs on the streaming thread under this lock
    timeBlockList_ = lst;          // replace (not append to) the previous list
    return S_OK;
}

// Called when the graph leaves the stopped state: start a new timeline.
HRESULT CDShowMediaSplitFilt::StartStreaming ()
{
    removedTime_ = 0;
    return CTransInPlaceFilter::StartStreaming ();
}

HRESULT CDShowMediaSplitFilt::Transform (IMediaSample *pSample)
{
    REFERENCE_TIME sampleStartTime = 0;
    REFERENCE_TIME sampleEndTime = 0;

    // A sample without time stamps cannot be placed on the timeline; pass it through.
    if (FAILED (pSample->GetTime (&sampleStartTime, &sampleEndTime)))
        return NOERROR;

    // Drop the sample if it ends inside any excluded interval, and remember
    // how much stream time was removed so later samples can be moved back.
    for (size_t i = 0; i < timeBlockList_.size (); i++)
    {
        const TimeBlock &block = timeBlockList_ [i];
        if (sampleEndTime > block.startTime && sampleEndTime <= block.endTime)
        {
            removedTime_ += sampleEndTime - sampleStartTime;
            return S_FALSE; // CTransInPlaceFilter does not deliver this sample
        }
    }

    // Move the kept sample back by the total duration dropped so far, so the
    // output continues without a gap where each cut was made.
    if (removedTime_ != 0)
    {
        REFERENCE_TIME newSampleStartTime = sampleStartTime - removedTime_;
        REFERENCE_TIME newSampleEndTime = sampleEndTime - removedTime_;
        pSample->SetTime (&newSampleStartTime, &newSampleEndTime);
    }
    return NOERROR;
}

// Accepts any media type (see the note below the listing).
HRESULT CDShowMediaSplitFilt::CheckInputType (const CMediaType *pMediaTypeIn)
{
    return S_OK;
}

STDAPI DllRegisterServer ()
{
    return AMovieDllRegisterServer2 (TRUE);
}

STDAPI DllUnregisterServer ()
{
    return AMovieDllRegisterServer2 (FALSE);
}

// Route the DLL entry point to the base classes, as the DirectShow SDK samples do.
extern "C" BOOL WINAPI DllEntryPoint (HINSTANCE, ULONG, LPVOID);

BOOL APIENTRY DllMain (HANDLE hModule, DWORD dwReason, LPVOID lpReserved)
{
    return DllEntryPoint (static_cast <HINSTANCE> (hModule), dwReason, lpReserved);
}
```
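To experiment with sample-level cutting without building a graph, the logic can be modeled in plain C++. The sketch below is a hypothetical model (`Interval` and `SampleCutter` are not part of the filter), assuming contiguous samples with times in 100-ns units. Like the filter, it decides per sample by the sample's end time: a sample that ends inside an interval is discarded and its duration added to a running total, and every kept sample is moved back by that total.

```cpp
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical type: one excluded interval, in 100-ns units.
struct Interval {
    std::int64_t start;
    std::int64_t end;
};

// Hypothetical, DirectShow-free model of per-sample cutting.
class SampleCutter {
public:
    explicit SampleCutter(std::vector<Interval> cuts) : cuts_(std::move(cuts)) {}

    // Adjusts the sample times in place.
    // Returns false if the sample must be discarded.
    bool Process(std::int64_t& start, std::int64_t& stop) {
        for (const Interval& c : cuts_) {
            assert(c.start <= c.end);
            if (stop > c.start && stop <= c.end) {
                removed_ += stop - start;  // remember how much time was dropped
                return false;
            }
        }
        start -= removed_;  // close the gap left by the dropped samples
        stop -= removed_;
        return true;
    }

    // Starts a new timeline, e.g. when the graph is run again after a stop.
    void Reset() { removed_ = 0; }

private:
    std::vector<Interval> cuts_;
    std::int64_t removed_ = 0;  // total duration of the samples dropped so far
};
```

With 0.5 s samples and cuts at 1-3 s and 5-9 s, the kept samples stay contiguous and a 12 s input ends at 6 s in the output. Resetting the running total matters because a graph can be stopped and run again.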

The COM-style IDShowMediaSplitFilt interface lets the calling application set the time blocks to be excluded from the input media file. All of the core functionality lives in the filter's Transform method. The filter code is deliberately simplified: our filter accepts any media type and does not check whether the samples it receives are encoded or decoded, although we only work with decoded content. Now let's look at the demo application source code, which is shown below:

```cpp
#include <cstdlib>
#include <iostream>

using std::cout;
using std::endl;

#include "../DShowMediaSplitFilt/DShowMediaSplitFilt.h"

// Loads a saved graph (.GRF storage file) into the Filter Graph Manager.
HRESULT LoadGraphFile (IGraphBuilder *pGraph, const WCHAR *wszName)
{
    IStorage *pStorage = NULL;
    IPersistStream *pPersistStream = NULL;
    IStream *pStream = NULL;
    HRESULT hr = S_OK;

    try
    {
        // S_FALSE (a success code) means the file exists but is not a storage file.
        hr = StgIsStorageFile (wszName);
        if (hr != S_OK)
        {
            if (SUCCEEDED (hr))
                hr = E_FAIL;
            throw "Graph file not found or not a valid storage file";
        }

        hr = StgOpenStorage (wszName, 0,
                             STGM_TRANSACTED | STGM_READ | STGM_SHARE_DENY_WRITE,
                             0, 0, &pStorage);
        if (FAILED (hr))
            throw "Storage opening failed";

        hr = pGraph->QueryInterface (IID_IPersistStream,
                                     reinterpret_cast <void **> (&pPersistStream));
        if (FAILED (hr))
            throw "Couldn't query interface";

        hr = pStorage->OpenStream (L"ActiveMovieGraph", 0,
                                   STGM_READ | STGM_SHARE_EXCLUSIVE, 0, &pStream);
        if (FAILED (hr))
            throw "Couldn't open ActiveMovieGraph";

        hr = pPersistStream->Load (pStream);
        if (FAILED (hr))
            throw "Couldn't load stream data";
    }
    catch (const char *str) // string literals are thrown as const char *
    {
        cout << "Error occurred: " << str << endl;
    }

    if (pStream) pStream->Release ();
    if (pPersistStream) pPersistStream->Release ();
    if (pStorage) pStorage->Release ();
    return hr;
}

int main ()
{
    const WCHAR GRAPH_FILE_NAME [] = L"Graph.GRF"; // relative to the working directory
    const LONGLONG SCALE_FACTOR = 10000000;        // 100-ns units per second

    IGraphBuilder *pGraph = NULL;
    IMediaControl *pControl = NULL;
    IMediaEvent *pEvent = NULL;
    IMediaFilter *pMediaFilter = NULL;
    IBaseFilter *pVideoSplitFilt = NULL;
    IDShowMediaSplitFilt *pVideoSplitConfig = NULL;
    IBaseFilter *pAudioSplitFilt = NULL;
    IDShowMediaSplitFilt *pAudioSplitConfig = NULL;
    IReferenceClock *pClock = NULL;
    HRESULT hr = S_OK;
    bool comInitialized = false;

    try
    {
        cout << "Process started" << endl;

        hr = CoInitialize (NULL);
        if (FAILED (hr))
            throw "Could not initialize COM library";
        comInitialized = true;

        hr = CoCreateInstance (CLSID_FilterGraph, NULL, CLSCTX_INPROC_SERVER,
                               IID_IGraphBuilder, reinterpret_cast <void **> (&pGraph));
        if (FAILED (hr))
            throw "Could not create the Filter Graph Manager";

        hr = LoadGraphFile (pGraph, GRAPH_FILE_NAME);
        if (FAILED (hr))
            throw "Couldn't load graph file";

        // The two filter instances are found by the names stored in the saved graph.
        hr = pGraph->FindFilterByName (L"DirectShow Media Split Filter", &pVideoSplitFilt);
        if (FAILED (hr))
            throw "\"DirectShow Media Split Filter\" not found in graph";

        hr = pVideoSplitFilt->QueryInterface (IID_IDShowMediaSplitFilt,
                                              reinterpret_cast <void **> (&pVideoSplitConfig));
        if (FAILED (hr))
            throw "IDShowMediaSplitFilt config for video splitter couldn't be retrieved";

        hr = pGraph->FindFilterByName (L"DirectShow Media Split Filter 0001", &pAudioSplitFilt);
        if (FAILED (hr))
            throw "\"DirectShow Media Split Filter 0001\" not found in graph";

        hr = pAudioSplitFilt->QueryInterface (IID_IDShowMediaSplitFilt,
                                              reinterpret_cast <void **> (&pAudioSplitConfig));
        if (FAILED (hr))
            throw "IDShowMediaSplitFilt config for audio splitter couldn't be retrieved";

        // Cut 1-3 s and 5-9 s from both streams.
        vector <TimeBlock> timeBlockList;
        timeBlockList.push_back (TimeBlock (1 * SCALE_FACTOR, 3 * SCALE_FACTOR));
        timeBlockList.push_back (TimeBlock (5 * SCALE_FACTOR, 9 * SCALE_FACTOR));
        pVideoSplitConfig->SetIntervalList (timeBlockList);
        pAudioSplitConfig->SetIntervalList (timeBlockList);

        hr = CoCreateInstance (CLSID_SystemClock, NULL, CLSCTX_INPROC_SERVER,
                               IID_IReferenceClock, reinterpret_cast <void **> (&pClock));
        if (FAILED (hr))
            throw "Failed to create reference clock";

        // Set the clock on the Filter Graph Manager, which passes it to every filter.
        hr = pGraph->QueryInterface (IID_IMediaFilter, reinterpret_cast <void **> (&pMediaFilter));
        if (FAILED (hr))
            throw "Media filter interface couldn't be retrieved";

        hr = pMediaFilter->SetSyncSource (pClock);
        if (FAILED (hr))
            throw "Couldn't set the graph reference clock";

        hr = pGraph->QueryInterface (IID_IMediaControl, reinterpret_cast <void **> (&pControl));
        if (FAILED (hr))
            throw "Media control couldn't be retrieved";

        hr = pGraph->QueryInterface (IID_IMediaEvent, reinterpret_cast <void **> (&pEvent));
        if (FAILED (hr))
            throw "Media event couldn't be retrieved";

        hr = pControl->Run ();
        if (FAILED (hr))
            throw "Graph couldn't be started";

        long evCode = 0;
        pEvent->WaitForCompletion (INFINITE, &evCode);
        pControl->Stop (); // processing finished; stop the graph before releasing it
    }
    catch (const char *str) // string literals are thrown as const char *
    {
        cout << "Error occurred: " << str << endl;
    }

    if (pClock) pClock->Release ();
    if (pAudioSplitConfig) pAudioSplitConfig->Release ();
    if (pAudioSplitFilt) pAudioSplitFilt->Release ();
    if (pVideoSplitConfig) pVideoSplitConfig->Release ();
    if (pVideoSplitFilt) pVideoSplitFilt->Release ();
    if (pMediaFilter) pMediaFilter->Release ();
    if (pEvent) pEvent->Release ();
    if (pControl) pControl->Release ();
    if (pGraph) pGraph->Release ();
    if (comInitialized) CoUninitialize ();

    cout << "Done." << endl;
    return SUCCEEDED (hr) ? EXIT_SUCCESS : EXIT_FAILURE;
}
```

As you can see, this is DirectShow-specific code that shows how filter properties can be set through a COM interface; in our application, these properties are the time blocks to exclude. Each time block is given in seconds and multiplied by SCALE_FACTOR (10,000,000), which turns it into a DirectShow reference time in 100-nanosecond units.
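If you want to specify cut points with sub-second precision, the conversion can be wrapped in a small helper. This is a minimal sketch; `kUnitsPerSecond`, `SecondsToReferenceTime` and `MinSecToReferenceTime` are hypothetical names, and rounding to the nearest 100-ns unit is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// 100-ns units per second; the demo's SCALE_FACTOR has the same value.
constexpr std::int64_t kUnitsPerSecond = 10000000;

// Hypothetical helper: converts a (possibly fractional) number of seconds
// to a reference time, rounding to the nearest 100-ns unit.
std::int64_t SecondsToReferenceTime(double seconds) {
    assert(seconds >= 0.0);  // cut points are offsets from the start of the file
    return static_cast<std::int64_t>(std::llround(seconds * kUnitsPerSecond));
}

// Hypothetical helper: builds a reference time from minutes and whole seconds.
std::int64_t MinSecToReferenceTime(std::int64_t minutes, std::int64_t seconds) {
    return (minutes * 60 + seconds) * kUnitsPerSecond;
}
```

For example, `TimeBlock (SecondsToReferenceTime (1.5), SecondsToReferenceTime (3.25))` would describe a cut from 1.5 s to 3.25 s.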

Points of Interest

Of course, there are other ways to solve this task; for example, the DirectShow Editing Services. In my opinion, though, non-standard approaches are more interesting to implement.

History

- 19th March, 2008: Initial post

Some time ago I became very interested in implementing the task described above, but couldn't find any useful material or code for it, so I decided to write my own. The goal was to trim video programmatically with DirectShow, and, as we have seen, the foundation for that has been built successfully.