Background

This article follows on from the previous five Searcharoo samples:

Searcharoo 1 was a simple search engine that crawled the file system. Very rough.

Searcharoo 2 added a 'spider' to index web links and then search for multiple words.

Searcharoo 3 saved the catalog to reload as required; spidered FRAMESETs and added Stop words, Go words and Stemming.

Searcharoo 4 added non-text filetypes (eg Word, PDF and Powerpoint), better robots.txt support and a remote-indexing console app.

Searcharoo 5 ran in Medium Trust and refactored FilterDocument into DownloadDocument and its subclasses for indexing Office 2007 files.

Introduction to version 6

The following additions have been made:

- Extend the DownloadDocument object hierarchy introduced in v5 to index Jpeg images (yes, the metadata in image files such as photos) and the new Microsoft XPS (Xml Paper Specification) format (if you are compiling in .NET 3.5).

- Add Latitude/Longitude (Gps) location data to the base classes, and index geographic location data embedded in Jpeg images and the META tags of Html documents. Include the location in the search results, with a link to show the location on Google Maps and Google Earth.

- Allow Searcharoo to run on websites where the ASP.NET application is restricted to "No" Trust. The remote-indexing console app in v4 and the changes in v5 were intended to address this issue - but I came across a situation where NO File OR Web access was allowed. Now the code allows for the Catalog to be compiled-in to an assembly (remotely) and uploaded in ANY Trust scenario.

- Minor improvements: addition of COLOR to the Indexer.EXE Console Application, allowing multiple "start pages" to be set (so you can index your website, your blog, your hosted forum, whatever).

- Bug fixes including: <TITLE> tag parsing, correctly identifying external site links, and escaped & ampersands.

NOTE: This version of Searcharoo only displays the location (Latitude, Longitude) data - it doesn't "search by location". To read how to search "nearby" using location data, see the Store Locator: Help customers find you with Google Maps article.

Image Indexing (reading Jpeg metadata)

In Searcharoo 5 the DownloadDocument class was added to handle IFilter and Office 2007 files - files that needed to be downloaded to disk before additional code could be run against them to extract and index their textual content.

Adding support for indexing Jpeg image metadata followed the same simple steps - subclass DownloadDocument and incorporate code for processing IPTC/EXIF and XMP to read out whatever information has been embedded in a Jpeg file.

The simple changes to the object model are shown above - the actual JpegDocument class was cloned from DocxDocument, with parsing code from EXIFextractor by Asim Goheer and Reading XMP Metadata from a JPEG using C# by Omar Shahine.

The two key parts of the code are shown below - the first uses System.Drawing.Imaging to extract PropertyItems which are then parsed against a set of known, hardcoded hex values; the second extracts an 'island' of Xml from within the Jpeg binary data. Both methods return differing (sometimes overlapping) metadata key/value pairs - from which we use only a small subset (Title, Description, Keywords, GPS Location, Camera Make & Model). Dozens of other values are present including focal length, flash settings - whatever the camera supports - but I will leave you to parse additional fields as you need them.

System.Drawing.Imaging and EXIFextractor

The EXIF data is stored in a 'binary' format - opening a tagged image in Notepad2 shows recognisable data with binary markers:

The binary structures are recognised by the .NET System.Drawing.Imaging code (below), but you must know the hexadecimal code and data-type for each piece of data you want to extract.

    using System.Collections;
    using System.Drawing.Imaging;
    using System.IO; // FileStream, FileMode, FileAccess

    public static PropertyItem[] GetExifProperties(string fileName)
    {
        using (FileStream stream =
            new FileStream(fileName, FileMode.Open, FileAccess.Read))
        {
            // Arguments: useEmbeddedColorManagement = true, validateImageData = false
            using (System.Drawing.Image image =
                System.Drawing.Image.FromStream(stream, true, false))
            {
                return image.PropertyItems;
            }
        }
    }

Searcharoo uses the EXIFextractor code so that it's easy to review and change - there is also an alternative method to access the EXIF data by incorporating the Exiv2.dll library into your code, but I'll leave that up to you.
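To illustrate what "knowing the hexadecimal code and data-type" means in practice, here is a minimal sketch for the GPS fields. The tag ids and the three-RATIONAL layout come from the EXIF specification; the ExifGps class and its method names are hypothetical (they are not part of EXIFextractor or Searcharoo), and the sketch assumes the PropertyItem.Value bytes are in the machine's byte order, which is what BitConverter expects.

```csharp
using System;

public static class ExifGps
{
    // EXIF GPS tag ids (PropertyItem.Id values)
    public const int GpsLatitudeRef = 0x0001;  // ASCII "N" or "S"
    public const int GpsLatitude = 0x0002;     // three RATIONALs: degrees, minutes, seconds
    public const int GpsLongitudeRef = 0x0003; // ASCII "E" or "W"
    public const int GpsLongitude = 0x0004;    // three RATIONALs: degrees, minutes, seconds

    // Reads one unsigned RATIONAL (4-byte numerator, 4-byte denominator).
    private static double ReadRational(byte[] value, int offset)
    {
        uint numerator = BitConverter.ToUInt32(value, offset);
        uint denominator = BitConverter.ToUInt32(value, offset + 4);
        return denominator == 0 ? 0.0 : (double)numerator / denominator;
    }

    // Converts the 24-byte value of a latitude/longitude tag into signed
    // decimal degrees; a reference of "S" or "W" gives a negative result.
    public static double ToDecimalDegrees(byte[] value, string reference)
    {
        if (value == null || value.Length < 24)
            throw new ArgumentException("Expected three 8-byte rationals", "value");

        double degrees = ReadRational(value, 0);
        double minutes = ReadRational(value, 8);
        double seconds = ReadRational(value, 16);
        double result = degrees + (minutes / 60.0) + (seconds / 3600.0);

        bool negative = reference != null &&
            (reference.StartsWith("S", StringComparison.OrdinalIgnoreCase) ||
             reference.StartsWith("W", StringComparison.OrdinalIgnoreCase));
        return negative ? -result : result;
    }
}
```

In use, you would look through the PropertyItem array for the ids above, pass the Value bytes of the latitude or longitude item together with the decoded text of the matching "Ref" item, and store the two doubles on the document.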

XMP via XML island

Unlike the EXIF data, XMP is basically 'human readable' within the JPG file as you can see below - there is an 'island' of pure XML in the binary image data. Importantly, XMP is the only way to get the Title and Description information which is really useful for searching.

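    using System.IO;

    public static string GetXmpXmlDocFromImage(string filename)
    {
        string contents;
        using (StreamReader sr = new StreamReader(filename))
        {
            contents = sr.ReadToEnd();
        }

        // Locate the 'island' of Xml within the binary image data
        const string endCapture = "</rdf:RDF>";
        int beginPos = contents.IndexOf("<rdf:RDF", 0);
        int endPos = contents.IndexOf(endCapture, 0);
        if (beginPos < 0 || endPos < beginPos) return string.Empty; // no XMP found

        // Return just the Xml island, including its closing tag
        return contents.Substring(beginPos, (endPos - beginPos) + endCapture.Length);
    }

    // The extracted Xml is then loaded into an XmlDocument (doc) and queried with XPath:
    xmlNode = doc.SelectSingleNode(
        "/rdf:RDF/rdf:Description/dc:title/rdf:Alt", NamespaceManager);
    xmlNode = doc.SelectSingleNode(
        "/rdf:RDF/rdf:Description/dc:description/rdf:Alt", NamespaceManager);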

It's worthwhile noting that if you are working with .NET 3.0 or later, extracting XMP metadata is much more 'scientific' than the above example - using WIC - Windows Imaging Component to access photo metadata. In order to keep this version of Searcharoo compatible with .NET 2.0, utilizing those newer features has been avoided (for now).
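Staying with the .NET 2.0 approach, the XPath queries shown earlier only work if the rdf: and dc: prefixes are registered with a namespace manager. The following is a minimal sketch of that step, assuming the Xml island has already been extracted as a string; the XmpReader class and GetTitle method are hypothetical names, while the two namespace Uris are the standard RDF and Dublin Core ones.

```csharp
using System.Xml;

public static class XmpReader
{
    // Returns the first dc:title entry in the XMP Xml, or an empty string.
    public static string GetTitle(string xmpXml)
    {
        XmlDocument doc = new XmlDocument();
        doc.LoadXml(xmpXml);

        // Register the prefixes used in the XPath expressions
        XmlNamespaceManager ns = new XmlNamespaceManager(doc.NameTable);
        ns.AddNamespace("rdf", "http://www.w3.org/1999/02/22-rdf-syntax-ns#");
        ns.AddNamespace("dc", "http://purl.org/dc/elements/1.1/");

        XmlNode alt = doc.SelectSingleNode(
            "/rdf:RDF/rdf:Description/dc:title/rdf:Alt", ns);
        if (alt == null) return string.Empty;

        // The text itself lives in an rdf:li child of rdf:Alt
        XmlNode item = alt.SelectSingleNode("rdf:li", ns);
        return item == null ? string.Empty : item.InnerText.Trim();
    }
}
```

The same pattern applies to dc:description; only the XPath changes.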

If you want to be able to add metadata to your images, try iTag: photo tagging software (recommended). iTag was used to tag many of the photos used during testing.

Indexing Geographic Location (Latitude/Longitude)

Image metadata & Html Meta tags

Only two document types have 'standard' ways of being geo-tagged: Html documents via the <META > tag and Jpeg images in their metadata. The location for images is extracted along with the rest of the metadata (explained above), but for the HtmlDocument class we needed to add code to parse either of the following two 'standard' geo-tags (only ONE tag is required, not both):

<meta name="ICBM" content="50.167958, -97.133185">

<meta name="geo.position" content="50.167958;-97.133185">

The code currently parses out description, keyword and robot META tags using the following Regular Expression/for loops:

Adding support for ICBM and geo.position merely required a couple of additional case clauses:
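As a minimal sketch of handling the two formats (this is not Searcharoo's actual switch statement; GeoMetaParser and TryParse are hypothetical names), the only real difference between the tags is the separator: ICBM uses a comma and geo.position uses a semicolon. Parsing with the invariant culture avoids problems on servers whose regional settings use a comma as the decimal separator.

```csharp
using System;
using System.Globalization;

public static class GeoMetaParser
{
    // Parses content such as "50.167958, -97.133185" (ICBM) or
    // "50.167958;-97.133185" (geo.position) into latitude and longitude.
    public static bool TryParse(string metaName, string content,
        out double latitude, out double longitude)
    {
        latitude = 0;
        longitude = 0;
        if (metaName == null || content == null) return false;

        char separator;
        switch (metaName.ToLowerInvariant())
        {
            case "icbm":
                separator = ',';
                break;
            case "geo.position":
                separator = ';';
                break;
            default:
                return false; // not a geo-tag
        }

        string[] parts = content.Split(separator);
        if (parts.Length != 2) return false;

        return double.TryParse(parts[0].Trim(), NumberStyles.Float,
                   CultureInfo.InvariantCulture, out latitude)
            && double.TryParse(parts[1].Trim(), NumberStyles.Float,
                   CultureInfo.InvariantCulture, out longitude);
    }
}
```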

Once we have the latitude and longitude for an Html document or Jpeg image, they are stored in properties on the base Document class, from where they are copied across to Catalog File objects (which are then persisted for later searching).

Additional metadata: File type and Keywords (tags)

Since we needed to add properties to the base Document class for location, it seemed like a logical time to start storing keywords and file type to improve the search accuracy and user-experience. In both cases a new property was added to the base Document class, and relevant subclasses (that know how to "read" that data) were updated to populate the property.

Only Html and Jpeg classes currently support Keyword parsing, but almost all the subclasses correctly set the Extension property that indicates file-type.

These additional pieces of information will provide more feedback to the user when viewing results, and in future may be used for: (a) alternate search result navigation (eg. a tag cloud) and/or (b) changes to the ranking algorithm when a keyword is 'matched'.

"No" trust Catalog access

This 'problem' continues on from the "Medium" Trust issue discussed in Searcharoo 5 so it might be worthwhile reading that article again.

Basically, the new problem is that NOT EVEN WebClient permission is allowed, so the Search.aspx code cannot load the Catalog from ANY FILE (either via local file access or a Url). Searcharoo needs a way to load the Catalog into memory on the web server WITHOUT requiring any special permission... and the simplest way to accomplish that seems to be compiling the Catalog into the code!

The steps required are:

- Run Searcharoo.Indexer.EXE with the correct configuration to remotely index your website

- Copy the resulting z_searcharoo.xml catalog file (or whatever you have called it in the .config) to the special WebAppCatalogResource Project

- Ensure the Xml file's Build Action is set to Embedded Resource

- Ensure that is the ONLY resource in that Project

- Compile the Solution - WebAppCatalogResource.DLL will be copied into the WebApplication \bin\ directory

- Deploy the WebAppCatalogResource.DLL to your server

If the code fails to load a .DAT or .XML file under Full or Medium Trust, its fallback behaviour is to use the first resource in that assembly (using the few simple lines of code below):

    System.Reflection.Assembly a =
        System.Reflection.Assembly.Load("WebAppCatalogResource");
    string[] resNames = a.GetManifestResourceNames();
    Catalog c2 = Kelvin<Catalog>.FromResource(a, resNames[0]);

One final note: the Binary and Xml Serialization features that run in "Full" and "Medium" Trust have not been removed; all methods are still available. Whether Binary or Xml is used is controlled by the web.config/app.config setting (for your Website and Indexer Console application).

    <appSettings>
        <add key="Searcharoo_InMediumTrust" value="True" />
    </appSettings>

If set to True, the Catalog will be saved as a *.XML file, if set to False it will be written as *.DAT. Only if the code cannot load EITHER of these files will the resource DLL be used (an easy way to force it would be to delete all .DAT and .XML catalog files).

Presenting the newly indexed data

The aforementioned changes mainly focus on the addition of indexing functionality: finding new data (latitude, longitude, keyword, file-type), cataloging it and allowing the Catalog to be accessed. Presenting the search results with this additional data required some changes to the File and ResultFile classes which are used by the Search.aspx page when it does a search and shows the results.

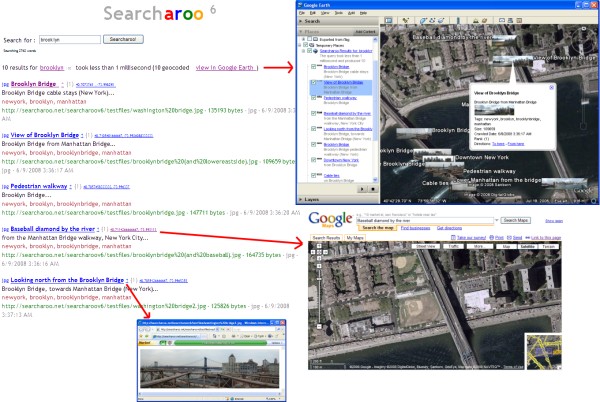

The new properties added to Document (above) are mirrored in File, and where required 'wrapped' in ResultFile by display-friendly read-only properties. It is the ResultFile class that is bound to an asp:Repeater to produce the following output (note the coordinates next to some results, which link to the specific location at maps.google.com).

SearchKml.aspx

The presence of location data (latitude and longitude coordinates) doesn't just allow us to 'link' out to a single location - it allows for a whole new way to view the search results! When one or more results are found to have location data, a new "view in Google Earth" link is displayed. It links to the SearchKml.aspx page (which inherits from the same "code-behind" as Search.aspx) but instead of Html it displays the results in KML (Keyhole Markup Language, used by Google Earth)!

The KML output looks very different to the HTML from Search.aspx:
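As a rough illustration of the kind of markup involved, here is a minimal sketch that writes a single result as a KML Placemark. It is not the markup SearchKml.aspx actually emits: the KmlSketch class is a hypothetical name and the KML 2.2 namespace is an assumption. One detail worth noting is that KML lists coordinates as longitude,latitude, the reverse of the order used in the META tags.

```csharp
using System.Globalization;
using System.IO;
using System.Xml;

public static class KmlSketch
{
    private const string Ns = "http://www.opengis.net/kml/2.2"; // assumed KML version

    // Builds a KML document containing one Placemark for a search result.
    public static string ToKml(string name, string url,
        double latitude, double longitude)
    {
        StringWriter sw = new StringWriter(CultureInfo.InvariantCulture);
        XmlWriterSettings settings = new XmlWriterSettings();
        settings.Indent = true;
        settings.OmitXmlDeclaration = true;

        using (XmlWriter w = XmlWriter.Create(sw, settings))
        {
            w.WriteStartElement("kml", Ns);
            w.WriteStartElement("Document", Ns);
            w.WriteStartElement("Placemark", Ns);
            w.WriteElementString("name", Ns, name);
            w.WriteElementString("description", Ns, url);
            w.WriteStartElement("Point", Ns);
            // KML order is longitude first, then latitude
            w.WriteElementString("coordinates", Ns,
                longitude.ToString(CultureInfo.InvariantCulture) + "," +
                latitude.ToString(CultureInfo.InvariantCulture));
            w.WriteEndElement(); // Point
            w.WriteEndElement(); // Placemark
            w.WriteEndElement(); // Document
            w.WriteEndElement(); // kml
        }
        return sw.ToString();
    }
}
```

A page returning this markup would typically also set the response content type to application/vnd.google-earth.kml+xml so that the browser hands it to Google Earth.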

However, the link to SearchKml.aspx is formatted more like a 'file reference': for example searcharoo.net/SearchKml/newyork.kml takes you to the screenshots below:

How (and why) does the link searcharoo.net/SearchKml/newyork.kml use the SearchKml.aspx page, you may wonder?

The reason for the url format (using a .kml extension and embedding the search term) is to enable browsers to open Google Earth based on the file extension (when Google Earth is installed, it registers .KML and .KMZ as known file types). Because the link "looks" like it refers to a newyork.kml file (and not an ASPX page), most browsers/operating systems will automatically open it in Google Earth (or other program registered for that file type).

The link syntax is accomplished with a 404 custom error handler (which must also be set up in either web.config or the IIS Custom Errors tab):
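The core of such a handler is working out which search term was embedded in the 'missing' Url. Below is a minimal sketch of that step only, assuming the handler receives the originally requested address in some form (IIS custom error Urls typically pass it in the querystring as 404;http://host/path, while ASP.NET customErrors passes an aspxerrorpath value). KmlUrlParser and GetSearchTerm are hypothetical names, not the code in Searcharoo's 404.aspx.

```csharp
using System;

public static class KmlUrlParser
{
    // Returns the search term embedded in a path like "/SearchKml/newyork.kml",
    // or null when the requested address is not a SearchKml link.
    public static string GetSearchTerm(string requestedUrl)
    {
        if (string.IsNullOrEmpty(requestedUrl)) return null;

        const string marker = "/SearchKml/";
        int start = requestedUrl.LastIndexOf(marker,
            StringComparison.OrdinalIgnoreCase);
        if (start < 0) return null;

        string fileName = requestedUrl.Substring(start + marker.Length);
        if (!fileName.EndsWith(".kml", StringComparison.OrdinalIgnoreCase))
            return null;

        // Strip the extension and decode escapes such as %20
        string term = fileName.Substring(0, fileName.Length - ".kml".Length);
        return term.Length == 0 ? null : Uri.UnescapeDataString(term);
    }
}
```

When a term is found, the handler can hand the request on to SearchKml.aspx with that term (for example via Server.Transfer); when it is not, it should show a normal "page not found" message.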

NOTE: the same 'behaviour' is possible using a custom HttpHandler, however it requires "mapping" the .KML extension to the .NET Framework in IIS - something that isn't always possible on cheaper hosting providers [which will usually still allow you to set up a custom 404 URL]. It would be even easier using the new URL Routing Framework being introduced in .NET 3.5 SP1. For now, Searcharoo uses the simplest approach - 404.aspx.

Minor enhancements

Color-coded Indexer.EXE

This is purely a cosmetic change to make using the Searcharoo.Indexer.EXE easier; and uses Philip Fitzsimons' article Putting colour/color to work on the console. Each different "logging level" (or 'verbosity') is output in a different color to make reading easier.
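The idea can be sketched with the Console.ForegroundColor property that is built in to .NET 2.0, which is a swapped-in technique rather than the approach in the cited article. The ColorLog class and the particular level-to-color mapping below are hypothetical and are not the colors Searcharoo.Indexer.EXE uses.

```csharp
using System;

public static class ColorLog
{
    // Hypothetical mapping: 1 = most important, higher numbers = more detail.
    public static ConsoleColor ColorFor(int verbosity)
    {
        switch (verbosity)
        {
            case 1: return ConsoleColor.White;
            case 2: return ConsoleColor.Cyan;
            case 3: return ConsoleColor.Yellow;
            default: return ConsoleColor.DarkGray;
        }
    }

    // Writes one line in the color for its level, then restores the old color.
    public static void WriteLine(int verbosity, string message)
    {
        ConsoleColor previous = Console.ForegroundColor;
        Console.ForegroundColor = ColorFor(verbosity);
        try
        {
            Console.WriteLine(message);
        }
        finally
        {
            Console.ForegroundColor = previous;
        }
    }
}
```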

'Verbosity' is set in the Searcharoo.Indexer.EXE.config file (app.config in Visual Studio).

And looks like this when running:

Multiple start Urls

Previous versions of Searcharoo only allowed a single 'start Url', and any links away from that Url were ignored. Version 6 now allows multiple 'start Urls' to be specified - they will all be indexed and added to the same Catalog for searching. Specify multiple subdomains in the .config file separated by a comma or semicolon (eg. maybe you have forums.* and www.* domain names; or you wish to index your blog and your photosharing sites together).

WARNING: indexing takes time and uses network bandwidth - DON'T index lots of sites without being aware of how long it will take. If you stop indexing half-way-through, the Catalog does NOT get saved!

Recognising 'fully qualified' local links

A 'bug' that was reported by a few people (without resolution) has been addressed - if your site has "fully qualified" links (eg. my blog conceptdev.blogspot.com has ALL the anchor tags specified like this "http://conceptdev.blogspot.com/2007/08/latlong-to-pixel-conversion-for.html") these links were marked as "External" and not crawled (HtmlDocument class, around line 360).

Spider.cs has been updated to add these links to the LocalLinks ArrayList.

NOTE: the code does a 'starts with' comparison, so if you specified a subdirectory for your "Start Url" (eg. http://searcharoo.net/SearcharooV1/) then a fully qualified link to a different subdirectory will STILL not be indexed (eg. <a href="http://searcharoo.net/SearcharooV2/SearcharooSpider_alpha.html"> would NOT be followed).
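The behaviour described in the note can be sketched as follows. This is a minimal illustration of a case-insensitive 'starts with' test against one or more start Urls, not the code in Spider.cs; LinkClassifier and IsLocal are hypothetical names.

```csharp
using System;

public static class LinkClassifier
{
    // True when a fully qualified link begins with any of the start Urls.
    public static bool IsLocal(string[] startUrls, string link)
    {
        if (startUrls == null || string.IsNullOrEmpty(link)) return false;

        foreach (string startUrl in startUrls)
        {
            if (!string.IsNullOrEmpty(startUrl) &&
                link.StartsWith(startUrl, StringComparison.OrdinalIgnoreCase))
            {
                return true; // treat as a local link and crawl it
            }
        }
        return false; // treat as external
    }
}
```

With a start Url of http://searcharoo.net/SearcharooV1/, a link into /SearcharooV1/ is local but a link into /SearcharooV2/ is not, which is exactly the limitation the note describes.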

Bug fix (honor roll)

Many thanks to the following CodeProject readers/contributors:

<TITLE> tag parsing

Erick Brown [work] identified the problem of CRLFs in the <TITLE> tag causing it to not be indexed... and provided a new Regex to fix it.

Correctly identifying external site links

mike-j-g (and later hitman17) correctly pointed out that the matching of links (in HtmlDocument) was case-sensitive, and provided a simple fix.

Handling escaped & ampersands in the querystring

Thanks to Erick Brown [work] again for highlighting a problem (and providing a fix) for badly handled & ampersands in querystrings.

Proxy support

stephenlane80 provided code for downloading via a proxy server (his change was added to Spider.Download() and RobotsTxt.ctor()). A new .config setting has been added to store the Proxy Server Url (if required):

Parsing robots.txt from Unix servers

maaguirr suggested a change to the RobotsTxt class so that it correctly processes robots.txt files on Unix servers (or wherever they might have different 'line endings' to the standard Windows CRLF).
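One way to make the line handling independent of the server's operating system is to split on all three common line endings. The following is a minimal sketch of that idea; it is not necessarily the change that was applied to the RobotsTxt class, and RobotsLines is a hypothetical name.

```csharp
using System;

public static class RobotsLines
{
    // Splits robots.txt content on Windows (CRLF), Unix (LF) and
    // old Mac (CR) line endings, discarding empty lines.
    public static string[] SplitLines(string fileContents)
    {
        if (fileContents == null) return new string[0];

        return fileContents.Split(
            new string[] { "\r\n", "\n", "\r" },
            StringSplitOptions.RemoveEmptyEntries);
    }
}
```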

Try it out

In order to 'try out' Searcharoo without having to download and set-up the code, there is now a set of test files on searcharoo.net which you can search here. The test files are an assortment of purpose-written files (eg. to test Frames, IFrames, META tags, etc) plus some geotagged photos from various holidays.

Wrap-up

Obviously the biggest change in this version is the ability to 'index' images using the metadata available in the JPG format. Other metadata (keywords, filetype) has also been added, and a foundation created to index and store even more information if you wish.

These changes allow for a number of new possibilities in future, including: a "Search Near" feature to order results by distance from a given point or search result; much more sophisticated tag/keyword display and processing; additional metadata parsing across other document types (eg. Office documents have a Keyword field in their Properties); and whatever else you can think of.