Introduction

When you build rich Ajax applications, you use external JavaScript frameworks alongside your own homemade code that drives the application. The problem with well-known JavaScript frameworks is that they offer a rich set of features which are rarely needed in their entirety. You may end up using only 30% of jQuery, but you still download the full framework, so 70% of what you download is unnecessary. Similarly, you might have written your own JavaScript that is not always used. Some features are not used when the site loads for the first time, yet they add to the download during the initial load. Initial loading time is crucial: it can make or break your website. We did some analysis and found that for every 500 ms we added to initial loading, we lost approximately 30% of our traffic, visitors who never wait for the whole page to load and simply close the browser or go elsewhere. So, saving initial loading time, even by a couple of hundred milliseconds, is crucial for the survival of a startup, especially if it's a rich Ajax website.

You may have noticed Microsoft's new tool Doloto, which helps solve the following problem:

Modern Web 2.0 applications, such as GMail, Live Maps, Facebook and many others, use a combination of Dynamic HTML, JavaScript and other Web browser technologies commonly referred as AJAX to push page generation and content manipulation to the client web browser. This improves the responsiveness of these network-bound applications, but the shift of application execution from a back-end server to the client also often dramatically increases the amount of code that must first be downloaded to the browser. This creates an unfortunate Catch-22: to create responsive distributed Web 2.0 applications developers move code to the client, but for an application to be responsive, the code must first be transferred there, which takes time.

Microsoft Research looked at this problem and published this research paper in 2008, where they showed how much the initial load can improve if a JavaScript framework is split into two parts: a primary part that is absolutely essential for the initial rendering of the page, and an auxiliary part that is not essential for the initial load and can be downloaded later, or on demand when the user performs some action. They looked at my earlier startup Pageflakes and reported:

2.2.2 Dynamic Loading: Pageflakes A contrast to Bunny Hunt is the Pageflakes application, an industrial-strength mashup page providing portal-like functionality. While the download size for Pageflakes is over 1 MB, its initial execution time appears to be quite fast. Examining network activity reveals that Pageflakes downloads only a small stub of code with the initial page, and loads the rest of its code dynamically in the background. As illustrated by Pageflakes, developers today can use dynamic code loading to improve their web application’s performance. However, designing an application architecture that is amenable to dynamic code loading requires careful consideration of JavaScript language issues such as function closures, scoping, etc. Moreover, an optimal decomposition of code into dynamically loaded components often requires developers to set aside the semantic groupings of code and instead primarily consider the execution order of functions. Of course, evolving code and changing user workloads make both of these issues a software maintenance nightmare.

Back in 2007, I was looking at ways to improve the initial load time and reduce user dropout. The number of users who would not wait for the page to load and went away was growing day by day as we introduced new and cool features. It was a surprise. We thought new features would keep more users on our site, but the opposite happened. Analysis showed that the growing initial loading time drove away more users than the new features retained. So, all our hard work was essentially going down the drain, and we had to come up with something groundbreaking to solve the problem. Of course, we had already tried all the basics (IIS compression, browser caching, on-demand loading of JavaScript, CSS and HTML when the user does something, deferred JavaScript execution), but nothing helped. The external frameworks and our own hand-coded framework were just too large. So, the idea struck me: what if we could load the functions inside a class in two steps? The first step would load the class with the absolutely essential functions, and the second step would inject more functions into the existing class.

Split a Class into Multiple JavaScript Files

Here was the idea:

    var VeryImportantClass = {
        essentialMethod: function()
        {
            DoSomething1();
            DoSomething2();
            DoSomething3();
            DoSomething4();
        },
        notSoEssentialMethod: function()
        {
            DoSomething1();
            DoSomething2();
            DoSomething3();
            DoSomething4();
        }
    };

Here you see a class that must be loaded during the initial load. If it is not, page loading will break with JavaScript errors. Now, assume this class is really big. It has many core functions that deliver the most important features of your website. However, not every function in this class is called during the initial load. Some functions are called only when the user performs some action, like clicking a button or hovering over a menu. So, we can take those functions out into another JavaScript file and load it after page rendering has completed. This means you change the class to this:

    var VeryImportantClass = {
        essentialMethod: function()
        {
            DoSomething1();
            DoSomething2();
            DoSomething3();
            DoSomething4();
        }
    };

Here notSoEssentialMethod is taken out of the JavaScript file. Let's call this stripped-down file PreFramework.js. PreFramework must be loaded before page rendering begins, and it blocks page rendering until it is fully loaded. As a result, it is essential that it contains the absolute bare minimum of code.

Now you add another script to your page, placed at the end of the page and loaded with the defer attribute set on its script tag. In that script, you write this:

    VeryImportantClass.notSoEssentialMethod = function()
    {
        DoSomething1();
        DoSomething2();
        DoSomething3();
        DoSomething4();
    };

Let's call this JavaScript file PostFramework.js. Since it is loaded with the defer attribute set on the script tag, it does not block page rendering. The browser runs it only after it has finished parsing the page.

When PostFramework.js loads, it adds the additional methods to the same class. Thus your VeryImportantClass gets more and more behavior loaded on demand. All the code that uses VeryImportantClass remains the same; there is no need to change it at all. Since that code only executes when the user does something, it is not fired during initial loading, so it does not matter that the functions are not there yet. JavaScript is not like C#, where code won't compile if it calls a function that does not exist. So, I took advantage of this and significantly reduced the amount of JavaScript we loaded initially. This is the exact technique Microsoft Research used in the Doloto tool. Doloto does it automatically for you. In fact, it's even more clever: it does something called stubbing. Read on.
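The two-file split can be simulated in a single script to see why the calling code needs no changes. This is only a minimal sketch: the `log` array, the `onButtonClick` function and the method bodies are illustrative assumptions, and in a real page the two marked parts would live in PreFramework.js and in the deferred PostFramework.js.

```javascript
var log = []; // illustrative stand-in for real rendering work

// --- PreFramework.js: loaded up front, blocks rendering ---
var VeryImportantClass = {
    essentialMethod: function () {
        log.push("essential");
    }
};

VeryImportantClass.essentialMethod(); // initial rendering works

// At this point the non-essential method simply does not exist yet.
var availableEarly = typeof VeryImportantClass.notSoEssentialMethod;

// Existing calling code, unchanged; it runs only on a user action.
function onButtonClick() {
    VeryImportantClass.notSoEssentialMethod();
}

// --- PostFramework.js: deferred, runs after the page is parsed ---
VeryImportantClass.notSoEssentialMethod = function () {
    log.push("notSoEssential");
};

onButtonClick(); // the user clicks after the deferred script has run
```

If `onButtonClick` ran before the second part, the call would throw a TypeError, which is exactly the failure that stubbing addresses.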

Stub the Functions Which Aren't Called During Initial Load

At Pageflakes, it was hard to keep track of JavaScript since widgets were developed by external developers and companies. So, it was not always possible to scan all the JavaScript we had and figure out which functions were called initially and which weren't. When we started aggressively taking functions out of the initial load, we started seeing JavaScript errors on mousemove, on tooltips, or sometimes on window resize, because the scripts needed during these events were not there. Finding out what JavaScript gets called before PostFramework.js loads was becoming a long trial-and-error exercise. Varying internet bandwidth caused even more trouble, since we could not assume that PostFramework.js would be available within, say, 10 seconds of loading and that it was therefore OK to move FunctionX from Pre to Post.

So, I tried another approach. I kept the function declarations in place but took the code out of the functions. This prevented the JavaScript errors, since you could still call those functions, but nothing would happen. The result in PreFramework.js was:

    var VeryImportantClass = {
        essentialMethod: function()
        {
            DoSomething1();
            DoSomething2();
            DoSomething3();
            DoSomething4();
        },
        notSoEssentialMethod: function()
        {
        }
    };

PreFramework just declares the functions so that others can call them, but nothing happens. Such empty functions are called stubs. PostFramework injects the code into the stubs. This solved the majority of the JavaScript errors, except for callers that expected a return value from the function and would break without one. But those cases were negligible.
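A stub can also do slightly more than nothing. The sketch below is one possible variation, not what Pageflakes or Doloto did: the stub remembers the calls it receives, and the deferred file replays them once the real body is installed. The `pendingCalls` array, the `rendered` array and the `installRealMethod` helper are hypothetical names introduced for illustration.

```javascript
var pendingCalls = []; // hypothetical: calls received while only the stub exists
var rendered = [];     // stands in for visible side effects

// --- PreFramework.js: stub only ---
var VeryImportantClass = {
    notSoEssentialMethod: function () {
        // Remember the arguments so the call is not lost.
        pendingCalls.push(Array.prototype.slice.call(arguments));
    }
};

// --- PostFramework.js: inject the real body, then replay ---
function installRealMethod() {
    VeryImportantClass.notSoEssentialMethod = function (what) {
        rendered.push("did " + what);
        return rendered.length;
    };
    while (pendingCalls.length > 0) {
        VeryImportantClass.notSoEssentialMethod.apply(
            VeryImportantClass, pendingCalls.shift());
    }
}

// An early call hits the stub and gets no return value.
var stubResult = VeryImportantClass.notSoEssentialMethod("resize");

installRealMethod();

// A later call hits the real body.
var realResult = VeryImportantClass.notSoEssentialMethod("tooltip");
```

Replaying does not help callers that need the return value immediately; as with a plain stub, they still receive `undefined` until the real body arrives.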

So, we broke over 1 MB of JavaScript into Pre and Post frameworks. PreFramework was only about 200 KB, containing the gigantic Microsoft AJAX library and our own absolutely essential JavaScript for the initial rendering of the page. The remaining 0.9 MB of JavaScript was moved to the Post framework.

Now you have Doloto, which does exactly this, but automatically, so that you don't have to hand-pick the functions. Too bad it was not there when we were losing traffic and business. We would have been more successful if Doloto had come out two years earlier. Pageflakes is a real example where Doloto could have increased the valuation by at least $5M over two years.

However, I had more tricks up my sleeve than what Doloto does now. Doloto performs a scientific analysis of your code: it checks when JavaScript functions are called and, based on that, finds which scripts are called before the page is rendered and which are called after. It also records the timing of function calls so that it can group functions that are called close together, which helps you break your JavaScript further into small clusters. But a scientific analysis is not always the optimal one. You might have written code that initializes tooltips, menus, accordions, show/hide divs, resizable divs, etc., and put it early in your scripts. Doloto sees this code executing during initial loading and keeps it in the PreFramework. However, you know that it is not a prerequisite for page rendering. You could easily do that work on demand, after the page has finished loading. For example, you could initialize the tooltips when the user first hovers over a link, or when some button is clicked. This is something only a human brain can decide; no system can predict it. But it can save significant download time.
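On-demand initialization of this kind can be sketched with a small wrapper that runs an initializer the first time it is needed and never again. The helper name `initOnFirstUse` and the counter are assumptions made for illustration; the real tooltip setup would replace the counter.

```javascript
// Hypothetical helper: wraps an initializer so it runs on first use only.
function initOnFirstUse(init) {
    var done = false;
    return function () {
        if (!done) {
            done = true;
            init();
        }
    };
}

var initCount = 0;
var ensureTooltips = initOnFirstUse(function () {
    initCount++; // stands in for the real tooltip setup
});

// In a page this would be wired to an event, for example:
// document.body.onmouseover = ensureTooltips;
ensureTooltips(); // first hover: initialization runs
ensureTooltips(); // later hovers: nothing to do
```

Because the initializer no longer runs during the initial load, a tool that decides by observed execution time would also see it as safe to move out of the PreFramework.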

JavaScript Code in Text

You can execute JavaScript lazily by using eval or the new Function('') syntax. Code written this way is not evaluated where it is created, only when it is called. For example, take this code:

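    function initTooltips()
    {
        var links = document.getElementsByTagName("a");
        // Iterate by index: for...in would yield property names, not elements.
        for (var i = 0; i < links.length; i++)
        {
            links[i].onmouseover = show_tooltip;
        }
    }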

This requires the show_tooltip function, or at least its stub, to be already available. Compare that with the eval or Function approach:

    function initTooltips()
    {
        var links = document.getElementsByTagName("a");
        for (var i = 0; i < links.length; i++)
        {
            // show_tooltip is looked up only when the handler fires.
            links[i].onmouseover = new Function(
                "if (typeof show_tooltip == 'function') show_tooltip(this)");
        }
    }

With this version, show_tooltip does not need to be available when the code runs. If the function is not loaded yet and the user hovers over a link, the handler simply skips the call. Only once your gigantic Post framework has loaded and show_tooltip is available will the handler call it. From the UI point of view, the user won't get tooltips for, say, the first 5 seconds. But who cares! It's better to sacrifice such non-essential features for a short period than to increase the initial page load time and lose users.
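The same late lookup also works with an ordinary function expression, because a name inside a function body is resolved only when the body runs. The sketch below assumes that the deferred script defines `show_tooltip` as a global; the assignment to `globalThis` simulates that separate script, and the `fakeLink` object stands in for an anchor element so the snippet runs outside a browser.

```javascript
// Handler that looks up show_tooltip at call time, not at assignment time.
function safeTooltipHandler() {
    if (typeof show_tooltip === "function") {
        return show_tooltip(this);
    }
    return undefined; // Post framework not loaded yet: do nothing
}

var fakeLink = { title: "Help", onmouseover: safeTooltipHandler };

// Hover before the Post framework has loaded: the call is skipped.
var before = fakeLink.onmouseover();

// Simulates PostFramework.js defining the global function later.
globalThis.show_tooltip = function (link) {
    return "tooltip for " + link.title;
};

// Hover after the Post framework has loaded: the real function runs.
var after = fakeLink.onmouseover();
```

Unlike the string passed to new Function, this form can be checked by the parser and by minifiers, at the cost of a few more bytes in the PreFramework.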

Break UI Loading into Multiple Stages

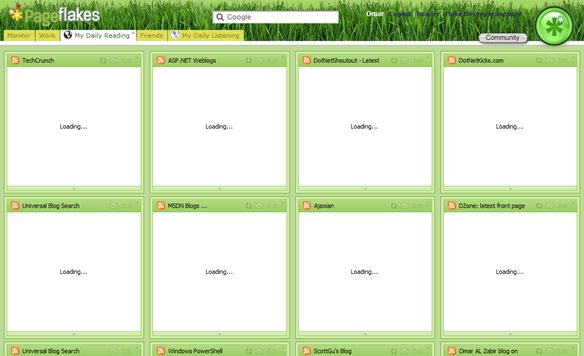

Since Pageflakes is a super heavy AJAX site, majority of the page is rendered using JavaScript by making some AJAX calls. That means both content and behavior are loaded asynchronously. For example, when you visit my Pageflakes Pagecast for the first time, you see this for a while:

Here you see the skeleton, or placeholders, for the content. On the server side, we know what will eventually be delivered to the browser. Instead of delivering the content and JavaScript upfront, we deliver a fake skeleton first while the JavaScript needed to render the actual content downloads. Although the skeleton adds to the actual load time, since it also takes some bandwidth, it is kept to an absolute minimum. Since the user sees the skeleton instead of a blank page, the page feels like it loads faster. We did enough user studies to conclude that if we give the user a preview of the real content really fast, instead of a progress bar, the user feels the site works faster.

Once the skeleton is delivered, the content and the JavaScript that makes that content work are delivered in the background. When they are fully delivered, we render as much as we can in one shot to avoid frequent re-rendering and page reorganization.
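Rendering in one shot can be as simple as building the markup for every widget first and touching the page once. This is a minimal sketch, assuming each widget's content has already arrived as an HTML string; `renderAllAtOnce` and the `widget` class name are hypothetical, and the plain object stands in for a DOM element so the snippet runs outside a browser.

```javascript
function renderAllAtOnce(container, widgetHtmlList) {
    var parts = [];
    for (var i = 0; i < widgetHtmlList.length; i++) {
        parts.push('<div class="widget">' + widgetHtmlList[i] + "</div>");
    }
    // A single assignment: one DOM update rather than one per widget.
    container.innerHTML = parts.join("");
}

var fakeContainer = { innerHTML: "" };
renderAllAtOnce(fakeContainer, ["<p>Feed</p>", "<p>Weather</p>"]);
```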

You can learn similar tricks from this MIX 09 video: Building High Performance Web Applications and Sites.

Always Grow Content from Top to Bottom, Never Shrink or Jump

The way you render the page content also has a significant impact on the user's perception of the speed of your website. If you render content in such a way that the visible content of the page sometimes moves up and down, the user's perception of the site's speed gets worse. At Pageflakes, we initially gave the widgets a fixed height, just enough to show a “Loading…” message. When the page was finally rendered with the real content, some widgets would grow, some would shrink, and some would jump up and down. The page looked very busy, and it annoyed users. You will see a similar problem on my open source AJAX start page Dropthings. When the page loads, it seems like the site spends a lot of time rendering the page content, which gives the user a slow-loading feeling. But if you go to my Pageflakes page, you will see a continuous flow of content. Widgets only grow in one direction, vertically, which is a natural experience. There is an overall subtle feeling of content loading on the page, with widgets loading harmoniously one after another, without stepping over each other, nicely building the page from top to bottom. This smooth flow of content gave users a perception of faster loading, although the actual loading time got worse because of all the tricks we played to create such an experience.

The above pictures show that widgets only grow and move one way. This feels faster than some widgets shrinking while others grow. The size of the widget skeleton was chosen after much research to find the size that gives the “grow” result in most cases, especially on pages with lots of RSS feeds. However, as you will notice, the regular Pageflakes homepage does not load as impressively because it has so many different types and sizes of widgets.

Deliver Browser Specific Script from Server

When you build a rich Ajax client, you have to deal with browser-specific CSS and JavaScript. This was especially true in the era of Internet Explorer 6 and Internet Explorer 7, with browsers like Firefox, Safari, Opera and Chrome out there, all of which seem to render things differently. As a result, your CSS and JavaScript get bloated with browser-specific tweaks. For example:

    function browserSpecificStuff()
    {
        if (browser.isIE)
        {
            DoSomething1();
            DoSomething2();
            DoSomething3();
        }
        else if (browser.isFirefox)
        {
            DoSomething4();
            DoSomething5();
            DoSomething6();
        }
        else if (browser.isSafari)
        {
            DoSomething3();
        }
    }

As you can see, Safari users get roughly 80% useless script. So, you need to find a way to break such scripts into browser-specific files. Keep the common functions in one common JS file, and move the browser-specific functions into separate browser-specific JavaScript files. When you load the scripts, load the common one first and then the one browser-specific file that injects the browser-specific implementation into the common one.

The way we dealt with this issue was to use a server-side HTTP handler that looks at the browser's user agent, detects the browser type, and serves a script containing only the code for that browser. For example, we split our framework code into chunks such as PreFramework.Common.js plus PreFramework.IE.js, PreFramework.Firefox.js and PreFramework.Safari.js. The handler combines PreFramework.Common.js with the one matching PreFramework.XXXX.js and emits the combined output. This way the browser gets only the relevant scripts and nothing extra. It saves download time, makes the page load faster, retains more users, and increases your startup's valuation. Such a simple trick can sometimes result in a million more dollars in your pocket!
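The core of such a handler can be sketched as two small functions. This is not the original Pageflakes handler, only an illustration under stated assumptions: the user-agent checks are deliberately simplistic, the function names `detectBrowserSuffix` and `combinePreFramework` are hypothetical, browsers without a dedicated file get the common script only, and file access is injected as a `readFile` callback so the logic can be tried without a server.

```javascript
// Hypothetical detection: map a user-agent string to one of the three
// browser-specific suffixes named above, or null for "common only".
function detectBrowserSuffix(userAgent) {
    var ua = String(userAgent || "");
    if (ua.indexOf("MSIE") !== -1) return "IE";
    if (ua.indexOf("Firefox") !== -1) return "Firefox";
    // Chrome's user agent also contains "Safari", so rule Chrome out first.
    if (ua.indexOf("Chrome") !== -1) return null;
    if (ua.indexOf("Safari") !== -1) return "Safari";
    return null;
}

// readFile takes a file name and returns that file's text.
function combinePreFramework(userAgent, readFile) {
    var parts = [readFile("PreFramework.Common.js")];
    var suffix = detectBrowserSuffix(userAgent);
    if (suffix !== null) {
        parts.push(readFile("PreFramework." + suffix + ".js"));
    }
    // A semicolon between files guards against a missing trailing one.
    return parts.join(";\n");
}

// Stand-in file contents for demonstration.
var fakeFiles = {
    "PreFramework.Common.js": "var Framework = {}",
    "PreFramework.IE.js": "Framework.fix = 'ie'",
    "PreFramework.Firefox.js": "Framework.fix = 'firefox'",
    "PreFramework.Safari.js": "Framework.fix = 'safari'"
};
function readFake(name) {
    return fakeFiles[name];
}

var forIE = combinePreFramework(
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)", readFake);
var forUnknown = combinePreFramework("SomeBot/1.0", readFake);
```

Because the response now depends on the user agent, a real handler should also make sure caches keep the variants apart, for example by sending a `Vary: User-Agent` response header.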

Conclusion

First-visit page load performance is key to your business if retaining users is the primary criterion for measuring its success. The faster your page loads, the fewer users drop out. Nowadays users are more impatient than they were in the era of ISDN lines and dial-up modems. So, any site taking more than 3 seconds to load can expect around 30% user dropout. It's therefore absolutely important that you try every trick you have to shave milliseconds off the initial load, and that you make the initial rendering as visually pleasant as possible.

History

- 23rd September, 2009: Initial version