Introduction

People spend a significant amount of time and energy at putting their thoughts into writing. So, it is time for a technology to concentrate on automating the retrieval and manipulation of these thoughts in such a way that the original meaning is not lost in the process. To that end, a means by which concepts are reconstructed and then manipulated from natural language is required. That goal cannot be achieved by a diminutive form of natural language understanding that limits itself to word-spotting or a superficial association of semantic blocks, but rather by a process that mimics the functions of the human brain in its processing and outcome. This article exposes a novel means by which such processing is achieved through a Conceptual Language Understanding Engine (CLUE).

The dominant part of the word "recognition" is "cognition", which is defined in the Oxford dictionary as "the mental acquisition of knowledge through thought, experience, and the senses". The present approach uses techniques that encapsulate most aspects associated with a cognitive approach to recognition.

Communication is a procedural sequence that involves the following processes:

- a "de-cognition" process - producing a syntactic stream representing the cognitive aspect to communicate.

- a transporting process - putting such syntactic stream on a medium.

- a perceptive process - senses acquiring the syntactic stream.

- a "re-cognition" process - rebuilds the original cognitive substance that hopefully has not been lost throughout the syntactic, transporting, and perceptive processing.

Because a full cycle language analysis involving natural language understanding requires putting the concept back in its original form, without incurring any loss of the conceptual manipulations that can be achieved following a "de-cognition" and "re-cognition" processes, the conceptual aspect of language cannot and must not be overlooked. Only when a conceptual dimension to speech analysis becomes available will the syntactic aspect of processing language becomes unconstrained. That is, syntactic analysis will limit to a required transient step for communications to fit on a medium. Only then the limitations that we have endured until today related to natural language understanding and processing will start to fade.

The reward with a CLUE is the ability to abstract the written syntactic forms from conveyed concepts - the words used to communicate become irrelevant as soon as the concept is calculated - while maintaining the ability to intelligently react to recognized content. It further shifts the final responsibility of disambiguation to the conceptual analysis layer, instead of the phonetic and syntactic layers as it has typically been done to this day. That frees the person communicating from the obligation of using predetermined sequences of words or commands in order to be successfully understood by an automated device. In an era where automated devices have become the norm, the bottleneck has shifted to the inability of technology to deal effectively with the human element. People are not comfortable with a set of syntactic related rules that appear to them as counter-natural in relation to their conceptual natural abilities.

Background

This article is the third of a sequence. In the first article, "Implementing a std::map Replacement that Never Runs Out of Memory and Instructions on Producing an ARPA Compliant Language Model to Test the Implementation", the memory management technique used within the code-base provided in the present article is exposed. As a result of that, you shall observe there is only one delete call throughout the entire code-base, and you are certain there is no memory leak within the said C++ code. In the second article, "The Building of a Knowledge Base Using C++ and an Introduction to the Power of Predicate Calculus," predicate calculus is introduced, but falls short of processing natural language input. Although it also exposes techniques in order to infer from a knowledge base - not used in this article, but could easily be adapted to do so - the article covers basic predicate calculus techniques that are widely used in this article. A reading of these two articles will help you gain a better understanding of the basis upon which this code is built. Although Conceptual Speech Recognition is used to interpret speech (sound), for the sake of simplicity and demonstration, this article limits itself to textual content input. A software implementation of this technology can be referred to as a Conceptual Language Understanding Engine, or "CLUE" for short. The approach to process speech recognition is comparable to what is exposed here, but with a couple of software engineering twists in order to include further biases from speech through the integration of a Hidden-Markov-Model that is not discussed in this article.

Using the Code

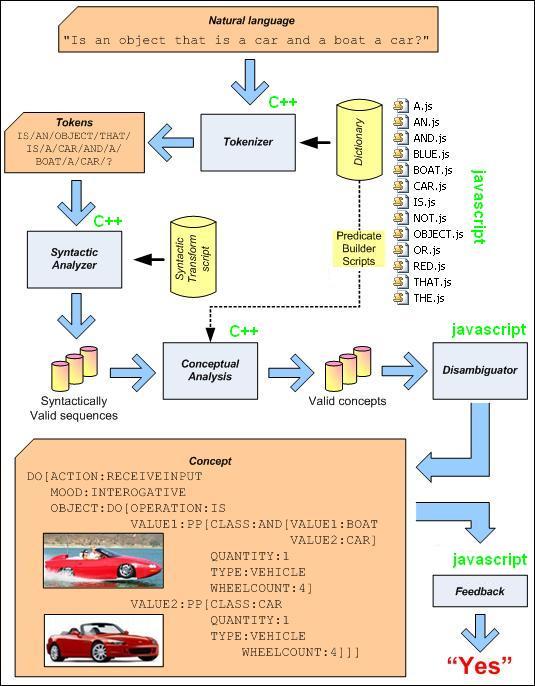

The current project builds under Visual Studio 2008. It is composed of about 10,000 lines of C++ source code, and about 500 lines of JavaScript code that is processed by Google V8.

The main components of the code are divided as follows:

- The dictionary: A 195,443 words, 222,048 parts-of-speech dictionary, held in a 2.1 MB file, which can return information on spelling almost instantly. [IndexStructure.h, StoredPOSNode.h, StoredPOSNode.cpp, DigitalConceptBuilder::BuildDictionary]

- Tokenization: Transforming a stream of natural language input into tokens based on content included into a dictionary. [IndexStructure.h, POSList.h, POSList.cpp, POSNode.h, POSNode.cpp, IndexInStream.h, DigitalConceptBuilder::Tokenize]

- Syntactic Analysis: The algorithms to extrapolate complex nodes, such as

SENTENCE, from atomic nodes, such as NOUN, ADJECTIVE, and VERB obtained from the dictionary following the tokenization phase. [SyntaxTransform.txt, POSTransformScript.h, POSTransformScript.cpp, Permutation.h, Permutation.cpp, POSList.h, POSList.cpp, POSNode.h, POSNode.cpp, Parser.h, Parser.cpp, BottomUpParser.h, BottomUpParser.cpp, MultiDimEarleyParser.h, MultiDimEarleyParser.cpp] - Conceptual Analysis: The building of concepts based on syntactic organizations, and how Google V8, the JavaScript engine, is integrated into the project. [Conceptual Analysis/*.js, Conceptual Analysis/Permutation scripts/*.js, Conceptual Analysis/POS scripts/*.js, POSList.h, POSList.cpp, POSNode.h, POSNode.cpp, Predicate.h, Predicate.cpp, JSObjectSupport.h, JSObjectSupport.cpp, POSNode::BuildPredicates]

Execution of the Code

Execution of the code as provided with this article runs the test cases specified in SimpleTestCases.txt. Each block within curly brackets defines a scope to run, where CONTENT is the natural language stream to analyze.

Each variable definition prior to the test case sentences can also be inserted within curly bracket scopes to override its value. For example, to enable only the first test case, the following change is possible:

...

#-------------------------------------------------------------------

# ENABLED

# _______

#

# Possible values: TRUE, FALSE

#

# TRUE: enable the test cases within that scope.

#

# FALSE: disable the test cases within that scope.

ENABLED = FALSE

#-------------------------------------------------------------------

{

ENABLED = TRUE

CONTENT = Is a red car a car?

ID = CAR1

}

...

Executing the test cases, as available from within the zip files attached, results in the following output. The remainder of this article exposes the approach, philosophies, and techniques used in order to transition from the Natural Language input from these test-cases to concepts and responses as exposed here.

Evaluating: "Is a red car a car?" (ID:CAR1)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 250 ms

Syntactic: 63 ms

Conceptual: 187 ms.

Evaluating: "Is a red car the car?" (ID:CAR2)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

DETERMINED:TRUE

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 2 sec (2235 ms)

Syntactic: 31 ms

Conceptual: 2 sec (2204 ms).

Evaluating: "Is the red car a car?" (ID:CAR3)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

DETERMINED:TRUE

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 203 ms

Syntactic: 31 ms

Conceptual: 172 ms.

Evaluating: "Is the red car red?" (ID:CAR4)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

DETERMINED:TRUE

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[COLOR:RED]]]

Total time: 219 ms

Syntactic: 31 ms

Conceptual: 188 ms.

Evaluating: "The car is red" (ID:CAR5)

No inquiry to analyze here:

DO[ACTION:RECEIVEINPUT

MOOD:AFFIRMATION

OBJECT:PP[CLASS:CAR

COLOR:RED

DETERMINED:TRUE

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]

Total time: 547 ms

Syntactic: 15 ms

Conceptual: 532 ms.

Evaluating: "Is a red car blue?" (ID:CAR6)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[COLOR:BLUE]]]

Total time: 2 sec (2453 ms)

Syntactic: 31 ms

Conceptual: 2 sec (2422 ms).

Evaluating: "Is a red car red?" (ID:CAR7)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[COLOR:RED]]]

Total time: 235 ms

Syntactic: 32 ms

Conceptual: 203 ms.

Evaluating: "Is a car or a boat a car?" (ID:CAR8)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:OR[VALUE1:BOAT

VALUE2:CAR]

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 390 ms

Syntactic: 187 ms

Conceptual: 203 ms.

Evaluating: "Is a car an object that is not a car?" (ID:CAR9)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:AND[VALUE1:PP[QUANTITY:1

TYPE:{DEFINED}]

VALUE2:NOT[VALUE:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]]]

Total time: 3 sec (3297 ms)

Syntactic: 141 ms

Conceptual: 3 sec (3156 ms).

Evaluating: "Is a boat an object that is not a car?" (ID:CAR10)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]

VALUE2:AND[VALUE1:PP[QUANTITY:1

TYPE:{DEFINED}]

VALUE2:NOT[VALUE:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]]]

Total time: 328 ms

Syntactic: 78 ms

Conceptual: 250 ms.

Evaluating: "Is an object that is not a car a boat?" (ID:CAR11)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:AND[VALUE1:PP[QUANTITY:1

TYPE:{DEFINED}]

VALUE2:NOT[VALUE:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

VALUE2:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]]]

Total time: 3 sec (3500 ms)

Syntactic: 63 ms

Conceptual: 3 sec (3437 ms).

Evaluating: "Is a car that is not red a car?" (ID:CAR12)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:!RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 313 ms

Syntactic: 63 ms

Conceptual: 250 ms.

Evaluating: "Is a car an object that is a car or a boat?" (ID:CAR13)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:OR[VALUE1:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]]

Total time: 3 sec (3875 ms)

Syntactic: 125 ms

Conceptual: 3 sec (3750 ms).

Evaluating: "Is a red car an object that is a car or a boat?" (ID:CAR14)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:OR[VALUE1:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]]

Total time: 4 sec (4563 ms)

Syntactic: 344 ms

Conceptual: 4 sec (4219 ms).

Evaluating: "Is a car that is not red a car?" (ID:CAR15)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:!RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 360 ms

Syntactic: 63 ms

Conceptual: 297 ms.

Evaluating: "Is a red car not red?" (ID:CAR16)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[COLOR:!RED]]]

Total time: 1 sec (1844 ms)

Syntactic: 32 ms

Conceptual: 1 sec (1812 ms).

Evaluating: "Is a car a car that is not red?" (ID:CAR17)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

COLOR:!RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 3 sec (3125 ms)

Syntactic: 78 ms

Conceptual: 3 sec (3047 ms).

Evaluating: "Is a car that is not red a blue car?" (ID:CAR18)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:!RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

COLOR:BLUE

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 4 sec (4250 ms)

Syntactic: 250 ms

Conceptual: 4 sec (4000 ms).

Evaluating: "Is a red car a car that is not red?" (ID:CAR19)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

COLOR:!RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 3 sec (3547 ms)

Syntactic: 234 ms

Conceptual: 3 sec (3313 ms).

Evaluating: "Is an object that is not a car a car?" (ID:CAR20)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:AND[VALUE1:PP[QUANTITY:1

TYPE:{DEFINED}]

VALUE2:NOT[VALUE:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 3 sec (3891 ms)

Syntactic: 47 ms

Conceptual: 3 sec (3844 ms).

Evaluating: "Is an object that is a car or a boat a car?" (ID:CAR21)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:OR[VALUE1:BOAT

VALUE2:CAR]

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 781 ms

Syntactic: 141 ms

Conceptual: 640 ms.

Evaluating: "Is an object that is a car or a boat a red car?" (ID:CAR22)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:OR[VALUE1:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]

VALUE2:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 5 sec (5984 ms)

Syntactic: 344 ms

Conceptual: 5 sec (5640 ms).

Evaluating: "Is an object that is a car and a boat a red car?" (ID:CAR23)

MAYBE:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:AND[VALUE1:BOAT

VALUE2:CAR]

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

COLOR:RED

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 6 sec (6437 ms)

Syntactic: 734 ms

Conceptual: 5 sec (5703 ms).

Evaluating: "Is a car an object that is a car and a boat?" (ID:CAR24)

NO:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:AND[VALUE1:PP[CLASS:BOAT

QUANTITY:1

TYPE:VEHICLE]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]]

Total time: 4 sec (4313 ms)

Syntactic: 125 ms

Conceptual: 4 sec (4188 ms).

Evaluating: "Is an object that is a car and a boat a car?" (ID:CAR25)

YES:

DO[ACTION:RECEIVEINPUT

MOOD:INTEROGATIVE

OBJECT:DO[OPERATION:IS

VALUE1:PP[CLASS:AND[VALUE1:BOAT

VALUE2:CAR]

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]

VALUE2:PP[CLASS:CAR

QUANTITY:1

TYPE:VEHICLE

WHEELCOUNT:4]]]

Total time: 797 ms

Syntactic: 156 ms

Conceptual: 641 ms.

Done.

The dictionary that is made available through the zip files is a file, article_testcases_streams.txt, with partial content cumulated for the sole purpose to provide the words and parts-of-speech necessary to run the test-cases successfully. That special step was done in order to save readers of this article from a significant download in size since the non-abridged dictionary is more than 8 MB in size.

The non-abridged dictionary is available for download by clicking here (2.2 MB). Unzip the downloaded file and place streams.txt into the DigitalConceptBuilder directory, at the same level as article_testcases_streams.txt, to have it loaded the next time the program will be launched.

The text files streams.txt and article_testcases_streams.txt are editable. The content in streams.txt was obtained over the years from a variety of sources, some of which are under copyright as expressed in the "Licensing information.txt" file from the non-abridged dictionary zip file.

The format used is as follows:

<spelling - mandatory>:<pronunciation - mandatory>:

<part-of-speech - optional>(<data - optional>)

Example: December:dusembar:DATE(m_12).

The pronunciation is mandatory, yet is not used for the purpose of the current article. When the topic of speech recognition is covered in a later article, its use will be necessary.

The text file holding the spellings and parts-of-speech is loaded into a three-way decision tree, whose code can be found in the IndexStructure template, as described in the article: "Implementing a std::map Replacement that Never Runs Out of Memory and Instructions on Producing an ARPA Compliant Language Model to Test the Implementation". A representation of the three-way decision tree can be seen here holding the primary keys "node", "do", "did", "nesting", "null", and "void", as illustrated below:

Holding the dictionary in such a structure provides the best possible performance when tokenizing, while ensuring that the tokens generated from the process have a corresponding entry in the dictionary. Furthermore, the IndexStructure template used can hold data partially on disk and partially in memory, making it a suitable medium for that purpose.

The goal of tokenization is to create a POSList object that holds spelling and corresponding parts-of-speech for the provided syntactic stream.

Following is the partial output from the first test-case executed with DEFINITIONNEEDED = FALSE, OUTPUTTOKENS = TRUE with the non-abridged dictionary loaded.

Evaluating: "Is a red car a car?" (ID:CAR1)

Tokens before syntactic analysis:

[NOUN & "IS"], from 0, to: 1, bridge: 3, index: 0

[VERB & "IS"], from 0, to: 1, bridge: 3, index: 0

[AUX & "IS"], from 0, to: 1, bridge: 3, index: 0

[NOUN & "A"], from 3, to: 3, bridge: 5, index: 1

[PREPOSITION & "A"], from 3, to: 3, bridge: 5, index: 1

[VERB & "A"], from 3, to: 3, bridge: 5, index: 1

[DEFINITE_ARTICLE & "A"], from 3, to: 3, bridge: 5, index: 1

[ADJECTIVE & "RED"], from 5, to: 7, bridge: 9, index: 2

[NOUN & "RED"], from 5, to: 7, bridge: 9, index: 2

[VERB & "RED"], from 5, to: 7, bridge: 9, index: 2

[PROPER_NOUN & "RED"], from 5, to: 7, bridge: 9, index: 2

[NOUN & "CAR"], from 9, to: 11, bridge: 13, index: 3

[PROPER_NOUN & "CAR"], from 9, to: 11, bridge: 13, index: 3

[NOUN & "A"], from 13, to: 13, bridge: 15, index: 4

[PREPOSITION & "A"], from 13, to: 13, bridge: 15, index: 4

[VERB & "A"], from 13, to: 13, bridge: 15, index: 4

[DEFINITE_ARTICLE & "A"], from 13, to: 13, bridge: 15, index: 4

[NOUN & "CAR"], from 15, to: 17, bridge: 18, index: 5

[PROPER_NOUN & "CAR"], from 15, to: 17, bridge: 18, index: 5

[PUNCTUATION & "?"], from 18, to: 18, bridge: 0, index: 6

Tokenization can appear as an easy task, but it has hidden difficulties of its own.

- Tokenization must allow words to be included in other words (for example, 'all' and 'all-in-one').

- While tokenizing, special provisions for numbers handling, which are not part of the dictionary, must be covered.

- Allowed punctuation must be taken into account.

- Punctuation that is not allowed must be ignored, unless it is part of a dictionary entry.

- Any word from the resulting list of words in

POSList must be readily accessible based on its position in the stream and its part of speech to ensure that later processing will not be negatively affected in regards to performance.

#ifndef __POSLIST_HH__

#define __POSLIST_HH__

#include <vector>

#include <map>

#include <string>

using std::vector;

using std::map;

using std::string;

#include "POSNode.h"

#include "shared_auto_ptr.h"

#include "IndexInStream.h"

class POSList;

class POSList

{

public:

POSList(bool duplicateDefense = false);

virtual POSNode *AddToPOSList(shared_auto_ptr<POSNode> dNode);

vector<shared_auto_ptr<POSNode>>

BrigdgeableNodes(int position, shared_auto_ptr<POSNode> dPOSNode);

int GetLowestStartPosition();

int GetHighestEndPosition();

vector<shared_auto_ptr<POSNode>> AccumulateAll(

shared_auto_ptr<POSNode> dPOSNode,

POSNode::ESortType sort = POSNode::eNoSort,

int fromPos = -1, int toPos = -1);

void Output(int pos = -1);

unsigned long Count();

virtual void Clear();

void ResetPOSNodeIteration();

virtual IndexInStream<POSNode> *GetPositionInList();

virtual bool GetNext(shared_auto_ptr<POSNode> &dPOSNode);

protected:

virtual string GetLineOutput(shared_auto_ptr<POSNode> dNode);

IndexInStream<POSNode> m_position;

int m_lowestStart;

int m_highestEnd;

bool m_duplicateDefense;

map<string, int> m_uniqueEntries;

int m_count;

};

#endif

In order to have a quick retrieval mechanism, the POSList class uses the m_position member that is an IndexInStream<POSNode> instance. The IndexInStream template is used in some cases when quick retrieval of an object, in our case, a POSNode, is needed based on a position in the stream and a POSEntry type. The implementation of the IndexInStream template follows:

#ifndef __INDEXSINSTREAM_H__

#define __INDEXSINSTREAM_H__

#include <map>

#include <vector>

using std::map;

using std::vector;

#include "POSEntry.h"

template <class T> class IndexInStreamPosition

{

public:

IndexInStreamPosition();

void Add(shared_auto_ptr<T> dContent, POSEntry dPosEntry);

typedef typename map<int, vector<shared_auto_ptr<T>>>

container_map_vector_type;

typedef typename

container_map_vector_type::iterator iterator_map_vector_type;

container_map_vector_type m_content;

};

template <class T> IndexInStreamPosition<T>::IndexInStreamPosition() {}

template <class T> void IndexInStreamPosition<T>::Add(

shared_auto_ptr<T> dContent, POSEntry dPosEntry)

{

m_content[dPosEntry.GetValue()].push_back(dContent);

}

template <class T> class IndexInStream

{

public:

IndexInStream();

void Reset();

void Clear();

void Add(shared_auto_ptr<T> dObject, int position, POSEntry dPos);

bool GetNext(shared_auto_ptr<T> &dPOSNode);

vector<shared_auto_ptr<T>> ObjectsAtPosition(int position,

POSEntry dPOSEntry, int *wildcardPosition = NULL);

protected:

typedef typename map<int,

shared_auto_ptr<IndexInStreamPosition<T>>>

container_map_index_type;

typedef typename

container_map_index_type::iterator iterator_map_index_type;

typedef typename map<int,

vector<shared_auto_ptr<T>>> container_map_vector_type;

typedef typename container_map_vector_type::iterator

iterator_map_vector_type;

container_map_index_type m_allSameEventPOSList;

iterator_map_index_type m_iterator1;

iterator_map_vector_type m_iterator2;

int m_posVectorEntryIndex;

};

template <class T> IndexInStream<T>::IndexInStream(): m_posVectorEntryIndex(-1)

{

Reset();

m_allSameEventPOSList.clear();

}

template <class T> void IndexInStream<T>::Clear()

{

Reset();

}

template <class T> vector<shared_auto_ptr<T>>

IndexInStream<T>::ObjectsAtPosition(int position,

POSEntry dPOSEntry, int *wildcardPosition)

{

vector<shared_auto_ptr<T>> dReturn;

if (m_allSameEventPOSList.find(position) != m_allSameEventPOSList.end())

{

if (m_allSameEventPOSList[position]->m_content.find(dPOSEntry.GetValue()) !=

m_allSameEventPOSList[position]->m_content.end())

{

dReturn =

m_allSameEventPOSList[position]->m_content[dPOSEntry.GetValue()];

}

}

if (wildcardPosition != NULL)

{

vector<shared_auto_ptr<T>> temp =

ObjectsAtPosition(*wildcardPosition, dPOSEntry);

for (unsigned int i = 0; i < temp.size(); i++)

{

dReturn.push_back(temp[i]);

}

}

return dReturn;

}

template <class T> bool IndexInStream<T>::GetNext(shared_auto_ptr<T> &dObject)

{

while (m_iterator1 != m_allSameEventPOSList.end())

{

while ((m_posVectorEntryIndex != -1) &&

(m_iterator2 != m_iterator1->second.get()->m_content.end()))

{

if (m_posVectorEntryIndex < (int)m_iterator2->second.size())

{

dObject = m_iterator2->second[m_posVectorEntryIndex];

m_posVectorEntryIndex++;

return true;

}

else

{

m_iterator2++;

m_posVectorEntryIndex = 0;

}

}

m_iterator1++;

m_posVectorEntryIndex = 0;

if (m_iterator1 != m_allSameEventPOSList.end())

{

m_iterator2 = m_iterator1->second.get()->m_content.begin();

}

else

{

m_posVectorEntryIndex = -1;

}

}

Reset();

return false;

}

template <class T> void IndexInStream<T>::Add(

shared_auto_ptr<T> dObject, int position, POSEntry dPos)

{

if (m_allSameEventPOSList.find(position) == m_allSameEventPOSList.end())

{

m_allSameEventPOSList[position] =

shared_auto_ptr<IndexInStreamPosition<T>>(

new IndexInStreamPosition<T>());

}

m_allSameEventPOSList[position]->Add(dObject, dPos);

}

template <class T> void IndexInStream<T>::Reset()

{

m_iterator1 = m_allSameEventPOSList.begin();

m_posVectorEntryIndex = 0;

if (m_iterator1 != m_allSameEventPOSList.end())

{

m_iterator2 = m_iterator1->second.get()->m_content.begin();

}

else

{

m_posVectorEntryIndex = -1;

}

}

#endif

With the POSList class implemented, the tokenization is implemented as follows:

shared_auto_ptr<POSList> DigitalConceptBuilder::Tokenize(string dContent,

string posNumbers,

string posPunctuation,

string punctuationAllowed,

bool definitionNeeded)

{

struct TokenizationPath

{

TokenizationPath(

shared_auto_ptr<IndexStructureNodePosition<StoredPOSNode>> dPosition,

unsigned int dStartIndex): m_position(dPosition), m_startIndex(dStartIndex) {}

shared_auto_ptr<IndexStructureNodePosition<StoredPOSNode>> m_position;

unsigned int m_startIndex;

};

unsigned int dWordIndex = 0;

shared_auto_ptr<POSList> dReturn = shared_auto_ptr<POSList>(new POSList(true));

string dNumberBuffer;

vector<TokenizationPath> activePaths;

vector<POSNode*> floatingBridges;

int latestBridge = -1;

for (unsigned int i = 0; i <= dContent.length(); i++)

{

bool isAllowedPunctuation = false;

string dCharStr = "";

if (i < dContent.length())

{

dCharStr += dContent.c_str()[i];

if ((posPunctuation.length()) &&

(punctuationAllowed.find(dCharStr) != string.npos))

{

isAllowedPunctuation = true;

latestBridge = i;

}

}

activePaths.push_back(TokenizationPath(

shared_auto_ptr<IndexStructureNodePosition<StoredPOSNode>>(

new IndexStructureNodePosition<StoredPOSNode>(

m_POS_Dictionary.GetTopNode())), i));

for (unsigned int j = 0; j < activePaths.size(); j++)

{

if ((activePaths[j].m_position.get() != NULL) &&

(activePaths[j].m_position->get() != NULL) &&

(activePaths[j].m_position->get()->m_data != NULL))

{

if ((i == dContent.length()) || (IsDelimitor(dContent.c_str()[i])))

{

string dKey = activePaths[j].m_position->GetNode()->GetKey();

if ((i < dKey.length()) ||

(IsDelimitor(dContent.c_str()[i - dKey.length() - 1])))

{

StoredPOSNode *dPOS = activePaths[j].m_position->get();

for (int k = 0; k < kMAXPOSALLOWED; k++)

{

if (dPOS->m_pos[k] != -1)

{

shared_auto_ptr<POSNode> candidate =

POSNode::Construct("["+POSEntry::StatGetDescriptor(

dPOS->m_pos[k]) + " & \"" + dKey + "\"]", NULL,

activePaths[j].m_startIndex, i-1, 0,

(dPOS->m_data[k] != -1)?m_data[dPOS->m_data[k]]:"");

if (PassedDefinitionRequirement(candidate, definitionNeeded))

{

POSNode *dNewNode = dReturn->AddToPOSList(candidate);

if (dNewNode != NULL)

{

floatingBridges.push_back(dNewNode);

}

latestBridge = activePaths[j].m_startIndex;

}

}

}

}

}

}

if ((latestBridge != -1) && (((i < dContent.length()) &&

(!IsDelimitor(dContent.c_str()[i]))) ||

(i == dContent.length()) || (isAllowedPunctuation)))

{

bool atLeastOneAdded = false;

for (int l = (floatingBridges.size() - 1); l >= 0; l--)

{

if ((floatingBridges[l]->GetBridgePosition() == 0) &&

(floatingBridges[l]->GetStartPosition() != latestBridge))

{

atLeastOneAdded = true;

floatingBridges[l]->SetWordIndex(dWordIndex);

floatingBridges[l]->SetBridgePosition(latestBridge);

floatingBridges.erase(floatingBridges.begin() + l);

}

}

if (atLeastOneAdded)

{

dWordIndex++;

}

if (isAllowedPunctuation)

{

shared_auto_ptr<POSNode> candidate =

POSNode::Construct("[" + posPunctuation + " & \"" +

dCharStr + "\"]", NULL, i, i, 0);

if (PassedDefinitionRequirement(candidate, definitionNeeded))

{

POSNode *dNewNode = dReturn->AddToPOSList(candidate);

if (dNewNode != NULL)

{

floatingBridges.push_back(dNewNode);

}

latestBridge = i;

}

}

else

{

latestBridge = -1;

}

}

if (i == dContent.length())

{

break;

}

shared_auto_ptr<IndexStructureNodePosition<StoredPOSNode>>

dNewPosition = m_POS_Dictionary.ForwardNodeOneChar(

activePaths[j].m_position, toupper(dContent.c_str()[i]));

if (dNewPosition.get() != NULL)

{

activePaths[j].m_position = dNewPosition;

}

else

{

activePaths[j].m_position->Clear();

}

}

if ((posNumbers.length() > 0) && ((dContent.c_str()[i] >= '0') &&

(dContent.c_str()[i] <= '9') ||

((dContent.c_str()[i] == '.') && (dContent.length() > 0))))

{

if ((i == 0) || (dNumberBuffer.length() > 0) ||

(IsDelimitor(dContent.c_str()[i-1])))

{

dNumberBuffer += dContent.c_str()[i];

}

}

else if (dNumberBuffer.length() > 0)

{

shared_auto_ptr<POSNode> candidate =

POSNode::Construct("["+posNumbers + " & \"" +

dNumberBuffer + "\"]", NULL, i - dNumberBuffer.length(), i - 1, 0);

if (PassedDefinitionRequirement(candidate, definitionNeeded))

{

POSNode *dNewNode = dReturn->AddToPOSList(candidate);

if (dNewNode != NULL)

{

floatingBridges.push_back(dNewNode);

}

latestBridge = i - dNumberBuffer.length();

dNumberBuffer = "";

}

}

for (int j = (activePaths.size() - 1); j >= 0; j--)

{

if ((activePaths[j].m_position.get() == NULL) ||

(activePaths[j].m_position->GetNode().get() == NULL))

{

activePaths.erase(activePaths.begin() + j);

}

}

}

for (int l = (floatingBridges.size() - 1); l >= 0; l--)

{

floatingBridges[l]->SetWordIndex(dWordIndex);

}

return dReturn;

}

The purpose of Syntactic Analysis is to produce complex nodes from the atomic nodes passed in the POSList and to identify the targeted complex nodes to provide to the Conceptual Analysis process. In a CLUE, Syntactic Analysis is not the final disambiguator; rather, the Conceptual Analysis process working in conjunction with the Syntactic Analysis process shall determine which concept prevails over the others based on meaning and syntactic integrity. Consequently, there is no requirement to fully disambiguate during the Syntactic Analysis process, meaning that Syntactic Analysis produces a multitude of syntactic organizations that later need to be disambiguated by Conceptual Analysis. Prior to Syntactic Analysis, there is a lot of ambiguity because the process only holds a list of POSNodes that each have associated parts-of-speech; following Syntactic Analysis, there is less ambiguity because targeted parts-of-speech have been identified and associated with their corresponding sequences of words and parts-of-speech required to build them. Syntactic Analysis is also useful in providing Conceptual Analysis with syntactic information to rely upon in predicate calculation. As is later exposed in this article, a Predicate Builder Script is composed of code that mostly relates to syntax, and making the transition from a syntactic stream to concepts relies heavily on syntactic information produced during Syntactic Analysis.

The Syntactic Transform Script stored in SyntaxTransform.txt is central to Syntactic Analysis. The content of that file holds sequencing decisions used to build complex nodes from a configuration of complex nodes and atomic nodes found in the dictionary. The Syntactic Transform Script is composed of about 50 lines of code of a language that is created for the sole purpose of permuting nodes.

A closer look into the first three lines of code from SyntaxTransform.txt helps in understanding that language.

ADJECTIVE PHRASE CONSTRUCTION 1: ([ADVERB])[ADJECTIVE] -> ADJECTIVE_PHRASE

MAXRECURSIVITY:2

ADJECTIVE PHRASE ENUMERATION: [ADJECTIVE_PHRASE]([CONJUNCTION])

[ADJECTIVE_PHRASE] -> ADJECTIVE_PHRASE

# Verbs

MAXRECURSIVITY:1

COMPLEX VERB CONSTRUCTION: [VERB & "is" | "was" | "will" | "have" |

"has" | "to" | "will be" | "have been" |

"has been" | "to be" | "will have been" |

"be" | "would" | "could" | "should"]([ADVERB])[VERB] -> VERB

Lines have been wrapped in the above snippet to avoid scrolling.

The first line permutes all possibilities from ([ADVERB])[ADJECTIVE] and creates a resulting node that is a part-of-speech, ADJECTIVE_PHRASE. For example, tokens such as "more red" result in an ADJECTIVE_PHRASE since "more" is an ADVERB and "red" is an ADJECTIVE. But since the ADVERB node is between parentheses, it is identified as being optional. Consequently, the ADJECTIVE token "blue" also results in an ADJECTIVE_PHRASE node.

The following line, [ADJECTIVE_PHRASE]([CONJUNCTION])[ADJECTIVE_PHRASE], takes sequences of ADJECTIVE_PHRASE nodes, optionally separated by a CONJUNCTION node, and creates a new ADJECTIVE_PHRASE node with them. Such a script line is recursive since it transforms into a part-of-speech that is part of its sequencing. To that effect, in order to limit computing to a reasonable level of parsing, we may want to limit recursion as it is done on the preceding line: MAXRECURSIVITY:2. That basically states that only two successful passes at this transformation line are allowed. That means that tokens such as "more blue and less green" are transformed successfully, while tokens such as "red, some green and grey" are not transformed successfully since a recursion level of at least 3 is required for that transform to happen. Note that recursion limitations are only relevant while performing bottom-up parsing and not multi-dimensional Earley parsing. More on that later...

The next line, [VERB & "is" | "was" | "will" | "have" | "has" | "to" | "will be" | "have been" | "has been" | "to be" | "will have been" | "be" | "would" | "could" | "should"]([ADVERB])[VERB], has comparable rules, but also states some conditions in regards to spellings for the first node. The components between double-quotes are spelling conditions, where at least one of which must succeed for the node match to occur. From that line of code, a successful transform happens for the token sequence: "could never see", but fails for the token sequence: "see always ear".

Here is the complete script. It encapsulates most of the English language, although slight adaptations may be required if more complex test cases are not transformed as expected.

# NOTES ON THE SYNTAX OF THE SCRIPTING LANGUAGE

# - BEFORE THE ':' CHARACTER ON A LINE IS THE LINE NAME

# - A NODE BETWEEN PARENTHESIS IS INTERPREATED AS BEING OPTIONAL

# - CONTENT BETWEEN QUOTES RELATES TO SPELLING

# - SPELLINGS THAT BEGIN WITH A '*' CHARACTER ARE INTERPREATED

#- AS A 'START WITH' STRING MATCH

# - ON THE RIGHT SIDE OF THE CHARACTERS '->'

# - IS THE DEFINITION OF THE NEW ENTITY (AFFECTATION)

# SCRIPT

# Adjective phrase construction

ADJECTIVE PHRASE CONSTRUCTION 1: ([ADVERB])[ADJECTIVE] -> ADJECTIVE_PHRASE

MAXRECURSIVITY:2

ADJECTIVE PHRASE ENUMERATION: [ADJECTIVE_PHRASE]([CONJUNCTION])

[ADJECTIVE_PHRASE] -> ADJECTIVE_PHRASE

# Verbs

MAXRECURSIVITY:1

COMPLEX VERB CONSTRUCTION: [VERB & "is" | "was" | "will" | "have" |

"has" | "to" | "will be" | "have been" |

"has been" | "to be" | "will have been" |

"be" | "would" | "could" |

"should"]([ADVERB])[VERB] -> VERB

# Noun phrase construction

GERUNDIVE ING: [VERB & "*ing"] -> GERUNDIVE_VERB

GERUNDIVE ED: [VERB & "*ed"] -> GERUNDIVE_VERB

PLAIN NOUN PHRASE CONSTRUCTION: ([DEFINITE_ARTICLE | INDEFINITE_ARTICLE])

([ORDINAL_NUMBER])([CARDINAL_NUMBER])

([ADJECTIVE_PHRASE])[NOUN | PLURAL |

PROPER_NOUN | TIME |

DATE | PRONOUN] -> NOUN_PHRASE

MAXRECURSIVITY:2

NOUN PHRASE ENUMERATION: [NOUN_PHRASE]([CONJUNCTION])[NOUN_PHRASE] -> NOUN_PHRASE

MAXRECURSIVITY:1

# Preposition phrase construction

PREPOSITION PHRASE CONSTRUCTION: [PREPOSITION][NOUN_PHRASE] -> PREPOSITION_PHRASE

MAXRECURSIVITY:2

PREPOSITION PHRASE ENUMERATION: [PREPOSITION_PHRASE]([CONJUNCTION])

[PREPOSITION_PHRASE] -> PREPOSITION_PHRASE

# Verb phrase construction

VERB PHRASE CONSTRUCTION 1: [VERB]([ADVERB])[NOUN_PHRASE]

([PREPOSITION_PHRASE]) -> VERB_PHRASE

VERB PHRASE CONSTRUCTION 2: [VERB][PREPOSITION_PHRASE] -> VERB_PHRASE

VERB PHRASE CONSTRUCTION 3: [ADJECTIVE_PHRASE][PREPOSITION][VERB] -> VERB_PHRASE

# Noun phrase construction while considering gerundive

GERUNDIVE PHRASE CONSTRUCTION: [GERUNDIVE_VERB]([NOUN_PHRASE])

([VERB_PHRASE])([ADVERB]) -> GERUNDIVE_PHRASE

MAXRECURSIVITY:2

NOUN PHRASE CONST WITH GERUNDIVE: [NOUN_PHRASE][GERUNDIVE_PHRASE]

([GERUNDIVE_PHRASE])([GERUNDIVE_PHRASE]) -> NOUN_PHRASE

PREPOSITION PHRASE CONSTRUCTION 3: [PREPOSITION][GERUNDIVE_PHRASE] -> PREPOSITION_PHRASE

# Noun phrase construction while considering restrictive relative clauses

RESTRICTIVE RELATIVE CLAUSE: [WH_PRONOUN & "who" | "where" | "when" |

"which"][VERB_PHRASE] -> REL_CLAUSE

RESTRICTIVE RELATIVE CLAUSE 2: [PRONOUN & "that"][VERB_PHRASE] -> REL_CLAUSE

MAXRECURSIVITY:2

NOUN PHRASE WITH REL_CLAUSE: [NOUN_PHRASE][REL_CLAUSE] -> NOUN_PHRASE

# Make sure the restrictive relative clauses built are part of the verb phrases

VERB PHRASE WITH REL_CLAUSE: [VERB_PHRASE][REL_CLAUSE] -> VERB_PHRASE

VERB PHRASE CONSTRUCTION 4: [VERB][NOUN_PHRASE][REL_CLAUSE]

([PREPOSITION_PHRASE]) -> VERB_PHRASE

MAXRECURSIVITY:2

WH_PRONOUN CONSTRUCTION ENUMERATION: [WH_PRONOUN][CONJUNCTION][WH_PRONOUN] -> WH_PRONOUN

# Make sure the gerundive built are part of the verb phrases

VERB PHRASE CONSTRUCTION 5: [VERB][NOUN_PHRASE][GERUNDIVE_PHRASE]

([GERUNDIVE_PHRASE])([GERUNDIVE_PHRASE])

([PREPOSITION_PHRASE]) -> VERB_PHRASE

VERB PHRASE CONSTRUCTION 6: [VERB][NOUN_PHRASE][ADJECTIVE_PHRASE] -> VERB_PHRASE

VERB PHRASE CONSTRUCTION 7: ([VERB])[NOUN_PHRASE][VERB] -> VERB_PHRASE

MAXRECURSIVITY:2

VERB PHRASE CONSTRUCTION 8: [VERB_PHRASE][NOUN_PHRASE][GERUNDIVE_PHRASE]

([GERUNDIVE_PHRASE])([GERUNDIVE_PHRASE])

([PREPOSITION_PHRASE]) -> VERB_PHRASE

MAXRECURSIVITY:2

VERB PHRASE CONSTRUCTION 9: [VERB_PHRASE]([NOUN_PHRASE])

[ADJECTIVE_PHRASE] -> VERB_PHRASE

MAXRECURSIVITY:2

VERB PHRASE CONSTRUCTION 10: [WH_PRONOUN][VERB_PHRASE] -> VERB_PHRASE

MAXRECURSIVITY:2

VERB PHRASE CONSTRUCTION 11: [VERB_PHRASE][NOUN_PHRASE](

[PREPOSITION_PHRASE |

GERUNDIVE_PHRASE]) -> VERB_PHRASE

MAXRECURSIVITY:2

VERB PHRASE CONSTRUCTION 12: [VERB_PHRASE][PREPOSITION_PHRASE] -> VERB_PHRASE

# WH-Phrases construction

WH_NP CONSTRUCTION 1: [WH_PRONOUN][NOUN_PHRASE] -> WH_NP

WH_NP CONSTRUCTION 2: [WH_PRONOUN][ADJECTIVE]([ADVERB]) -> WH_NP

WH_NP CONSTRUCTION 3: [WH_PRONOUN][ADVERB][ADJECTIVE] -> WH_NP

MAXRECURSIVITY:2

WH_NP CONSTRUCTION 4: [WH_NP][CONJUNCTION][WH_NP | WH_PRONOUN] -> WH_NP

# Sentence construction

SENTENCE CONSTRUCTION QUESTION 1: [VERB & "is" | "was" | "were"]

[NOUN_PHRASE][NOUN_PHRASE](

[PUNCTUATION & "?"]) -> SENTENCE

SENTENCE CONSTRUCTION QUESTION 2: [VERB & "is" | "was" | "were"]

[VERB_PHRASE][VERB_PHRASE](

[PUNCTUATION & "?"]) -> SENTENCE

SENTENCE CONSTRUCTION 1: [VERB_PHRASE]([PREPOSITION & "at" | "in" | "of" |

"on" | "for" | "into" | "from"])

([PUNCTUATION & "?"]) -> SENTENCE

SENTENCE CONSTRUCTION 2: ([AUX])[NOUN_PHRASE][VERB_PHRASE | VERB](

[PREPOSITION & "at" | "in" | "of" |

"on" | "for"])([ADVERB])([PUNCTUATION & "?"]) -> SENTENCE

WH_NP SENTENCE CONSTRUCTION 1: [WH_NP][VERB_PHRASE]([PREPOSITION & "at" |

"in" | "of" | "on" | "for" | "into" |

"from"])([PUNCTUATION & "?"]) -> SENTENCE

WH_NP SENTENCE CONSTRUCTION 2: [WH_NP]([AUX])[NOUN_PHRASE][VERB_PHRASE | VERB](

[PREPOSITION & "at" | "in" | "of" | "on" | "for"])

([ADVERB])([PUNCTUATION & "?"]) -> SENTENCE

WH_NP SENTENCE CONSTRUCTION 3: [NOUN_PHRASE | VERB_PHRASE]([PREPOSITION &

"at" | "in" | "of" | "on" | "for" | "into" |

"from"])[WH_NP]([PUNCTUATION & "?"]) -> SENTENCE

MAXRECURSIVITY:2

SENTENCE CONSTRUCTION 4: [SENTENCE]([CONJUNCTION])[SENTENCE] -> SENTENCE

Lines have been wrapped in the above snippet to avoid scrolling.

One goal for the Syntactic Transform Script is to create [SENTENCE] complex nodes. Sentences are special as they are expected to encapsulate a complete thread of thoughts that can later be represented by a predicate. Although predicates can also be calculated for other complex nodes, only [SENTENCE] parts-of-speech can reliably fully be encapsulated into a predicate. That does not mean the [SENTENCE] part-of-speech is necessarily self-contained, though. For example, think about the two following [SENTENCE]s: "I saw Edith yesterday. She is feeling great." The object of knowledge "she" in the second [SENTENCE] refers to the object of knowledge "Edith" from the first [SENTENCE]. For the purpose of this sample project, although it is possible to do so, we will not keep a context between different [SENTENCE] nodes.

Permuting the Syntactic Transform Script

One of the first things done by the syntactic analyzer is to create a decision-free version of the Syntactic Transform Script. That is, it creates a version of the Syntactic Transform Script into POSDictionary_flat.txt that is functionally equivalent to SyntaxTransform.txt that does not hold any decision nodes (nodes between parenthesis in SyntaxTransform.txt, or different spellings conditions that are delimited by the pipe character within a node). The content of POSDictionary_flat.txt, as generated by the downloadable executable attached to this article, can be referred to by clicking here. In order to do that, it performs two distinct steps into POSTransformScript::ManageSyntacticDecisions, where the parameter decisionFile is the string holding the name of the Syntax Transform Script.

void POSTransformScript::ManageSyntacticDecisions(string decisionFile,

string dFlatFileName)

{

FlattenDecisions(decisionFile, "temp.txt");

FlattenDecisionNodes("temp.txt", dFlatFileName);

}

During the first step (into POSTransformScript::FlattenDecisions), each line of the file is read, and each element between parenthesis is removed from parenthesis in one line and ignored into another. Since an element between parenthesis is optional, there are indeed only two possibilities - it is included or not.

void POSTransformScript::FlattenDecisions(string dDecisionFileName,

string dFlattenDecisionFileName)

{

fstream dOutputStream;

dOutputStream.open(dFlattenDecisionFileName.c_str(),

ios::out | ios::binary | ios::trunc);

ifstream dInputStream(dDecisionFileName.c_str(), ios::in | ios::binary);

dInputStream.unsetf(ios::skipws);

string dBuffer;

char dChar;

while (dInputStream >> dChar)

{

switch (dChar)

{

case '\n':

case '\r':

if (dBuffer.find("#") != string::npos)

{

dBuffer = SubstringUpTo(dBuffer, "#");

}

if (dBuffer.length() > 0)

{

unsigned int i;

vector<string> *matches;

Permutation perms(dBuffer);

matches = perms.GetResult();

for (i = 0; i < matches->size(); i++)

{

dOutputStream.write(

matches->at(i).c_str(), matches->at(i).length());

dOutputStream.write("\r\n", strlen("\r\n"));

}

}

dBuffer = "";

break;

default:

dBuffer += dChar;

}

}

dInputStream.close();

dOutputStream.close();

}

To help the calculation of all possible permutations of a single line, the Permutation class is used. Since there may be more than one permutation on a line, the use of the recursive Permutation::FillResult method is the best approach to perform these calculations.

void Permutation::FillResult()

{

m_result.clear();

vector<string> matches;

if (PatternMatch(m_value, "(*)", &matches, true))

{

string tmpString = m_value;

size_t pos = tmpString.find("(" + matches[0] + ")");

if (SearchAndReplace(tmpString, "(" + matches[0] +

")", "", 1, true) == 1)

{

Permutation otherPerms(tmpString);

vector<string> *otherMatches = otherPerms.GetResult();

for (unsigned int i = 0; i < otherMatches->size(); i++)

{

m_result.push_back(otherMatches->at(i));

m_result.push_back(m_value.substr(0, pos) +

matches[0] + otherMatches->at(i).substr(pos));

}

}

}

else

{

m_result.push_back(m_value);

}

}

Once this first step of taking care of content between parenthesis is done, decisions within atomic nodes need to be handled. An atomic node with a decision is like [DEFINITE_ARTICLE | INDEFINITE_ARTICLE], where the part-of-speech is a choice, or [WH_PRONOUN & "who" | "where" | "when" | "which"], where the spelling constraint is also a choice. Most of the logic is in POSTransformScript::FlattenOneDecisionNodes, which itself relies heavily on POSNode::Construct for parsing.

vector<string> POSTransformScript::FlattenOneDecisionNodes(string dLine)

{

vector<string> dReturn;

vector<string> matches;

if (PatternMatch(dLine, "[*]", &matches, true) > 0)

{

for (unsigned int i = 0; i < matches.size(); i++)

{

vector<shared_auto_ptr<posnode />> possibilities;

POSNode::Construct("[" + matches[i] + "]", &possibilities);

if (possibilities.size() > 1)

{

for (unsigned int j = 0; j < possibilities.size(); j++)

{

string dLineCopy = dLine;

SearchAndReplace(dLineCopy, "[" + matches[i] + "]",

possibilities[j].get()->GetNodeDesc(), 1);

vector<string> dNewLineCopies = FlattenOneDecisionNodes(dLineCopy);

for (unsigned int k = 0; k < dNewLineCopies.size(); k++)

{

dReturn.push_back(dNewLineCopies[k]);

}

}

break;

}

}

}

if (dReturn.size() == 0)

{

dReturn.push_back(dLine);

}

return dReturn;

}

shared_auto_ptr<POSNode> POSNode::Construct(string dNodeDesc,

vector<shared_auto_ptr<POSNode>> *allPOSNodes,

unsigned int dStartPosition,

unsigned int dEndPosition,

unsigned int dBridgePosition,

string data)

{

vector<shared_auto_ptr<POSNode>> tmpVector;

if (allPOSNodes == NULL)

{

allPOSNodes = &tmpVector;

}

vector<string> matches;

vector<string> spellings;

vector<POSEntry> dPOS;

string dLeftPart = "";

if (PatternMatch(dNodeDesc, "[* & *]", &matches, false) == 2)

{

dLeftPart = matches[0];

string dRightPart = matches[1];

if (PatternMatch(dRightPart, "\"*\"", &matches, true, "|") > 0)

{

for (unsigned int i = 0; i < matches.size(); i++)

{

spellings.push_back(matches[i]);

}

}

}

else

{

matches.clear();

if (PatternMatch(dNodeDesc, "[*]", &matches, false) == 1)

{

dLeftPart = matches[0];

}

else

{

throw new exception("Formatting error");

}

}

while (dLeftPart.length())

{

matches.clear();

if (PatternMatch(dLeftPart, "* | *", &matches, false) == 2)

{

dPOS.push_back(POSEntry(matches[0]));

dLeftPart = matches[1];

}

else

{

dPOS.push_back(POSEntry(dLeftPart));

dLeftPart = "";

}

}

if ((spellings.size() > 0) && (dPOS.size() > 0))

{

for (unsigned int i = 0; i < dPOS.size(); i++)

{

for (unsigned int j = 0; j < spellings.size(); j++)

{

allPOSNodes->push_back(shared_auto_ptr<POSNode>(

new POSNode(dPOS[i], spellings[j], dStartPosition,

dEndPosition, dBridgePosition, data)));

}

}

}

else if (dPOS.size() > 0)

{

for (unsigned int i = 0; i < dPOS.size(); i++)

{

allPOSNodes->push_back(shared_auto_ptr<POSNode>(

new POSNode(dPOS[i], "", dStartPosition,

dEndPosition, dBridgePosition, data)));

}

}

if (allPOSNodes->size() > 0)

{

return shared_auto_ptr<POSNode>(allPOSNodes->at(0));

}

else

{

return NULL;

}

}

Loading the Decision-free Syntax Transform Script

It is only when POSDictionary_flat.txt has been generated that a POSTransformScript object is created and the content from POSDictionary_flat.txt is loaded into it by calling the POSTransformScript::BuildFromFile method. While referring to the class definition that follows, we can see it is fairly simple. A POSTransformScript is simply composed of a vector of POSTransformScriptLines.

#ifndef __POSTRANSFORM_H__

#define __POSTRANSFORM_H__

#include <vector>

#include <string>

#include <stack>

#include "POSNode.h"

#include "POSList.h"

#include "shared_auto_ptr.h"

#include "StoredScriptLine.h"

using std::vector;

using std::string;

using std::stack;

class POSTransformScriptLine

{

public:

friend class POSTransformScript;

int GetLineNumber() const;

int GetRecursivity() const;

string GetLineName() const;

string ReconstructLine() const;

shared_auto_ptr<POSNode> GetTransform() const;

vector<shared_auto_ptr<POSNode>> GetSequence() const;

bool MustRecurse() const;

protected:

POSTransformScriptLine(string lineName,

vector<shared_auto_ptr<POSNode>> dSequence,

shared_auto_ptr<POSNode> dTransform,

int recursivity,

string originalLine,

int lineNumber);

vector<shared_auto_ptr<POSNode>> m_sequence;

shared_auto_ptr<POSNode> m_transform;

string m_lineName;

string m_originalLineContent;

int m_recursivity;

int m_lineNumber;

bool m_mustRecurse;

};

class POSTransformScript

{

public:

friend class POSTransformLine;

POSTransformScript();

void BuildFromFile(string dFileName);

vector<shared_auto_ptr<POSTransformScriptLine>> *GetLines();

string GetDecisionTrees(string dTreesType);

void KeepDecisionTrees(string dTreesType, string dValue);

bool IsDerivedPOS(POSEntry dPOSEntry) const;

static void ManageSyntacticDecisions(string decisionFile,

string dFlatFileName);

private:

static void FlattenDecisions(string dDecisionFileName,

string dFlattenDecisionFileName);

static void FlattenDecisionNodes(string dDecisionFileName,

string dFlattenDecisionFileName);

static vector<string> FlattenOneDecisionNodes(string dLine);

vector<shared_auto_ptr<POSTransformScriptLine>> m_lines;

vector<bool> m_derived;

map<string, string> m_decisionTrees;

};

#endif

Parsing Tokens

Once the Syntactic Transform Script is held in an object in memory, tokens parsing is the next step that is required. That transforms atomic parts-of-speech into complex ones, which in turn are the ones which are later to be relevant to conceptual analysis processing. Since the topic of parsing is subject to a great diversity in regard to implementation algorithms, an abstract interface is preferred.

#ifndef __PARSER_H__

#define __PARSER_H__

#include "shared_auto_ptr.h"

#include "POSTransformScript.h"

class Parser

{

public:

Parser(shared_auto_ptr<POSTransformScript> dScript);

virtual ~Parser();

virtual void ParseList(shared_auto_ptr<POSList> dList) = 0;

protected:

shared_auto_ptr<POSTransformScript> m_script;

};

#endif

The Parser abstract class keeps a reference to the POSTransformScript object that has the details regarding transformations that can happen. The ParseList method is the one responsible for performing the actual transformations for the tokens passed as a parameter in dList.

The Bottom-up Parser: A Simple Parser Implementation

Bottom-up parsing (also called shift-reduce parsing) is a strategy for parsing sentences that attempt to construct a parse tree, beginning at the leaf nodes and working "bottom-up" towards the root. It has the advantage of being a simple algorithm which is typically easy to implement, with the disadvantage of resulting in slow calculations, since all nodes need to be constructed prior to getting to the root node.

#include "BottomUpParser.h"

#include "CalculationContext.h"

#include "POSTransformScript.h"

#include "StringUtils.h"

#include "DigitalConceptBuilder.h"

#include "DebugDefinitions.h"

#ifdef _DEBUG

#define new DEBUG_NEW

#undef THIS_FILE

static char THIS_FILE[] = __FILE__;

#endif

BottomUpParser::BottomUpParser(

shared_auto_ptr<POSTransformScript> dScript): Parser(dScript) {}

BottomUpParser::~BottomUpParser() {}

void BottomUpParser::ParseList(shared_auto_ptr<POSList> dList)

{

string dLastOutput = "";

for (unsigned int i = 0; i < m_script->GetLines()->size(); i++)

{

if (DigitalConceptBuilder::

GetCurCalculationContext()->SyntacticAnalysisTrace())

{

if (i == 0)

{

originalprintf("\nBottom-up Syntactic trace:\n\n");

}

for (unsigned int j = 0; j < dLastOutput.length(); j++)

{

originalprintf("\b");

originalprintf(" ");

originalprintf("\b");

}

dLastOutput = FormattedString(

"%d of %d. line \"%s\" (%lu so far)",

i+1, m_script->GetLines()->size(),

m_script->GetLines()->at(i)->GetLineName().c_str(),

dList->Count());

originalprintf(dLastOutput.c_str());

}

BottomUpLineParse(dList, m_script->GetLines()->at(i));

}

for (unsigned int j = 0; j < dLastOutput.length(); j++)

{

originalprintf("\b");

originalprintf(" ");

originalprintf("\b");

}

}

int BottomUpParser::BottomUpLineParse(shared_auto_ptr<POSList> dList,

shared_auto_ptr<POSTransformScriptLine> dLine,

int fromIndex,

int atPosition,

int lowestPos,

string cummulatedString,

vector<shared_auto_ptr<POSNode>> *cummulatedNodes)

{

vector<shared_auto_ptr<POSNode>> childNodes;

if (cummulatedNodes == NULL)

{

cummulatedNodes = &childNodes;

}

int dTransformCount = 0;

if (fromIndex == -1)

{

fromIndex = 0;

}

int fromPosition = atPosition;

int toPosition = atPosition;

if (atPosition == -1)

{

fromPosition = dList->GetLowestStartPosition();

toPosition = dList->GetHighestEndPosition();

}

for (int i = 0; i < dLine->GetRecursivity(); i++)

{

dTransformCount = 0;

for (int pos = fromPosition; pos <= toPosition; pos++)

{

vector<shared_auto_ptr<POSNode> > dNodes =

dList->BrigdgeableNodes(pos, dLine->GetSequence()[fromIndex]);

for (unsigned int j = 0; j < dNodes.size(); j++)

{

if (fromIndex == (dLine->GetSequence().size() - 1))

{

dTransformCount++;

string dSpelling = cummulatedString + " " +

dNodes[j]->GetSpelling();

RemovePadding(dSpelling, ' ');

shared_auto_ptr<POSNode> dNewNode(new POSNode(

dLine->GetTransform()->GetPOSEntry(), dSpelling,

(lowestPos == -1)?pos:lowestPos, dNodes[j]->GetEndPosition(),

dNodes[j]->GetBridgePosition()));

dNewNode->SetConstructionLine(dLine->GetLineName());

for (unsigned int k = 0; k < cummulatedNodes->size(); k++)

{

cummulatedNodes->at(k)->SetParent(dNewNode);

}

dNodes[j]->SetParent(dNewNode);

dList->AddToPOSList(dNewNode);

}

else

{

if (dNodes[j]->GetBridgePosition() != 0)

{

int sizeBefore = cummulatedNodes->size();

cummulatedNodes->push_back(dNodes[j]);

dTransformCount += BottomUpLineParse(dList, dLine,

fromIndex+1, dNodes[j]->GetBridgePosition(),

(lowestPos == -1)?pos:lowestPos, cummulatedString +

" " + dNodes[j]->GetSpelling(), cummulatedNodes);

while ((int)cummulatedNodes->size() > sizeBefore)

{

cummulatedNodes->erase(cummulatedNodes->begin() +

cummulatedNodes->size() - 1);

}

}

}

}

}

if ((dTransformCount == 0) || (!dLine->MustRecurse()))

{

break;

}

}

return dTransformCount;

}

The Multi-dimensional Earley Parser: A More Efficient Parsing Method

An Earley parser is essentially a generator that builds left-most derivations, using a given set of sequence productions. The parsing functionality arises because the generator keeps track of all possible derivations that are consistent with the input up to a certain point. As more and more of the input is revealed, the set of possible derivations (each of which corresponds to a parse) can either expand as new choices are introduced, or shrink as a result of resolved ambiguities. Typically, an Earley parser does not deal with an ambiguous input as it requires a one-dimensional sequence of tokens. But, in our case, there is ambiguity since each word may have generated multiple tokens that have different parts-of-speech. This is the reason why the algorithm is adapted and named a multi-dimensional Earley parser.

#include "MultiDimEarleyParser.h"

#include "CalculationContext.h"

#include "POSTransformScript.h"

#include "StringUtils.h"

#include "DigitalConceptBuilder.h"

#include "DebugDefinitions.h"

#ifdef _DEBUG

#define new DEBUG_NEW

#undef THIS_FILE

static char THIS_FILE[] = __FILE__;

#endif

class ScriptMultiDimEarleyInfo

{

public:

map<int, vector<shared_auto_ptr<POSTransformScriptLine>>>

m_startPOSLines;

};

class UnfinishedTranformLine

{

public:

UnfinishedTranformLine();

UnfinishedTranformLine(

shared_auto_ptr<POSTransformScriptLine> dTransformLine);

shared_auto_ptr<POSTransformScriptLine> m_transformLine;

vector<shared_auto_ptr<POSNode>> m_cummulatedNodes;

};

map<uintptr_t, ScriptMultiDimEarleyInfo>

MultiDimEarleyParser::m_scriptsExtraInfo;

MultiDimEarleyParser::MultiDimEarleyParser(

shared_auto_ptr<POSTransformScript> dScript): Parser(dScript) {}

MultiDimEarleyParser::~MultiDimEarleyParser() {}

void MultiDimEarleyParser::ParseList(shared_auto_ptr<POSList> dList)

{

m_listParsed = dList;

MultiDimEarleyParser::BuildDecisionsTree(m_script);

shared_auto_ptr<POSNode> dPOSNode;

m_derivedNodesLookup.Clear();

m_derivedNodesProduced.Clear();

m_targetNodesProduced.Clear();

dList->GetPositionInList()->Reset();

while (dList->GetPositionInList()->GetNext(dPOSNode))

{

NodeDecisionProcessing(dPOSNode);

ProcessAgainstUnfinishedLines(dPOSNode);

}

while ((m_delayedSuccessCondition.size() > 0) &&

(m_targetNodesProduced.Count() <

(unsigned long)DigitalConceptBuilder::

GetCurCalculationContext()->GetMaxSyntaxPermutations()))

{

SuccessNodeCondition(

m_delayedSuccessCondition.front().m_partialLine,

m_delayedSuccessCondition.front().m_POSNode);

m_delayedSuccessCondition.pop();

}

m_targetNodesProduced.GetPositionInList()->Reset();

while (m_targetNodesProduced.GetPositionInList()->GetNext(dPOSNode))

{

dList->AddToPOSList(dPOSNode);

}

m_derivedNodesProduced.GetPositionInList()->Reset();

while (m_derivedNodesProduced.GetPositionInList()->GetNext(dPOSNode))

{

dList->AddToPOSList(dPOSNode);

}

m_decisionTrees.clear();

m_derivedNodesLookup.Clear();

m_targetNodesProduced.Clear();

Trace("");

}

void MultiDimEarleyParser::SuccessNodeCondition(

shared_auto_ptr<UnfinishedTranformLine> dPartialLine,

shared_auto_ptr<POSNode> dPOSNode)

{

if (m_targetNodesProduced.Count() >=

(unsigned long)DigitalConceptBuilder::

GetCurCalculationContext()->GetMaxSyntaxPermutations())

{

return;

}

dPartialLine->m_cummulatedNodes.push_back(dPOSNode);

if (dPartialLine->m_cummulatedNodes.size() ==

dPartialLine->m_transformLine->GetSequence().size())

{

shared_auto_ptr<POSNode> dPOSNode(new POSNode(

dPartialLine->m_transformLine->GetTransform()->GetPOSEntry()));

dPOSNode->SetConstructionLine(

dPartialLine->m_transformLine->GetLineName());

unsigned int i;

for (i = 0; i < dPartialLine->m_cummulatedNodes.size(); i++)

{

dPartialLine->m_cummulatedNodes[i]->SetParent(dPOSNode);

}

dPOSNode->UpdateFromChildValues();

ProcessAgainstUnfinishedLines(dPOSNode);

m_derivedNodesProduced.AddToPOSList(dPOSNode);

NodeDecisionProcessing(dPOSNode);

if ((dPOSNode->GetEndPosition() - dPOSNode->GetStartPosition() >=

(unsigned int)(m_listParsed->GetHighestEndPosition() -

m_listParsed->GetLowestStartPosition() - 1)) &&

(DigitalConceptBuilder::

GetCurCalculationContext()->GetTargetPOS().GetValue() ==

dPOSNode->GetPOSEntry().GetValue()))

{

m_targetNodesProduced.AddToPOSList(dPOSNode);

}

}

else if (dPOSNode->GetBridgePosition() > dPOSNode->GetEndPosition())

{

shared_auto_ptr<UnfinishedTranformLine> usePartialLine = dPartialLine;

if (m_script->IsDerivedPOS(

dPartialLine->m_transformLine->GetSequence().at(

dPartialLine->m_cummulatedNodes.size())->GetPOSEntry()))

{

shared_auto_ptr<UnfinishedTranformLine>

dPartialLineCopy(new UnfinishedTranformLine());

*dPartialLineCopy.get() = *dPartialLine.get();

m_derivedNodesLookup.Add(dPartialLineCopy, dPOSNode->GetBridgePosition(),

dPartialLine->m_transformLine->GetSequence().at(

dPartialLine->m_cummulatedNodes.size())->GetPOSEntry());

usePartialLine = dPartialLineCopy;

}

shared_auto_ptr<POSNode> dTestNode =

usePartialLine->m_transformLine->GetSequence()

[usePartialLine->m_cummulatedNodes.size()];

BridgeableNodesProcessing(dPOSNode->GetBridgePosition(),

dTestNode, &usePartialLine->m_cummulatedNodes,

usePartialLine->m_transformLine);

}

}

void MultiDimEarleyParser::ProcessAgainstUnfinishedLines(

shared_auto_ptr<POSNode> dPOSNode)

{

vector<shared_auto_ptr<UnfinishedTranformLine>> dUnfinishedLines =

m_derivedNodesLookup.ObjectsAtPosition(dPOSNode->GetStartPosition(),

dPOSNode->GetPOSEntry(), NULL);

for (unsigned long i = 0; i < dUnfinishedLines.size(); i++)

{

if (dPOSNode->Compare(*dUnfinishedLines[i]->

m_transformLine->GetSequence()[dUnfinishedLines[i]->

m_cummulatedNodes.size()].get()) == 0)

{

shared_auto_ptr<UnfinishedTranformLine>

dPartialLineCopy(new UnfinishedTranformLine());

*dPartialLineCopy.get() = *dUnfinishedLines[i].get();

m_delayedSuccessCondition.push(

DelayedSuccessCondition(dPartialLineCopy, dPOSNode));

}

}

}

void MultiDimEarleyParser::BridgeableNodesProcessing(unsigned int dPosition,

shared_auto_ptr<POSNode> dTestNode,

vector<shared_auto_ptr<POSNode>> *resolvedNodes,

shared_auto_ptr<POSTransformScriptLine> scriptLine)

{

if (m_script->IsDerivedPOS(dTestNode->GetPOSEntry()))

{

vector<shared_auto_ptr<POSNode>> dNodes =

m_derivedNodesProduced.BrigdgeableNodes(dPosition, dTestNode);

for (unsigned int j = 0; j < dNodes.size(); j++)

{

OneNodeCompareInDecisionTree(dNodes[j], scriptLine, resolvedNodes);

}

}

vector<shared_auto_ptr<POSNode>> dNodes =

m_listParsed->BrigdgeableNodes(dPosition, dTestNode);

for (unsigned int j = 0; j < dNodes.size(); j++)

{

OneNodeCompareInDecisionTree(dNodes[j], scriptLine, resolvedNodes);

}

}

void MultiDimEarleyParser::NodeDecisionProcessing(unsigned int dPosition,

IndexStructureNode<StoredScriptLine> *fromNode,

vector<shared_auto_ptr<POSNode>> *resolvedNodes)

{

vector<shared_auto_ptr<POSTransformScriptLine>> *options =

SetUpForNodeProcessing(resolvedNodes->at(0)->GetPOSEntry());

if (options != NULL)

{

for (unsigned int i = 0; i < options->size(); i++)

{

shared_auto_ptr<POSTransformScriptLine> dAssociatedLine = options->at(i);

shared_auto_ptr<POSNode> dTestNode =

dAssociatedLine->GetSequence()[resolvedNodes->size()];

BridgeableNodesProcessing(dPosition, dTestNode,

resolvedNodes, options->at(i));

}

}

}

void MultiDimEarleyParser::OneNodeCompareInDecisionTree(shared_auto_ptr<POSNode> dPOSNode,

shared_auto_ptr<POSTransformScriptLine> scriptLine,

vector<shared_auto_ptr<POSNode>> *resolvedNodes)

{

unsigned int node2Compare = 0;

if (resolvedNodes != NULL)

{

node2Compare = resolvedNodes->size();

}

if (dPOSNode->Compare(*scriptLine->GetSequence()[node2Compare].get()) == 0)

{

shared_auto_ptr<UnfinishedTranformLine>

dPartialLine(new UnfinishedTranformLine(scriptLine));

if (resolvedNodes != NULL)

{

dPartialLine->m_cummulatedNodes = *resolvedNodes;

SuccessNodeCondition(dPartialLine, dPOSNode);

}

else

{

m_delayedSuccessCondition.push(

DelayedSuccessCondition(dPartialLine, dPOSNode));

}

}

}

vector<shared_auto_ptr<POSTransformScriptLine>>

*MultiDimEarleyParser::SetUpForNodeProcessing(POSEntry dPOSEntryTree)

{

if (m_scriptsExtraInfo.find((uintptr_t)m_script.get()) != m_scriptsExtraInfo.end())

{

if (m_scriptsExtraInfo[(uintptr_t)m_script.get()].m_startPOSLines.find(

dPOSEntryTree.GetValue()) !=

m_scriptsExtraInfo[(unsigned long)m_script.get()].m_startPOSLines.end())

{

return &m_scriptsExtraInfo[

(uintptr_t)m_script.get()].m_startPOSLines[dPOSEntryTree.GetValue()];

}

}

return NULL;

}

void MultiDimEarleyParser::NodeDecisionProcessing(

shared_auto_ptr<POSNode> dPOSNode,

IndexStructureNode<StoredScriptLine> *fromNode,

vector<shared_auto_ptr<POSNode>> *resolvedNodes)

{

Trace(FormattedString("NodeDecisionProcessing for node %s",

dPOSNode->GetNodeDesc().c_str()));

vector<shared_auto_ptr<POSTransformScriptLine>> *options =

SetUpForNodeProcessing(dPOSNode->GetPOSEntry());

if (options != NULL)

{

for (unsigned int i = 0; i < options->size(); i++)

{

OneNodeCompareInDecisionTree(dPOSNode,

options->at(i), resolvedNodes);

}

}

}

void MultiDimEarleyParser::BuildDecisionsTree(

shared_auto_ptr<POSTransformScript> dScript)

{

if (m_scriptsExtraInfo.find((uintptr_t)dScript.get()) ==

m_scriptsExtraInfo.end())

{

fstream dOutputStream;

dOutputStream.open("LineNumbers.txt",

ios::out | ios::binary | ios::trunc);

for (unsigned int i = 0; i < dScript->GetLines()->size(); i++)

{

string sequence;

for (unsigned int j = 0; j <

dScript->GetLines()->at(i)->GetSequence().size(); j++)

{

sequence +=

dScript->GetLines()->at(i)->GetSequence().at(j)->GetNodeDesc();

}

dOutputStream << FormattedString("%d. %s: %s -> %s\r\n", i,

dScript->GetLines()->at(i)->GetLineName().c_str(), sequence.c_str(),

dScript->GetLines()->at(i)->GetTransform()->GetNodeDesc().c_str()).c_str();

m_scriptsExtraInfo[(uintptr_t)dScript.get()].m_startPOSLines[

dScript->GetLines()->at(i)->GetSequence().at(0)->

GetPOSEntry().GetValue()].push_back(dScript->GetLines()->at(i));

}

dOutputStream.close();

}

}

void MultiDimEarleyParser::Trace(string dTraceString)

{

if (DigitalConceptBuilder::GetCurCalculationContext()->SyntacticAnalysisTrace())

{

for (unsigned int j = 0; j < m_lastTraceOutput.length(); j++)

{

originalprintf("\b");

originalprintf(" ");

originalprintf("\b");

}

if (dTraceString.length() > 0)

{

originalprintf(dTraceString.c_str());

}

m_lastTraceOutput = dTraceString;

}

}

UnfinishedTranformLine::UnfinishedTranformLine() {}

UnfinishedTranformLine::UnfinishedTranformLine(

shared_auto_ptr<POSTransformScriptLine> dTransformLine):

m_transformLine(dTransformLine) {}

DelayedSuccessCondition::DelayedSuccessCondition(

shared_auto_ptr<UnfinishedTranformLine> dPartialLine,

shared_auto_ptr<POSNode> dPOSNode):

m_partialLine(dPartialLine), m_POSNode(dPOSNode) {}

Syntactic Analysis calculates complex nodes from an input of atomic nodes, yet it provides the algorithm with an ambiguous set of results.

The following content is taken from SimpleTestCases.txt. MAXSYNTAXPERMUTATIONS and MAXCONCEPTUALANALYSIS are two key variables used to determine how many syntactic permutations are calculated in a conceptual representation.

...

# MAXSYNTAXPERMUTATIONS

# _____________________

#

# Possible values: Numeric value

#

# The maximal amount of TARGETPOS that syntactic analysis should

# produce.

MAXSYNTAXPERMUTATIONS = 200

#-------------------------------------------------------------------

# MAXCONCEPTUALANALYSIS

# _____________________

#

# Possible values: Numeric value

#

# The maximal amount of TARGETPOS that conceptual analysis should

# analyze. From the MAXSYNTAXPERMUTATIONS sequences of TARGETPOS that

# are sorted, only the fist MAXCONCEPTUALANALYSIS will be analyzed.

MAXCONCEPTUALANALYSIS = 20

...

Under the default condition of SimpleTestCases.txt left untouched, but with OUTPUTSYNTAXPERMUTATIONS set to TRUE, the following output is generated for the 18th test-case:

Evaluating: "Is a car that is not red a blue car?" (ID:CAR18)

CAR18:1. {SENTENCE: IS[VERB] {NOUN_PHRASE: {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {REL_CLAUSE: THAT[PRONOUN]

{VERB_PHRASE: IS[VERB] NOT[ADVERB] {NOUN_PHRASE: RED[NOUN] } } } }

{NOUN_PHRASE: A[DEFINITE_ARTICLE] {ADJECTIVE_PHRASE:

BLUE[ADJECTIVE] } CAR[NOUN] } ?[PUNCTUATION] }

CAR18:2. {SENTENCE: IS[VERB] {NOUN_PHRASE: {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {REL_CLAUSE: THAT[PRONOUN]

{VERB_PHRASE: IS[VERB] NOT[ADVERB] {NOUN_PHRASE: RED[NOUN] } } } }

{NOUN_PHRASE: {NOUN_PHRASE: A[DEFINITE_ARTICLE] BLUE[NOUN] }

{NOUN_PHRASE: CAR[NOUN] } } ?[PUNCTUATION] }

CAR18:3. {SENTENCE: {VERB_PHRASE: IS[VERB] {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {REL_CLAUSE: THAT[PRONOUN]

{VERB_PHRASE: IS[VERB] NOT[ADVERB] {NOUN_PHRASE:

{NOUN_PHRASE: RED[NOUN] } {NOUN_PHRASE: A[DEFINITE_ARTICLE]

{ADJECTIVE_PHRASE: BLUE[ADJECTIVE] } CAR[NOUN] } } } } } ?[PUNCTUATION] }

CAR18:4. {SENTENCE: IS[VERB] {NOUN_PHRASE: {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {REL_CLAUSE: THAT[PRONOUN]

{VERB_PHRASE: {VERB_PHRASE: IS[VERB] NOT[ADVERB] {NOUN_PHRASE: RED[NOUN] } }

{NOUN_PHRASE: A[DEFINITE_ARTICLE] BLUE[NOUN] } } } }

{NOUN_PHRASE: CAR[NOUN] } ?[PUNCTUATION] }

CAR18:5. {SENTENCE: IS[AUX] {NOUN_PHRASE: {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {NOUN_PHRASE: THAT[PRONOUN] } }

{VERB_PHRASE: {VERB_PHRASE: IS[VERB] NOT[ADVERB]

{NOUN_PHRASE: RED[NOUN] } } {NOUN_PHRASE: A[DEFINITE_ARTICLE]

{ADJECTIVE_PHRASE: BLUE[ADJECTIVE] } CAR[NOUN] } } ?[PUNCTUATION] }

CAR18:6. {SENTENCE: {VERB_PHRASE: IS[VERB] {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {REL_CLAUSE: THAT[PRONOUN]

{VERB_PHRASE: {VERB_PHRASE: IS[VERB] NOT[ADVERB]

{NOUN_PHRASE: RED[NOUN] } } {NOUN_PHRASE: A[DEFINITE_ARTICLE]

{ADJECTIVE_PHRASE: BLUE[ADJECTIVE] } CAR[NOUN] } } } } ?[PUNCTUATION] }

CAR18:7. {SENTENCE: IS[AUX] {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {VERB_PHRASE: {VERB_PHRASE:

{VERB_PHRASE: {NOUN_PHRASE: THAT[PRONOUN] } IS[VERB] }

{ADJECTIVE_PHRASE: NOT[ADVERB] RED[ADJECTIVE] } }

{NOUN_PHRASE: A[DEFINITE_ARTICLE]

{ADJECTIVE_PHRASE: BLUE[ADJECTIVE] } CAR[NOUN] } } ?[PUNCTUATION] }

CAR18:8. {SENTENCE: IS[AUX] {NOUN_PHRASE: {NOUN_PHRASE:

A[DEFINITE_ARTICLE] CAR[NOUN] } {NOUN_PHRASE: THAT[PRONOUN] } }

{VERB_PHRASE: IS[VERB] NOT[ADVERB] {NOUN_PHRASE: {NOUN_PHRASE: RED[NOUN] }

{NOUN_PHRASE: A[DEFINITE_ARTICLE]

{ADJECTIVE_PHRASE: BLUE[ADJECTIVE] } CAR[NOUN] } } } ?[PUNCTUATION] }

CAR18:9. {SENTENCE: IS[VERB] {NOUN_PHRASE: {NOUN_PHRASE:

{NOUN_PHRASE: A[DEFINITE_ARTICLE] CAR[NOUN] }

{REL_CLAUSE: THAT[PRONOUN] {VERB_PHRASE: IS[VERB] NOT[ADVERB]

{NOUN_PHRASE: RED[NOUN] } } } }

{NOUN_PHRASE: A[DEFINITE_ARTICLE] BLUE[NOUN] } }

{NOUN_PHRASE: CAR[NOUN] } ?[PUNCTUATION] }

CAR18:10. {SENTENCE: {VERB_PHRASE: {VERB_PHRASE: IS[VERB]

{NOUN_PHRASE: A[DEFINITE_ARTICLE] CAR[NOUN] }