Introduction

This article builds on a previous one (see Introduction to OpenCV: Playing and Manipulating Video files), but I have tried to organize the contents so that they can be understood without reading part one of this tutorial. In fact, the current article might even be simpler than its predecessor and would possibly have made a better part one. Still, I am optimistic that the interested reader will not be too puzzled by this and will consider it a minor problem.

As the title indicates, this article mainly concerns the Open Source Computer Vision Library (OpenCV), a software platform that provides a great number of high-level programming tools for loading, saving, displaying and manipulating images and videos. It is, of course, impossible to describe every aspect of this library in a brief tutorial like this, so I will only discuss a small selection of topics. If you need a more extensive overview, are interested in the mathematical background, or are looking for more details on the topics touched on here, you might find the answers in a book on OpenCV such as “Learning OpenCV” by Gary Bradski and Adrian Kaehler, from which I have taken part of my “wisdom”.

The OpenCV function calls are pure C code, but I packed them into two C++ classes (one for image operations, and one for video operations that inherits the methods of the image class). Some people might consider this mixture of programming conventions a faux pas. I, however, regard it as a good way to keep the code tidy and to have most of it in one place. Because the OpenCV library offers only limited means of creating a graphical interface, I built a Windows GUI around the OpenCV code (by creating a Win32 program in Visual C++ 2010 Express Edition).

If you want to turn the presented code into an executable program, you will have to install the OpenCV libraries (get the latest version at, e.g., http://opencv.willowgarage.com) and include the correct lib files and headers in your project. I give a stepwise description of all this at the top of the UsingOpenCV.cpp source code file. Graphical illustrations can be very helpful in finding one’s way through this and, luckily, further explanations that also offer screenshots can be found on the web (e.g. by searching for ‘Using OpenCV in VC++2010’).

In the first part of this tutorial I

will give a basic description of how to handle events (in particular mouse

events) in OpenCV, then there will be some words on a

selection of OpenCV commands which can be used to

manipulate images, and finally I will show how to turn visual input into video

formats that can be read by most standard video players. I am not an expert in

the mathematical details of the presented contents. What I can give is a more

or less superficial overview of how the presented functions work and how they

can be used in a program.

Processing mouse events with the help of OpenCV

If you press a key or a button on the computer mouse, or simply move the mouse pointer over a window, the message loop in a Win32 program processes such events and makes them accessible to the programmer. For those who are experienced in developing interactive software, handling such messages is daily business. Since OpenCV has its own commands to create windows, it also offers its own procedures to handle events for such windows. In most cases the OpenCV-specific code for event handling is easy to implement and should be preferred to processing messages coming from OpenCV windows via the standard message loop (something I have not tried, but which can surely be done). The code below concerns mouse events, but for the sake of completeness I also briefly discuss the handling of keyboard input.

Keyboard events can be processed very easily. The command cvWaitKey(timespan); waits a certain time for a key to be pressed and returns this key as an integer value (ASCII code), or -1 if no key was pressed in time. Therefore, if you want to process keyboard events, set up a while loop, insert the line key = cvWaitKey(timespan); and then check the value of the variable key, for example, if(key == 'q'){ do something }. Please note that cvWaitKey(); also makes the program wait for the specified period of time (e.g. cvWaitKey(100) makes the program wait for up to 100 milliseconds). This is necessary, for example, to process images at a certain frame rate (find examples in the previous article and below, where I discuss the code for saving a video).

Mouse events require more attention, although they are not very difficult to implement either. Two steps are necessary:

First, you will have to invoke

cvSetMouseCallback(const char* window_name, CvMouseCallback my_Mouse_Handler, void* param);

in order to register a callback. The first argument of this function is the name of the window to which the callback is attached (a window created with cvNamedWindow("window name",0);). The second argument is the callback function itself, and the third argument is a pointer to user data that is passed on to the callback, for instance, the image to which the callback is applied.

Afterwards, you have to set up a mouse handler function (the second argument of cvSetMouseCallback). This function, which I named

my_Mouse_Handler(int events, int x, int y, int flags, void* param)

in my program, takes five arguments.

The first and most important argument is an integer variable that can have one of the following values (ranging from 0 to 9): CV_EVENT_MOUSEMOVE (= mouse pointer moves over the specified window), CV_EVENT_LBUTTONDOWN (= left mouse button is pressed), CV_EVENT_RBUTTONDOWN (= right mouse button is pressed), CV_EVENT_MBUTTONDOWN (= middle mouse button is pressed), CV_EVENT_LBUTTONUP, CV_EVENT_RBUTTONUP, CV_EVENT_MBUTTONUP (= events that occur after the corresponding button has been released), CV_EVENT_LBUTTONDBLCLK, CV_EVENT_RBUTTONDBLCLK, and CV_EVENT_MBUTTONDBLCLK (= when the user double-clicks the corresponding button).

The second and the third argument of the callback function are the x (= horizontal) and the y (=

vertical) position of the mouse-pointer with the upper left corner of a window being the reference point (0,0).

The fourth argument is useful if you want to access additional information during a mouse event. It is a bit field in which several flags can be set at the same time. CV_EVENT_FLAG_LBUTTON, CV_EVENT_FLAG_RBUTTON, and CV_EVENT_FLAG_MBUTTON indicate that the user is holding down the corresponding button. This might be needed if you want to know whether a button is pressed while the mouse pointer moves (e.g., drag and drop operations). CV_EVENT_FLAG_CTRLKEY, CV_EVENT_FLAG_SHIFTKEY, and CV_EVENT_FLAG_ALTKEY indicate that the Ctrl, Shift, or Alt key was held down during a mouse event.

The final argument is a void pointer for any additional information that will be needed. In the code example below I use this argument to obtain a pointer to

the image the event handler is operating on.

Using the code

As already mentioned above, I packed the OpenCV-specific code into two classes. The first class contains some methods for image operations and the mouse handler. The second one inherits the methods of the image class but also contains code for processing videos. Please note that within a class a callback function and the variables it uses have to be declared static.

The program I wrote works on videos. It provides access to the video data; then it loads the first frame of the video and presents it in a window of its own. Mouse

operations for which the program implements a handler are done on the image shown in this window.

The most important steps are:

- Capture the video file with cvCreateFileCapture(); by invoking Get_Video_from_File(char* file_name);, which I defined in the Video_OP class. The contents of this method are shown in the following code sample. It should also give you a “feeling” for how to use some OpenCV commands (like cvNamedWindow();, for instance).

bool Video_OP::Get_Video_from_File(char* file_name)
{
    if (!file_name)
        return false;

    // Open the video file; the result is NULL if the file cannot be read.
    my_p_capture = cvCreateFileCapture(file_name);
    if (!my_p_capture)
        return false;

    // Grab the first frame. The buffer is owned by the capture; do not release it.
    this->my_grabbed_frame = cvQueryFrame(my_p_capture);
    if (!this->my_grabbed_frame)
        return false;

    this->captured_size.width  = (int) cvGetCaptureProperty(my_p_capture, CV_CAP_PROP_FRAME_WIDTH);
    this->captured_size.height = (int) cvGetCaptureProperty(my_p_capture, CV_CAP_PROP_FRAME_HEIGHT);
    this->my_total_frame       = (int) cvGetCaptureProperty(my_p_capture, CV_CAP_PROP_FRAME_COUNT);

    // Show the first frame and attach the mouse handler to it.
    cvNamedWindow("choose area", CV_WINDOW_AUTOSIZE);
    cvShowImage("choose area", my_grabbed_frame);
    this->Set_Mouse_Callback_for_Image(this->my_grabbed_frame);

    return true;
}

After capturing a frame and setting up the mouse callback, mouse events can be processed in the my_Mouse_Handler(); function of the program’s Image_OP class. The following code sample does not handle all possible mouse events. It only handles the events that are needed to draw a rectangle onto an image. Please note that the static variables used by the static method my_Mouse_Handler(); have to be defined outside the class.

void Image_OP::my_Mouse_Handler(int events, int x, int y, int flags, void* param)
{
    IplImage* img_orig  = (IplImage*) param;
    IplImage* img_clone = NULL;
    int x_ROI = 0, y_ROI = 0, wi_ROI = 0, he_ROI = 0;

    switch (events)
    {
    case CV_EVENT_LBUTTONDOWN:
        // Remember where the drag started.
        my_point = cvPoint(x, y);
        break;

    case CV_EVENT_MOUSEMOVE:
        // Test the bit: flags may also contain Ctrl, Shift, or Alt.
        if (flags & CV_EVENT_FLAG_LBUTTON)
        {
            // Draw on a copy so that the original frame stays untouched.
            img_clone = cvCloneImage(img_orig);
            cvRectangle(img_clone, my_point, cvPoint(x, y), CV_RGB(0,255,0), 1, 8, 0);
            cvShowImage("choose area", img_clone);
            cvReleaseImage(&img_clone);   // free the copy to avoid a leak on every move
        }
        break;

    case CV_EVENT_LBUTTONUP:
        // Order the two corner points so that width and height are not negative.
        if (my_point.x > x) { x_ROI = x;          wi_ROI = my_point.x - x; }
        else                { x_ROI = my_point.x; wi_ROI = x - my_point.x; }

        if (my_point.y > y) { y_ROI = y;          he_ROI = my_point.y - y; }
        else                { y_ROI = my_point.y; he_ROI = y - my_point.y; }

        my_ROI.x      = x_ROI;
        my_ROI.y      = y_ROI;
        my_ROI.width  = wi_ROI;
        my_ROI.height = he_ROI;

        // Mark the selection by inverting it; skip empty selections (plain clicks).
        if (wi_ROI > 0 && he_ROI > 0)
        {
            img_clone = cvCloneImage(img_orig);
            cvSetImageROI(img_clone, cvRect(x_ROI, y_ROI, wi_ROI, he_ROI));
            cvNot(img_clone, img_clone);
            cvResetImageROI(img_clone);
            cvShowImage("choose area", img_clone);
            cvReleaseImage(&img_clone);
        }
        break;
    }
}

A selection of OpenCV functions for processing images

The second part of this tutorial mainly concerns some (mostly) simple OpenCV commands for processing images. When using sophisticated methods (like optical flow; see the first part of this tutorial) to detect or trace motion, it often gives better results to “smooth” the images (or frames) first, in order to iron out outliers produced by noise and camera artifacts.

OpenCV offers five different basic smoothing operations, which can be invoked by the command

cvSmooth(IplImage* source, IplImage* destination, int smooth_type, int param1 = 3, int param2 = 0, double param3 = 0, double param4 = 0);

The first two arguments are the input and the output image. More interesting is the third parameter, which takes one of five different values (these also determine the meaning of the parameters param1 to param4). In the following I give an overview of the possible values of this third parameter. If you need more details, please consult a book (like ‘Learning OpenCV’ by Gary Bradski and Adrian Kaehler) or an expert article on this.

The smooth_type CV_BLUR, for instance, calculates the mean color value of all pixels within an area around a central pixel (the area is specified by param1 and param2).

CV_BLUR_NO_SCALE does the same as CV_BLUR, but the sum is not divided by the number of pixels to create an average.

CV_MEDIAN performs a similar operation, with the only exception that it calculates the median value over the specified area.

CV_GAUSSIAN is more complicated and smooths the image with weights taken from the Gaussian function (= normal distribution). param1 and param2 again define the area to which the algorithm is applied. param3 is the sigma value of the Gaussian function (it is calculated automatically if not specified). If a value for param4 is given, param3 is used as the sigma value in the horizontal direction and param4 as the sigma value in the vertical direction.

CV_BILATERAL is similar to Gaussian smoothing, but gives more weight to pixels that are similar to the central pixel than to less similar ones.

In the code

samples that are part of this article, only one of the above “smoothing”

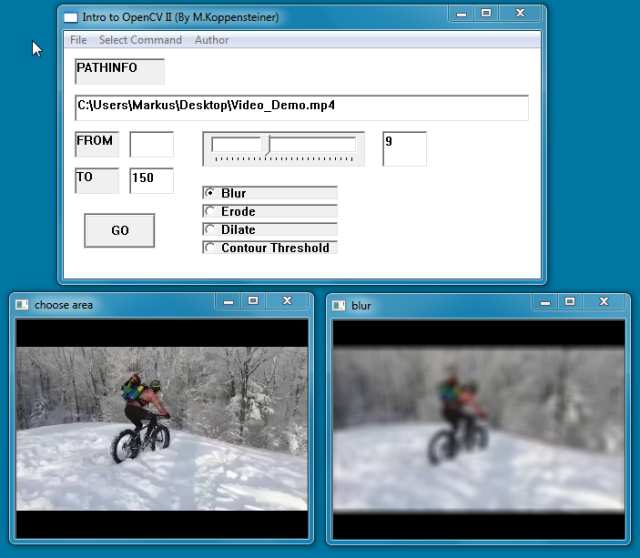

functions is implemented. The method Blur(int square_size, IplImage*, IplImage*) (see image on top of page) of the

Image_OP class carries out

a simple blur based on the mean of a square area of pixels. How changing the size

of this area affects the “blur” can be demonstrated by compiling the source

code that comes with this tutorial. Just load a movie, select the option button

“Blur” and move the bar of the trackbar control.

Attention: If you intend to use other types of smoothing functions (like CV_GAUSSIAN), problems might occur, because such functions do not accept all values that are returned by the trackbar (CV_MEDIAN and CV_GAUSSIAN, for example, expect odd kernel sizes).

Dilate and Erode

Another way of removing noise from an image, and also of isolating or joining disparate regions, is based on dilation and erosion. For both kinds of transformations OpenCV offers corresponding functions (cvDilate() and cvErode()). These functions move a kernel (a small square or circle with an anchor point, usually at its center) over an image. While this happens, the maximal (= dilation) or minimal (= erosion) pixel value under the kernel is computed, and the pixel of the image under the anchor point is replaced by this maximum or minimum.

Because both functions perform similar tasks, they take the same arguments. For this reason I will discuss them for

cvErode(IplImage* src, IplImage* dest, IplConvKernel* kernel = NULL, int iterations = 1);

only. The first two arguments are the source and the destination image, the third argument is a pointer to an IplConvKernel structure, and the last argument is the number of iterations performed by the algorithm. Creating your own kernel using the IplConvKernel structure will not be discussed here; the standard kernel (a 3x3 square) will be used instead.

Again, both functions are implemented as methods of the Image_OP class and linked to the behavior of the main window’s trackbar control. Just load a video and click on the option button Erode (or on the button Dilate). Moving the slider of the trackbar will then change the parameter iter (= iterations) of the Image_OP::Erode() or the Image_OP::Dilate() method. Depending on which of the two options you have chosen, the images will show expanded dark regions (Erode) or expanded bright regions (Dilate).

Drawing contours

In this section I present some code that extracts the contours of images. In OpenCV, contours are represented as sequences of points that form a curve. To find these points, OpenCV provides the function cvFindContours(IplImage*, CvMemStorage*, CvSeq**, int headerSize, CvContourRetrievalMode, CvChainApproxMethod).

The first argument should be an 8-bit single-channel image that will be interpreted as a binary image (all nonzero pixels are treated as 1). The second argument is a memory storage (a linked list of memory blocks) that is used to handle dynamic memory allocation. The third argument is a pointer to the sequence in which the found points (contours) are stored.

The next arguments are optional and will not be discussed here in great detail, because they are not used in the code sample. The fourth argument can simply be set to sizeof(CvContour). The fifth argument encompasses four options: CV_RETR_EXTERNAL = extracts only the extreme outer contours; CV_RETR_LIST = is the standard option and extracts all contours; CV_RETR_CCOMP = extracts contours and organizes them in a two-level hierarchy; CV_RETR_TREE = produces a hierarchy of nested contours. The sixth argument determines how the contours are approximated (please look this up in a book on OpenCV).

The individual steps that need to be carried out to display the contours of an image can be found in the method Image_OP::Draw_Contours() (see below). As with the methods discussed before, one of the method’s arguments (here: the first argument, which defines the threshold) is linked to the trackbar of the program’s main window.

Using the code

void Image_OP::Draw_Contours(int threshold, IplImage* orig_img, IplImage* manipulated_img)
{
    CvMemStorage* mem_storage = cvCreateMemStorage(0);
    CvSeq* contours = 0;
    IplImage* gray_img = cvCreateImage(cvSize(orig_img->width, orig_img->height),
                                       IPL_DEPTH_8U, 1);
    int found_contours = 0;

    cvNamedWindow("contours only");

    // Convert to grayscale (video frames are stored in BGR order) and binarize.
    cvCvtColor(orig_img, gray_img, CV_BGR2GRAY);
    cvThreshold(gray_img, gray_img, threshold, 255, CV_THRESH_BINARY);

    // cvFindContours modifies its input image, so gray_img is cleared
    // afterwards and reused as the canvas for drawing.
    found_contours = cvFindContours(gray_img, mem_storage, &contours);
    cvZero(gray_img);

    if (contours)
    {
        // White outer and hole contours, drawn down to 100 nesting levels.
        cvDrawContours(gray_img, contours, cvScalarAll(255), cvScalarAll(255), 100);
    }

    cvShowImage("contours only", gray_img);

    // Both release functions expect the address of the pointer.
    cvReleaseImage(&gray_img);
    cvReleaseMemStorage(&mem_storage);
}

Saving motion data as video file

The contents of this section are strongly linked to those of my previous tutorial on OpenCV. The basic structure of the code sample below can already be found there (see the Video_OP::Play_Video() method). For this reason I keep the introduction to this topic very short. I just want to say a few words on the FourCC notation, which was developed to identify data formats and is widely used to select AVI video codecs. The OpenCV macro CV_FOURCC provides this functionality and takes a four-character code that denotes a particular codec (e.g., CV_FOURCC('D','I','V','X')). A prerequisite for applying CV_FOURCC successfully is, of course, that the corresponding video codec is installed on the machine you are using.

Using the code

- Capture the video file by invoking this->Get_Video_from_File(char* file_name);
- Invoke Video_OP::Write_Video(int from, int to, char* path); (see code below).
- Create a video writer by invoking cvCreateVideoWriter(path, CV_FOURCC('M','J','P','G'), fps, size);
- Set up a loop to process successive frames (or images) of the video file.
- Grab frames by calling cvQueryFrame(CvCapture*);
- Add frames (= images) to the video file by calling cvWriteFrame(CvVideoWriter*, IplImage*);
- Define the delay of presentation by using cvWaitKey(int); (here: for demonstration purposes only)

void Video_OP::Write_Video(int from, int to, char* path)
{
    this->my_on_off = true;
    int key = 0;
    int frame_counter = from;
    int fps = this->Get_Frame_Rate();
    if (fps <= 0)
        fps = 25;   // fallback value is an assumption; avoids dividing by zero below

    cvNamedWindow("write to avi", CV_WINDOW_AUTOSIZE);
    this->Go_to_Frame(from);

    // The writer's frame size must match the size of the frames that are written.
    CvSize size = cvSize(this->captured_size.width, this->captured_size.height);
    CvVideoWriter* video_writer = cvCreateVideoWriter(path,
                                      CV_FOURCC('M','J','P','G'), fps, size);

    // video_writer is NULL if the codec is missing or the file cannot be created.
    while (video_writer && this->my_on_off == true && frame_counter <= to)
    {
        this->my_grabbed_frame = cvQueryFrame(this->my_p_capture);
        if (!this->my_grabbed_frame)
            break;

        cvWriteFrame(video_writer, my_grabbed_frame);
        cvShowImage("write to avi", my_grabbed_frame);
        frame_counter++;

        // Wait one frame interval; pressing 'q' stops the recording.
        key = cvWaitKey(1000 / fps);
        if (key == 'q')
            break;
    }

    cvReleaseCapture(&my_p_capture);
    cvDestroyWindow("write to avi");
    cvReleaseVideoWriter(&video_writer);
}

...

Additional points of interest

Most of the methods and operations that have been introduced here can be used in combination. This means that image operations performed on the first frame of a video file can be confined to the region that has been selected with the mouse. In addition, these manipulations will be applied to all frames of a video if you click on the button ‘GO’ of the program’s main window.

There are methods in the source code files that have not been discussed here. For example, the Video_OP class contains a method that turns a movie into single images and a method that does the opposite, namely turning single images into a movie. If you look at the latter, you will also find some code that demonstrates how to retrieve the files in a folder by invoking the Win32 API functions FindFirstFile() and FindNextFile().

OpenCV offers its own code to create a trackbar (or a slider) and to set up a message handler for it. I preferred to use the Win32 GUI trackbars instead, because this seemed more convenient to me. Still, you will find some code in the source code files that shows how to use OpenCV’s own trackbar control. As a side effect, the program and its source code files also demonstrate how buttons, sliders, text fields, and option buttons can be placed onto a window and used in a Win32 program.

There is no guarantee that the presented code is bug-free (and not all exceptions are handled), but I hope it is helpful for somebody who is looking for guidance on the topics discussed here.