Here we'll give brief instructions on how to install MTCNN, TensorFlow and Keras on Raspberry Pi. Then we launch face detection on a video file to test the performance, followed by a short explanation on how to run the detection in real-time mode. Finally, we look at how we can speed up the detection using a motion detector.

Introduction

Face recognition is one area of Artificial Intelligence (AI) where deep learning (DL) has had great success over the past decade. The best face recognition systems can recognize people in images and video with the same precision humans can – or even better. The two main base stages of face recognition are person verification and identification.

In the first (current) half of this article series, we will:

- Discuss the existing AI face detection methods and develop a program to run a pretrained DNN model

- Consider face alignment and implement some alignment algorithms using face landmarks

- Run the face detection DNN on a Raspberry Pi device, explore its performance, and consider possible ways to run it faster, as well as to detect faces in real time

- Create a simple face database and fill it with faces extracted from images or videos

We assume that you are familiar with DNN, Python, Keras, and TensorFlow. You are welcome to download this project code ...

In the previous article, we implemented the first stage of a common facial recognition system based on modern AI approaches – face detection. In this article, we’ll discuss the second stage of the pipeline – face alignment – based on the perspective transformation function from the OpenCV library. This is not a mandatory stage. However, it can improve the recognition accuracy, and it always used in real-life facial recognition software.

Alignment Algorithm

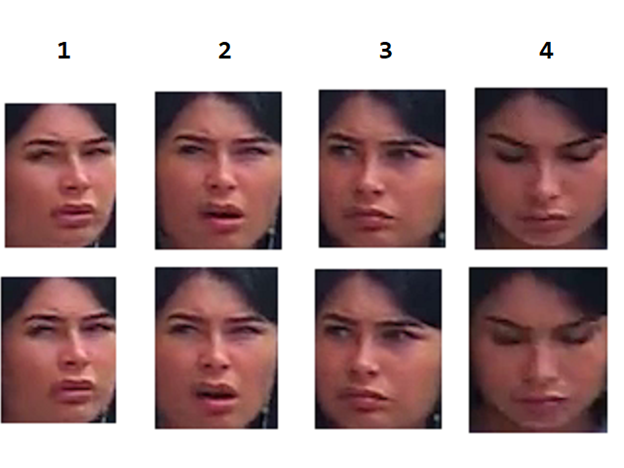

In a nutshell, face alignment is an algorithm of transforming a face picture extracted from an image or a video frame to a specified orientation, position, and scale. In real-life images and frames on which face recognition has to work, faces can appear under the various angles and with the various scale factors. The picture below shows the same face in different positions relative to the camera view.

1 – the face is rotated right

2 – the face is tilted to the side

3 – the face is rotated left

4 – the face is tilted down

The face alignment goal is to fit a face to an image of the same size, and to normalize the face view: center the face inside the image; scale the face relative to the image, and so on.

Face alignment can use the various algorithms, depending on the available information about the face and its position. We’re going to use the MTCNN model, since we know the face’s bounding box is in a 2D image and has five facial landmark positions (keypoints). We need to develop a transformation that will use keypoints of a face to bring it to a standard view.

Fortunately, we don’t need to reinvent the wheel. In computer vision, such transformation is known as perspective transform, and it is relatively easy to implement with the OpenCV library. All we need is to select four points in the source image and four matching points in the target image.

Look at the picture below. On the left side, we've drawn the keypoints found with the MTCNN algorithm. On the right side, we show three points that we can calculate based on the known coordinates of the three keypoints: 1, 2, and 3.

Point 6 is in the middle between points 1 and 2. Points 7 and 8, together with keypoints 1 and 2, form a parallelogram. The distance between points 1 and 7 is the same as the distance between points 2 and 8, which is the double distance between points 6 and 3. So point 3 is the center of the parallelogram.

Implementation of the Algorithm

Let’s implement the perspective transform algorithm using four corners of the parallelogram:

class Face_Align:

def __init__(self, size):

self.size = size

def align_point(self, point, M):

(x, y) = point

p = np.float32([[[x, y]]])

p = cv2.perspectiveTransform(p, M)

return (int(p[0][0][0]), int(p[0][0][1]))

def align(self, frame, face):

(x1, y1, w, h) = face['box']

(l_eye, r_eye, nose, mouth_l, mouth_r) = Utils.get_keypoints(face)

(pts1, pts2) = self.get_perspective_points(l_eye, r_eye, nose, mouth_l, mouth_r)

s = self.size

M = cv2.getPerspectiveTransform(pts1, pts2)

dst = cv2.warpPerspective(frame, M, (s, s))

f_aligned = copy.deepcopy(face)

f_aligned['box'] = (0, 0, s, s)

f_img = dst

l_eye = self.align_point(l_eye, M)

r_eye = self.align_point(r_eye, M)

nose = self.align_point(nose, M)

mouth_l = self.align_point(mouth_l, M)

mouth_r = self.align_point(mouth_r, M)

f_aligned = Utils.set_keypoints(f_aligned, (l_eye, r_eye, nose, mouth_l, mouth_r))

return (f_aligned, f_img)

class Face_Align_Nose(Face_Align):

def get_perspective_points(self, l_eye, r_eye, nose, mouth_l, mouth_r):

(xl, yl) = l_eye

(xr, yr) = r_eye

(xn, yn) = nose

(xm, ym) = ( 0.5*(xl+xr), 0.5*(yl+yr) )

(dx, dy) = (xn-xm, yn-ym)

(xl2, yl2) = (xl+2.0*dx, yl+2.0*dy)

(xr2, yr2) = (xr+2.0*dx, yr+2.0*dy)

s = self.size

pts1 = np.float32([[xl, yl], [xr, yr], [xr2, yr2], [xl2, yl2]])

pts2 = np.float32([[s*0.25, s*0.25], [s*0.75, s*0.25], [s*0.75, s*0.75], [s*0.25,s*0.75]])

return (pts1, pts2)

The above code contains two classes. The base class, Face_Align, implements the transformation algorithm using two functions from the OpenCV library: getPerspectiveTransform and warpPerspective. The first function evaluates the transformation matrix, and the second one transforms the image with the matrix. The points for evaluating the perspective matrix must be provided with the get_perspective_points method. The specific implementation of the method is realized in the inherited class, Face_Align_Nose.

Adding Face Alignment to the Detector

Now we can add the face alignment algorithm to the video face detector described in the previous article:

class VideoFD:

def __init__(self, detector):

self.detector = detector

def detect(self, video, save_path = None, align = False, draw_points = False):

detection_num = 0;

capture = cv2.VideoCapture(video)

img = None

dname = 'AI face detection'

cv2.namedWindow(dname, cv2.WINDOW_NORMAL)

cv2.resizeWindow(dname, 960, 720)

frame_count = 0

dt = 0

face_num = 0

if align:

fa = Face_Align_Nose(160)

while(True):

(ret, frame) = capture.read()

if frame is None:

break

frame_count = frame_count+1

t1 = time.time()

faces = self.detector.detect(frame)

t2 = time.time()

p_count = len(faces)

detection_num += p_count

dt = dt + (t2-t1)

if (not (save_path is None)) and (len(faces)>0) :

f_base = os.path.basename(video)

for (i, face) in enumerate(faces):

if align:

(f_cropped, f_img) = fa.align(frame, face)

else:

(f_cropped, f_img) = self.detector.extract(frame, face)

if (not (f_img is None)) and (not f_img.size==0):

if draw_points:

Utils.draw_faces([f_cropped], (255, 0, 0), f_img, draw_points, False)

face_num = face_num+1

dfname = os.path.join(save_path, f_base + ("_%06d" % face_num) + ".png")

cv2.imwrite(dfname, f_img)

if len(faces)>0:

Utils.draw_faces(faces, (0, 0, 255), frame)

if not (save_path is None):

dfname = os.path.join(save_path, f_base + ("_%06d" % face_num) + "_frame.png")

cv2.imwrite(dfname, frame)

cv2.imshow(dname,frame)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

capture.release()

cv2.destroyAllWindows()

fps = frame_count/dt

return (detection_num, fps)

Let’s launch face detection on a video file:

d = MTCNN_Detector(50, 0.95)

vd = VideoFD(d)

v_file = r"C:\PI_FR\video\5_2.mp4"

save_path = r"C:\PI_FR\detect"

(f_count, fps) = vd.detect(v_file, save_path, True, False)

print("Face detections: "+str(f_count))

print("FPS: "+str(fps))

In the picture below, we show faces in the same positions, but now they are aligned with the use of our algorithm.

You can see that now all the pictures are of the same size. However, the alignment algorithm did not do a good job. When a face is rotated left or right, the algorithm fails and severely distorts the face. This is because the tip of the nose does not lay in the same plane with the other points. When the face is rotated to the side, point 3 ceases being the center of the parallelogram.

Modifying Alignment Algorithm

Let’s try to modify the alignment algorithm using other face landmarks. In the picture below, we introduce a new point – 9.

Point 9 is calculated as the middle between points 4 and 5. We still use four points – 1, 2, 8, 7 – as the base for perspective transform. But now the calculation of points 7 and 8 is based on the coordinates of point 9. We assume that points 6 and 9 lay on the middle line of the parallelogram. The code below implements this algorithm:

class Face_Align_Mouth(Face_Align):

def get_perspective_points(self, l_eye, r_eye, nose, mouth_l, mouth_r):

(xl, yl) = l_eye

(xr, yr) = r_eye

(xml, yml) = mouth_l

(xmr, ymr) = mouth_r

(xn, yn) = ( 0.5*(xl+xr), 0.5*(yl+yr) )

(xm, ym) = ( 0.5*(xml+xmr), 0.5*(yml+ymr) )

(dx, dy) = (xm-xn, ym-yn)

(xl2, yl2) = (xl+1.1*dx, yl+1.1*dy)

(xr2, yr2) = (xr+1.1*dx, yr+1.1*dy)

s = self.size

pts1 = np.float32([[xl, yl], [xr, yr], [xr2, yr2], [xl2, yl2]])

pts2 = np.float32([[s*0.3, s*0.3], [s*0.7, s*0.3], [s*0.7, s*0.75], [s*0.3, s*0.75]])

return (pts1, pts2)

Using the new Face_Align_Mouth class in the video detector and launching it with the same video file, we get the following aligned faces.

You see that now there is no face distortion, and that all faces are aligned properly. Note that not all the aligned faces look like they are right in front of the camera. For example, the rotated faces still look slightly rotated. Unfortunately, we cannot reconstruct the front view of a rotated face in a 2D image.

Nevertheless, all face keypoints now have the same coordinates, relative to the final image. This is exactly what we want from the alignment algorithm – to have the face normalized to the same image coordinates. This approach ensures that the features extracted from a face in the database and a face input via a camera belong to the same space.

Next Steps

In the next article of this series, we’ll run our face detector on a Raspberry Pi device. Stay tuned!