Introduction

Application Cleaner is a simple concept: it performs a search and replace on program code and data to remove any spyware URLs, which stops programs such as web browsers from calling home. It was written in VS2010 using C# and makes extensive use of the System.IO.FileStream and System.IO.FileInfo classes to open, read and then edit program code or data.

Threading for the File Scanner and Form

The program works by starting a new thread that calls ScanFolders, shown below. This recursive function walks the directory tree and, for each folder, calls ScanFolderFiles with a DirectoryInfo object to perform the processing.

    private static void ScanFolders(DirectoryInfo DInfo)
    {
        // Process the files in this folder first.
        ScanFolderFiles(DInfo);
        if (RootFolderOnly) return;

        // Then recurse into each sub-folder.
        foreach (DirectoryInfo SubDInfo in DInfo.GetDirectories())
        {
            ScanFolders(SubDInfo);
        }
    }

    private static void ScanFolderFiles(DirectoryInfo DInfo)
    {
        foreach (FileInfo FInfo in DInfo.GetFiles())
        {
            if (!Running) return;                    // the user asked to stop
            while (Paused) { Thread.Sleep(2000); }   // the user asked to pause

            // Skip files with a .backup extension.
            if (!FInfo.Name.EndsWith(".backup"))
            {
                ReadFile(FInfo);
                DisplayNewLinks();
            }
        }
    }

The user is given the option of pausing or terminating the scanner's thread by setting the static bool values Running and Paused to true or false.

ReadFile opens the file using a file stream and reads the whole file into a byte[] array, which is then chopped up into 10k chunks so that URLs can be searched for. Each URL found is added to a links dictionary with its Displayed value set to false, and then DisplayNewLinks() is called.

    private static void DisplayNewLinks()
    {
        foreach (Link L in Scanner.Links.Values)
        {
            // Only queue links that have not been sent to the form yet.
            if (!L.Displayed)
            {
                L.Displayed = true;
                AddNewFind(L.Finfo.Name, L.LinkText, L.Find,
                    L.ChunkCount, L.Start, L.ImageIndex, L.Finfo.Directory.FullName);
            }
        }
    }
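ReadFile itself is not listed in this article, so here is only a rough sketch of what a chunked URL search over a byte array might look like. The class name, the 256-byte overlap and the rule for where a URL ends are all assumptions made for illustration; the real project may do this quite differently.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class ChunkScanSketch
{
    private const int ChunkSize = 10 * 1024; // the "10k chunks" mentioned in the text
    private const int Overlap = 256;         // assumption: lets a URL straddle a chunk boundary

    // ISO-8859-1 maps every byte to exactly one char, so string index == byte offset.
    private static readonly Encoding Latin1 = Encoding.GetEncoding("ISO-8859-1");

    // Assumption: a URL ends at the first control, space, quote or angle-bracket character.
    private static bool IsUrlChar(char c)
    {
        return c > ' ' && c < (char)0x7F && c != '"' && c != '\'' && c != '<' && c != '>';
    }

    // Returns (byte offset, URL text) pairs for every http:// or https:// run found.
    public static List<KeyValuePair<long, string>> FindUrls(byte[] data)
    {
        var found = new List<KeyValuePair<long, string>>();

        for (int start = 0; start < data.Length; start += ChunkSize)
        {
            int length = Math.Min(ChunkSize + Overlap, data.Length - start);
            string chunk = Latin1.GetString(data, start, length);

            int pos = 0;
            // Only accept matches that START inside this chunk proper; matches that
            // start in the overlap area belong to the next chunk.
            while ((pos = chunk.IndexOf("http", pos, StringComparison.OrdinalIgnoreCase)) >= 0
                   && pos < ChunkSize)
            {
                int end = pos + 4;
                if (end < chunk.Length && (chunk[end] == 's' || chunk[end] == 'S')) end++;

                if (end + 3 <= chunk.Length && chunk.Substring(end, 3) == "://")
                {
                    end += 3;
                    while (end < chunk.Length && IsUrlChar(chunk[end])) end++;
                    found.Add(new KeyValuePair<long, string>(start + pos, chunk.Substring(pos, end - pos)));
                    pos = end;
                }
                else
                {
                    pos += 4;
                }
            }
        }
        return found;
    }
}
```

Two limits of this sketch are worth knowing: a URL that starts near the end of a chunk and runs longer than the overlap is cut short, and URLs stored as UTF-16 text are not found at all.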

Note that all of the above work is done on the scanner's own thread. The "AddNewFind" function locks the "NewFind" string before appending the data passed in, as '¬' separated values, to the end of the string. A form timer later calls Scanner.GetListViewItems(), which again locks the "NewFind" string and then converts it into ListViewItems that are appended to the form's main ListView.

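    public static Dictionary<int, ListViewItem> GetListViewItems()
    {
        Dictionary<int, ListViewItem> LVItems = new Dictionary<int, ListViewItem>();
        string[] Items = null;

        // Take a copy of the pending finds and reset the buffer while holding the lock.
        // Caution: NewFind is reassigned inside the lock, so a later lock(NewFind)
        // locks a different string object; a dedicated lock object would be safer.
        lock (NewFind)
        {
            Items = NewFind.Replace(Environment.NewLine, "~").Split('~');
            NewFind = "";
            MessageCount = 0;
        }

        // Each item is one find, stored as '¬' separated column values.
        foreach (string Item in Items)
        {
            string[] Data = Item.Split('¬');
            if (Data.Length > 2)
            {
                int Num = 0;
                int.TryParse(Data[0], out Num);
                ListViewItem LVI = new ListViewItem(Data);
                if (Num > 0)
                    LVItems.Add(Num, LVI);
            }
        }
        return LVItems;
    }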
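One caveat with locking on the "NewFind" string itself is that the string is replaced inside the lock, so the scanner thread and the form timer can end up locking two different objects. A common alternative is a dedicated lock object, sketched below. The FindBuffer class and its Add and Drain methods are hypothetical names and not part of the project.

```csharp
using System;
using System.Text;

public static class FindBuffer
{
    // A private object that never changes, so every caller locks the same thing.
    private static readonly object Gate = new object();
    private static readonly StringBuilder Pending = new StringBuilder();

    // Called from the scanner thread: queue one find as '¬' separated values.
    public static void Add(params string[] fields)
    {
        lock (Gate)
        {
            Pending.AppendLine(string.Join("¬", fields));
        }
    }

    // Called from the form timer: return all queued lines and empty the buffer.
    public static string[] Drain()
    {
        string text;
        lock (Gate)
        {
            text = Pending.ToString();
            Pending.Clear();
        }
        return text.Split(new[] { Environment.NewLine }, StringSplitOptions.RemoveEmptyEntries);
    }
}
```

The timer handler would then split each drained line on '¬' in the same way GetListViewItems does.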

Reading files and then processing the data is very CPU intensive, and without any Thread.Sleep() calls in the above code the form timer would hardly get a chance to fire and the form would freeze up. So, depending on how many links are waiting to be displayed, the main scanner thread sleeps now and then to give the UI a chance to catch up. That concludes our part on threading.
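The throttling code is not listed here, but the idea of sleeping longer as the backlog grows can be sketched in a few lines. The thresholds and sleep times below are made-up values for illustration only, and ScannerThrottle is a hypothetical class name.

```csharp
using System;
using System.Threading;

public static class ScannerThrottle
{
    // Hypothetical thresholds: the more finds are waiting for the UI, the longer the pause.
    public static int PauseMillisFor(int pendingFinds)
    {
        if (pendingFinds < 50) return 0;    // UI is keeping up, do not sleep
        if (pendingFinds < 500) return 50;  // small backlog, short pause
        return 500;                         // large backlog, let the form timer catch up
    }

    // Called by the scanner thread after each file.
    public static void Yield(int pendingFinds)
    {
        int ms = PauseMillisFor(pendingFinds);
        if (ms > 0) Thread.Sleep(ms);
    }
}
```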

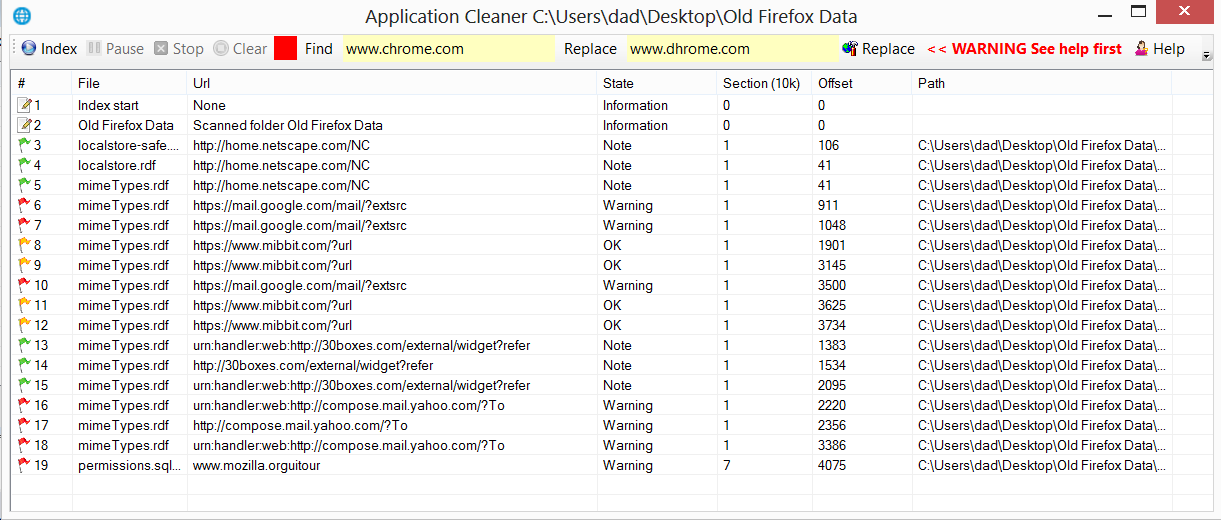

Output Display and Sorting the List-view Results

Application Cleaner uses color-coded flags in the list-view to denote the type of URL found, so that the user can perform a manual search and replace or double-click items in the list and let auto-replace do the work. I soon discovered that your average web browser contains about 5000 URLs embedded in the program code, DLLs and data, with one well-known web browser having over 20,000 URLs (I will come back to this later), so the rush was on to sort the list-view results.

The code that was added to the form to sort the list-view is shown below. Note from the picture above that columns [0], [4] and [5] all hold numeric values, which is why the code flags them to be compared as numbers.

    private SortOrder OrderBy = SortOrder.Ascending;

    private void LVScanner_ColumnClick(object sender, ColumnClickEventArgs e)
    {
        // Toggle the sort direction on every column click.
        if (OrderBy == SortOrder.Ascending) OrderBy = SortOrder.Descending;
        else OrderBy = SortOrder.Ascending;

        int Col = e.Column;
        if (Col > 6) return;

        // Columns 0, 4 and 5 hold numeric values and are compared as integers.
        bool IsNumber = (Col == 0 || Col == 4 || Col == 5);

        this.LVScanner.ListViewItemSorter = new ListViewItemComparer(Col, OrderBy, IsNumber);
        LVScanner.Sort();
    }

Our code for the ListViewItemComparer class is shown below. It could be expanded to pre-scan some of the column data to decide whether the column is numeric, but all that takes time and code, so I decided to simply hardcode the column numbers for now.

    using System;
    using System.Collections;
    using System.Windows.Forms;
    using System.Text;

    public class ListViewItemComparer : IComparer
    {
        private int col;
        private SortOrder order;
        private bool IsNumber = false;

        public ListViewItemComparer()
        {
            col = 0;
            order = SortOrder.Ascending;
        }

        public ListViewItemComparer(int column, SortOrder order, bool isNumber)
        {
            col = column;
            this.order = order;
            this.IsNumber = isNumber;
        }

        // Parses the leading integer of a cell, ignoring anything after the
        // first '.', ' ' or '/'. Returns 0 when nothing can be parsed.
        public int SafeGetInt(string Text)
        {
            Text = Text.Trim();
            int Value = 0;
            int End = Text.IndexOf(".");
            if (End > -1) Text = Text.Substring(0, End);
            End = Text.IndexOf(" ");
            if (End > -1) Text = Text.Substring(0, End).Trim();
            End = Text.IndexOf("/");
            if (End > -1) Text = Text.Substring(0, End);
            int.TryParse(Text, out Value);
            return Value;
        }

        public int CompareNumber(object x, object y)
        {
            int IntX = SafeGetInt(((ListViewItem)x).SubItems[col].Text);
            int IntY = SafeGetInt(((ListViewItem)y).SubItems[col].Text);

            // CompareTo returns 0 for equal values, which a sort comparer must do.
            int returnVal = IntX.CompareTo(IntY);
            if (order == SortOrder.Descending)
                returnVal *= -1;
            return returnVal;
        }

        public int Compare(object x, object y)
        {
            if (this.IsNumber) return CompareNumber(x, y);

            int returnVal = String.Compare(((ListViewItem)x).SubItems[col].Text,
                                           ((ListViewItem)y).SubItems[col].Text);
            if (order == SortOrder.Descending)
                returnVal *= -1;
            return returnVal;
        }
    }
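For anyone who wants to try the pre-scan idea instead of hardcoding the numeric columns, a minimal sketch follows. ColumnSniffer, LooksNumeric and the sample size of 50 are hypothetical, and this version only accepts plain integers, so cells holding values such as "12.5" or "3/4" would need SafeGetInt-style trimming first.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ColumnSniffer
{
    // Returns true when every non-empty cell in the first sampleSize cells parses as an integer.
    public static bool LooksNumeric(IEnumerable<string> cellTexts, int sampleSize = 50)
    {
        int parsed = 0;
        foreach (string text in cellTexts.Take(sampleSize))
        {
            string trimmed = (text ?? "").Trim();
            if (trimmed.Length == 0) continue;       // ignore blank cells

            int ignored;
            if (!int.TryParse(trimmed, out ignored)) return false;
            parsed++;
        }
        return parsed > 0;                           // an all-blank column is not numeric
    }
}
```

In the column click handler, the cell texts for the clicked column could be collected from each item's SubItems and passed in to set IsNumber.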

20,000 URLs Hidden in a Browser, Are You Serious!

Well yes, and that's without the cached history or cookies. One of the reasons this number is so high is that when a program is compiled with embedded Photoshop images, each image contains a URL pointing to ns.adobe.com. Then you have www.w3.org XML schemas all over the place, plus hundreds of URLs needed for SSL certificates from Verisign & Co, but even then it still leaves a lot.

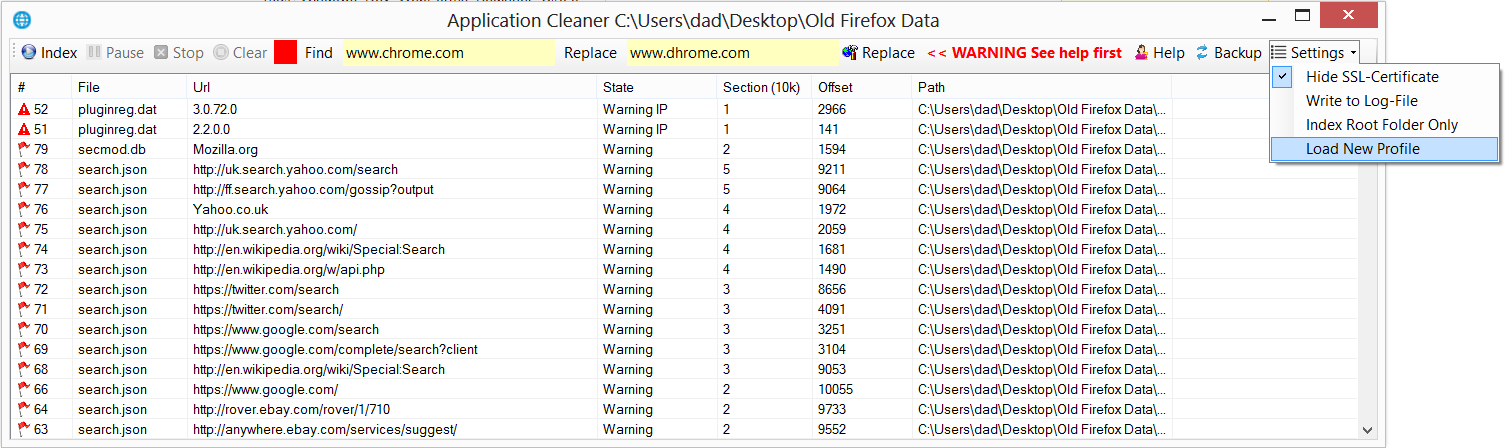

With so much data, it became necessary to remove this common type of data from the results so that users could see the wood for the trees. I decided to do this by first adding the option of hiding SSL certificate results, and then by making use of profiles that the user can load to filter the results for a particular type of data.

As you can see, our data is now sorted by state in the above picture. If you look closely at the top two rows, you will see that Application Cleaner also searches the program code and data for anything that looks like an IP address, but it sometimes makes a mistake with four-part version numbers, which look the same as an IP address.

Scanning for embedded IP addresses is nice to have because viruses often use these addresses to call home, and this program makes them easy to spot. Many browsers also hardcode Google's DNS server IP address of 8.8.8.8 so that they can bypass your local security when their URLs are being blocked in the firewall or when the DNS server or virus blocker is trying to block the requests.
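The project's own detection code is not shown here, but one way to look for dotted-quad candidates is sketched below. IpSniffer and FindCandidates are hypothetical names. The sketch rejects runs with more than four parts and octets above 255, yet a four-part version number such as 1.2.3.4 still passes, which is exactly the kind of false positive mentioned above.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class IpSniffer
{
    // Four groups of 1-3 digits, not preceded or followed by another digit or dot.
    private static readonly Regex DottedQuad = new Regex(
        @"(?<![\d.])(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})(?![\d.])");

    public static List<string> FindCandidates(string text)
    {
        var result = new List<string>();
        foreach (Match m in DottedQuad.Matches(text))
        {
            bool inRange = true;
            for (int g = 1; g <= 4 && inRange; g++)
                inRange = int.Parse(m.Groups[g].Value) <= 255;   // each octet must be 0..255

            if (inRange) result.Add(m.Value);
        }
        return result;
    }
}
```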

The project uses profile files that configure the scanner for a particular type of data to be indexed and that contain a list of values to be automatically searched for and replaced. The function used to load profile settings is shown below and works a bit like the old Windows .ini files, with a bit of a twist. For me, the Windows registry with its 400,000 folders is getting a bit full, and far too many programs get to read its contents to generate fingerprints.

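    public static string LoadSettingFromFile(string Key, string Default, string Profile)
    {
        string FileName = Path.Combine(Environment.CurrentDirectory, Profile);

        // No profile yet: create it with the default value for this key.
        if (!File.Exists(FileName))
        {
            File.WriteAllText(FileName, Key + " " + Default + Environment.NewLine);
            return Default;
        }

        // Look for a line that starts with the key (case-insensitive).
        string[] Lines = File.ReadAllLines(FileName);
        foreach (string Line in Lines)
        {
            // ChopOffBefore is one of the project's own string extension methods.
            if (Line.ToLower().Trim().StartsWith(Key.ToLower()))
                return Line.ChopOffBefore(Key).Trim();
        }

        // Key not found: append it with the default value so the user can edit it later.
        using (StreamWriter SW = File.AppendText(FileName))
        {
            SW.WriteLine(Key + " " + Default);
        }
        return Default;
    }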
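ChopOffBefore is not a .NET method, and its source is not listed in this article. As a guess at its behaviour, it returns whatever follows the key on the line, so a stand-in might look like the sketch below. The class name and the exact semantics (case-insensitive match, input returned unchanged when the marker is missing) are assumptions.

```csharp
using System;

public static class StringExtensionsSketch
{
    // Hypothetical stand-in: returns the text after the first occurrence of marker,
    // or the original text when the marker is not present.
    public static string ChopOffBefore(this string text, string marker)
    {
        int at = text.IndexOf(marker, StringComparison.OrdinalIgnoreCase);
        return at < 0 ? text : text.Substring(at + marker.Length);
    }
}
```

With this in place, a profile line such as "HideSSL true" (a made-up key) would yield "true" once the result is trimmed.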

Objectives and Achievements

I use a proxy server and a DNS server to block spyware on our LAN, and I was getting a bit tired of seeing the proxy server logs filling up overnight when no one was even browsing. I also knew that even Microsoft was bypassing the Windows proxy server setting to try to call home using encrypted SSL traffic, because my firewall, as a last line of defence, was blocking these malicious requests from getting out. So I decided to tackle this problem at source by editing the machine code of the programs that were behind the attempts to use backdoors.

It now takes me about half an hour to doctor most browsers using this program so that they are unable to upload super cookies. These get installed when you have to run one of those "Install" programs that use a downloader to install a browser, which then makes a profit by leeching your private data and selling it to the highest bidder.

Improvements

This is my second attempt with this little program. My mistake in the first version was not to index the program contents but to instead do a quick search and replace in one go while the file was being read. This proved to be a bit slow because the data had to be re-scanned each time a search and replace was done. That has been fixed now, but it would be nice to somehow make it even faster, since it can take five minutes to scan the index for a project.

Replacing URL strings in sections of machine code was easy so long as the length of the text being replaced is kept the same, which ensures that the machine code jumps all remain the same. For this reason the program will reject any attempt to replace "SpywareHome.com" with "abc.com". This restriction should not be necessary with XML type data, so it would be nice to find a fix for this, but it would need to take into account that the last-write date for the files must be preserved because some programs check for this.
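The same-length rule and the last-write date can be sketched as follows. This is not the project's own code: PatchSketch, ReplaceSameLength and PatchFile are hypothetical names, and the sketch only handles ASCII text.

```csharp
using System;
using System.IO;
using System.Text;

public static class PatchSketch
{
    // Overwrites every ASCII occurrence of find with replace, in place.
    // Returns the number of occurrences patched.
    public static int ReplaceSameLength(byte[] data, string find, string replace)
    {
        if (find.Length == 0 || find.Length != replace.Length)
            throw new ArgumentException("Replacement must be the same length as the text it replaces.");

        byte[] from = Encoding.ASCII.GetBytes(find);
        byte[] to = Encoding.ASCII.GetBytes(replace);
        int count = 0;

        for (int i = 0; i <= data.Length - from.Length; i++)
        {
            int j = 0;
            while (j < from.Length && data[i + j] == from[j]) j++;
            if (j < from.Length) continue;           // no match at this offset

            Buffer.BlockCopy(to, 0, data, i, to.Length);
            count++;
            i += from.Length - 1;                    // skip past the patched bytes
        }
        return count;
    }

    // Patches a file on disk and restores its original last-write time afterwards.
    public static void PatchFile(string path, string find, string replace)
    {
        DateTime stamp = File.GetLastWriteTimeUtc(path);
        byte[] data = File.ReadAllBytes(path);

        if (ReplaceSameLength(data, find, replace) > 0)
        {
            File.WriteAllBytes(path, data);
            File.SetLastWriteTimeUtc(path, stamp);
        }
    }
}
```

Because the byte count never changes, every offset in the file stays where it was, which is why this is safe for machine code.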

As you can see, I am useless at documentation, and the help text for the program needs rewriting from scratch by someone else. However, the full source code for the project is well commented, and you are free to download a copy by clicking the link at the top of the page and make the project your own.

Enjoy!

Dr Gadgit