
Any computing device has finite hardware resources, and as more applications and services compete for them, the user experience often becomes sluggish.

Some of the performance degradation is caused by installed crapware, but some may be inherent to the underlying technology of the programs that run at system start-up, while you use your application, or simply in the background whether you need them or not. To make matters worse, mobile devices deplete their battery charge faster because of increased CPU and IO activity.

You've probably heard of many performance benchmarks comparing runtimes on some very specific characteristics or even functionally equivalent applications developed with competing technologies.

So far I have not found one that focuses on start-up performance and on the impact on the whole system of applications developed with different technologies.

In my opinion this type of benchmark could be more relevant to the user experience than others.

This kind of information can help when deciding which technology to use for the targeted hardware. I have focused on a few popular technologies: .Net/C#, Java, Mono/C#, and finally native code compiled with C++, all tested with their default install settings.

At the time of writing (July 2010), Windows XP still has the largest market share among operating systems in use, and the latest versions of these technologies are Oracle's Java 1.6, Novell's Mono 2.6.4, Microsoft's .Net 4.0 and Visual C++ from Visual Studio 2010.

Such a benchmark is hard to make fair and consistent because of the large number of factors that come into play and the difficulty of finding functionality and behavior that all technologies share as a common denominator.

Hence, I have focused on a few simple benchmarks that could be easily reproduced and are common to all technologies.

The first test determines the time from the moment a process is created to the moment execution enters the main function and the process is ready to do something useful. This is strictly the user's perspective: it measures wall-clock time as a person would experience it. I will call this the Start-up time, and as you'll see throughout this article, it is the most difficult to gauge and probably the most important for the user experience.

The next batch of measurements captures memory usage and the Kernel and User times, which are processor times rather than wall-clock time like the Start-up time above. These two CPU times cover the full life of the process, not only the path to the main function. I kept the internal logic to a minimum, since I'm interested in the frameworks' overhead, not the applications'.

In this article, when I mention the OS APIs, I'm referring to Windows XP, and I'll use the term runtime loosely, even when speaking about native C++ code. There is no OS API to retrieve the Start-up time as I defined it above.

So I had to design my own method of calculating it for all frameworks. The difficulty is that the time has to be taken in two different processes: in the caller right before the process is created, and in the called process as soon as it enters the main function.

To get around this impediment I used the simplest inter-process communication available: pass the creation time as a command-line argument when creating the process, and return the time difference as the exit code. The steps are:

1. In the caller process (BenchMarkStartup.exe), get the current UTC system time.
2. Start the test process, passing this time as an argument.
3. In the child process, get the current UTC system time on the first line of the main function.
4. In the same process, calculate and adjust the time difference.
5. Return the time difference as the exit code.
6. Capture the exit code in the caller process (BenchMarkStartup.exe) as the time difference.

You will notice that throughout the article I give two values for the times, cold and warm. A cold start is measured on a machine that has just been rebooted, with as few applications as possible started before it. A warm start is measured for subsequent starts of the same application. Cold starts tend to be slower, mostly because of the IO associated with them. Warm starts take advantage of the OS prefetch functionality and tend to be faster, especially in start-up time.
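The handshake in the steps above can be sketched in portable C++. This is a minimal illustration under stated assumptions, not the code from the download: `makeCommandLine` and `startupMsFromArgument` are hypothetical names, and timestamps are assumed to be 64-bit counts of 100-nanosecond ticks, the unit a Windows FILETIME uses.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <string>

// Hypothetical caller-side helper: append the launch timestamp
// (100-ns ticks) to the child's command line, quoted as in the article.
std::string makeCommandLine(const std::string& program, std::int64_t launchTicks)
{
    return program + " \"" + std::to_string(launchTicks) + "\"";
}

// Hypothetical child-side helper: parse the argument back into ticks and
// return the elapsed milliseconds up to nowTicks, or -1 if the value is
// negative or would not fit in a 32-bit exit code.
int startupMsFromArgument(const std::string& arg, std::int64_t nowTicks)
{
    const std::int64_t launchTicks = std::stoll(arg);
    const std::int64_t diffMs = (nowTicks - launchTicks) / 10000; // 10,000 ticks per ms
    if (diffMs < 0 || diffMs > std::numeric_limits<int>::max())
        return -1;
    return static_cast<int>(diffMs);
}
```

Returning -1 for an out-of-range value mirrors the article's convention of a negative exit code signalling failure.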

For the managed runtimes, the JIT compiler will consume some extra CPU time and memory when compared with the native code.

The results can be skewed by applications or services that are loaded or run just before these tests, especially for the cold start-up time. If other applications or services have already loaded libraries your application also uses, then the I/O operations will be reduced and the start-up time improved.

On the Java side, some applications are designed to preload and cache DLLs, so when running the tests you should also disable the Java Quick Starter or JInitiator.

I think caching and prefetching are better left to the OS, rather than wasting resources needlessly.

The C++ test code for the called process is actually the most straightforward.

When it gets a command-line parameter, it converts it to an __int64, which is just another way to represent a FILETIME.

A FILETIME counts 100-nanosecond intervals since January 1, 1601 (UTC), so we subtract the launch time from the current time and return the difference in milliseconds. A 32-bit exit code is more than enough for the time values we are dealing with, so there is no risk of overflow.

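```cpp
#include <windows.h>
#include <tchar.h>
#include <stdlib.h>

int _tmain(int argc, _TCHAR* argv[])
{
    // Take the timestamp first, before any other work in main.
    FILETIME ft;
    GetSystemTimeAsFileTime(&ft);

    if (argc < 2)
    {
        // No launch time: idle so the caller can sample memory usage.
        ::Sleep(5000);
        return -1;
    }

    FILETIME userTime, kernelTime, createTime, exitTime;
    if (GetProcessTimes(GetCurrentProcess(), &createTime, &exitTime, &kernelTime, &userTime))
    {
        // Copy the two halves into a ULARGE_INTEGER instead of casting the
        // FILETIME pointer, which may not be 8-byte aligned.
        ULARGE_INTEGER mainEntryTime;
        mainEntryTime.LowPart = ft.dwLowDateTime;
        mainEntryTime.HighPart = ft.dwHighDateTime;

        __int64 launchTime = _tstoi64(argv[1]);
        // 100-ns units -> milliseconds.
        __int64 diff = ((__int64)mainEntryTime.QuadPart - launchTime) / 10000;
        return (int)diff;
    }
    else
        return -1;
}
```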
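Microsoft's FILETIME documentation advises against casting a FILETIME pointer to `__int64*` or `ULARGE_INTEGER*`, because that can cause alignment faults on 64-bit Windows; copying the two 32-bit halves is the safe route. The sketch below shows that combination with a portable stand-in struct (the hypothetical `FileTimeParts`, mirroring FILETIME's two fields) and checks the overflow claim: `INT_MAX` milliseconds is roughly 24.8 days.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical portable stand-in for the Win32 FILETIME layout.
struct FileTimeParts
{
    std::uint32_t dwLowDateTime;
    std::uint32_t dwHighDateTime;
};

// Combine the halves arithmetically rather than reinterpret_cast-ing
// the struct to a 64-bit pointer.
std::int64_t toTicks(const FileTimeParts& ft)
{
    return static_cast<std::int64_t>(
        (static_cast<std::uint64_t>(ft.dwHighDateTime) << 32) | ft.dwLowDateTime);
}

// 100-ns ticks to whole milliseconds (truncating).
std::int64_t ticksToMs(std::int64_t ticks)
{
    return ticks / 10000;
}

// Longest interval a signed 32-bit exit code can carry, in days.
constexpr double maxExitCodeDays = 2147483647.0 / (1000.0 * 60 * 60 * 24);
```

Start-up times are in the hundreds or thousands of milliseconds, so the 24.8-day ceiling leaves plenty of headroom.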

Below is the code that creates the test process, feeds it the launch time, retrieves the Start-up time from the exit code and displays other process attributes. The first run counts as a cold start and subsequent runs are considered warm. Helper functions then compute statistics over the warm samples and display the results.

```cpp
// Defined elsewhere in the download: the sample arrays strtupTimes, kernelTimes,
// preCreationTimes and userTimes, the COUNT constant, and the helpers
// PrepareColdStart, currentWindowsFileTime, GetErrorDescription, CalculateStatistics.
#include <windows.h>
#include <tchar.h>
#include <shlwapi.h>   // StrStrI
#include <stdio.h>

// Copy a FILETIME into a 64-bit value without pointer casts (alignment-safe).
static __int64 FileTimeToInt64(const FILETIME& ft)
{
    ULARGE_INTEGER li;
    li.LowPart = ft.dwLowDateTime;
    li.HighPart = ft.dwHighDateTime;
    return (__int64)li.QuadPart;
}

DWORD BenchMarkTimes(LPCTSTR szcProg)
{
    ZeroMemory(strtupTimes, sizeof(strtupTimes));
    ZeroMemory(kernelTimes, sizeof(kernelTimes));
    ZeroMemory(preCreationTimes, sizeof(preCreationTimes));
    ZeroMemory(userTimes, sizeof(userTimes));

    BOOL res = TRUE;
    TCHAR cmd[100];
    int i, result = 0;
    DWORD dwerr = 0;

    PrepareColdStart();
    ::Sleep(3000);

    // Run 0 is the cold start; runs 1..COUNT are the warm samples.
    for (i = 0; i <= COUNT && res; i++)
    {
        STARTUPINFO si;
        PROCESS_INFORMATION pi;
        ZeroMemory(&si, sizeof(si));
        si.cb = sizeof(si);
        ZeroMemory(&pi, sizeof(pi));
        ::SetLastError(0);

        // Take the launch timestamp right before building the command line.
        __int64 wft = 0;
        if (StrStrI(szcProg, _T("java")) && !StrStrI(szcProg, _T(".exe")))
        {
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("java -client -cp .\\.. %s \"%I64d\""), szcProg, wft);
        }
        else if (StrStrI(szcProg, _T("mono")) && StrStrI(szcProg, _T(".exe")))
        {
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("mono %s \"%I64d\""), szcProg, wft);
        }
        else
        {
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("%s \"%I64d\""), szcProg, wft);
        }

        if (!CreateProcess(NULL, cmd, NULL, NULL, FALSE, 0, NULL, NULL, &si, &pi))
        {
            dwerr = GetLastError();
            _tprintf(_T("CreateProcess failed for '%s' with error code %lu:%s.\n"), szcProg, dwerr, GetErrorDescription(dwerr));
            return dwerr;
        }

        dwerr = WaitForSingleObject(pi.hProcess, 20000);
        if (dwerr != WAIT_OBJECT_0)
        {
            // WAIT_TIMEOUT sets no last error, so report the wait result too.
            _tprintf(_T("WaitForSingleObject failed for '%s' with result %lu, last error %lu\n"), szcProg, dwerr, GetLastError());
            CloseHandle(pi.hProcess);
            CloseHandle(pi.hThread);
            break;
        }

        // The child returns its Start-up time (ms) as the exit code.
        res = GetExitCodeProcess(pi.hProcess, (LPDWORD)&result);

        FILETIME CreationTime, ExitTime, KernelTime, UserTime;
        if (GetProcessTimes(pi.hProcess, &CreationTime, &ExitTime, &KernelTime, &UserTime))
        {
            // 100-ns units -> milliseconds, kept 64-bit until stored.
            __int64 kernelMs = FileTimeToInt64(KernelTime) / 10000;
            __int64 userMs = FileTimeToInt64(UserTime) / 10000;
            __int64 preCreationMs = (FileTimeToInt64(CreationTime) - wft) / 10000;

            if (i == 0)
            {
                _tprintf(_T("cold start times:\nStartupTime %d ms"), result);
                _tprintf(_T(", PreCreationTime: %I64d ms"), preCreationMs);
                _tprintf(_T(", KernelTime: %I64d ms"), kernelMs);
                _tprintf(_T(", UserTime: %I64d ms\n"), userMs);
                _tprintf(_T("Waiting for statistics for %d warm samples"), COUNT);
            }
            else
            {
                _tprintf(_T("."));
                kernelTimes[i - 1] = (int)kernelMs;
                preCreationTimes[i - 1] = (int)preCreationMs;
                userTimes[i - 1] = (int)userMs;
                strtupTimes[i - 1] = result;
            }
        }
        else
        {
            printf("GetProcessTimes failed for %p", pi.hProcess);
        }

        CloseHandle(pi.hProcess);
        CloseHandle(pi.hThread);

        if (result < 0)
        {
            _tprintf(_T("%s failed with code %d: %s\n"), cmd, result, GetErrorDescription(result));
            return result;
        }
        ::Sleep(1000);
    }

    // Leaving the loop early means one of the runs failed.
    if (i <= COUNT)
    {
        _tprintf(_T("\nThere was an error while running '%s', last error code = %lu\n"), cmd, GetLastError());
        return result;
    }

    double median, mean, stddev;
    if (CalculateStatistics(&strtupTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("\nStartupTime: mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&preCreationTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("PreCreation: mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&kernelTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("KernelTime : mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&userTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("UserTime : mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);

    return GetLastError();
}
```

Notice that the command line for starting the Mono or Java applications differs from the one for .Net or native code. Also, I have not used Performance Monitor counters anywhere.
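For illustration, the command-line selection in `BenchMarkTimes` can be reproduced with `std::string`, which also sidesteps the fixed 100-character `cmd` buffer. This is a sketch, not the download's code: `containsNoCase` is a hypothetical portable stand-in for `StrStrI`, and the program names in the checks are made up.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstdint>
#include <string>

// Lower-case a copy of the string (ASCII-oriented, like the test names).
static std::string toLower(std::string s)
{
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Case-insensitive substring test, a portable stand-in for StrStrI.
bool containsNoCase(const std::string& haystack, const std::string& needle)
{
    return toLower(haystack).find(toLower(needle)) != std::string::npos;
}

// Same branching as BenchMarkTimes: Java classes go through the java
// launcher, Mono assemblies through mono, everything else runs directly.
std::string buildBenchmarkCommand(const std::string& prog, std::int64_t launchTicks)
{
    const std::string stamp = " \"" + std::to_string(launchTicks) + "\"";
    if (containsNoCase(prog, "java") && !containsNoCase(prog, ".exe"))
        return "java -client -cp .\\.. " + prog + stamp;
    if (containsNoCase(prog, "mono") && containsNoCase(prog, ".exe"))
        return "mono " + prog + stamp;
    return prog + stamp;
}
```

The ordering matters: a Java class name has no `.exe` suffix, which is what distinguishes it from a native or .Net executable.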

The caller process retrieves the kernel time and the user time of the child process. If you wonder why I have not used the creation time provided by GetProcessTimes as the starting point of the Start-up time, there are two reasons.

The first is that it would require DllImport for .Net and Mono, and JNI for Java, which would have made the test applications much 'fatter'.

The second reason is that the creation time is not really the time when the CreateProcess API was invoked. I saw a difference of 0 to 10 ms between the two when running from the internal hard drive, but it can grow to hundreds of milliseconds when run from slower media such as a network drive, or even seconds (no typo here folks, it's seconds) when run from a floppy drive. That difference shows up in my tests as 'PreCreation time' and is only for your information.

I can only speculate that the OS does not account for the time needed to read the files from the media when it creates a new process; the gap always shows up in cold starts and hardly ever in warm starts.
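The PreCreation value is the difference of two 100-ns timestamps, so it is safest to keep it signed and 64-bit until it is printed; a clock adjustment between the two readings then shows up as a small negative number instead of wrapping into a huge unsigned one. Passing a 64-bit value to a `%u` conversion is a format mismatch, so the sketch below (hypothetical helper names, portable C++) prints it with a 64-bit specifier.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Gap between the caller's timestamp taken just before CreateProcess and
// the creation time the OS reports for the child, both in 100-ns ticks.
// Signed on purpose so an unexpected negative gap stays visible.
std::int64_t preCreationMs(std::int64_t creationTicks, std::int64_t launchTicks)
{
    return (creationTicks - launchTicks) / 10000;
}

// Format with a 64-bit conversion specifier.
std::string formatPreCreation(std::int64_t ms)
{
    char buf[64];
    std::snprintf(buf, sizeof buf, "PreCreationTime: %lld ms", static_cast<long long>(ms));
    return buf;
}
```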

Calculating the start-up time in the called .Net code is a little different from C++, but the FromFileTimeUtc helper method of DateTime makes it almost as easy.

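```csharp
// Members of the console test program's class; requires "using System;".
private const long TicksPerMiliSecond = TimeSpan.TicksPerSecond / 1000;

static int Main(string[] args)
{
    // Take the timestamp first, before any other work.
    DateTime mainEntryTime = DateTime.UtcNow;
    int result = 0;
    if (args.Length > 0)
    {
        // The argument is a FILETIME: 100-ns ticks since 1601-01-01 (UTC).
        DateTime launchTime = System.DateTime.FromFileTimeUtc(long.Parse(args[0]));
        long diff = (mainEntryTime.Ticks - launchTime.Ticks) / TicksPerMiliSecond;
        result = (int)diff;
    }
    else
    {
        // No argument: memory-measurement run, so collect garbage and idle.
        System.GC.Collect(2, GCCollectionMode.Forced);
        System.GC.WaitForPendingFinalizers();
        System.Threading.Thread.Sleep(5000);
    }
    return result;
}
```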
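`DateTime.FromFileTimeUtc` works because `DateTime.Ticks` and FILETIME use the same 100-ns unit and differ only by a fixed offset: DateTime counts from 0001-01-01 and FILETIME from 1601-01-01. The C++ sketch below derives that offset from the proleptic Gregorian calendar and repeats the C# millisecond calculation; all names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::int64_t kTicksPerMs = 10000;                          // TimeSpan.TicksPerSecond / 1000
constexpr std::int64_t kTicksPerDay = 24LL * 60 * 60 * 1000 * kTicksPerMs;

// Days in the 1600 Gregorian years from 0001-01-01 to 1601-01-01.
constexpr std::int64_t kDaysTo1601 = 1600 * 365 + 1600 / 4 - 1600 / 100 + 1600 / 400;

// Offset added to a FILETIME to obtain DateTime ticks.
constexpr std::int64_t kFileTimeToDateTimeOffset = kDaysTo1601 * kTicksPerDay;

// Mirrors the C# Main: convert the launch FILETIME to DateTime ticks,
// subtract it from "now" (already in DateTime ticks), convert to ms.
std::int64_t elapsedMs(std::int64_t launchFileTime, std::int64_t nowDateTimeTicks)
{
    const std::int64_t launchDateTimeTicks = launchFileTime + kFileTimeToDateTimeOffset;
    return (nowDateTimeTicks - launchDateTimeTicks) / kTicksPerMs;
}
```

Because the offset cancels out in the subtraction, the C# code could equally subtract the raw FILETIME from `DateTime.UtcNow.ToFileTimeUtc()`; using `FromFileTimeUtc` just keeps both values as `DateTime`.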

To use Mono, download it and add C:\PROGRA~1\MONO-2~1.4\bin\ (or the path for your version) to the PATH environment variable. During installation you can deselect the XSP and GTK# components, since this test does not need them. To compile, the easiest way is to use the buildMono.bat included in the download.

I've included C# Visual Studio projects for versions 1.1, 2.0, 3.5 and 4.0. If you only want to run the binaries, you have to download and install the respective runtimes. To build them you'll need Visual Studio 2003 and 2010, or the specific SDKs if you love the command prompt. To force loading of the targeted runtime version, I've created config files for all .Net executables.

They look like the one below, apart from the version number:

```xml
<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <startup>
    <supportedRuntime version="v1.1.4322" />
  </startup>
</configuration>
```

To build the Java test, download the Java SDK, install it and set the PATH correctly. The path to javac.exe depends on the JDK version; it should look like this:

```bat
set path=C:\Program Files\Java\jdk1.6.0_16\bin;%path%
```

Again, the download includes a buildJava.bat file that can help. The Java test code below has to adjust for the Java epoch (1970-01-01 UTC) versus the FILETIME epoch (1601-01-01 UTC):

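```java
package JavaPerf;

public class StartupTest
{
    public static void main(String[] args)
    {
        // Take the timestamp first, before any other work.
        long mainEntryTime = System.currentTimeMillis();
        int result = 0;
        if (args.length > 0)
        {
            // FILETIME (100-ns ticks since 1601) -> milliseconds since 1970.
            long fileTimeUtc = Long.parseLong(args[0]);
            long launchTime = fileTimeUtc - 116444736000000000L;
            launchTime /= 10000;
            result = (int)(mainEntryTime - launchTime);
        }
        else
        {
            // No argument: memory-measurement run, so collect garbage and idle.
            try
            {
                System.gc();
                System.runFinalization();
                Thread.sleep(5000);
            }
            catch (Exception e)
            {
                e.printStackTrace();
            }
        }
        java.lang.System.exit(result);
    }
}
```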

Java offers only millisecond resolution for wall-clock time (System.nanoTime measures elapsed time but is not tied to any epoch), which is why I had to use milliseconds rather than the finer units offered by the other runtimes. However, one millisecond is good enough for these tests.
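The constant 116444736000000000 in the Java code is the FILETIME value of the Java/Unix epoch, and it can be derived rather than memorized: 369 years separate 1601 and 1970, 89 of them leap years (1700, 1800 and 1900 are not), giving 134,774 days of 86,400 seconds at 10^7 ticks per second. A C++ check of that arithmetic, with hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

// Number of Gregorian leap years in 1..y.
constexpr std::int64_t leapYearsThrough(std::int64_t y)
{
    return y / 4 - y / 100 + y / 400;
}

// Days from 1601-01-01 to 1970-01-01.
constexpr std::int64_t kDays1601To1970 =
    (1970 - 1601) * 365 + leapYearsThrough(1969) - leapYearsThrough(1600);

// Same interval in 100-ns FILETIME ticks.
constexpr std::int64_t kEpochDeltaTicks = kDays1601To1970 * 86400LL * 10000000LL;

// Same conversion as the Java main(): FILETIME ticks -> Unix milliseconds.
std::int64_t fileTimeToUnixMs(std::int64_t fileTimeTicks)
{
    return (fileTimeTicks - kEpochDeltaTicks) / 10000;
}
```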

You might think that memory is not a real factor when it comes to performance, but that is a superficial assessment. When a system runs low on available physical memory, paging activity increases, which leads to more disk IO and kernel CPU activity and adversely affects all running applications.

Memory usage of a Windows process has many aspects, and I'll limit my measurements to Private Bytes and the Minimum and Peak Working Set. You can get more information on this complex topic from the Windows experts.

If you wondered why the called process waits 5 seconds when there is no argument, here is the answer: after waiting 2 seconds, the caller measures the memory usage with the code below:

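```cpp
#include <windows.h>
#include <psapi.h>   // EmptyWorkingSet, GetProcessMemoryInfo (link with psapi.lib)
#include <stdio.h>

BOOL PrintMemoryInfo(const PROCESS_INFORMATION& pi)
{
    // Wait 2 seconds; if the child has already exited there is nothing to measure.
    if (WAIT_TIMEOUT != WaitForSingleObject(pi.hProcess, 2000))
        return FALSE;

    // Trim the working set so WorkingSetSize below reflects the minimum.
    if (!EmptyWorkingSet(pi.hProcess))
        printf("EmptyWorkingSet failed for %lx\n", pi.dwProcessId);

    BOOL bres = TRUE;
    PROCESS_MEMORY_COUNTERS_EX pmc;
    if (GetProcessMemoryInfo(pi.hProcess, (PROCESS_MEMORY_COUNTERS*)&pmc, sizeof(pmc)))
    {
        // SIZE_T values printed with the MSVC %Iu specifier.
        printf("PrivateUsage: %Iu KB,", pmc.PrivateUsage / 1024);
        printf(" Minimum WorkingSet: %Iu KB,", pmc.WorkingSetSize / 1024);
        printf(" PeakWorkingSet: %Iu KB\n", pmc.PeakWorkingSetSize / 1024);
    }
    else
    {
        printf("GetProcessMemoryInfo failed for %p", pi.hProcess);
        bres = FALSE;
    }
    return bres;
}
```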

Minimum Working Set is the value I measured after the called process's working set was trimmed with the EmptyWorkingSet API.

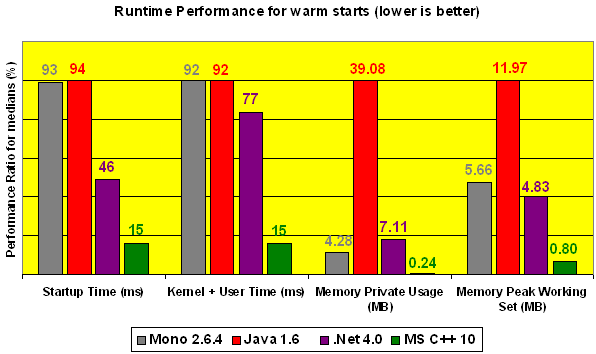

There are a lot of results to digest from these tests. I've picked what I consider the most relevant measurements for this topic and placed a summary for warm starts in the graph at the top of this page. More results are available if you run the tests in debug mode.

For warm starts I ran the tests 9 times, but the cold start is a one-time shot, as described above. I've included only the median values, and the Kernel and User times are lumped together as CPU time. The results below were generated on a Windows XP machine with a Pentium 4 CPU running at 3 GHz and 2 GB of RAM.

| Runtime | Cold Startup Time (ms) | Cold CPU Time (ms) | Warm Startup Time (ms) | Warm CPU Time (ms) | Private Usage (KB) | Min Working Set (KB) | Peak Working Set (KB) |
|---|---|---|---|---|---|---|---|
| .Net 1.1 | 1844 | 156 | 93 | 93 | 3244 | 104 | 4712 |
| .Net 2.0 | 1609 | 93 | 78 | 93 | 6648 | 104 | 5008 |
| .Net 3.5 | 1766 | 125 | 93 | 77 | 6640 | 104 | 4976 |
| .Net 4.0 | 1595 | 77 | 46 | 77 | 7112 | 104 | 4832 |
| Java 1.6 | 1407 | 108 | 94 | 92 | 39084 | 120 | 11976 |
| Mono 2.6.4 | 1484 | 156 | 93 | 92 | 4288 | 100 | 5668 |
| CPP code | 140 | 30 | 15 | 15 | 244 | 40 | 808 |
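The warm columns above are medians over the samples. `CalculateStatistics` itself is not listed in the article, so the following is only a hypothetical stand-in with a standard-library interface; it assumes the population form of the standard deviation (dividing by n), which may differ from the helper in the download.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Hypothetical stand-in for CalculateStatistics: median, mean and
// population standard deviation of the samples. Returns false if empty.
bool calculateStatistics(std::vector<int> samples, double& median, double& mean, double& stddev)
{
    if (samples.empty())
        return false;
    std::sort(samples.begin(), samples.end());
    const std::size_t n = samples.size();
    // Odd count: middle element; even count: average of the two middle ones.
    median = (n % 2 == 1) ? samples[n / 2]
                          : (samples[n / 2 - 1] + samples[n / 2]) / 2.0;
    mean = std::accumulate(samples.begin(), samples.end(), 0.0) / n;
    double sumSq = 0.0;
    for (int s : samples)
        sumSq += (s - mean) * (s - mean);
    stddev = std::sqrt(sumSq / n);
    return true;
}
```

The median is the more robust summary here: a single warm run disturbed by background activity moves the mean but barely affects the median.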

You might think that .Net 4.0 and .Net 2.0 are breaking the laws of physics by having a Start-up time lower than the CPU time, but remember that the CPU time covers the full life of the process, while the Start-up time only covers entering the main function. It merely tells us that these frameworks include some optimizations to improve start-up speed.

C++ is the clear winner in all categories, with some caveats: the caller process 'helps' the C++ test by preloading some common DLLs, and there are no calls to a garbage collector.

I don't have historical data for all runtimes, but looking only at .net, the trend below shows that newer versions tend to be faster at the expense of using more memory.

Since all runtimes except native code use intermediate code, the natural next step is to generate native images where possible and evaluate the performance again.

Java does not have an easy-to-use tool for this purpose. GCJ is a half step in this direction, but it is not part of the official runtime, so I'll ignore it. Mono has a similar feature known as Ahead-of-Time (AOT) compilation; unfortunately, it fails on Windows.

.Net has supported native code generation from the beginning and ngen.exe is part of the runtime distribution.

For your convenience, I've added make_nativeimages.bat to the download; it generates native images for the assemblies used in the tests.

| Runtime | Cold Startup Time (ms) | Cold CPU Time (ms) | Warm Startup Time (ms) | Warm CPU Time (ms) | Private Usage (KB) | Min Working Set (KB) | Peak Working Set (KB) |
|---|---|---|---|---|---|---|---|
| .Net 1.1 | 2110 | 140 | 109 | 109 | 3164 | 108 | 4364 |
| .Net 2.0 | 1750 | 109 | 78 | 77 | 6592 | 108 | 4796 |
| .Net 3.5 | 1859 | 140 | 78 | 77 | 6588 | 108 | 4800 |
| .Net 4.0 | 1688 | 108 | 62 | 61 | 7044 | 104 | 4184 |

Again we seem to see a paradox: the start-up time appears higher for natively compiled assemblies, which would defeat the purpose of native code generation. On reflection it makes sense, because the IO needed to load the native image can take longer than JIT-compiling the tiny amount of code in these test programs.

You can run a particular test by passing the testing executable as a parameter to the BenchMarkStartup.exe. For Java the package name will have to match the directory structure, so the argument JavaPerf.StartupTest will require a ..\JavaPerf folder.
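The mapping from the argument to the folder layout is mechanical: each dot in the package-qualified name becomes a directory separator, resolved against the `.\..` class path used on the command line. A hypothetical helper showing the rule:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: relative path of the .class file the JVM looks for,
// given a package-qualified class name (e.g. JavaPerf.StartupTest).
std::string classFileFor(const std::string& qualifiedName, char separator = '\\')
{
    std::string path = qualifiedName;
    for (char& c : path)
        if (c == '.')
            c = separator;
    return path + ".class";
}
```

With `-cp .\..`, `JavaPerf.StartupTest` therefore resolves to `..\JavaPerf\StartupTest.class`.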

I've included runall.bat in the download, but that batch will fail to capture realistic cold start-up times.

For real cold-start results, reboot manually, or (as I did) schedule benchmark.bat from the release folder to run every 20 to 30 minutes overnight and collect the results from the text log files. That runs real tests for all runtimes by rebooting your machine.

Recent computers often throttle the CPU frequency to save energy, which can alter these tests. So before running the tests, in addition to the things mentioned previously, set the Power Scheme to 'High Performance' to get consistent results. With so many software and hardware factors, expect quite different results and even changes in the runtime ranking.

So far the applications I've tested were attached to the console and had no UI component. Adding a UI gives new meaning to the start-up time: it becomes the interval between the moment the process is started and the moment the user interface is displayed and ready for use. To keep it simple, the user interface consists only of a window with some text in the center.

It might seem that the start-up time could be measured from the caller alone, by timing the interval between creating the process and returning from WaitForInputIdle. Unfortunately, in the tests described below that holds only for MFC and for WinForms in .Net 1.1. The other tested frameworks return from WaitForInputIdle even before the UI is displayed.

So I went back to the method used above for getting the start-up time.

Another hurdle was that, unlike the other frameworks, Java has no way to signal input idle immediately after the application starts, short of a third-party solution. Hence I replaced the idle signal with the last known event fired when the UI is shown. The procedure for calculating the start-up time becomes:

1. In the caller process (BenchMarkStartupUI.exe), get the current UTC system time.
2. Start the test process, passing this time as an argument.
3. In the child process, get the current UTC system time in the last event fired when the UI is displayed.
4. In the same process, calculate and adjust the time difference.
5. Return the time difference as the exit code.
6. Capture the exit code in the caller process (BenchMarkStartupUI.exe) as the time difference.
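In the UI variant the timestamp is taken in an event handler rather than on the first line of `main`, and some events, such as WinForms activation, can fire more than once during a run. One possible guard, sketched in portable C++ with a hypothetical `StartupRecorder` class, keeps only the first qualifying event so that a later re-activation cannot overwrite the measured value. This is a design suggestion, not how the article's test programs are written.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical recorder: computes the start-up time from the first
// "window shown" event only; later calls are ignored.
class StartupRecorder
{
public:
    explicit StartupRecorder(std::int64_t launchTicks) : launchTicks_(launchTicks) {}

    // Call from the UI event with the current time in 100-ns ticks.
    void onWindowShown(std::int64_t nowTicks)
    {
        if (!recorded_)
        {
            resultMs_ = static_cast<int>((nowTicks - launchTicks_) / 10000);
            recorded_ = true;
        }
    }

    // Value to return as the process exit code.
    int exitCode() const { return resultMs_; }

private:
    std::int64_t launchTicks_;
    int resultMs_ = 0;
    bool recorded_ = false;
};
```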

The caller code that calculates the start-up time is similar to the code for the non-UI tests, except that this time we can control the window state and the process lifetime through plain Windows messages, and we'll use javaw.exe instead of java.exe.

```cpp
// Defined elsewhere in the download: the sample arrays, COUNT, PrepareColdStart,
// currentWindowsFileTime, CloseUIApp, GetErrorDescription and CalculateStatistics.
#include <windows.h>
#include <tchar.h>
#include <shlwapi.h>   // StrStrI
#include <stdio.h>

// Copy a FILETIME into a 64-bit value without pointer casts (alignment-safe).
static __int64 FileTimeToInt64(const FILETIME& ft)
{
    ULARGE_INTEGER li;
    li.LowPart = ft.dwLowDateTime;
    li.HighPart = ft.dwHighDateTime;
    return (__int64)li.QuadPart;
}

DWORD BenchMarkTimes(LPCTSTR szcProg)
{
    ZeroMemory(strtupTimes, sizeof(strtupTimes));
    ZeroMemory(kernelTimes, sizeof(kernelTimes));
    ZeroMemory(preCreationTimes, sizeof(preCreationTimes));
    ZeroMemory(userTimes, sizeof(userTimes));

    BOOL res = TRUE;
    TCHAR cmd[100];
    int i, result = 0;
    DWORD dwerr = 0;

    PrepareColdStart();
    ::Sleep(3000);

    // Run 0 is the cold start; runs 1..COUNT are the warm samples.
    for (i = 0; i <= COUNT && res; i++)
    {
        STARTUPINFO si;
        PROCESS_INFORMATION pi;
        ZeroMemory(&si, sizeof(si));
        si.cb = sizeof(si);
        ZeroMemory(&pi, sizeof(pi));
        ::SetLastError(0);

        __int64 wft = 0;
        if (StrStrI(szcProg, _T("java")) && !StrStrI(szcProg, _T(".exe")))
        {
            // javaw.exe: the Java launcher without a console window.
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("javaw.exe -client -cp .\\.. %s \"%I64d\""), szcProg, wft);
        }
        else if (StrStrI(szcProg, _T("mono")) && StrStrI(szcProg, _T(".exe")))
        {
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("mono %s \"%I64d\""), szcProg, wft);
        }
        else
        {
            wft = currentWindowsFileTime();
            _stprintf_s(cmd, 100, _T("%s \"%I64d\""), szcProg, wft);
        }

        if (!CreateProcess(NULL, cmd, NULL, NULL, FALSE, 0, NULL, NULL, &si, &pi))
        {
            dwerr = GetLastError();
            _tprintf(_T("CreateProcess failed for '%s' with error code %lu:%s.\n"), szcProg, dwerr, GetErrorDescription(dwerr));
            return dwerr;
        }

        // Close the test application's window so the process can exit.
        if (!CloseUIApp(pi))
        {
            CloseHandle(pi.hProcess);
            CloseHandle(pi.hThread);
            break;
        }

        dwerr = WaitForSingleObject(pi.hProcess, 20000);
        if (dwerr != WAIT_OBJECT_0)
        {
            // WAIT_TIMEOUT sets no last error, so report the wait result too.
            _tprintf(_T("WaitForSingleObject failed for '%s' with result %lu, last error %lu\n"), szcProg, dwerr, GetLastError());
            CloseHandle(pi.hProcess);
            CloseHandle(pi.hThread);
            break;
        }

        // The child returns its Start-up time (ms) as the exit code.
        res = GetExitCodeProcess(pi.hProcess, (LPDWORD)&result);

        FILETIME CreationTime, ExitTime, KernelTime, UserTime;
        if (GetProcessTimes(pi.hProcess, &CreationTime, &ExitTime, &KernelTime, &UserTime))
        {
            // 100-ns units -> milliseconds, kept 64-bit until stored.
            __int64 kernelMs = FileTimeToInt64(KernelTime) / 10000;
            __int64 userMs = FileTimeToInt64(UserTime) / 10000;
            __int64 preCreationMs = (FileTimeToInt64(CreationTime) - wft) / 10000;

            if (i == 0)
            {
                _tprintf(_T("cold start times:\nStartupTime %d ms"), result);
                _tprintf(_T(", PreCreationTime: %I64d ms"), preCreationMs);
                _tprintf(_T(", KernelTime: %I64d ms"), kernelMs);
                _tprintf(_T(", UserTime: %I64d ms\n"), userMs);
                _tprintf(_T("Waiting for statistics for %d warm samples"), COUNT);
            }
            else
            {
                _tprintf(_T("."));
                kernelTimes[i - 1] = (int)kernelMs;
                preCreationTimes[i - 1] = (int)preCreationMs;
                userTimes[i - 1] = (int)userMs;
                strtupTimes[i - 1] = result;
            }
        }
        else
        {
            printf("GetProcessTimes failed for %p", pi.hProcess);
        }

        CloseHandle(pi.hProcess);
        CloseHandle(pi.hThread);

        if (result < 0)
        {
            _tprintf(_T("%s failed with code %d: %s\n"), cmd, result, GetErrorDescription(result));
            return result;
        }
        ::Sleep(1000);
    }

    // Leaving the loop early means one of the runs failed.
    if (i <= COUNT)
    {
        result = GetLastError();
        _tprintf(_T("\nThere was an error while running '%s', last error code = %d: %s\n"), cmd, result, GetErrorDescription(result));
        return result;
    }

    double median, mean, stddev;
    if (CalculateStatistics(&strtupTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("\nStartupTime: mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&preCreationTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("PreCreation: mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&kernelTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("KernelTime : mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);
    if (CalculateStatistics(&userTimes[0], COUNT, median, mean, stddev))
        _tprintf(_T("UserTime : mean = %6.2f ms, median = %3.0f ms, standard deviation = %6.2f ms\n"), mean, median, stddev);

    return GetLastError();
}
```

Measuring memory usage is also similar to the previous approach, except that it is done in both the default and the minimized window states. Helper functions like MinimizeUIApp and CloseUIApp are outside the scope of this article, so I won't describe them.

```cpp
// MinimizeUIApp and GetErrorDescription are helpers from the download (not shown).
#include <windows.h>
#include <tchar.h>
#include <shlwapi.h>   // StrStrI
#include <psapi.h>     // GetProcessMemoryInfo, EmptyWorkingSet (link with psapi.lib)
#include <stdio.h>

DWORD BenchMarkMemory(LPCTSTR szcProg)
{
    TCHAR cmd[100];
    STARTUPINFO si;
    PROCESS_INFORMATION pi;
    ZeroMemory(&si, sizeof(si));
    si.cb = sizeof(si);
    ZeroMemory(&pi, sizeof(pi));
    DWORD dwerr;

    // No launch-time argument: the test app runs in memory-measurement mode.
    if (StrStrI(szcProg, _T("java")) && !StrStrI(szcProg, _T(".exe")))
        _stprintf_s(cmd, 100, _T("javaw -client -cp .\\.. %s"), szcProg);
    else if (StrStrI(szcProg, _T("mono")) && StrStrI(szcProg, _T(".exe")))
        _stprintf_s(cmd, 100, _T("mono %s"), szcProg);
    else
        _stprintf_s(cmd, 100, _T("%s"), szcProg);

    ::SetLastError(0);
    if (!CreateProcess(NULL, cmd, NULL, NULL, FALSE, 0, NULL, NULL, &si, &pi))
    {
        dwerr = GetLastError();
        _tprintf(_T("CreateProcess failed for '%s' with error code %lu:%s.\n"), szcProg, dwerr, GetErrorDescription(dwerr));
        return dwerr;
    }

    // Let the window come up at its default size, then sample.
    ::Sleep(3000);
    PROCESS_MEMORY_COUNTERS_EX pmc;
    if (GetProcessMemoryInfo(pi.hProcess, (PROCESS_MEMORY_COUNTERS*)&pmc, sizeof(pmc)))
    {
        printf("Normal size->PrivateUsage: %Iu KB,", pmc.PrivateUsage / 1024);
        printf(" Current WorkingSet: %Iu KB\n", pmc.WorkingSetSize / 1024);
    }
    else
        printf("GetProcessMemoryInfo failed for %p", pi.hProcess);

    // Minimize the main window and sample again.
    HWND hwnd = MinimizeUIApp(pi);
    ::Sleep(2000);
    if (GetProcessMemoryInfo(pi.hProcess, (PROCESS_MEMORY_COUNTERS*)&pmc, sizeof(pmc)))
    {
        printf("Minimized-> PrivateUsage: %Iu KB,", pmc.PrivateUsage / 1024);
        printf(" Current WorkingSet: %Iu KB\n", pmc.WorkingSetSize / 1024);
    }
    else
        printf("GetProcessMemoryInfo failed for %p", pi.hProcess);

    // Trim the working set to get the minimum, and read the peak.
    if (!EmptyWorkingSet(pi.hProcess))
        printf("EmptyWorkingSet failed for %lx\n", pi.dwProcessId);
    else
    {
        ZeroMemory(&pmc, sizeof(pmc));
        if (GetProcessMemoryInfo(pi.hProcess, (PROCESS_MEMORY_COUNTERS*)&pmc, sizeof(pmc)))
        {
            printf("Minimum WorkingSet: %Iu KB,", pmc.WorkingSetSize / 1024);
            printf(" PeakWorkingSet: %Iu KB\n", pmc.PeakWorkingSetSize / 1024);
        }
        else
            printf("GetProcessMemoryInfo failed for %p", pi.hProcess);
    }

    // Close the window and wait for the process to exit.
    if (hwnd)
        ::SendMessage(hwnd, WM_CLOSE, 0, 0);
    dwerr = WaitForSingleObject(pi.hProcess, 20000);
    if (dwerr != WAIT_OBJECT_0)
        _tprintf(_T("WaitForSingleObject failed for '%s' with result %lu, last error %lu\n"), szcProg, dwerr, GetLastError());

    CloseHandle(pi.hProcess);
    CloseHandle(pi.hThread);
    return GetLastError();
}
```

You can still create native windows directly with the Win32 APIs if you want, but chances are you'll use an existing library instead. Several C++ UI frameworks are in use for developing UI applications, such as ATL, WTL, MFC and others. The performance test uses the MFC library, linked dynamically, since it is the most popular UI framework for C++ development. The code snippets below show how the start-up time is calculated during dialog initialization and returned from the application.

```cpp
void CCPPMFCPerfDlg::OnShowWindow(BOOL bShow, UINT nStatus)
{
    CDialog::OnShowWindow(bShow, nStatus);

    // Timestamp taken when the dialog is being shown.
    FILETIME ft;
    GetSystemTimeAsFileTime(&ft);

    if (__argc < 2)
    {
        theApp.m_result = 0;
        return;
    }

    FILETIME userTime, kernelTime, createTime, exitTime;
    if (GetProcessTimes(GetCurrentProcess(), &createTime, &exitTime, &kernelTime, &userTime))
    {
        // Copy into a ULARGE_INTEGER instead of casting the FILETIME pointer.
        ULARGE_INTEGER showTime;
        showTime.LowPart = ft.dwLowDateTime;
        showTime.HighPart = ft.dwHighDateTime;

        __int64 launchTime = _tstoi64(__targv[1]);
        // 100-ns units -> milliseconds.
        __int64 diff = ((__int64)showTime.QuadPart - launchTime) / 10000;
        theApp.m_result = (int)diff;
    }
    else
        theApp.m_result = 0;
}

int CCPPMFCPerfApp::ExitInstance()
{
    int result = CWinApp::ExitInstance();
    if (!result)
        return 0;
    else
        return m_result;
}
```

.Net has offered Windows Forms as a means to write UI applications from the beginning. The test code below is similar in all versions of .Net.

```csharp
using System;
using System.Windows.Forms;

public partial class Form1 : Form
{
    private const long TicksPerMiliSecond = TimeSpan.TicksPerSecond / 1000;
    int _result = 0;

    public Form1()
    {
        InitializeComponent();
    }

    [STAThread]
    static int Main()
    {
        Application.EnableVisualStyles();
        // Available from .Net 2.0 onwards.
        Application.SetCompatibleTextRenderingDefault(false);
        Form1 f = new Form1();
        Application.Run(f);
        // The start-up time recorded in OnActivated becomes the exit code.
        return f._result;
    }

    protected override void OnActivated(EventArgs e)
    {
        base.OnActivated(e);
        // Element 0 is the executable, so Length == 1 means no launch time.
        if (Environment.GetCommandLineArgs().Length == 1)
        {
            System.GC.Collect(2, GCCollectionMode.Forced);
            System.GC.WaitForPendingFinalizers();
        }
        else if (this.WindowState == FormWindowState.Normal)
        {
            DateTime mainEntryTime = DateTime.UtcNow;
            string launchtime = Environment.GetCommandLineArgs()[1];
            DateTime launchTime = System.DateTime.FromFileTimeUtc(long.Parse(launchtime));
            long diff = (mainEntryTime.Ticks - launchTime.Ticks) / TicksPerMiliSecond;
            _result = (int)diff;
        }
    }
}
```

Microsoft's latest UI framework on top of .Net is WPF, which is meant to be more comprehensive than Windows Forms and geared toward the latest versions of Windows. The relevant code for getting the start-up time is shown below.

```csharp
using System;
using System.Windows;

public partial class Window1 : Window
{
    public Window1()
    {
        InitializeComponent();
    }

    private const long TicksPerMiliSecond = TimeSpan.TicksPerSecond / 1000;

    // Handler for the window's Loaded event.
    private void Window_Loaded(object sender, RoutedEventArgs e)
    {
        // Element 0 is the executable, so Length == 1 means no launch time.
        if (Environment.GetCommandLineArgs().Length == 1)
        {
            System.GC.Collect(2, GCCollectionMode.Forced);
            System.GC.WaitForPendingFinalizers();
        }
        else if (this.WindowState == WindowState.Normal)
        {
            DateTime mainEntryTime = DateTime.UtcNow;
            string launchtime = Environment.GetCommandLineArgs()[1];
            DateTime launchTime = System.DateTime.FromFileTimeUtc(long.Parse(launchtime));
            long diff = (mainEntryTime.Ticks - launchTime.Ticks) / TicksPerMiliSecond;
            (App.Current as App)._result = (int)diff;
        }
    }
}

public partial class App : Application
{
    internal int _result = 0;

    // Return the recorded start-up time as the process exit code.
    protected override void OnExit(ExitEventArgs e)
    {
        e.ApplicationExitCode = _result;
        base.OnExit(e);
    }
}
```

Java has two popular UI libraries, Swing and AWT. Since Swing is the more popular of the two, I've used it to build the UI test shown below.

import java.awt.AWTEvent;
import java.awt.BorderLayout;
import java.awt.Dimension;
import java.awt.event.WindowEvent;
import javax.swing.JFrame;
import javax.swing.JLabel;
import javax.swing.SwingConstants;

public class SwingTest extends JFrame
{
    static int result = 0; // start-up time in ms, returned as the exit code
    JLabel textLabel;
    String[] args;

    SwingTest(String[] args)
    {
        this.args = args;
    }

    public static void main(String[] args)
    {
        try
        {
            SwingTest frame = new SwingTest(args);
            frame.setTitle("Simple GUI");
            // Deliver window and focus events to processWindowEvent() even without listeners.
            frame.enableEvents(AWTEvent.WINDOW_EVENT_MASK | AWTEvent.FOCUS_EVENT_MASK);
            frame.setDefaultCloseOperation(JFrame.DISPOSE_ON_CLOSE);
            frame.setResizable(true);
            frame.textLabel = new JLabel("Java Swing UI Test", SwingConstants.CENTER);
            frame.textLabel.setAutoscrolls(true);
            frame.textLabel.setPreferredSize(new Dimension(300, 100));
            frame.getContentPane().add(frame.textLabel, BorderLayout.CENTER);
            frame.setLocation(100, 100);
            frame.pack();
            frame.setVisible(true);
        }
        catch (Exception ex)
        { System.exit(-1); }
    }

    @Override
    protected void processWindowEvent(WindowEvent e)
    {
        super.processWindowEvent(e);
        switch (e.getID())
        {
        case WindowEvent.WINDOW_OPENED:
            long mainEntryTime = System.currentTimeMillis();
            if (args.length == 0)
            {
                // No launch time was passed: run GC and finalization, pause 2 s, measure nothing.
                try
                {
                    System.gc();
                    System.runFinalization();
                    Thread.sleep(2000);
                }
                catch (Exception ex)
                {
                    ex.printStackTrace();
                }
            }
            else
            {
                // args[0] is the launch time as a FILETIME (100 ns ticks since 1601-01-01 UTC).
                long fileTimeUtc = Long.parseLong(args[0]);
                // Shift to the Unix epoch (1970-01-01), then convert 100 ns ticks to ms.
                long launchTime = fileTimeUtc - 116444736000000000L;
                launchTime /= 10000;
                result = (int)(mainEntryTime - launchTime);
            }
            break;
        case WindowEvent.WINDOW_CLOSED:
            // Report the measured start-up time through the process exit code.
            System.exit(result);
            break;
        }
    }
}
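Both the .Net tests and the Swing test receive the launch time as a Windows FILETIME value, the number of 100 ns intervals since 1 January 1601 UTC, which is the format DateTime.FromFileTimeUtc expects. Java has no built-in conversion for it, so SwingTest subtracts 116444736000000000L (the number of 100 ns intervals between 1601 and the Unix epoch) and divides by 10000. The sketch below pulls that arithmetic into a standalone helper and cross-checks the constant against java.time; the class and method names are my own and are not part of the downloadable tests.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;

final class FileTimeConversion {
    // A FILETIME tick is 100 ns, so there are 10,000 ticks per millisecond.
    static final long TICKS_PER_MILLISECOND = 10_000L;

    // 100 ns intervals between the FILETIME epoch (1601) and the Unix epoch (1970);
    // the same constant SwingTest uses inline.
    static final long EPOCH_DIFFERENCE_TICKS = 116444736000000000L;

    // FILETIME (as passed on the command line) -> milliseconds since 1970-01-01 UTC.
    static long fileTimeToUnixMillis(long fileTimeUtc) {
        return (fileTimeUtc - EPOCH_DIFFERENCE_TICKS) / TICKS_PER_MILLISECOND;
    }

    // Milliseconds since 1970-01-01 UTC -> FILETIME, e.g. for a launcher written in Java.
    static long unixMillisToFileTime(long unixMillis) {
        return unixMillis * TICKS_PER_MILLISECOND + EPOCH_DIFFERENCE_TICKS;
    }

    // Derives the epoch difference from java.time instead of hard-coding it.
    static long epochDifferenceFromJavaTime() {
        long seconds = ChronoUnit.SECONDS.between(
                Instant.parse("1601-01-01T00:00:00Z"), Instant.EPOCH);
        return seconds * 10_000_000L; // 10^7 ticks of 100 ns per second
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        long fileTime = unixMillisToFileTime(now);
        System.out.println("FILETIME " + fileTime + " -> " + fileTimeToUnixMillis(fileTime) + " ms");
        System.out.println("constant matches java.time: "
                + (epochDifferenceFromJavaTime() == EPOCH_DIFFERENCE_TICKS));
    }
}
```

A launcher would use unixMillisToFileTime to build the argument, and the test side would use fileTimeToUnixMillis, which is what SwingTest does inline.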

Mono has its own implementation of WinForms that is compatible with Microsoft's Windows Forms. The C# code is almost the same as the C# code shown above; only the build command is different:

set path=C:\PROGRA~1\MONO-2~1.4\bin\;%path%

gmcs -target:exe -o+ -nostdlib- -r:System.Windows.Forms.dll -r:System.Drawing.dll MonoUIPerf.cs -out:Mono26UIPerf.exe

These commands are also available in buildMonoUI.bat for your convenience.

On Windows XP, the working set of a UI application changes depending on whether its window is minimized or shown at its default size, so I've captured both values in addition to the measurements from the previous tests. I've kept only the 'private bytes' for the default-sized window, because that value is always very close to, or the same as, the one for the minimized window.

| UI/Runtime version | Cold start-up time (ms) | Cold CPU time (ms) | Warm start-up time (ms) | Warm CPU time (ms) | Current working set, minimized (KB) | Current working set, default size (KB) | Private usage (KB) | Min working set (KB) | Peak working set (KB) |
| Forms 1.1 | 4723 | 296 | 205 | 233 | 584 | 8692 | 5732 | 144 | 8776 |
| Forms 2.0 | 3182 | 202 | 154 | 171 | 604 | 8552 | 10252 | 128 | 8660 |
| Forms 3.5 | 3662 | 171 | 154 | 155 | 600 | 8604 | 10252 | 128 | 8712 |
| WPF 3.5 | 7033 | 749 | 787 | 733 | 1824 | 14160 | 15128 | 184 | 14312 |
| Forms 4.0 | 3217 | 265 | 136 | 139 | 628 | 9224 | 10956 | 140 | 9272 |
| WPF 4.0 | 6828 | 733 | 718 | 702 | 2056 | 16456 | 16072 | 212 | 16620 |
| Swing Java 1.6 | 1437 | 546 | 548 | 546 | 3664 | 21764 | 43708 | 164 | 21780 |
| Forms Mono 2.6.4 | 4090 | 968 | 804 | 984 | 652 | 12116 | 6260 | 132 | 15052 |
| MFC CPP | 875 | 108 | 78 | 61 | 592 | 3620 | 804 | 84 | 3636 |

The poor results for WPF compared to Windows Forms might be partly because WPF was back-ported from Windows Vista to Windows XP and its feature set is larger.

Since a picture is worth a thousand words, the graph below shows the start-up time, the cumulative CPU time, the working set at default window size and the private bytes for the latest version of each technology.

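As in the previous non-UI tests, C++/MFC appears to perform a lot better than the other frameworks: it has the lowest start-up times, CPU times, default-size working set and private usage in the table.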
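To put "a lot better" into numbers, the sketch below divides the warm start-up time and the private usage of the latest version of each technology by the MFC figures. The values are copied from the table above; the choice of these two metrics and the class name RelativeToNative are mine.

```java
final class RelativeToNative {
    // One row of the UI table: warm start-up time (ms) and private usage (KB).
    static final class Row {
        final String name;
        final double warmStartupMs;
        final double privateKb;

        Row(String name, double warmStartupMs, double privateKb) {
            this.name = name;
            this.warmStartupMs = warmStartupMs;
            this.privateKb = privateKb;
        }
    }

    // Latest version of each technology, copied from the first UI table.
    static final Row[] ROWS = {
        new Row("Forms 4.0", 136, 10956),
        new Row("WPF 4.0", 718, 16072),
        new Row("Swing Java 1.6", 548, 43708),
        new Row("Forms Mono 2.6.4", 804, 6260),
        new Row("MFC CPP", 78, 804),
    };

    static Row find(String name) {
        for (Row row : ROWS) {
            if (row.name.equals(name)) {
                return row;
            }
        }
        throw new IllegalArgumentException("unknown runtime: " + name);
    }

    // How many times longer than native MFC the warm start-up takes.
    static double warmStartupRatio(String name) {
        return find(name).warmStartupMs / find("MFC CPP").warmStartupMs;
    }

    // How many times more private memory than native MFC is used.
    static double privateUsageRatio(String name) {
        return find(name).privateKb / find("MFC CPP").privateKb;
    }

    public static void main(String[] args) {
        for (Row row : ROWS) {
            System.out.printf("%-18s warm start-up x%.1f, private usage x%.1f%n",
                    row.name, warmStartupRatio(row.name), privateUsageRatio(row.name));
        }
    }
}
```

With these figures, Forms 4.0 takes about 1.7 times as long as MFC for a warm start and Swing about 7 times, while Swing's private usage is more than 50 times that of MFC.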

You can use ngen.exe, or the make_nativeimages.bat script, to generate native images for the assemblies used for testing. The table below shows the results for the .Net UI tests when running with native images.

| UI/Runtime version | Cold start-up time (ms) | Cold CPU time (ms) | Warm start-up time (ms) | Warm CPU time (ms) | Current working set, minimized (KB) | Current working set, default size (KB) | Private usage (KB) | Min working set (KB) | Peak working set (KB) |
| Forms 1.1 | 6046 | 280 | 237 | 218 | 568 | 8116 | 5640 | 144 | 8204 |
| Forms 2.0 | 5316 | 140 | 217 | 186 | 580 | 7928 | 10144 | 580 | 8036 |
| Forms 3.5 | 2866 | 202 | 158 | 140 | 588 | 7992 | 10140 | 132 | 8092 |
| Forms 4.0 | 4149 | 156 | 217 | 187 | 632 | 8416 | 10892 | 144 | 8476 |
| WPF 3.5 | 7844 | 671 | 889 | 708 | 1840 | 13644 | 15052 | 1840 | 13800 |
| WPF 4.0 | 6520 | 718 | 718 | 718 | 2032 | 15748 | 15976 | 216 | 15948 |


Again, it appears that using native images for very small UI test applications yields no performance gain, and the results are sometimes even worse than without native code generation.
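The claim can be checked directly against the two tables. The sketch below computes the percentage change in warm start-up time when native images are used; I picked the warm figures because, as noted earlier, cold start-up is the hardest time to gauge. The numbers are copied from the tables, and the class name NativeImageDelta is hypothetical.

```java
final class NativeImageDelta {
    // Warm start-up times (ms): first UI table (JIT) vs. native images table.
    static final String[] NAMES = {"Forms 1.1", "Forms 2.0", "Forms 3.5", "Forms 4.0", "WPF 3.5", "WPF 4.0"};
    static final long[] JIT_WARM_MS = {205, 154, 154, 136, 787, 718};
    static final long[] NGEN_WARM_MS = {237, 217, 158, 217, 889, 718};

    // Percentage change for row i; a positive value means native images were slower.
    static double percentChange(int i) {
        return 100.0 * (NGEN_WARM_MS[i] - JIT_WARM_MS[i]) / JIT_WARM_MS[i];
    }

    public static void main(String[] args) {
        for (int i = 0; i < NAMES.length; i++) {
            System.out.printf("%-10s %4d ms -> %4d ms (%+.1f%%)%n",
                    NAMES[i], JIT_WARM_MS[i], NGEN_WARM_MS[i], percentChange(i));
        }
    }
}
```

With these figures none of the six warm start-up times improves: Forms 4.0 goes from 136 ms to 217 ms, roughly 60% slower, and WPF 4.0 is unchanged at 718 ms.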

You can run the included RunAllUI.bat from the download, but that batch file will not capture realistic cold start-up times. Because these are UI applications, you can't use the same scheduler trick as before without logging in after a reboot, unless you set up your own automatic reboot settings.
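If you want to script the runs yourself, the protocol the UI tests expect is simple: take the current time as a FILETIME, pass it as the test's first argument, and read the start-up time in milliseconds back from the process exit code. The following Java sketch of such a launcher is an illustration only, not the launcher used by RunAllUI.bat; StartupLauncher and its methods are hypothetical names.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class StartupLauncher {
    // 100 ns intervals between 1601-01-01 and 1970-01-01 (the constant used in SwingTest).
    static final long EPOCH_DIFFERENCE_TICKS = 116444736000000000L;

    // Wall-clock time as a decimal FILETIME string, ready to pass on the command line.
    static String toFileTimeArgument(long unixMillis) {
        return Long.toString(unixMillis * 10_000L + EPOCH_DIFFERENCE_TICKS);
    }

    // Appends the launch timestamp as the last command-line argument,
    // which the test programs read as their first argument.
    static List<String> buildCommand(List<String> testCommand, long unixMillis) {
        List<String> command = new ArrayList<>(testCommand);
        command.add(toFileTimeArgument(unixMillis));
        return command;
    }

    // Starts the test and returns its exit code, which the UI tests set to the
    // measured start-up time in ms. waitFor() returns only after the test window
    // has been closed, because the tests exit on close.
    static int measureStartupMillis(List<String> testCommand)
            throws IOException, InterruptedException {
        List<String> command = buildCommand(testCommand, System.currentTimeMillis());
        Process process = new ProcessBuilder(command).inheritIO().start();
        return process.waitFor();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length == 0) {
            System.err.println("usage: java StartupLauncher <test command> [args...]");
            return;
        }
        int startupMs = measureStartupMillis(Arrays.asList(args));
        System.out.println("start-up time: " + startupMs + " ms");
    }
}
```

Pass the path to one of the test executables (or, for Swing, java SwingTest) as the arguments. Taking the timestamp before ProcessBuilder.start() means process creation is included in the measured time.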

Hopefully, this article will help you cut through the buzzwords, give you an idea of the overhead you incur when you trade performance for the convenience of a managed runtime over native code, and help you decide which software technology to use for development.

Feel free to create your own tests modelled after mine, or to test on different platforms. I've included solutions for Visual Studio 2003, 2008 and 2010, along with source code and build files for Java and Mono. The compile options have been set to optimize for speed where available.

Version 1.0 released in July 2010.

Version 1.1 released in September 2010, adding UI benchmarks.